As customers normally housed in our Boston datacenter know, we had to fail over from our East Coast datacenter to our West Coast one. (Why? Short version – a subcontractor of the colocation provider moved the wrong rack of servers.)

We were confident in our failover: we have identical servers idle in the other datacenter, just waiting to take over, and we’d tested the process, so we were not expecting any issues. The failover went fine, and all customers were running happily within an hour of the event. But there were issues. Some customers complained that the UI reported intermittent “Access Denied” errors.

LogicMonitor wasn’t triggering any alerts, but we were getting emails about exceptions from within the Java application servers.

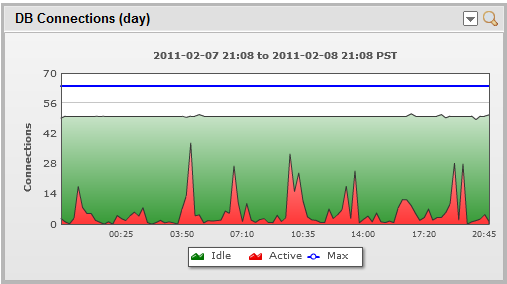

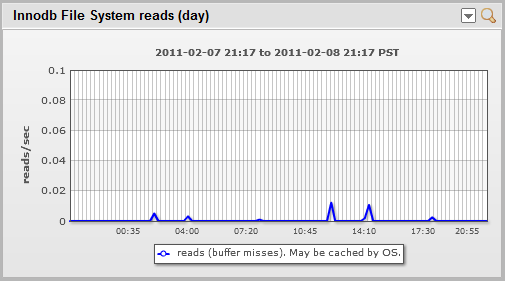

And a quick bit of investigation showed definite spikes in Tomcat monitoring of database connections:

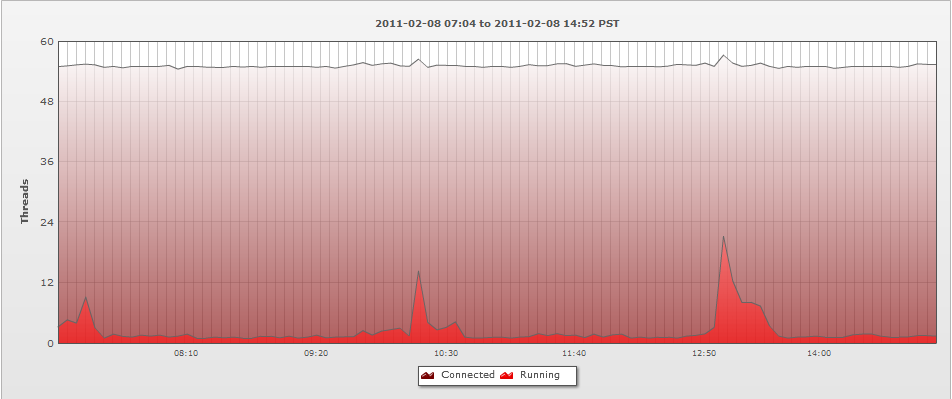

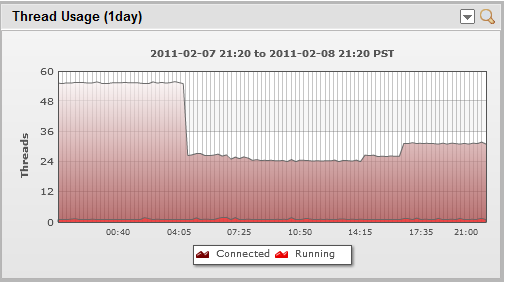

These were mirrored by spikes in MySQL monitoring of thread usage.

So, clearly something was slowing down the DB, causing brief spikes in threads until we hit the limit (the default of 100 in our case; we usually run so low that we haven’t needed to increase it). The clue lay in the periodicity of the spikes – they coincided with the batch jobs that replicate data between servers.
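To make that kind of periodicity check concrete, here is a minimal sketch of how spike timestamps could be compared against batch job start times. It is not how we did it (we eyeballed the graphs); the function name, the 60-second tolerance, and the sample timestamps are all hypothetical.

```python
from bisect import bisect_left


def fraction_near_jobs(spike_times, job_times, tolerance_s=60):
    """Return the fraction of spikes within tolerance_s seconds of a job start.

    Both inputs are lists of timestamps in seconds. The names and the
    default tolerance are illustrative assumptions, not real settings.
    """
    jobs = sorted(job_times)
    if not spike_times or not jobs:
        return 0.0
    hits = 0
    for t in spike_times:
        i = bisect_left(jobs, t)
        # The nearest job start is either just before or just after the spike.
        neighbours = jobs[max(i - 1, 0): i + 1]
        if any(abs(t - j) <= tolerance_s for j in neighbours):
            hits += 1
    return hits / len(spike_times)


# Hypothetical data: jobs every 15 minutes, four observed spikes.
job_starts = [0, 900, 1800, 2700]
spikes = [20, 910, 1795, 2200]
ratio = fraction_near_jobs(spikes, job_starts)
```

A ratio close to 1.0 suggests the spikes track the job schedule; a ratio near the share you would expect by chance suggests looking elsewhere.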

The servers in LA that were taking over for Boston were also the servers that receive a replica of the active LA servers. We didn’t think this would matter at all – the replication went to SAS drives, while the customer data storage was on SSD (solid state disk) drives – and the MySQL instances have enough memory that InnoDB virtually never has to hit the disk.

However, clearly it did matter. The correlation with the periodic jobs was too close. So, we moved the MySQL databases onto the SSDs too – and the issue went away.

So the lesson?

Nothing can test whether systems will work under production load except…production load. But when you have an issue, you need good monitoring – even if it doesn’t trigger an alert, the graphs will help you quickly identify the issue. And, of course, we tuned our monitoring to alert us to this situation next time, in keeping with the practice that no issue can be considered closed if monitoring will not alert on its recurrence.
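As a rough illustration of the kind of check such an alert might perform, the sketch below classifies connection usage relative to the configured limit. It assumes the limit in question is MySQL’s `max_connections` and that current usage comes from the `Threads_connected` status variable. The function name and the 70%/90% thresholds are hypothetical, and this is not LogicMonitor’s API or our actual alert configuration.

```python
def connection_alert(threads_connected, max_connections, warn=0.7, crit=0.9):
    """Classify connection usage as 'ok', 'warn', or 'critical'.

    threads_connected: current value of MySQL's Threads_connected.
    max_connections:   configured connection limit.
    warn / crit:       illustrative utilisation thresholds (assumptions).
    """
    if max_connections <= 0:
        raise ValueError("max_connections must be positive")
    usage = threads_connected / max_connections
    if usage >= crit:
        return "critical"
    if usage >= warn:
        return "warn"
    return "ok"


# With a limit of 100, a brief spike to 95 threads would be flagged
# before the limit is actually reached.
status = connection_alert(95, 100)
```

Alerting on a fraction of the limit rather than a fixed count means the check stays meaningful if the limit is later raised.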