What the Hell is a Kibibyte?


In addition to being somewhat penurious, our founder Steve has a well-known penchant for being pedantic. If he bursts into the development area and starts grilling people, even seasoned engineers are frequently befuddled the first time they are asked: “Kilobytes, or kibibytes?”

Even if you haven’t heard the term, you’re probably at least familiar with the concept. A “kibibyte” is equal to 1024, or 2^10, bytes. Simple enough, but isn’t a “kilobyte” also 1024 bytes? Well, sometimes it is. As defined by the International System of Units (SI), the prefix “kilo” means 1000, or 10^3. Most storage manufacturers measure and label capacity in base 10 (1 kilobyte = 1000 bytes; 1 megabyte = 1000 kilobytes; 1 gigabyte = 1000 megabytes; 1 terabyte = 1000 gigabytes). RAM vendors and most operating systems, however, use base 2 (1 kilobyte = 1024 bytes; 1 megabyte = 1024 kilobytes; 1 gigabyte = 1024 megabytes; 1 terabyte = 1024 gigabytes). (A notable exception is macOS, which has used base 10 since OS X 10.6.) So to know exactly what is meant by “gigabyte”, you need to know the context in which the word is being used. If you are talking about raw hard drive capacity, a gigabyte is 1000000000 bytes. If you are talking about the file system on top of that hard drive, as most operating systems report it, a gigabyte is 1073741824 bytes.
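To make the two conventions concrete, here is a minimal Python sketch. The `bytes_in` helper and `PREFIXES` list are hypothetical names introduced for illustration; the helper simply raises 1000 or 1024 to the power implied by the prefix.

```python
# Hypothetical helper for illustration: how many bytes one "<prefix>byte"
# holds under the base-10 (storage vendor) or base-2 (RAM / most OS) convention.
PREFIXES = ["kilo", "mega", "giga", "tera"]


def bytes_in(prefix: str, binary: bool) -> int:
    """Return the byte count of one kilo/mega/giga/terabyte.

    binary=False -> powers of 1000 (SI, drive packaging)
    binary=True  -> powers of 1024 (RAM, most operating systems)
    """
    power = PREFIXES.index(prefix) + 1  # kilo=1, mega=2, giga=3, tera=4
    base = 1024 if binary else 1000
    return base ** power


if __name__ == "__main__":
    print(bytes_in("giga", binary=False))  # 1000000000 (raw drive capacity)
    print(bytes_in("giga", binary=True))   # 1073741824 (file system as most OSes report it)
```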

Confused yet? This is why the “kibibyte” is important: “kilo” (and “giga”, etc.) is ambiguous when it’s used to mean both 1000 and 1024, so in 1998 the IEC established a new set of binary prefixes to curtail the confusion. Besides “kibi” for “kilo”, the new prefixes include “mebi”, “gibi”, “tebi” and “pebi” to replace “mega”, “giga”, “tera” and “peta”, respectively. (There are more prefixes for larger quantities, but if you really think you need them, you can look them up yourself.)
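The prefix pairs can be laid out side by side. This sketch uses a hypothetical `IEC_FOR_SI` mapping and `prefix_table` function, and covers only the five prefixes named above: each SI prefix is a power of 1000, and its IEC counterpart is the matching power of 1024.

```python
# Hypothetical mapping from each SI prefix to its IEC binary counterpart.
IEC_FOR_SI = {
    "kilo": ("kibi", "KiB"),
    "mega": ("mebi", "MiB"),
    "giga": ("gibi", "GiB"),
    "tera": ("tebi", "TiB"),
    "peta": ("pebi", "PiB"),
}


def prefix_table():
    """Return (si_prefix, si_bytes, iec_prefix, iec_symbol, iec_bytes) rows."""
    rows = []
    # Dicts keep insertion order, so kilo is power 1, mega is power 2, etc.
    for power, (si, (iec, symbol)) in enumerate(IEC_FOR_SI.items(), start=1):
        rows.append((si, 1000 ** power, iec, symbol, 1024 ** power))
    return rows


if __name__ == "__main__":
    for si, si_bytes, iec, symbol, iec_bytes in prefix_table():
        print(f"1 {si}byte = {si_bytes:,} B    1 {iec}byte ({symbol}) = {iec_bytes:,} B")
```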

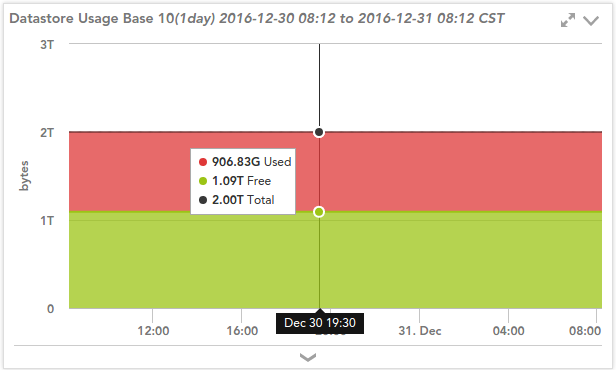

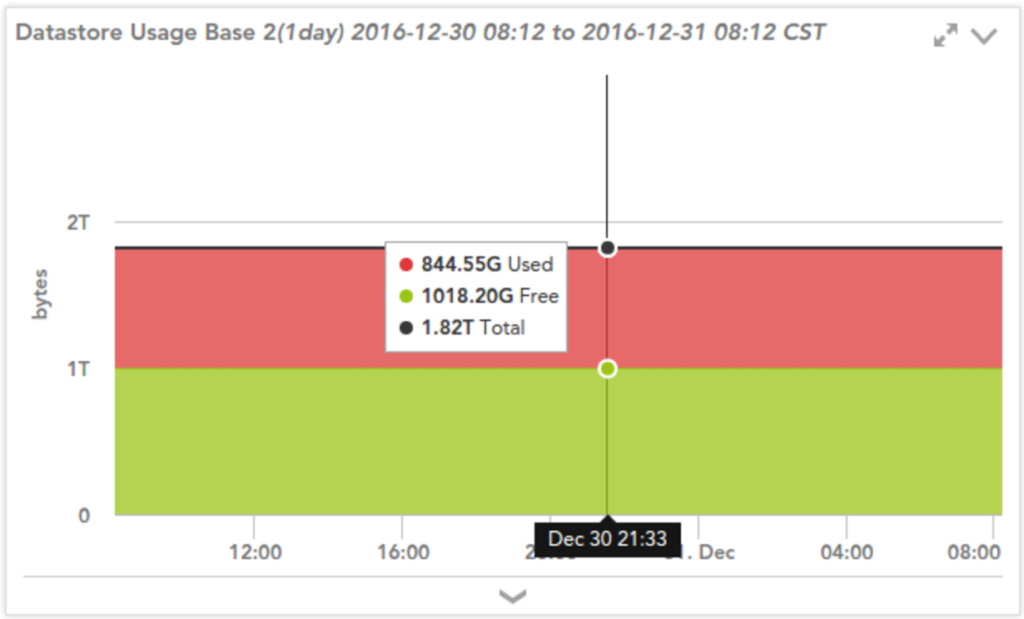

So, that’s all fine and dandy, but is it really that big of a deal? Does a difference of 24 bytes per kilobyte really matter? Let’s consider a drive advertised as having 2 terabytes (2 x 10^12 bytes) of capacity. I’ve got one formatted as a VMFS volume in my VMware lab. After formatting, VMware reports 2000112582656 bytes of capacity (curiously more than 2 terabytes, but I’m not going to complain about getting more bytes than advertised). Let’s see how this differs when displayed by LogicMonitor as terabytes (as the term is used by storage vendors) and tebibytes:

2000112582656 / 1000 / 1000 / 1000 / 1000 = 2.00 terabytes

2000112582656 / 1024 / 1024 / 1024 / 1024 = 1.82 tebibytes
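The same arithmetic in Python, as a quick sanity check. `VMFS_BYTES` is just the capacity VMware reported above; the two divisions mirror the two lines of arithmetic.

```python
# Capacity VMware reported for the drive sold as "2 terabytes".
VMFS_BYTES = 2000112582656

terabytes = VMFS_BYTES / 1000 ** 4  # base 10, as storage vendors count
tebibytes = VMFS_BYTES / 1024 ** 4  # base 2, as most operating systems count

print(f"{terabytes:.2f} terabytes")  # 2.00 terabytes
print(f"{tebibytes:.2f} tebibytes")  # 1.82 tebibytes
```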

The astute reader might observe that there is actually no difference between 2.000 terabytes and 1.819 tebibytes, since both describe the same number of bytes, and they’d be correct. However, unless you’re on a Mac, your OS and file manager are likely reporting capacity and file size in binary units such as tebibytes, and the notation won’t necessarily make that obvious. For example, the df command on Linux would report 1.8T, whereas Windows Explorer would report 1.82TB. Windows follows the JEDEC standard rather than the IEC one; JEDEC defines 1 kilobyte as 1024 bytes, so although the value is in tebibytes, it is still labelled “terabytes”. In this case, there is an apparent discrepancy of roughly 180 gigabytes (or gibibytes). If you plug in your shiny new 2 terabyte drive, you may be disappointed when your operating system reports it as just 1.8 terabytes. Same word, different meaning in different contexts.
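To show that the label, not the byte count, is what differs, this sketch formats the same value three ways. The `label` function and its convention names are hypothetical; “JEDEC” here just means binary arithmetic with an SI-style “TB” label, the way the paragraph above describes Windows Explorer.

```python
VMFS_BYTES = 2000112582656  # the lab drive's reported capacity


def label(n_bytes: int, convention: str) -> str:
    """Format n_bytes in tera-units under a labeling convention (hypothetical helper)."""
    if convention == "SI":     # base 10, labeled TB (drive packaging)
        return f"{n_bytes / 1000 ** 4:.2f} TB"
    if convention == "IEC":    # base 2, labeled TiB (unambiguous)
        return f"{n_bytes / 1024 ** 4:.2f} TiB"
    if convention == "JEDEC":  # base 2, but still labeled TB (Windows Explorer style)
        return f"{n_bytes / 1024 ** 4:.2f} TB"
    raise ValueError(f"unknown convention: {convention}")


if __name__ == "__main__":
    for convention in ("SI", "IEC", "JEDEC"):
        print(convention, label(VMFS_BYTES, convention))
```

The last two results carry the same number; only the IEC one tells you which kind of terabyte you are looking at.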

What does this all have to do with LogicMonitor? Well, if you define a graph in LogicMonitor, by default it will scale your graphed values in base 10 (1K = 1000). If you graph 2000112582656 bytes, your graph in LogicMonitor will display 2.00T when you mouse over the line. For storage that isn’t ideal, though, because you want your monitoring tool to display the value the same way your OS does, in base 2 (1.82T). Lucky for you (and us), that is an option! Every graph definition in LogicMonitor has a Scale by units of 1024 option; all you have to do is check the box and make sure your values are converted to bytes. LogicMonitor will handle the rest.
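Graphing tools typically pick the largest suffix that keeps the displayed number at or above 1. The sketch below illustrates that idea with a hypothetical `autoscale` function whose `base` parameter stands in for the checkbox. It is a conceptual illustration under that assumption, not LogicMonitor’s actual implementation, and it assumes the input is already in bytes, which is why the conversion step matters.

```python
SUFFIXES = ["", "K", "M", "G", "T", "P"]


def autoscale(value: float, base: int = 1000) -> str:
    """Scale value to the largest suffix that keeps it >= 1, e.g. "2.00T".

    Hypothetical sketch: base=1000 stands in for the default graph scaling,
    base=1024 for the "Scale by units of 1024" option.
    """
    i = 0
    # Divide by the base until the number drops below it (or suffixes run out).
    while abs(value) >= base and i < len(SUFFIXES) - 1:
        value /= base
        i += 1
    return f"{value:.2f}{SUFFIXES[i]}"


if __name__ == "__main__":
    print(autoscale(2000112582656))             # 2.00T
    print(autoscale(2000112582656, base=1024))  # 1.82T
```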

One final issue might be bothering you: why does LogicMonitor default to scaling by units of 1000 if most OSes scale storage units by 1024? The answer is that, despite what your storage administrator may tell you, monitoring is not just storage and server monitoring. Almost everything else (network bits per second, HTTP requests per second, latency, the depth of SQS queues, the number of objects in a cache) scales using base 10. Only for file systems and memory should you check the “Scale by units of 1024” option.
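A quick example of why base 10 is the right default for most metrics: network link speeds use SI prefixes, so a 1 Gbps link carries 1,000,000,000 bits per second, and scaling that by 1024 would understate it. The variable names here are just for illustration.

```python
# A "1 Gbps" link: network speeds use SI prefixes, so this is 10**9 bits/s.
LINK_BITS_PER_SEC = 1_000_000_000

decimal_view = f"{LINK_BITS_PER_SEC / 1000 ** 3:.2f}G"  # scaled by 1000
binary_view = f"{LINK_BITS_PER_SEC / 1024 ** 3:.2f}G"   # scaled by 1024

print(decimal_view)  # 1.00G, matches the link's rated speed
print(binary_view)   # 0.93G, misleadingly low for a bits-per-second metric
```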

If all operating systems adopted the standard of reporting in tebibytes and gibibytes, it would remove the confusion. But until then, remember that the prefix “giga” means one thing when talking about memory and file systems, and another thing when talking about everything else.

Think you got an odd look from your sysadmin colleagues when mentioning “kibibyte”? Try asking your network team about “gibibits”.

A new drive shows less capacity than advertised because manufacturers use base 10 (1 TB = 1,000,000,000,000 bytes), while most operating systems use base 2 (1 TiB = 1,099,511,627,776 bytes). That “missing” space is really a difference in measurement units, not lost capacity.
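The size of that apparent gap grows with each prefix, because the 1000-versus-1024 ratio compounds. This small sketch (`shortfall` is a hypothetical name) computes how much smaller one advertised unit looks when the OS counts the same bytes in binary units.

```python
# Fraction of an advertised (base-10) capacity that seems to "vanish" when the
# same bytes are reported in base-2 units. power=1 is kilo, 2 is mega, and so on.
def shortfall(power: int) -> float:
    return 1 - (1000 ** power) / (1024 ** power)


if __name__ == "__main__":
    for power, prefix in enumerate(["kilo", "mega", "giga", "tera", "peta"], start=1):
        print(f"{prefix}: {shortfall(power):.1%} apparently missing")
```

Running it shows the gap growing from about 2.3% at kilo to about 9.1% at tera, which matches the 2.00 versus 1.82 difference for the 2 terabyte drive.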

You can usually tell which convention applies from context. If you’re dealing with capacity marketing (e.g., drive specs), expect base 10. If it’s system-reported usage (e.g., your OS showing free space), expect base 2. Always be suspicious of any metric labeled only with “GB” or “TB” without clarification.

Mixing up the two conventions can lead to confusion, mismatched expectations, or even wild-goose-chase troubleshooting, especially in storage planning, capacity alerts, or SLA reporting. Misinterpreting units might make you think you’re losing storage or that your system is misreporting data.

JEDEC uses binary units (like 1024 bytes in a kilobyte) but labels them with the same prefixes SI uses for powers of 10 (KB, GB), which can mislead users. IEC assigns distinct names like “kibibyte” and “mebibyte” to remove this ambiguity. Understanding which standard your system follows helps you decode file sizes accurately.

© LogicMonitor 2026 | All rights reserved. | All trademarks, trade names, service marks, and logos referenced herein belong to their respective companies.