Since Docker popularized the concept in 2013, containers have become a mainstay of application development. Their speed and resource efficiency make them ideal for a DevOps environment, as they allow developers to run software faster and more reliably, no matter where it is deployed. With containerization, it’s possible to move and scale several applications across clouds and data centers.

However, this scalability can eventually become an operational challenge. In a scenario where an enterprise is tasked with efficiently running several containers carrying multiple applications, container orchestration becomes not just an option but a necessity.

What is container orchestration?

Container orchestration is the automated process of managing, scaling, and maintaining containerized applications. Containers are executable units of software containing application code, libraries, and dependencies so that the application can be run anywhere. Container orchestration tools automate the management of several tasks that software teams encounter in a container’s lifecycle, including the following:

- Deployment

- Scaling and load balancing/traffic routing

- Networking

- Insights

- Provisioning

- Configuration and scheduling

- Allocation of resources

- Moving to physical hosts

- Service discovery

- Health monitoring

- Cluster management

How does container orchestration work?

There are different methodologies that can be applied in container orchestration, depending on the tool of choice. Container orchestration tools typically communicate with YAML or JSON files that describe the configuration of the application. Configuration files guide the container orchestration tool on how and where to retrieve container images, create networking between containers, store log data, and mount storage volumes.

The container orchestration tool also schedules the deployment of containers into clusters and automatically determines the most appropriate host for the container. After a host has been determined, the container orchestration tool manages the container’s lifecycle using predefined specifications provided in the container’s definition file.
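
The scheduling step described above can be illustrated with a minimal sketch. The configuration, host names, resource figures, and the "tightest fit" placement policy are all assumptions made for illustration; real orchestrators use their own configuration formats and far richer scheduling rules.

```python
import json

# Hypothetical application configuration, in the JSON form an orchestrator might read.
CONFIG = json.loads("""
{
  "containers": [
    {"name": "web",   "image": "example/web:1.0",   "cpu": 2, "memory_mb": 1024},
    {"name": "cache", "image": "example/cache:1.0", "cpu": 1, "memory_mb": 2048},
    {"name": "batch", "image": "example/batch:1.0", "cpu": 4, "memory_mb": 4096}
  ]
}
""")

# Hypothetical hosts and their free capacity.
HOSTS = {
    "host-a": {"cpu": 4, "memory_mb": 4096},
    "host-b": {"cpu": 8, "memory_mb": 8192},
}


def schedule(containers, hosts):
    """Place each container on the host that fits it most tightly (least free CPU)."""
    free = {name: dict(capacity) for name, capacity in hosts.items()}
    placement = {}
    for container in containers:
        candidates = [
            host for host, capacity in free.items()
            if capacity["cpu"] >= container["cpu"]
            and capacity["memory_mb"] >= container["memory_mb"]
        ]
        if not candidates:
            placement[container["name"]] = None  # nothing fits in this sketch
            continue
        # Assumed policy: prefer the fitting host with the least free CPU.
        best = min(candidates, key=lambda host: (free[host]["cpu"], host))
        free[best]["cpu"] -= container["cpu"]
        free[best]["memory_mb"] -= container["memory_mb"]
        placement[container["name"]] = best
    return placement


placement = schedule(CONFIG["containers"], HOSTS)
print(placement)
```

With these sample numbers, the two small containers are packed onto the smaller host and the large one lands on the host that still has room for it.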

Container orchestration tools can be used in any environment that runs containers. Several platforms offer container orchestration support, including Kubernetes, Docker Swarm, Amazon Elastic Container Service (ECS), and Apache Mesos.

Challenges and best practices in container orchestration

While container orchestration offers transformative benefits, it’s not without its challenges. Understanding these potential pitfalls and adopting best practices can help organizations maximize the value of their orchestration efforts.

Common challenges

- Complexity in setup and operation

Setting up container orchestration can be daunting, especially for teams new to the technology. Configuring clusters, managing dependencies, and defining orchestration policies often require significant expertise. The steep learning curve, particularly with tools like Kubernetes, can slow adoption and hinder productivity.

- Security risks with containerized environments

Containerized applications introduce unique security challenges, including vulnerabilities in container images, misconfigurations in orchestration platforms, and potential network exposure. Orchestrators need robust security measures to safeguard data and applications.

- Vendor lock-in with proprietary solutions

Organizations relying on proprietary orchestration tools or cloud-specific platforms may find it difficult to migrate workloads or integrate with other environments. This can limit flexibility and increase long-term costs.

- Performance bottlenecks

Resource contention, inefficient scaling policies, and poorly optimized configurations can lead to performance issues, impacting application reliability and user experience.

Best practices for successful container orchestration

- Simplify and automate with CI/CD pipelines

Automating workflows using Continuous Integration and Continuous Deployment (CI/CD) pipelines reduces manual intervention and ensures consistency in deployments. Tools like Jenkins or GitLab can integrate seamlessly with container orchestration platforms to streamline operations.

- Proactively monitor and manage clusters

Monitoring tools like LogicMonitor can be used to track container performance, resource usage, and application health. Proactive alerts and dashboards help identify and resolve issues before they impact users, ensuring reliability and uptime.

- Prioritize security from the start

Implement security best practices such as:

  - Regularly scanning container images for vulnerabilities.

  - Enforcing Role-Based Access Control (RBAC) to restrict permissions.

  - Configuring network policies to isolate containers and protect sensitive data.

By building security into the orchestration process, organizations can mitigate risks and maintain compliance.

- Start small and scale gradually

Begin with a minimal setup to gain familiarity with orchestration tools. Focus on automating a few processes, then gradually expand the deployment to handle more complex workloads as the team’s expertise grows.

- Optimize resource allocation

Regularly review resource usage and scaling policies to ensure efficient operation. Use orchestration features like auto-scaling to dynamically adjust resources based on demand.

- Choose flexible, open solutions

To avoid vendor lock-in, prioritize tools like Kubernetes that support multi-cloud or hybrid deployments and integrate with a wide range of environments and services.

How does Kubernetes orchestration work?

Kubernetes is an open-source container orchestration platform that is considered the industry standard. The Google-backed solution allows developers and operators to deliver cloud services, either as Platform-as-a-Service (PaaS) or Infrastructure-as-a-Service (IaaS). It’s a highly declarative solution, allowing developers to declare the desired state of their container environment through YAML files. Kubernetes then establishes and maintains that desired state.

The following are the main architecture components of Kubernetes:

Nodes

A node is a worker machine in Kubernetes. It may be virtual or physical, depending on the cluster. Nodes receive and perform tasks assigned from the Master Node. They also contain the necessary services to run pods. Each node comprises a kubelet, a container runtime, and a kube-proxy.

Master Node

This node controls all the worker nodes and originates all assigned tasks. It does this through the control plane, which is the orchestration layer that exposes the API and interfaces to define, deploy, and manage the lifecycles of containers.

Cluster

A cluster represents the master node and multiple worker nodes. Clusters combine these machines into a single unit to which containerized applications are deployed. The workload is then distributed to various nodes, making adjustments as nodes are added or removed.

Pods

Pods are the smallest deployable computing units that can be created and managed in Kubernetes. Each Pod represents one or more containers packaged together and deployed to a node.

Deployments

A deployment provides declarative updates for Pods and ReplicaSets. It enables users to designate how many replicas of a Pod they want running simultaneously.
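
The declarative, desired-state idea behind Deployments can be sketched as a small reconciliation function: compare the number of replicas requested with what is actually running, and derive the actions needed to close the gap. This is a simplified illustration, not Kubernetes code; the function name, pod names, and action tuples are hypothetical.

```python
def reconcile(desired_replicas, running_pods):
    """Return the actions needed to move from the observed state to the desired state."""
    running = sorted(running_pods)
    diff = desired_replicas - len(running)
    if diff > 0:
        # Too few replicas: start the missing ones.
        return [("start", None)] * diff
    if diff < 0:
        # Too many replicas: stop the surplus (here, the last ones by name).
        return [("stop", name) for name in running[diff:]]
    return []  # Observed state already matches the desired state.


scale_up = reconcile(3, ["pod-a"])
scale_down = reconcile(1, ["pod-a", "pod-b", "pod-c"])
steady = reconcile(2, ["pod-a", "pod-b"])
print(scale_up, scale_down, steady)
```

An orchestrator runs this kind of comparison continuously, so a crashed Pod simply shows up as a gap that the next pass fills.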

How does Docker orchestration work?

Docker, also an open-source platform, provides a fully integrated container orchestration tool known as Docker Swarm. It can package and run applications as containers, locate container images from other hosts, and deploy containers. It is simpler and less extensible than Kubernetes, but Docker provides the option of integration with Kubernetes for organizations that want access to Kubernetes’ more extensive features.

The following are the main architectural components of Docker Swarm:

Swarm

A swarm is a cluster of Docker hosts that run in swarm mode and manage membership and delegation while also running swarm services.

Node

A node is a Docker Engine instance included in a swarm. It can be either a manager node or a worker node. The manager node dispatches units of work called tasks to worker nodes. It’s also responsible for all orchestration and container management tasks like maintaining cluster state and service scheduling. Worker nodes receive and execute tasks.

Services and Tasks

A service is the definition of a task that needs to be executed on the nodes. It defines which container images to use and which commands to execute inside running containers.

A task carries a container alongside the commands to run inside the container. Once a task is assigned to a node, it cannot move to another node.
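
The relationship between services, tasks, and nodes can be sketched as follows. This is a toy model rather than Docker's implementation: the class, the round-robin dispatch, and the node names are assumptions. It illustrates the rule that a task stays on its assigned node, so a failed node leads to new tasks being created instead of existing ones being moved.

```python
from dataclasses import dataclass
from itertools import count

_task_ids = count(1)


@dataclass(frozen=True)  # frozen: a task's node cannot be changed after assignment
class Task:
    task_id: int
    service: str
    node: str


def dispatch(service, replicas, workers):
    """Manager role: create one task per replica and assign each to a worker."""
    return [
        Task(next(_task_ids), service, workers[i % len(workers)])
        for i in range(replicas)
    ]


def handle_node_failure(tasks, failed_node, workers):
    """Tasks on a failed node are lost; new tasks are created on healthy nodes."""
    healthy = [w for w in workers if w != failed_node]
    survivors = [t for t in tasks if t.node != failed_node]
    lost = [t for t in tasks if t.node == failed_node]
    replacements = [
        Task(next(_task_ids), t.service, healthy[i % len(healthy)])
        for i, t in enumerate(lost)
    ]
    return survivors + replacements


workers = ["worker-1", "worker-2"]
tasks = dispatch("web", 3, workers)
recovered = handle_node_failure(tasks, "worker-1", workers)
print(recovered)
```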

How does container orchestration work with other platforms?

Although Docker and Kubernetes are leading the pack when it comes to container orchestration, other platforms are capitalizing on their open-source software to provide competition.

Red Hat OpenShift is an open-source enterprise-grade hybrid platform that provides Kubernetes functionalities to companies that need managed container orchestration. Its framework is built on a Linux OS that allows users to automate the lifecycles of their containers.

Google Kubernetes Engine is powered by Kubernetes and enables users to easily deploy, manage, and scale Docker containers on Google Cloud.

Other platforms like Apache Mesos and Amazon ECS have developed their own container tools that allow users to run containers while ensuring security and high scalability.

Tool comparisons: Finding the right fit for your needs

When choosing the best container orchestration tool for an organization, several factors have to be taken into consideration. These factors vary across different tools. With a tool like Mesos, for instance, the software team’s technical experience must be considered as it is more complex than simple tools like Swarm. Organizations also have to consider the number of containers to be deployed, as well as application development speed and scaling requirements.

With the right tools and proper resource management, container orchestration can be a valuable approach for organizations looking to achieve improved productivity and scalability.

Below is a comparison of the most popular tools in the container orchestration space, highlighting their key features and ideal use cases.

| Tool | Scalability | Learning Curve | Supported Environments | Key Integrations | Best For |
| --- | --- | --- | --- | --- | --- |
| Kubernetes | Excellent for large, complex setups | Steep, requires expertise | On-premises, cloud (AWS, GCP, Azure) | CI/CD pipelines, monitoring tools, Istio | Enterprises requiring robust orchestration for multi-cloud or hybrid environments. |
| Docker Swarm | Moderate, ideal for small clusters | Low, easy for Docker users | On-premises, cloud | Docker ecosystem, Kubernetes (optional integration) | Small to medium teams seeking straightforward orchestration within the Docker platform. |
| Amazon ECS | Highly scalable within AWS ecosystem | Moderate, AWS-specific knowledge | AWS (native service) | AWS services (EKS, CloudWatch, IAM) | Businesses already leveraging AWS services for containerized applications. |
| Red Hat OpenShift | Enterprise-grade, highly scalable | Moderate, depends on Kubernetes base | Hybrid environments, Linux-based on-premises/cloud | OpenShift tools, Kubernetes integrations | Enterprises needing managed Kubernetes with robust security and enterprise-grade features. |
| Apache Mesos | Extremely scalable for large systems | High, requires advanced expertise | On-premises, private cloud | Marathon, custom integrations | Advanced users managing diverse workloads beyond containers, such as big data and microservices. |

Examples of container orchestration

Container orchestration provides a number of benefits for organizations, but what do those benefits look like in real-world work situations? We included a couple of common orchestration examples below:

First, consider a large e-commerce platform that experiences heavy traffic during the holiday season. In the past, that platform would have to manually provision additional servers to handle the increased holiday load, which is a time-consuming and error-prone process. With container orchestration, the platform can use an auto-scaling feature that automatically provisions additional containers as traffic increases and scales back down when traffic decreases. That way, capacity grows for the holiday rush and shrinks again in January, once everyone has bought, returned, and exchanged their items.
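
The auto-scaling behavior in this example can be sketched with a simple proportional rule: scale the replica count by the ratio of observed load to target load, within fixed bounds. The rule resembles the one documented for the Kubernetes Horizontal Pod Autoscaler, but the function name, parameters, and limits below are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas, current_load, target_load,
                     min_replicas=1, max_replicas=20):
    """Scale replicas in proportion to observed load versus target load per replica."""
    raw = math.ceil(current_replicas * current_load / target_load)
    # Clamp to the configured bounds so scaling never runs away.
    return max(min_replicas, min(max_replicas, raw))


holiday_peak = desired_replicas(4, current_load=90, target_load=60)
january_lull = desired_replicas(6, current_load=20, target_load=60)
capped = desired_replicas(10, current_load=300, target_load=60)
print(holiday_peak, january_lull, capped)
```

Here four replicas running at 90 against a target of 60 grow to six, six replicas running at 20 shrink to two, and an extreme spike is capped at the configured maximum.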

Second, consider a company that has a website, a mobile app, and a back-end processing system that all run on different servers in different environments. In the past, managing these different applications and environments would require significant manual effort and coordination. With container orchestration, the company can use a single platform to manage all of its containers and environments, allowing it to easily deploy, manage, and scale its applications across different environments. This allows the company to adopt new technologies more easily and streamline its development process.

Monitor your containers with LogicMonitor today

Container orchestration is a critical component of modern application development, enabling teams to efficiently manage, scale, and secure containerized environments. By addressing the challenges of complexity, security, and resource management, and leveraging best practices like CI/CD pipelines and proactive monitoring, organizations can maximize the benefits of container orchestration while minimizing operational overhead.

To fully realize the potential of container orchestration, having a reliable monitoring solution is essential. LogicMonitor offers scalable, dynamic monitoring for ephemeral containerized resources alongside your hybrid cloud infrastructure. With LogicMonitor, you gain visibility into your Kubernetes and Docker applications through a single, unified platform that automatically adapts to your container resource changes.

What is NoSQL?

NoSQL refers to non-tabular databases that structure data differently than relational tables. It is sometimes referred to as non-SQL. NoSQL typically avoids relational data storage; it can still handle relationships in data, but those relationships are built for specialized purposes.

There is much debate regarding SQL vs. NoSQL, with each data management system geared toward specific uses. Unlike SQL, which was developed in the 1970s to limit data duplication, NoSQL is a relatively new type of database. NoSQL came about in response to increasing amounts of data, and it uses a distributed system to help organize large amounts of structured and unstructured data. NoSQL is popular in business tech and other industries, with large organizations such as Amazon, Google, and LinkedIn using NoSQL databases.

Today, large companies are increasingly using NoSQL for data management. For example, a business that needs to store large amounts of unstructured and structured data or manage real-time streaming will want to consider NoSQL.

How NoSQL databases work

NoSQL databases function differently from traditional relational databases, offering a more flexible and scalable approach to data management. Their unique operational mechanisms make them well-suited for handling large-scale, distributed data environments.

NoSQL databases use flexible schemas, allowing dynamic and adaptable data models. Unlike SQL databases with predefined schemas, NoSQL supports various data types, including structured, semi-structured, and unstructured formats. Developers can update schemas without disrupting existing records, enabling rapid application development.

These databases also operate on distributed architectures, spreading data across multiple servers or nodes to ensure high availability, fault tolerance, and seamless scaling. Data replication guarantees durability, while partitioning efficiently distributes workloads to maintain performance under heavy demand.
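
Partitioning and replication can be sketched with a hash-based placement function. This is one common approach and not necessarily how any particular NoSQL product works; the node names, the replication factor, and the "next node in the list" replica rule are assumptions.

```python
import hashlib


def partition_for(key, num_partitions):
    """Map a key to a partition using a stable hash, so placement is repeatable."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % num_partitions


def replicas_for(key, nodes, replication_factor=2):
    """Store each key on its home node plus the following nodes in the list."""
    start = partition_for(key, len(nodes))
    return [nodes[(start + i) % len(nodes)] for i in range(replication_factor)]


nodes = ["node-1", "node-2", "node-3"]
for key in ["user:1", "user:2", "order:17"]:
    print(key, replicas_for(key, nodes))
```

Because every key lives on more than one node, losing a single node leaves at least one copy available, which is the basis for the fault tolerance described above.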

Additionally, NoSQL terminology differs from SQL’s traditional structure. Collections in NoSQL function similarly to tables, grouping related data. Documents replace rows, allowing more flexible records. Some NoSQL models use key-value pairs or column families instead of columns to organize data.

Types of NoSQL databases

The structure and layout of different NoSQL database types depend on the data model. The four main structures are document, graph, key-value, and wide-column.

Document Databases – These databases store data in a format similar to JavaScript Object Notation (JSON). Every document contains field-value pairs, and it typically does not need foreign keys because relationships between documents are not enforced by the database. Other essential features include fast creation, easy maintenance, flexible schema, and open formats.

Graph Databases – This format is primarily for data represented in a graph, such as road maps and public transportation information. The graphs store data in edges and nodes. Nodes generally contain information about people, places, and things, while edges store relational information between the nodes. Using a graph database enables quick identification of data relationships.

Wide-Column Databases – A wide-column database stores information in columns instead of rows. The columns form subgroups, and columns in the same family or cluster can contain different data types. Databases with columns read data more efficiently, and each column has a dynamic schema and isn’t fixed in a table. If you want to store large data, you’ll likely want to consider using wide-column databases.

Key-Value Databases – With the simplest format, key-value databases only have two columns containing keys and values. More extensive data models are sometimes extensions of the key-value database, which uses the associative array as the basic data model. Data also comes in a collection of key-value pairs, and each key never appears more than once in each collection. Important features of this type of database include simplicity, speed, and scalability.
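
To make the four models concrete, here is a rough sketch of how each might look when represented with plain Python data structures. The sample records and the helper function are hypothetical, and real databases add storage, indexing, and query layers on top of these shapes.

```python
# Document model: field-value pairs; documents in one collection may differ in shape.
users = [
    {"_id": 1, "name": "Ada", "email": "ada@example.com"},
    {"_id": 2, "name": "Lin", "tags": ["admin"], "address": {"city": "Oslo"}},
]

# Key-value model: an associative array in which each key appears only once.
sessions = {"session:42": "user-1", "session:43": "user-2"}

# Graph model: nodes hold entities, edges hold the relationships between them.
graph_nodes = {
    "ada": {"type": "person"},
    "lin": {"type": "person"},
    "oslo": {"type": "place"},
}
graph_edges = [("ada", "knows", "lin"), ("lin", "lives_in", "oslo")]

# Wide-column model: rows keyed by ID, columns grouped into families,
# and different rows may carry different columns.
readings = {
    "sensor-1": {"meta": {"site": "north"}, "metrics": {"temp": 21.5, "humidity": 40}},
    "sensor-2": {"meta": {"site": "south"}, "metrics": {"temp": 19.0}},
}


def neighbors(node, edges):
    """Follow outgoing edges from a node to find its direct relationships."""
    return [(relation, target) for source, relation, target in edges if source == node]


print(neighbors("lin", graph_edges))
```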

You’ll also see several specific types of NoSQL databases. Examples include:

- BigTable

- Cassandra

- CouchDB

- FaunaDB

- HBase

- MongoDB

- Redis

NoSQL use cases

NoSQL databases excel in handling diverse and complex data environments, making them indispensable for a wide range of modern applications. Their scalability, flexibility, and high performance allow businesses to tackle demanding workloads effectively.

Real-time data management is one of the most compelling use cases for NoSQL. These databases handle large streams of incoming data with minimal latency, making them ideal for real-time analytics, fraud detection, and live social media feeds. Their ability to process data at lightning speed ensures a seamless user experience even during peak demand.

NoSQL databases play an important role in cloud security by supporting dynamic data models and secure storage. Their distributed nature ensures data integrity, availability, and disaster recovery, making them valuable for enterprises managing sensitive information across multiple cloud environments.

High-availability apps benefit greatly from NoSQL’s fault-tolerant and distributed design. Industries like finance, healthcare, and telecommunications rely on NoSQL databases to maintain uptime and continuous service delivery, even during infrastructure failures or spikes in user traffic.

Diverse workloads such as IoT and e-commerce also thrive with NoSQL. In IoT applications, vast amounts of sensor data require scalable storage solutions that can handle real-time processing and analysis. Similarly, e-commerce platforms depend on NoSQL databases for personalized product recommendations, dynamic pricing, and efficient inventory management.

Benefits

NoSQL offers several benefits:

- Easy for developers to use – One of the first advantages of NoSQL is that some systems only require a few lines of code. The databases also require less general maintenance.

- Flexible schemas – NoSQL is non-rigid, making testing and implementing updates easier. This is necessary for most modern applications because fields are different, and you will often need to make changes quickly and easily.

- Horizontal scaling – Expanding NoSQL is relatively easy and inexpensive, providing horizontal scaling because of its lack of structure. This means every element is independent and doesn’t need links. On the other hand, SQL will require upgrades such as more RAM or CPUs for vertical expansion.

- High performance – NoSQL often has higher performance levels than SQL because it doesn’t need to query data through various tables. Since things move much quicker with all the information in one database, doing 10,000 queries each second with some NoSQL databases is possible.

- Large data storage – NoSQL databases have the potential to store massive data sets, and they can do so at high speed. For example, Cloud Bigtable is a NoSQL database that lets you store structured data while allowing addition and deletion without disturbance.

Drawbacks

The potential drawbacks include the following:

- Requires multiple databases – Since the databases are specialized for particular use cases, you’ll need to use various data models and databases. You might still need to use SQL to help streamline the overall process.

- Requires more support – NoSQL is much newer than SQL. SQL is, therefore, more mature and has a lot of online instructional support. With NoSQL, you’ll likely have more difficulty finding expert support when you need assistance.

- Lack of consistency – NoSQL databases lack standardization, and the programming language and design of these databases vary extensively. This variation among NoSQL products is more extensive than with SQL databases.

- Lack of compatibility – NoSQL is not always entirely compatible with SQL instructions.

- Limited ACID applications – Most NoSQL systems don’t support ACID (atomicity, consistency, isolation, durability) transactions. MongoDB is an exception to this situation.

Choosing a NoSQL database

Selecting the right NoSQL database depends on several factors that align with your organization’s data management needs and business goals. NoSQL databases come in various models, each suited to specific use cases, making it essential to evaluate your options carefully. Key considerations include:

1. Data model selection

- Document databases: Ideal for applications requiring flexible schemas, such as content management systems.

- Key-value stores: Best for caching and real-time session management.

- Wide-column stores: Useful for high-volume analytical applications.

- Graph databases: Perfect for applications emphasizing relationships, like social networks.

2. Consistency trade-offs

- Consider your application’s tolerance for data inconsistency. NoSQL databases often sacrifice strict consistency for availability and scalability, following the CAP theorem.

- Use databases with configurable consistency settings if data accuracy is critical.

3. Cloud compatibility

- Choose a NoSQL database that integrates seamlessly with your cloud provider’s ecosystem.

- Consider managed services to reduce operational overhead and focus on development.

4. Migration strategies

- Plan for data migration if switching from a relational database to NoSQL.

- Ensure your team has the skills and tools necessary for a smooth migration process.

Assessing these factors can help you identify the NoSQL database that best meets your business needs, ensuring optimal performance, scalability, and reliability.

What is MongoDB?

MongoDB is a type of NoSQL database that is document-oriented and uses various documents and collections. It is primarily for high-volume data storage. Key-value pairs are the basic unit for MongoDB.

The following are a few of the essential features of MongoDB:

- MongoDB is very scalable. This system allows developers to write code in whatever language they choose.

- It doesn’t need a schema before starting. You can create the fields as you go.

- Every database will contain collections, with each collection housing documents. Each document can have fields with varying sizes and different content.

- It provides quicker query responses.

- MongoDB provides advanced searching.

- The database features indexing to improve search query performance.

- MongoDB provides data replication, copying data to multiple nodes. Both primary and secondary nodes can replicate data.

- It supports advanced features for searching any field, range of queries, or regular expression.

- MongoDB provides the oplog (operations log) feature. This is a system that collects and stores all database changes. It keeps the changes chronologically and can help with deeper analysis since the oplog is entirely granular.
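
The idea of an operations log that records changes in order, and that other nodes can replay, can be sketched in a few lines. This is a heavily simplified illustration and does not reflect MongoDB's actual oplog format or replication protocol; the class, operation names, and tuple layout are assumptions.

```python
def apply_op(state, op):
    """Apply one logged operation to a dictionary of documents."""
    kind, doc_id, payload = op
    if kind == "insert":
        state[doc_id] = dict(payload)
    elif kind == "update":
        state.setdefault(doc_id, {}).update(payload)
    elif kind == "delete":
        state.pop(doc_id, None)


class Primary:
    """Toy primary node: applies each write, then appends it to an ordered log."""

    def __init__(self):
        self.data = {}
        self.oplog = []

    def write(self, kind, doc_id, payload=None):
        op = (kind, doc_id, payload or {})
        apply_op(self.data, op)
        self.oplog.append(op)


def replay(oplog):
    """Toy secondary node: rebuild state by replaying the log in order."""
    state = {}
    for op in oplog:
        apply_op(state, op)
    return state


primary = Primary()
primary.write("insert", "u1", {"name": "Ada"})
primary.write("update", "u1", {"plan": "pro"})
primary.write("insert", "u2", {"name": "Lin"})
primary.write("delete", "u2")

secondary_state = replay(primary.oplog)
print(secondary_state)
```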

Many of these features point to a common theme, which is flexibility. When using SQL best practices, you must work within the database structure. There’s usually only one best way to do things. When using MongoDB, you’ll have several options for optimizing code throughout the process.

Is MongoDB NoSQL?

Yes, MongoDB is a type of NoSQL. MongoDB is a database management system that stores data as documents in a binary-encoded, JSON-like format called BSON. This structure is helpful for large amounts of data since data storage is efficient and compact. It is document-based and open-sourced.

When using MongoDB, consider the following tips:

- The _id field must appear in every MongoDB document.

- Sharding is a way to distribute data through several partitions.

- There are size limits to keep in mind. When using MongoDB, you cannot exceed 16 MB for documents.

- There are limits on nested data, and storing too many arbitrary objects when using MongoDB is usually not a good idea.

- Note that MongoDB restricts certain characters in field names, including the $ sign and a period (.).
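
The tips above can be turned into a small pre-flight check that runs before a document is sent to the database. This is a rough sketch and not part of any MongoDB driver: the function name is hypothetical, the size is estimated from the JSON encoding (the real limit applies to the BSON encoding), and the exact field-name rules vary by MongoDB version.

```python
import json

MAX_DOCUMENT_BYTES = 16 * 1024 * 1024  # 16 MB limit mentioned above


def check_document(doc):
    """Return a list of problems found in a document, based on the tips above."""
    problems = []
    if "_id" not in doc:
        problems.append("missing _id field")

    def walk(value, path):
        # Recursively inspect nested documents and arrays for restricted field names.
        if isinstance(value, dict):
            for key, child in value.items():
                if key.startswith("$") or "." in key:
                    problems.append(f"restricted character in field name: {path}{key}")
                walk(child, f"{path}{key}/")
        elif isinstance(value, list):
            for index, item in enumerate(value):
                walk(item, f"{path}{index}/")

    walk(doc, "")

    # Approximation only: JSON size stands in for the BSON size.
    approx_size = len(json.dumps(doc).encode("utf-8"))
    if approx_size > MAX_DOCUMENT_BYTES:
        problems.append(f"document too large: about {approx_size} bytes")
    return problems


print(check_document({"_id": 1, "name": "Ada"}))
print(check_document({"profile": {"$rank": 1, "first.name": "Lin"}}))
```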

Like NoSQL, you’ll need to monitor MongoDB effectively. Several specific areas need monitoring:

- Instance Status

- Instance Hardware Metrics

- Replication Metrics

- Connections Metrics and Cluster Operations

What is the difference between SQL and NoSQL?

SQL stands for Structured Query Language. It is the standard language of relational databases, used to search and retrieve information across tables, fields, and records. Some of the most fundamental differences between SQL and NoSQL include:

- NoSQL uses a dynamic schema for its unstructured data, while SQL uses a structured query language with a predefined schema.

- NoSQL databases are scalable horizontally, while SQL is scalable vertically.

- NoSQL has document, graph, key-value, or wide-column store databases, while SQL has table-based databases.

- NoSQL is better suited for unstructured data, while SQL is better suited for multi-row transactions.
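
The schema difference can be illustrated with Python's built-in sqlite3 module on the SQL side and a plain list of dictionaries standing in for a document collection on the NoSQL side. The table, column, and field names are made up for the example, and the list is only a stand-in for a real document store.

```python
import sqlite3

# SQL side: the schema is declared up front.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute("INSERT INTO users (id, name) VALUES (?, ?)", (1, "Ada"))


def insert_with_extra_column(connection):
    """Try to insert a column that the predefined schema does not contain."""
    try:
        connection.execute(
            "INSERT INTO users (id, name, nickname) VALUES (?, ?, ?)",
            (2, "Lin", "L"),
        )
        return True
    except sqlite3.OperationalError:
        # The table has no 'nickname' column, so the insert is rejected.
        return False


sql_accepted = insert_with_extra_column(conn)

# Document side: each record carries its own fields, so a new field needs no migration.
documents = [{"_id": 1, "name": "Ada"}]
documents.append({"_id": 2, "name": "Lin", "nickname": "L"})

print(sql_accepted, len(documents))
```

In the relational case, adding the new field would require altering the table first; in the document case, the new shape is simply stored alongside the old one.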

The bottom line

Each database has its merits, but when considering SQL vs. NoSQL, it’s important to remember a few key points. These include SQL being relational while NoSQL is non-relational, SQL databases generally scaling vertically, and NoSQL falling into four types of structures. When selecting from the NoSQL options, consider MongoDB an advanced database capable of handling dynamic schema and big data.

When evaluating NoSQL databases, consider factors such as scalability, consistency, and use case compatibility. Databases like MongoDB, Cassandra, and Redis provide powerful features designed to handle massive workloads and dynamic data models, making them essential for modern cloud-native applications.

Looking to optimize your data management strategy? Explore how LogicMonitor can help you monitor and manage your database infrastructure. Our comprehensive platform ensures visibility, performance, and reliability across all your IT environments.

The art of monitoring the influence of an application’s performance on business outcomes is constantly evolving. It used to be that directing IT teams to act on insights from an Application Performance Monitoring (APM) solution was enough to drive business outcomes. Now we know the user experience has a heavy hand in determining whether a digital platform survives or dies. An APM solution keeps tabs on the performance of application components such as servers, databases, and services. When it comes to monitoring user experience, Digital Experience Monitoring (DEM) is the key component organizations need to go a step further and really understand how users (human, machine, or digital) are interacting with their digital platforms.

So what is DEM exactly?

DEM is a practice within application performance management that focuses on monitoring and optimizing the overall user experience of digital apps and services. A DEM-enabled monitoring solution combines various techniques to gain insights into user behaviors, experience metrics (page load times, transaction responses, and error rates), application performance, network performance, and infrastructure performance. This allows organizations to proactively identify and address issues driving user satisfaction, improve the overall user experience, and positively drive business outcomes.

While DEM shares a connection with APM, it focuses more on the user’s perspective by tying performance metrics directly to user behaviors and experiences. DEM also complements observability practices by integrating telemetry data into user-centric insights, bridging the gap between technical performance and real-world user interactions.

Over time, DEM has evolved from basic performance monitoring to a sophisticated practice that combines real user monitoring, synthetic testing, and advanced analytics. This progression reflects the growing importance of delivering seamless digital experiences in increasingly complex environments.

Why does DEM matter?

As a monitoring capability, DEM is what mines and presents critical user patterns and trends to IT teams so they can collaboratively elevate their organization’s digital user experience from good to great. In many organizations, APM data gets splintered and analyzed through the lens of the team looking at it. Where DevOps teams are more likely to look at APM insights to keep tabs on application components and code-level performance, ITOps teams are more likely to pay attention to the data regarding broader infrastructure performance (servers, network devices, and databases). DEM provides unified insights from a variety of sources so both DevOps and ITOps get a unified look at the intertwined influences of user behavior, application performance, network metrics, and infrastructure data. This singular data set, coming directly from the users, gets IT teams out of their silos and at the whiteboard to collaborate on solutions.

Consider one scenario organizations will likely experience: a surge in CPU spikes on the servers. In the absence of DEM, DevOps and ITOps teams likely have separate insights into different application components and services, which limits their ability to troubleshoot the problem collaboratively. DEM bridges the gap between DevOps and ITOps, fostering a unified and cohesive approach to monitoring and optimizing the digital experience. It facilitates cross-functional collaboration, breaking down barriers that traditionally impede effective troubleshooting. By eliminating silos and promoting shared visibility, organizations can streamline incident response, reduce mean time to resolution (MTTR), and enhance the overall user experience.

How digital experience monitoring works

DEM works by leveraging a combination of monitoring techniques and technologies to capture, analyze, and interpret data related to user interactions with digital systems. The primary goal is to provide IT teams with actionable insights into how applications, networks, and infrastructure components impact the end-user experience. Here’s how it operates:

- Data collection: DEM solutions collect data from multiple sources, including real user monitoring (RUM), synthetic monitoring, application logs, and network performance metrics. This data spans application transactions, network latencies, server performance, and user interactions.

- Data correlation: Once collected, DEM correlates data points from these sources to build a cohesive picture of the end-to-end digital experience. For example, it links slow page load times with network bandwidth issues or high CPU usage on backend servers.

- Performance analysis: The solution uses advanced analytics and machine learning to identify patterns and anomalies. This enables IT teams to understand the root causes of performance bottlenecks, such as broken application dependencies or network congestion.

- Visualization of insights: DEM provides intuitive dashboards and reports that showcase user experience metrics, performance trends, and incident details. These visualizations are tailored to different teams, allowing DevOps to focus on application-level details while ITOps can monitor broader infrastructure health.

- Proactive alerting: By leveraging synthetic monitoring and threshold-based alerts, DEM identifies potential issues before they impact users. Simulated user journeys test critical workflows like logins or transactions, offering early warning signs of degradation.

- Collaboration enablement: DEM fosters cross-team collaboration by providing unified insights into user experience. Teams can access the same datasets, identify shared goals, and work cohesively to optimize performance and reduce mean time to resolution (MTTR).

By combining these operational mechanisms, DEM ensures organizations can maintain high-quality digital experiences for their users while proactively addressing performance challenges.
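The correlation and alerting steps above can be illustrated with a small sketch. It is not how any particular DEM product works: the sample records, the field names (`page_load_ms`, `cpu_pct`), and both thresholds are assumptions chosen only to show the idea of linking slow page loads to high backend CPU.

```python
from statistics import mean

# Hypothetical samples: each pairs a synthetic page-load timing with the
# backend CPU reading taken during the same minute.
samples = [
    {"minute": 0, "page_load_ms": 820, "cpu_pct": 35},
    {"minute": 1, "page_load_ms": 910, "cpu_pct": 41},
    {"minute": 2, "page_load_ms": 2600, "cpu_pct": 93},
    {"minute": 3, "page_load_ms": 2900, "cpu_pct": 96},
]

PAGE_LOAD_LIMIT_MS = 2000  # assumed alert threshold
CPU_LIMIT_PCT = 90         # assumed alert threshold


def correlate(samples):
    """Return the minutes where slow page loads coincide with high CPU."""
    return [
        s["minute"]
        for s in samples
        if s["page_load_ms"] > PAGE_LOAD_LIMIT_MS and s["cpu_pct"] > CPU_LIMIT_PCT
    ]


suspect_minutes = correlate(samples)
avg_load = mean(s["page_load_ms"] for s in samples)
```

With this sample data, `suspect_minutes` contains minutes 2 and 3, which is the kind of shared signal both DevOps and ITOps could start from.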

Components of digital experience monitoring

DEM is built on several key components that deliver a comprehensive view of the user experience. These components provide the data and insights necessary to monitor and optimize the performance of applications, networks, and infrastructure. Here are the essential building blocks of DEM:

- Real user monitoring (RUM): RUM captures data from actual user interactions with an application or website in real time. It measures page load times, transaction durations, and error rates, offering insights into how users experience the platform. This component is invaluable for identifying pain points in the user journey and uncovering opportunities to enhance engagement.

- Synthetic transaction monitoring: Synthetic monitoring uses simulated user interactions to test critical workflows, such as logging into an account, completing a purchase, or searching for a product. By automating these tests, synthetic monitoring helps IT teams proactively detect issues like slow load times, failed transactions, or outages before they affect real users.

- Endpoint monitoring: Endpoint monitoring tracks the performance of devices and applications used by end users, such as desktops, laptops, and mobile devices. By analyzing factors like application responsiveness, network connectivity, and device health, this component ensures that user-side issues are addressed promptly, minimizing frustration and downtime.

- Application performance monitoring (APM): APM focuses on the performance of the application’s backend components, such as databases, APIs, and servers. It helps IT teams detect code-level issues, optimize application performance, and ensure smooth integration with other systems.

- Network monitoring: Since network performance directly affects the digital experience, DEM includes monitoring network metrics such as latency, bandwidth, and packet loss. This ensures that connectivity issues are identified and resolved to maintain seamless user interactions.

- Session replay: This component records and replays user sessions, allowing IT teams to see how users navigate and interact with digital platforms. Session replay is especially useful for diagnosing complex issues that require context beyond raw data points.
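To make the RUM measurements above concrete, here is a minimal sketch that summarizes page load times and error rate from a batch of user events. The event shape (`load_ms`, `error`) and the sample values are assumptions for illustration, and the nearest-rank percentile is just one common convention.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Hypothetical RUM events: page load time in milliseconds plus an error flag.
events = [
    {"load_ms": ms, "error": err}
    for ms, err in [
        (300, False), (350, False), (400, False), (420, False), (450, False),
        (500, False), (700, False), (900, True), (1500, False), (4000, True),
    ]
]

load_times = [e["load_ms"] for e in events]
p50 = percentile(load_times, 50)   # typical experience
p95 = percentile(load_times, 95)   # slowest tail of users
error_rate = sum(e["error"] for e in events) / len(events)
```

Tracking a tail percentile alongside the median matters because a healthy median can hide a small group of users with very slow page loads.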

Why customer experience matters

Users don’t know which digital offerings use DEM to improve their experiences.

But they will ditch the ones that don’t.

Consider users in the e-commerce and digital retail space. DEM lets those platforms and websites monitor website performance, transaction times, and user interactions. If any of those experiences are suffering from downtime, disrupted transactions, or delayed user interactions, IT teams can use DEM analysis to identify the cause. They can then implement a solution and prevent a spike in cart abandonment rates while improving conversion rates and customer satisfaction ratings.

Let’s explore a second use case for Software-as-a-Service (SaaS) providers. DEM allows them to track user interactions, application response times, and errors to identify opportunities to enhance the customer experience and retain users (who hopefully tell their networks about the positive experience).

In both scenarios, integrating a DEM-enabled application monitoring solution would speed up the process of pinpointing the users’ pain points, diagnosing the root cause, and enabling IT teams to collaboratively solve the problem faster than they could without DEM insights.

Benefits of DEM

DEM-driven insights provide a variety of benefits to organizations looking for data-based strategies to help optimize their resources (both human and financial).

Enhanced user satisfaction

Organizations that monitor user experience metrics, such as page load times, transaction response times, and user interactions, can use this information to prioritize addressing the issues that have the most sway in user satisfaction. Proactively identifying and fixing those high-impact problems will result in higher engagement rates and increased customer loyalty.

Improved performance optimization

The holistic presentation of the end-to-end experience (application, network, and infrastructure performance) enables organizations to identify performance bottlenecks, diagnose issues, and prioritize areas for improvement faster than competitors that rely on an APM solution alone. Leveraging these insights lets IT teams optimize their applications and websites, resulting in faster load times, smoother interactions, and better overall performance.

Data-driven decision making

IT teams can be confident that the solutions they are working on are backed by data that came from the users they are trying to impress. DEM helps developers uncover trends, patterns, and areas of improvement so those teams can prioritize resources to deliver an improved user experience effectively.

Drawbacks of DEM

Before investing, organizations need to consider some of the complexities they are signing up for when they deploy DEM capabilities in their monitoring solution.

Implementation complexity

For large or complex digital environments, integrating various monitoring techniques, tools, and systems may require upskilling or hiring the expertise needed for a successful implementation. In addition to configuring and fine-tuning the monitoring setup, ongoing maintenance and management of DEM can be a long-term investment.

Data volume challenges

DEM generates vast amounts of monitoring data, which can be overwhelming to process and analyze effectively. Organizations need to have robust data management and analysis capabilities already in place to sort through the onslaught of data, as well as a process in place for converting it into actionable insights for IT teams.

Resource considerations

Integrating and maintaining a DEM solution may require financial and resource investments ranging from procuring monitoring tools to hiring skilled personnel. Ongoing data analysis efforts may require long-term resource allocation.

Despite these drawbacks, many organizations will want to harness the benefits of DEM, as they outweigh the challenges.

Developing a digital experience monitoring strategy

Establishing an effective DEM strategy is essential for enhancing user satisfaction and business outcomes. A well-defined approach ensures that DEM integrates seamlessly with existing processes while delivering actionable insights. Here are the key steps to building a robust DEM strategy:

- Start with user-centric goals: Define objectives that focus on improving the user experience. This includes reducing page load times, minimizing transaction errors, and ensuring seamless navigation. A user-centric approach aligns IT teams with what matters most: satisfaction and retention.

- Leverage real-time analytics: Enable real-time data collection and analysis to identify and resolve issues as they occur. This proactive monitoring approach minimizes downtime and ensures that problems are addressed before they impact users.

- Integrate across tools and teams: Ensure your DEM solution integrates with other monitoring tools, such as application performance monitoring (APM), network monitoring, and log management systems. This creates a unified view of the digital ecosystem, fostering cross-team collaboration between DevOps, ITOps, and other stakeholders.

- Prioritize key metrics: Identify and track metrics directly influencing the digital experience, such as transaction response times, error rates, and network latency. Tailor these metrics to your industry and use case to ensure relevance and accuracy.

- Adopt synthetic monitoring: Incorporate synthetic transaction monitoring to test critical workflows and identify issues before they reach end users. This proactive testing complements real user monitoring and strengthens overall system reliability.

- Establish a feedback loop: Create a process for continuously evaluating the effectiveness of your DEM strategy. Use insights from monitoring data to make iterative improvements, such as optimizing application code, upgrading network infrastructure, or refining user interfaces.

- Communicate insights effectively: Provide tailored dashboards and reports for different teams. For instance, technical teams may need granular data, while business teams benefit from high-level KPIs. Ensuring clarity in communication helps align efforts across the organization.

Not all DEM-enabled solutions are the same

Selecting the right APM solution is about more than its list of capabilities. The first consideration should be how a new DEM-enabled APM solution will complement any existing monitoring solutions.

Integration and compatibility

It is essential to evaluate how well the DEM-enabled APM solution integrates with your existing monitoring ecosystem. Consider whether it can seamlessly integrate with other monitoring tools and systems you rely on, such as application performance monitoring (APM) tools, log management, network monitoring, network performance diagnostics, or cloud monitoring platforms. Compatibility between the DEM-enabled APM solution and your existing infrastructure ensures smooth data aggregation, correlation, and analysis.

Scalability and flexibility

Consider whether the DEM-enabled APM solution can scale as your digital infrastructure grows and evolves. It should be able to handle increasing data volumes, monitor diverse applications and services, and adapt to changing technology stacks. Additionally, assess the flexibility of the solution in terms of customization and configuration to align with your specific monitoring requirements.

Context and correlation

An APM solution should give DevOps and ITOps context and correlation within their observability platforms, so both teams can manage application performance and gain digital experience insight across hybrid and multi-cloud environments. By proactively sharing those insights into the digital experience, both teams can own the solutions that enhance user satisfaction, increase productivity, and drive better business outcomes.

How LogicMonitor can help

If DEM is a measure of how much an organization values its users’ experiences, then LogicMonitor’s Application Performance Monitoring solution is how organizations show they’re serious about improving the processes and technologies that ensure their operations don’t just meet users’ expectations but exceed them.

OpenTelemetry integration monitors end-to-end application requests through distributed services in your existing environment.

Performance metrics capabilities can graph everything from high-level KPIs to granular technical metrics, visualizing business outcomes for the teams that need to deliver them.

Synthetic monitoring brings solution theories to life before users can test them in real time. This capability simulates end-user traffic through automated browser tests of user interactions or transactions, giving early insights into the quality of the end-user experience.

The collaboration challenges of remote work

A key conversation topic that repeatedly comes up with our customers is the challenge of collaboration in a remote work environment. Too many channels of communication or documentation are ineffective, and IT professionals are starting to feel fatigued by never feeling quite “in the know” about business decisions that are happening in real time. Collaboration platforms such as MS Teams and Slack are intended to be solutions for these challenges, yet finding the right fit requires careful consideration. When separated from colleagues, teams can feel distant and unmotivated or find it hard to stay focused. Below, we compare Zoom, Slack, and Teams, along with some of the other common collaboration tools teams use to communicate effectively and, ultimately, find balance in a work-from-home lifestyle.

Best online collaboration tools for IT teams

IT professionals have favorite collaboration tools, and recent data highlights their preferences. Each company tracks its statistics differently. While Microsoft hasn’t yet publicly disclosed the exact number of daily meetings conducted, Teams reports up to 5 billion meeting minutes in a single day. It remains a go-to platform for organizations already immersed in the Microsoft ecosystem. With its user-friendly interface and top-notch video quality, Zoom reports 300 million daily active users as of 2024, making it a favorite for virtual meetings. With its robust messaging capabilities and extensive integrations, Slack enjoys a more modest market share, with 32.3 million active users on average each day, catering to teams that prioritize real-time communication.

Unsurprisingly, many organizations mix and match these tools to fit their specific needs, using each where it works best to keep everything running smoothly and strengthen IT business continuity.

Microsoft Teams

Microsoft Teams was the most common response, but what is MS Teams? MS Teams is a chat-based collaboration tool that allows organizations to work together and share information in a common space. It’s part of Microsoft’s robust 365 product suite and offers a range of features that make it stand out for many users.

Public and private chat is a core feature, and with the absorption of Skype for Business, Teams offers integrated video capabilities, including popular social features like emojis and custom memes.

‘Hub’ is another important capability that offers a shared workspace for various Microsoft Office applications such as PowerPoint, Word, Excel, Planner, OneNote, SharePoint, and Power BI. Delve was once an integrated tool, but most of its features have been absorbed into Microsoft 365’s broader capabilities. Teams can remotely work together in one space without toggling between applications.

The users of Microsoft Teams that we polled recognized the ability to share documents across multiple locations and chat across multiple offices as the tool’s most widely used application. They also acknowledged the options for screen sharing or whiteboards.

Video conferencing and online meetings, which can include anyone inside or outside a business, are also important features of the tool. However, many offices use Teams’ video calling and screen sharing internally and turn to other tools, such as Zoom, for externally facing meetings.

As IT organizations implement a collaboration tool like MS Teams, the ability to deliver monitoring alerts directly into the MS Teams chat is a common need (LogicMonitor can utilize the Microsoft Teams API to deliver alerts via a custom HTTP integration). Monitoring user activity, quality of calls, private messages, team messages, and types of devices is also important.

Looking ahead, LogicMonitor will take a more cloud-based approach to monitoring MS Teams to pull important data, such as call quality metrics. Stay up to date by subscribing to our release notes.

At the end of the day, if a company uses Microsoft 365, MS Teams is probably a good collaboration solution. It is included for free with Office 365 and can be easily accessed through 365’s centralized management console.

Microsoft Teams vs. Zoom

Zoom remains one of the most commonly used video conferencing tools, valued for its ease of use, reliable video quality, and popularity in externally facing communication. While both Zoom and Microsoft Teams enable video conferencing, private and public chat, virtual meeting spaces, screen sharing, and file sharing, Microsoft Teams stands out as part of the Microsoft 365 suite and continues to expand its capabilities with AI-powered tools like Microsoft Copilot. This makes Teams a one-stop shop for organizations already using Microsoft’s tools, though it may be less accessible to participants outside the organization than Zoom’s simpler setup process.

Both platforms have made significant advancements in security. Microsoft Teams encrypts data in transit and at rest, and supports multi-factor authentication and Rights Management Services to safeguard sensitive information. Zoom has introduced robust security measures, including end-to-end encryption for meetings, enhanced data privacy controls, and user-friendly security dashboards. Its refined two-factor authentication (2FA) provides flexibility, allowing users to verify identities through authentication apps or SMS codes while keeping alternative methods available if needed.

Both Microsoft Teams and Zoom offer free versions with optional paid upgrades at competitive per-user rates. The choice between the two ultimately depends on your organization’s specific needs. Many businesses find value in using both tools—leveraging MS Teams internally for collaboration and Zoom externally for virtual meetings. Their integration capabilities further enhance workflow efficiencies, ensuring teams can use the right tool for every scenario.

LogicMonitor offers out-of-the-box monitoring for Zoom to further optimize your collaboration tools, compatible with any Zoom account. Learn more about our Zoom monitoring.

Microsoft Teams vs. Slack

Slack is a fun and easy-to-use chat and channel-based messaging platform owned by Salesforce. Slack shines with its bot and app integrations, improving the user’s workplace experience. Onboarding is easy, and there are shortcuts and productivity hacks for just about anything. In terms of features, MS Teams and Slack are fairly evenly matched. Both offer private and public chat, searchable message history, screen sharing, file sharing, and fun integrations to generate GIFs and memes. Both offer free versions of their platform, with upgraded features and integrations on paid plans.

MS Teams beats Slack when it comes to online audio and video sharing and also wins out where security and compliance are of concern. Not only do Microsoft’s data encryption and compliance come into play, but the admin controls are also more extensive than those of any other platform.

We recently updated our Slack integration, and it’s now bidirectional.

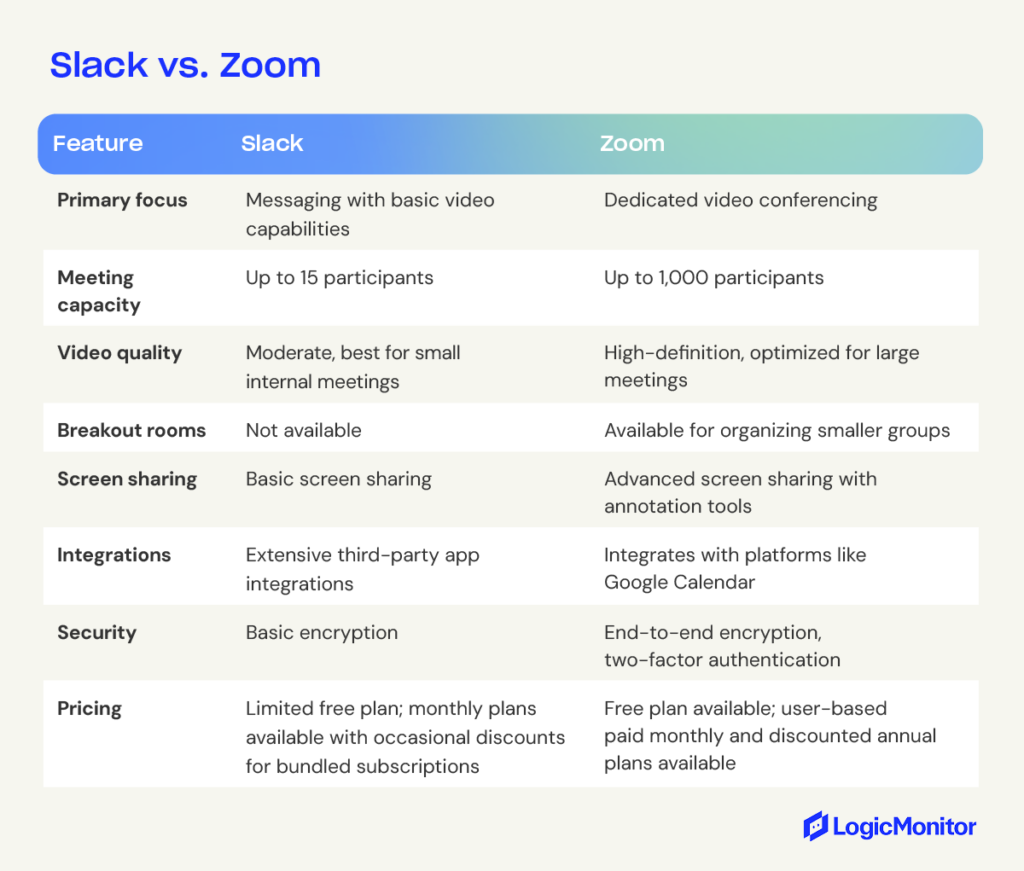

Zoom vs. Slack

Slack and Zoom are both cloud-based collaboration tools. Slack excels at team messaging and integrations, while Zoom specializes in high-quality video conferencing. Each platform caters to distinct communication needs, making them suited for different teams and projects.

Slack is a powerhouse for team messaging and integrations, offering robust real-time communication. It organizes conversations into channels, simplifying collaboration on specific projects. While its video calls are limited in functionality and best suited for smaller team discussions, Slack excels in messaging-based capabilities. It also supports integrations with third-party tools like Google Drive. Features such as pinned messages, customizable notifications, and emoji reactions enhance its usability for day-to-day collaboration.

Zoom specializes in high-quality video conferencing, offering a smooth, reliable experience for any size group. Its key features include HD video and audio, breakout rooms, virtual backgrounds, and whiteboard functionality. These capabilities make Zoom a go-to for presentations, team meetings, and webinars. While Zoom has a functional chat feature, Slack’s is more robust.

For many organizations, Zoom complements messaging platforms like Slack to create a complete collaboration suite. Teams might use Slack for daily messaging and collaboration while relying on Zoom for high-quality virtual meetings. Both platforms offer free versions, making evaluating their fit for your team’s needs easy.

Other collaboration tools to consider

Google Workspace has its own collaboration tool, Google Meet. In the same way that MS Teams is available right from 365, Google Meet is available to any business or individual with Gmail or a Workspace account. However, some features, such as recording meetings or exceeding the 60-minute mark, are reserved for paid plans. If your business already has Google Workspace, Google Meet is a great solution; some find it slightly easier to use than MS Teams.

Cisco Webex is also a leader in online meetings and video conferencing solutions. It has features similar to MS Teams, Google Meet, and Zoom, such as one-to-one or group conferencing, file sharing, and a vast library of integrations. The security features are robust, and there are a variety of protection tools to keep data safe. Learn more about LogicMonitor’s Webex monitoring capabilities.

Trello, Asana, and Monday are all popular project management applications most commonly used in marketing, customer support, sales, and HR. They allow teams to create, track, and manage complex workflows in a centralized hub and are often used in tandem with some of the video, chat, and file-sharing tools discussed above.

Using more than one collaboration tool

Work environments have changed dramatically in recent years. As organizations rely more on remote and hybrid work environments, it makes sense for them to take advantage of multiple collaboration tools to meet diverse needs.

Different platforms excel in specific areas, offering distinct advantages that make them ideal for certain workflows. For example, many teams use Slack for internal messaging and quick collaboration paired with Zoom for virtual meetings, then turn to Google Workspace for email, calendar management, and file sharing. This multi-tool approach provides teams with IT resources to tackle various aspects of their work seamlessly. Discover how LogicMonitor supports remote monitoring to enhance IT workflows.

LogicMonitor embraces this strategy by utilizing Slack for internal chat. We rely on Google Workspace for scheduling and document sharing, while preferring Zoom for internal and external video calls. This combination lets teams leverage the strengths of each platform, staying productive and maintaining a collaborative culture without compromise.

Choosing the right combination depends on your organization’s size, budget, and specific requirements. By exploring different tools and identifying the best fit for your workflows, you can empower your teams to stay connected and productive. Explore integrations with LogicMonitor to enhance your collaboration stack and support your business needs.

How to maximize value with Jira and AWS Lambda integration

One of our engineers on the TechOps team coined the term “Value++.” It references the shorthand operator for “increment” in various coding languages. It is also a motto for what we should be doing as a team—always adding value.

Here are a few things in our day-to-day operations that have been a serious “value--”:

- Answering JIRA tickets that have no description

- “Customer has issue B,” but both the customer name and issue details are omitted from that sentence

- Doing things manually, over and over again

At LogicMonitor, most of the tasks requested of the TechOps team come in the form of JIRA tickets. A new application may be ready for deployment, or a customer account may require a rename. We also have to deal with operational tasks like moving new customer accounts from demo to production environments.

Because LogicMonitor is rapidly growing, we always try to be more efficient by automating ourselves out of work. We decided to automate parts of our DevOps tasks through AWS Lambda functions, API calls, and JIRA tickets. This allows the team to keep track of existing tasks that show up in our queue and spend their time doing more important things.

It’s “Value++.”

Understanding projects and issue types for automation

We first had to lock down specific JIRA projects and issue types to differentiate tasks from other items, creating a separate issue type for every task we wanted to automate. This makes things easy to organize and allows us to lock down who can or cannot make specific tickets.

In this blog, we’ll go over one of our simpler use cases: automatically performing an account rename.

Streamlining workflows with straightforward solutions: The simple stupid

This crude Lucidchart (below) shows the basics of what we did. Every 5 minutes, a CloudWatch Event rule triggers a Lambda function. The function will make a JIRA API call to retrieve a list of tickets. Using those tickets, we will grab the necessary information and make subsequent API calls to backend services within LogicMonitor to perform specific actions, such as renames. Lambda will also actively update and close the tickets upon task completion. The first thing we need to do is know what tickets to look for.
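Before getting into the individual calls, the overall loop described above can be sketched as follows. This is only an outline under stated assumptions: `process_tickets` and its callable arguments are hypothetical stand-ins for the Jira search, the date check, the backend rename call, and the ticket update, none of which are shown here.

```python
def process_tickets(tickets, is_due, perform, mark_done):
    """Run `perform` on each due ticket, then mark it done.

    All arguments are hypothetical stand-ins: `tickets` for the Jira search
    results, and the three callables for the date check, the backend rename
    call, and the Jira transition call.
    """
    handled = []
    for ticket in tickets:
        if not is_due(ticket):
            continue  # leave it for a later scheduled run
        perform(ticket)
        mark_done(ticket)
        handled.append(ticket["key"])
    return handled


def lambda_handler(event, context):
    """Entry point invoked by the scheduled rule; real wiring is left as a stub."""
    tickets = []  # in practice: the result of the Jira search call
    return process_tickets(tickets, lambda t: True, lambda t: None, lambda t: None)
```

Passing the steps in as callables keeps the loop easy to test without touching Jira or any backend service.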

Executing JQL queries directly from AWS Lambda

JIRA Query Language (JQL) is one of the most flexible ways to search for issues in JIRA. We use a JQL query with the JIRA REST API to find specific open tickets with issue types of “account rename.” This should return a list of associated tickets.

    import json

    # `session` (e.g., a requests.Session) and `headers_jira` are assumed to be set up elsewhere
    endpoint = "https://jira_url/rest/api"
    jql_issuetype = "issuetype='Account Rename'"
    jql_project = "project='TechOps Request'"
    status = "status=Open"
    jql = ("jql=" + jql_project +
           "+AND+" + jql_issuetype +
           "+AND+" + status)

    # Search for open "Account Rename" tickets
    r = session.get(endpoint + "/2/search?" + jql, headers=headers_jira)
    response = json.loads(r.text)

    for issues in response["issues"]:
        # Custom fields are referenced by ID rather than by display name
        customer = issues["fields"]["customfield_10001"]
        target_name = issues["fields"]["customfield_14673"]

Taking the list of open tickets, we need to be able to glean important information out of them, some of them in the form of custom fields.
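As a side note on the search call above, hand-assembling the query string works for simple values but gets fragile once names contain spaces or special characters. One alternative, sketched here as an assumption rather than what we actually run, is to let the standard library encode the JQL. The helper name `build_search_url` is hypothetical.

```python
from urllib.parse import urlencode


def build_search_url(base, project, issuetype, status):
    """Build a Jira search URL with the JQL safely URL-encoded."""
    jql = (
        f'project = "{project}" AND issuetype = "{issuetype}" '
        f'AND status = "{status}"'
    )
    return f"{base}/2/search?" + urlencode({"jql": jql})


url = build_search_url(
    "https://jira_url/rest/api", "TechOps Request", "Account Rename", "Open"
)
```

The resulting URL can be passed to the same `session.get` call, with spaces and quotes in the project or issue type names already escaped.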

Customizing workflows with Jira’s custom fields

Custom fields are created by users and are not available in JIRA by default. For our specific use case, we created a few fields, such as customer name, target name, and rename date. From the code example above, you can see that within the JIRA API, you cannot refer to a field by its name alone; you’ll need to use its customfield_id.

Pro tip:

If you don’t want to look at a page of ugly JSON, you can also use the advanced JIRA search bar and type in the field’s name.
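Another way to find these IDs is the Jira REST API’s field listing (`/rest/api/2/field`), which returns every field with its `id`, `name`, and a `custom` flag. The sketch below only processes an already-parsed response; the sample entries and the display names paired with the IDs are made up for illustration.

```python
def custom_field_ids(fields):
    """Map display names to IDs, keeping custom fields only."""
    return {f["name"]: f["id"] for f in fields if f.get("custom")}


# Hypothetical excerpt of a parsed /rest/api/2/field response.
sample_fields = [
    {"id": "summary", "name": "Summary", "custom": False},
    {"id": "customfield_10001", "name": "Customer Name", "custom": True},
    {"id": "customfield_14673", "name": "Target Name", "custom": True},
]

field_ids = custom_field_ids(sample_fields)
```

Looking up `field_ids["Target Name"]` then gives the `customfield_` key to use when reading `issues["fields"]`.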

Embracing event-driven automation with AWS Lambda… most of the time

Usually, when we build apps on Lambda, we have components like Lambda functions and event sources. An event source is an AWS service that publishes events for processing by code within a Lambda function. In this case, performing a rename upon JIRA ticket creation could have been handled with a post function and an API Gateway. However, customers have their own maintenance windows and preferred times for an account rename to happen. Sometimes, customers may want their account renamed on Saturday at 4 a.m. during my personal maintenance (sleep) window. As a workaround, we decided to use a CloudWatch event as a Lambda scheduler.

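    import datetime

    # Lambda runs in UTC; shift the clock by seven hours to match the -0700 offset on the ticket's date
    today = datetime.datetime.today() - datetime.timedelta(hours=7)
    # Strip the "-0700" suffix and parse the rename-date custom field
    desired_date = datetime.datetime.strptime(issues["fields"]["customfield_16105"].replace("-0700", ""), "%Y-%m-%dT%H:%M:%S.%f")
    if today > desired_date:
        create_rename(customer, target_name)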

Our CloudWatch event runs every 5 minutes, triggering our Lambda function. The function first checks whether the current time has passed the value parsed from the rename date custom field (see code above); only then does it continue with the rename.
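The fixed seven-hour shift works as long as every ticket carries the same offset. A variation, offered as a sketch rather than what we run, is to parse the offset with `%z` and compare timezone-aware values. The function name `is_due` and the sample timestamps are assumptions.

```python
from datetime import datetime, timezone


def is_due(raw_date, now=None):
    """Return True once the requested rename time has passed.

    `raw_date` is expected in the form "2019-06-01T04:00:00.000-0700".
    """
    desired = datetime.strptime(raw_date, "%Y-%m-%dT%H:%M:%S.%f%z")
    if now is None:
        now = datetime.now(timezone.utc)
    return now > desired


# 04:00 at UTC-7 is 11:00 UTC, so five minutes later the rename is due.
check_time = datetime(2019, 6, 1, 11, 5, tzinfo=timezone.utc)
due = is_due("2019-06-01T04:00:00.000-0700", check_time)
```

Because both values carry their own offsets, the comparison stays correct even if a ticket is filed with a different UTC offset.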

Combining tools to create seamless automation

At this point, we have collected the information we need. We can perform the rename by making API calls to the backend LogicMonitor services, but we won’t show that code in this blog. However, we also want to treat the JIRA ticket as a state file. We don’t want to keep grabbing the same open tickets repeatedly. This is where we want to use another JIRA API call to move the ticket to a different workflow step (e.g., from “Open” to “In Progress”). However, just like custom fields, we need a specific transition id, which you can find by editing your existing project workflow. We can now update the status of our JIRA ticket programmatically:

    def changeStatus(key, id):
        # Move the ticket through the given workflow transition and set its resolution
        jira_request = {"transition": {"id": id}, "fields": {"resolution": {"name": "Done"}}}
        endpoint = "https://jira_url.com/rest/api"
        r = session.post(endpoint + "/2/issue/%(key)s/transitions?expand=transitions.fields" % locals(), data=json.dumps(jira_request), headers=headers_jira)
        return r.text
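If you would rather not hard-code the transition ID, a GET on the same `/2/issue/<key>/transitions` endpoint lists the transitions currently available for a ticket. The sketch below picks an ID by name from an already-parsed response; the helper name `transition_id` and the sample IDs are assumptions for illustration.

```python
def transition_id(transitions_response, name):
    """Return the ID of the transition with the given name (case-insensitive)."""
    for transition in transitions_response.get("transitions", []):
        if transition["name"].lower() == name.lower():
            return transition["id"]
    raise KeyError(f"no transition named {name!r}")


# Hypothetical parsed response from GET /2/issue/<key>/transitions.
sample_response = {
    "transitions": [
        {"id": "11", "name": "In Progress"},
        {"id": "31", "name": "Done"},
    ]
}

in_progress_id = transition_id(sample_response, "in progress")
```

The returned ID can then be passed to `changeStatus` in place of a value copied from the workflow editor.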

Reducing human errors through intelligent automation: Saving people from people

Customer renames used to be an extremely arduous task for the team. Looking back at the Confluence revision history for our account rename runbook is akin to cleaning out your basement after 20 years. Besides being extremely time-consuming, the process involved halting puppets and, for unknown reasons, executing both a Ruby and a Bash script simultaneously. Sometimes an application restart was required, but not always. As we grow, the only scalable solution is to automate repetitive, manual, and often mind-boggling tasks. It allows us to provide better service for customers and to bypass the mundane to embrace the innovative.

One last tip—and this is the most important part—when we want to automate anything that requires manual input from other people, we have to take human stupidity… uh… error into consideration. Make sure to create validators and conditionals to combat this.

Plus, witty warning messages are a “value++.”
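As an illustration of the kind of validator we mean, here is a minimal sketch that checks a rename request before anything touches a customer account. The naming rule in `NAME_PATTERN` and the function name `validate_rename` are assumptions made up for this example, not our actual rules.

```python
import re

# Assumed rule for illustration only: lowercase letters, digits, and hyphens.
NAME_PATTERN = re.compile(r"^[a-z0-9][a-z0-9-]{1,62}$")


def validate_rename(customer, target_name):
    """Return a list of problems; an empty list means the ticket is usable."""
    problems = []
    if not customer:
        problems.append("customer name is missing")
    if not target_name:
        problems.append("target name is missing")
    elif not NAME_PATTERN.match(target_name):
        problems.append(f"target name {target_name!r} has invalid characters")
    if customer and target_name and customer == target_name:
        problems.append("target name is the same as the current name")
    return problems


issues_found = validate_rename("", "Acme Corp!")
```

Returning every problem at once, rather than stopping at the first, lets the function post a single comment back to the ticket.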

IT automation uses software and technology to handle repetitive IT tasks automatically, reducing the need for manual work and accelerating processes like infrastructure management and application deployment. This transformation is essential for IT teams needing to scale efficiently, as seen in the case of Sogeti, a Managed Service Provider (MSP) that provides tech and engineering resources worldwide.

Sogeti had a crucial IT challenge to solve. The MSP operates in more than 100 locations globally and uses six different monitoring tools to monitor its customers’ environments. It was a classic example of tool sprawl colliding with the need to scale: multiple teams of engineers relied on too many disparate tools to manage their customers’ environments. It soon became too arduous for the service provider to collect, integrate, and analyze the data from those tools.

Sogeti had teams of technicians managing different technologies, and they all existed in silos. But what if there was a way to combine those resources?

IT automation provided a solution.

After working with LogicMonitor, Sogeti replaced the bulk of its repeatable internal processes with automated systems and sequences. The result? The company can now continue to scale its business with a view of those processes from a single pane of glass.

Conundrum cracked.

That’s just one example of how IT automation tools can revolutionize the way an IT services company, such as an MSP or DevOps vendor, executes its day-to-day responsibilities.

By automating repeatable, manual processes, IT enterprises streamline even the most complicated workflows, tasks, and batch processes. No human intervention is required. All it takes is the right tech to do it so IT teams can focus on more strategic, high-priority efforts.

But what exactly is IT automation? How does it work? What are the different types? Why should IT companies even care?

IT automation, explained

IT automation is the creation of repeatable software processes that reduce or eliminate manual or human-initiated IT tasks. It allows IT organizations such as MSPs, DevOps teams, and ITOps teams to automate jobs, save time, and free up resources.

IT automation takes many forms but almost always involves software that triggers a repeated sequence of events to solve common business problems. Examples include automating a file transfer, so that a file moves from one system to another without human intervention, or autogenerating network performance reports.
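To make the file transfer example concrete, here is a minimal sketch that moves matching files from one directory to another. The function name `transfer_files`, the directory names in the usage comment, and the `*.csv` default are assumptions for illustration; a real transfer between systems would more likely go over SFTP or an API than a local move.

```python
import shutil
from pathlib import Path


def transfer_files(source_dir, dest_dir, pattern="*.csv"):
    """Move every file matching pattern from source_dir into dest_dir."""
    source, dest = Path(source_dir), Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)  # create the target if it is missing
    moved = []
    for path in sorted(source.glob(pattern)):
        if path.is_file():
            shutil.move(str(path), str(dest / path.name))
            moved.append(path.name)
    return moved


# Example usage with hypothetical directories:
# transfer_files("outbox", "inbox")
```

Run on a schedule, a function like this replaces the person who used to drag files across by hand, and the returned list doubles as a simple log of what moved.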

Almost all medium and large-sized IT-focused organizations use some automation to facilitate system and software processes, and smaller companies benefit from this tech, too. The most successful ones invest heavily in the latest tools and tech to automate an incredible range of tasks and processes to scale their business.

The production, agricultural, and manufacturing sectors were the first industries to adopt IT automation. However, this technology has since extended to niches such as healthcare, finance, retail, marketing, services, and more. Now, IT-orientated companies like MSPs and enterprise vendors can incorporate automation into their workflows and grow their businesses exponentially.

How does IT automation work?

The software does all the hard work. Clever programs automate tasks that humans lack the time or resources to complete themselves.

Developers code these programs to execute a sequence of instructions that trigger specific events from specific operating systems at specific times. For example, a developer might program software to generate a report from customer relationship management (CRM) data every morning at 9 a.m. Users of those programs can then customize instructions based on their business requirements.
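The 9 a.m. report can be sketched in a few lines. Everything here is hypothetical: the `next_run` and `build_report` names, the shape of the CRM records, and the idea of computing the schedule by hand. In practice, teams usually hand the timing to cron or another scheduler and keep only the report logic in code.

```python
from datetime import datetime, time, timedelta


def next_run(now, run_at=time(9, 0)):
    """Return the next datetime at which the daily job should fire."""
    candidate = datetime.combine(now.date(), run_at)
    if candidate <= now:  # today's slot has already passed
        candidate += timedelta(days=1)
    return candidate


def build_report(customers):
    """Summarize hypothetical CRM records as a one-line report."""
    active = sum(1 for c in customers if c["active"])
    return f"Customers: {len(customers)}, active: {active}"


print(next_run(datetime(2024, 1, 1, 8, 30)))  # 2024-01-01 09:00:00
print(build_report([{"active": True}, {"active": False}]))  # Customers: 2, active: 1
```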

With so many benefits of IT automation, it’s no wonder that two-thirds of CFOs plan to accelerate the automation of repetitive tasks within their companies.

Why do businesses use IT automation?

IT-focused businesses use automation for various reasons:

- It makes life easier for tech teams. For example, engineers and technicians at MSP companies no longer have to execute tasks like network performance analysis, data security management, or reporting manually. The software takes care of everything for them so they can better focus their efforts on other tasks.

- It makes life easier for non-tech teams. Employees across all departments within an IT-focused organization benefit from automation because they can carry out responsibilities on software and systems with less manual work. For example, administrative employees in a DevOps consulting firm can generate payroll reports without entering information by hand.

- It helps CIOs and executives scale their businesses because other employees, such as engineers and MSP professionals, can complete jobs with minimum effort. Automation frees up tech resources and removes as much manual IT work as possible, allowing IT-centered organizations to improve their margins and grow.

- It helps CIOs and executives fulfill client-orientated objectives by improving service delivery. Automation can also advance productivity across an organization, which results in better service level agreement (SLA) outcomes. Again, the right automation software removes as much manual work as possible for tech teams so businesses can grow and carry out responsibilities more efficiently.

- It allows MSPs and other IT companies, especially smaller ones, to survive in ever-competitive environments. By automating IT processes, these enterprises can stay competitive with more tech resources and reduced manual labor.

- It allows for improved profitability in IT companies. For example, MSPs can onboard more clients without hiring new engineers. That’s because automated systems delegate tasks and resources seamlessly.

- It reduces costs for IT companies by saving time and improving operational efficiencies. For example, by freeing up human resources, enterprises can focus on generating more sales and revenue. As a result, CIOs and executives have more money to spend on labor and can add highly skilled IT professionals to their tech teams.

Key benefits of IT automation

IT automation delivers many advantages that extend beyond simple task delegation. Let’s look at a few benefits your organization will see.

Enhanced organizational efficiency

Given the complexity of modern IT infrastructure, a single environment may handle thousands of requests daily, covering everything from password resets to system failures. Automation can help reduce the time it takes to handle many of those requests. For example, a telecommunications company with a lot of infrastructure can automate its network configuration process, cutting deployment time from a few weeks to less than a day.

Reduced errors

Human error in IT environments can be costly. Mistakes can lead to unexpected system downtime, security breaches, and bad data, all of which become less likely when automation enforces consistent standards. Automation helps your team eliminate routine data entry and other manual tasks, which greatly reduces the chance of human error. For example, your team may decide to create backup scripts for more complicated setups to ensure you always have reliable backups.

Faster service delivery

Automation helps speed up responses to common IT requests. If your IT team is stuck needing to perform every task manually, it increases incident response time and the length of time your customer waits on the other end of the line for a fix. Automation speeds up common tasks—setting up VPN access, account resets, report creation, and security scans—allowing your team to focus on finding the root cause of problems, deploying resources, and bringing systems back online.

Streamlined resource allocation

Your organization’s IT needs may fluctuate depending on how many users you have and their activities. A strict guide for resource usage may result in some users being unable to work efficiently because of slow systems. Automation can help by allocating resources dynamically. For cloud services, you can scale your servers based on demand, and for network traffic, you can adjust traffic routes based on usage.

Enhanced compliance and security

Automated systems can help your team maintain detailed audit trails and enforce consistent security policies. They can also help with continuous monitoring, allowing your team to get alerts immediately when your solution detects suspicious activity. Additionally, your IT systems can automatically generate compliance reports, such as SOC 2, for review, helping your team find potential problems and comply with audit requests.

Different IT automation types

IT companies benefit from various types of IT automation.

Artificial intelligence

Artificial intelligence (AI) is a branch of computer science concerned with developing machines that automate repeatable processes across industries. In an IT-specific context, AI automates repetitive jobs for engineers and IT staff, reduces the human error associated with manual labor, and allows companies to carry out tasks 24 hours a day.

Machine learning

Machine learning (ML) is a type of AI that uses algorithms and statistics to find real-time trends in data. This intelligence proves valuable for MSPs, DevOps, and ITOps companies. Employees can stay agile and discover context-specific patterns over a wide range of IT environments while significantly reducing the need for case-by-case investigations.

Robotic process automation

Robotic process automation (RPA) is a technology that instructs ‘robots’ (machines) to emulate various human actions. Although less common in IT environments than AI and ML, RPA still provides value for MSPs and other professionals. For example, enterprises can use RPA to manage servers, data centers, and other physical infrastructure.

Infrastructure automation

IT infrastructure automation involves using tools and scripts to manage computing resource provisioning without manual intervention. This includes tasks like server provisioning, bandwidth management, and storage allocation. The result is dynamic resource usage, with the most resources going to the users and applications with the most need.

How can businesses use IT automation?

A proper automation strategy is critical for IT companies. CIOs and executives should decide how to achieve automation within their organizations and then choose the right tools and technologies that facilitate these objectives.

Doing so will benefit your business in many ways.

- Improve your company’s operation by removing redundant tasks and freeing up time to work on more mission-critical jobs

- Enhance customer satisfaction by responding to and resolving problems more quickly

- Improve employee satisfaction by making sure business systems stay online, helping meet their expectations and improving their ability to do their jobs

Here are some examples of how IT companies use automation:

Templating/blueprints

Companies can automate templates and blueprints, promoting the successful rollout of services such as network security and data center administration.

Workflow/technology integration

Automation allows companies to integrate technology with workflows. As a result, CIOs and executives complete day-to-day tasks more effectively with the latest hardware and software. For example, automating server management to improve service level management workflows proves useful if clients expect a particular amount of uptime from an MSP.

AI/ML integration

AI and ML might be hard for some companies to grasp at first. However, teams can learn these technologies over time and eventually combine them for even more effective automation within their organizations.

Auto-discovery

Automated applications like the LogicMonitor Collector, which runs on Linux or Windows servers within an organization’s infrastructure, use monitoring protocols to track processes without manual configuration. Users discover network changes and network asset changes automatically.
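The core idea behind spotting asset changes can be shown with a simple comparison of two scans. This is only a conceptual sketch: `diff_inventory` and the device names are invented for the example, and it does not describe how the LogicMonitor Collector works internally.

```python
def diff_inventory(previous, current):
    """Compare two scans (sets of device identifiers) and report what changed."""
    return {
        "added": sorted(current - previous),
        "removed": sorted(previous - current),
    }


yesterday = {"router-1", "switch-1", "web-01"}
today = {"router-1", "web-01", "web-02"}
print(diff_inventory(yesterday, today))
# {'added': ['web-02'], 'removed': ['switch-1']}
```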

Auto-scaling

IT companies can monitor components like device clusters or a VM in a public cloud and scale resources up or down as necessary.
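A scaling decision often comes down to one proportional calculation. The sketch below assumes CPU utilization is the signal and uses made-up defaults (a 60% target and a limit of 10 replicas). The rule is similar in spirit to the one Kubernetes documents for its Horizontal Pod Autoscaler, but this is a simplified illustration rather than any product’s implementation.

```python
import math


def desired_replicas(current, cpu_percent, target_percent=60.0,
                     min_replicas=1, max_replicas=10):
    """Size the pool so that average CPU lands near the target."""
    wanted = math.ceil(current * cpu_percent / target_percent)
    # Clamp to the allowed range so a bad reading cannot scale to zero or to infinity.
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(4, 90))  # 6: scale up under load
print(desired_replicas(4, 30))  # 2: scale down when idle
```

The minimum and maximum bounds matter as much as the formula: they keep a faulty metric from shutting everything down or running up the cloud bill.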

Automated remediation/problem resolution

Hardware and software can present companies like MSPs with all kinds of problems (downtime, system errors, security vulnerabilities, alert storms, etc.). Automation can identify and resolve many infrastructure and system issues with little or no human effort.
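A common remediation pattern is check, fix, and check again, with a cap on attempts so that a stubborn failure gets escalated to a person. In this sketch, `remediate` and the simulated service are hypothetical stand-ins; a real `fix` might restart a process or clear a full disk.

```python
def remediate(check, fix, max_attempts=3):
    """Apply fix() until check() passes or attempts run out.

    Returns (healthy, fixes_applied).
    """
    for attempt in range(max_attempts + 1):
        if check():
            return True, attempt
        if attempt < max_attempts:
            fix()
    return False, max_attempts


# Simulated service that recovers after two restarts.
state = {"restarts": 0}


def service_is_healthy():
    return state["restarts"] >= 2


def restart_service():
    state["restarts"] += 1


print(remediate(service_is_healthy, restart_service))  # (True, 2)
```

When the function returns `False`, that is the cue to page a human instead of looping forever.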

Performance monitoring and reporting

Automation can generate regular performance reports, SLA reports, compliance reports, and capacity planning forecasts. It can also raise alerts when problems occur and report on trends to help your business plan capacity.
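An SLA report ultimately rests on simple arithmetic over health-check results. The sketch below assumes each check is recorded as `True` (up) or `False` (down) and uses a hypothetical 99.9% target. The function names and report wording are illustrative only.

```python
def uptime_percent(checks):
    """Share of health checks that passed, as a percentage rounded to two decimals."""
    if not checks:
        raise ValueError("no checks recorded")
    return round(100.0 * sum(checks) / len(checks), 2)


def sla_report(service, checks, target=99.9):
    """Build a one-line SLA summary for a service."""
    uptime = uptime_percent(checks)
    status = "met" if uptime >= target else "MISSED"
    return f"{service}: {uptime}% uptime, SLA target {target}% {status}"


print(sla_report("api", [True] * 999 + [False]))
print(sla_report("db", [True] * 9 + [False]))
```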

Best practices for automation success

Successfully automating IT in business requires careful planning and thoughtful execution. Follow these best practices to avoid the common mistakes and maximize efficiency:

- Align automation and business goals: Don’t just start automating everything possible without a plan. Begin by identifying what you want to achieve with automation. Look for areas to reduce operational costs, improve service, and enhance customer satisfaction, and start with the areas that have the most impact and help you reach your goals. Consider asking stakeholders and employees about their biggest friction points and whether those can be automated.

- Start small: Investing in IT automation is an ongoing task, and you may not do things right the first time. Start small with quick wins. Learn what works for your business and pilot your initial automation tasks to test how they work. Eventually, begin scaling as you gain insights from smaller projects to inform larger, more impactful ones.

- Focus on security: Although your team may not be working with data manually as much, security is still a must with IT automation. Integrate secure protocols at every layer of your systems and processes. Look at your regulatory requirements to determine your needs, and regularly audit your systems to identify potential weaknesses.

- Document everything: If things go wrong, you need detailed records about your automation process. Create documents that detail every system, the automation tools and scripts that belong to each system, and common troubleshooting tips for quickly dealing with problems. Make the documentation available to all team members so they can look up how things work and manage their designated automation systems.