OpenShift is a platform that allows developers, operations engineers, or even DevOps professionals to run containerized applications and workloads. It is best described as a cloud-based container orchestration platform, although an “on-prem” version is also possible.

Under the hood, it’s powered by Kubernetes, but an additional architectural layer makes life simpler for DevOps teams. OpenShift is from enterprise software specialist Red Hat and provides a range of automation options and lifecycle management, regardless of where you run your applications.

OpenShift architecture runs in any environment. The OS is usually Linux, but it also can use Centos. On top of that is the standard Kubernetes layer. However, there’s also an additional layer transforming Kubernetes into OpenShift.

OpenShift versions

Red Hat’s OpenShift container platform comes in a few different “flavors,” as IBM likes to call them. OKD or Origin Key Distribution powers an open-source version. OpenShift’s payable versions come with dedicated support, but only within the version lifecycle. For example, OpenShift 4.12 went live on January 17, 2023, and is supported until January 2025.

Why is OpenShift so popular?

OpenShift provides a range of enterprise-ready services straight out of the box. Plenty of other container orchestration services are available, such as Amazon EKS or the Google Kubernetes Engine (GKE). However, with any of these, businesses often need to invest in multiple additional services to make them useful as a full deployment and delivery solution.

OpenShift is a more desirable solution for enterprises that want to be able to build, deploy, and scale fast using a single platform.

How OpenShift differs from other container orchestration platforms

Other container orchestration platforms are aimed at everyone, from individual developers to SMEs, but OpenShift is marketed toward large enterprises. OpenShift empowers businesses to shift to cloud-native development and embrace continuous integration and delivery (CI/CD). Various levels of automation simplify day-to-day tasks and free up DevOps to spend time on other tasks. The platform includes features designed to facilitate faster deployment, plus a full suite of services.

Unlike many competitors, OpenShift provides extensive integrated services that support the full application lifecycle out of the box. Let’s examine a couple of popular OpenShift competitors.

- Docker Swarm is known for its simplicity and ease of use, appealing to smaller teams or projects that need straightforward container management without complex setup. However, it lacks the robust CI/CD capabilities and advanced security features that OpenShift offers.

- Amazon EKS and Amazon GKE provide scalable, cloud-native Kubernetes environments that are tightly integrated with their respective cloud platforms. While they offer powerful options for teams already using AWS or Google Cloud, they often require supplementary services. OpenShift’s all-in-one approach delivers built-in developer tools, automation for CI/CD, and strong multi-cloud and hybrid support.

Architectural components of OpenShift

OpenShift’s multi-layered architecture combines infrastructure and service layers with a structured node system to ensure flexibility, scalability, and performance across various environments.

Layer types

- Infrastructure layer: This foundational layer is the launchpad for deployment across physical, virtual, or cloud-based setups. Compatible with major public clouds like AWS, Azure, and GCP, it abstracts hardware quirks to provide a seamless environment for containerized apps.

- Service layer: Built on Kubernetes, the service layer powers OpenShift’s core. Packed with Red Hat’s integrated tools for monitoring, logging, and automation, it acts as a central command hub—managing networking, storage, and security. Plus, built-in CI/CD pipelines keep development and deployment fast and friction-free.

Node types

In OpenShift, nodes are the backbone of the cluster, working together to stay organized and efficiently manage workloads:

- Master nodes: The brains of the operation, master nodes handle API requests, coordinate workloads, and allocate resources across the infrastructure.

- Infrastructure nodes: Dedicated to essential service components, such as routing, image registries, and monitoring, infrastructure nodes free up worker nodes so they can focus solely on running your apps.

- Worker nodes: Running the containerized applications, worker nodes keep workloads balanced across the cluster to maintain high performance and ensure that reliability never wavers.

By combining these layers and nodes, OpenShift simplifies operational complexity without sacrificing scalability or security. This powerful mix lets enterprises confidently approach cloud-native development by utilizing built-in CI/CD, observability, and strong security practices to support every stage of the application lifecycle.

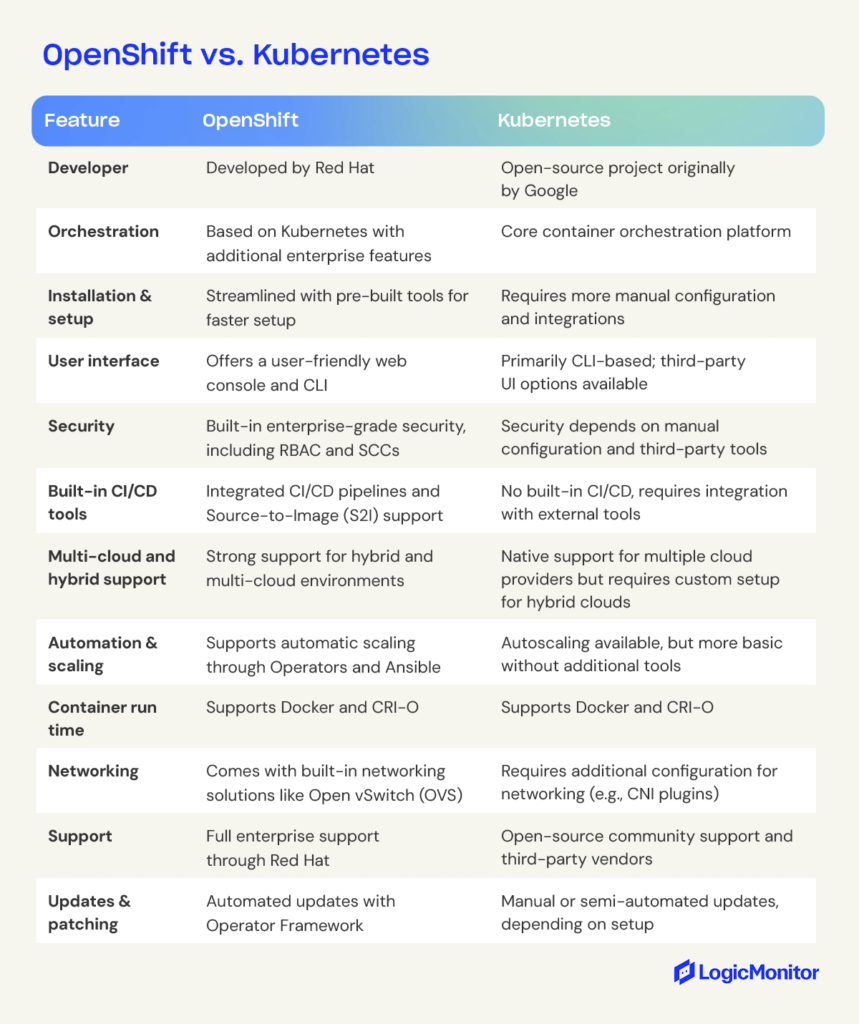

OpenShift vs. Kubernetes

Both OpenShift and Kubernetes offer powerful container orchestration, except OpenShift builds on Kubernetes with additional enterprise-ready features. Let’s take a closer look at how these platforms compare in terms of functionality, setup, and support.

Key features of OpenShift

OpenShift utilizes image streams to shift container images to the cluster. Image streams allow changes to occur via automation. Basically, as soon as any alteration occurs in the source code, an image stream allows a developer to push those changes through with minimal application downtime.

On the monitoring and automation side, OpenShift has some serious tools for streamlined management. Built-in monitoring dives deep into container performance, resource usage, and troubling issues you might encounter, helping DevOps pinpoint and remedy bottlenecks quickly.

On the automation side, OpenShift uses Operators and Ansible Playbooks to handle routine management tasks and scale infrastructure. Operators act like custom helpers that simplify deployment and maintenance, while Ansible Playbooks add scripting power, letting teams easily spin up new nodes or containers.

Since OpenShift is cloud-based, it plays well with any infrastructure, making it ideal for multi-platform development. Developers don’t have to constantly shift how they code to match different ecosystems. Plus, OpenShift includes upstream Kubernetes and Linux CoreOS, delivering an all-in-one solution right out of the box.

Best practices for monitoring

Built-in tools like Prometheus and Grafana are great for tracking container health and resource usage, while external tools like Dynatrace bring real-time insights and anomaly detection for enhanced observability. Dynatrace’s integration with OpenShift helps teams monitor app health, dependencies, and resource demands, giving them a proactive option for tackling issues.

With OpenShift 4.12, new features like IBM Secure Execution, pre-existing VPC setups, and custom cluster installations improve monitoring and automation capabilities, making it even better suited for the continual demands and unique needs of enterprise environments.

Benefits of OpenShift

One of OpenShift’s standout advantages is its support for hybrid and multi-cloud environments. This allows you to launch and manage applications seamlessly across a mix of on-prem, private, and public cloud environments. This flexibility helps avoid vendor lock-in, balance workloads between environments, and give you value for your cost with top-notch performance.

Seamless integration across platforms

OpenShift’s consistent Kubernetes-based foundation makes it easier to deploy, manage, and scale applications across cloud providers and on-premises data centers. With built-in automation tools like Operators and Ansible Playbooks, OpenShift maintains application consistency and performance across different platforms, providing users a uniform experience even in complex multi-cloud deployments.

Hybrid Cloud benefits

If your business embraces a hybrid cloud, OpenShift offers tools for optimizing resources and scaling applications between on-prem and cloud environments. Its hybrid support enables you to keep critical workloads on-prem while taking advantage of the cloud’s scalability and cost efficiency. OpenShift’s centralized management is all about simplicity and efficiency, giving DevOps cloud and on-prem resource management with a single console.

Streamlined operations

With its unified management console and automation features, OpenShift enables your team to deploy updates across multiple environments without needing custom solutions for each platform. This reduces operational overhead and helps you stay agile, making OpenShift a compelling option for organizations moving toward cloud-native development.

Use case example: financial services

A financial institution looking to maximize operational efficiency while meeting regulatory requirements could use OpenShift’s multi-cloud support to manage sensitive data in an on-prem environment while launching customer-facing applications in the cloud. This setup balances security with scalability, letting them respond rapidly to changing customer needs without compromising data protection.

Scaling with OpenShift

Scalability can be a challenge as apps acquire larger user bases or need to perform additional tasks. OpenShift supports the deployment of large clusters or additional hosts and even provides recommended best practices to assure persistent high performance even as applications grow. For example, the default cluster network is:

cidr 10.128.0.0/14

However, this network only allows clusters of up to 500 nodes. OpenShift documentation explains how to switch to one of the following networks:

10.128.0.0/12 or 10.128.0.0/10

These networks support the creation of clusters with more than 500 nodes.

OpenShift allows developers to create “stacks” of containers without reducing performance or speed.

OpenShift also utilizes other tools in its tech stack to support scaling, such as Ansible Playbooks. Ansible is an open-source infrastructure automation tool that Red Hat initially developed. By taking advantage of Ansible Playbooks, OpenShift allows developers to create a new host speedily and bring it into the cluster, simplifying scaling up or down.

OpenShift security

OpenShift is built with enterprise security in mind, supporting secure deployment and scaling while also protecting the development infrastructure. Considering cyberattacks surged by 30% year-over-year in Q2 2024, reaching an average of 1,636 attacks per organization per week, this is a key benefit for many developers.

With built-in support for Role-Based Access Control (RBAC) and Security Context Constraints (SCCs), OpenShift lets you enforce strict access control policies, giving only authorized users access to specific resources. OpenShift’s security framework also integrates seamlessly with many existing corporate identity management systems, providing Single Sign-On (SSO) capabilities that make user management even easier.

Automated security updates and patching

One of OpenShift’s most outstanding security features is its automated updates and patching. By making these tasks automatic, OpenShift reduces the possibility of security risks that tend to go along with outdated software versions or configurations. This reduces the likelihood of vulnerabilities in your production environments. Through frameworks like Operators, OpenShift manages updates for both the platform and applications it supports, enabling DevOps teams to keep security measures current with little to no manual intervention.

Network and data protection

OpenShift offers several powerful network security features, including encrypted communication between containers and stringent network traffic flow restriction policies to reduce exposure. It also offers data encryption both at rest and in transit, helping to keep sensitive information protected throughout its lifecycle.

Security across hybrid and multi-cloud environments

For organizations with hybrid and multi-cloud architectures, OpenShift ensures that security policies are consistent across environments, giving teams unified security protocols to manage applications. OpenShift’s multi-environment security supports compliance while retaining the flexibility of a hybrid cloud, making it especially valuable if your company handles sensitive data that has to comply with regulatory standards.

OpenShift use cases

OpenShift is ideal for modernizing existing apps as well as creating new ones. It transforms the deployment of upgrades and changes, allowing for effortless scaling. Because OpenShift runs on any cloud, it effectively future-proofs applications while ensuring they remain secure and stable. Use cases include:

- Lifting and shifting existing web apps into containerized environments

- Developing cloud-native applications

- Creating apps via distributed microservices

- Quickly add a new service or feature to an existing app

This last point is a key feature of continuous integration and continuous delivery (CI/CD) and is vital for retaining an engaged user base.

Industry use cases

OpenShift is widely adopted across industries, offering flexibility, security, and scalability that make it a top choice for diverse applications:

Financial services: Financial institutions benefit from OpenShift’s security features, ensuring compliance with GDPR and PCI DSS regulations. Banks can keep sensitive data secure on-premises by utilizing hybrid cloud capabilities while deploying customer-facing applications in the cloud. For example, a financial institution in Peru used OpenShift to regularly increase the number of services available to users, reducing the need for in-branch visits and cutting transaction costs by 3%.

Healthcare: Healthcare providers rely on OpenShift to maintain HIPAA compliance and secure patient data across on-premises and cloud environments. OpenShift’s RBAC, SCCs, and data encryption help keep patient data protected at all stages. Another helpful feature is OpenShift’s automated updating, which reduces the need for manual patching, freeing IT resources to focus on other critical tasks.

Retail: In retail, OpenShift empowers companies to build and scale e-commerce platforms quickly, providing a sturdy foundation for handling high traffic volumes during peak times. With CI/CD automation, retailers can update their online stores and integrate new features as often as necessary to keep up with market demands, giving their customers a more pleasant shopping experience.

Implementing continuous integration and delivery (CI/CD)

CI/CD is a growing development approach that uses automation to ensure app updates and adjustments happen as quickly as possible with minimal downtime. Containerized development environments already support continuous integration—the rapid testing and deployment of small code changes—by allowing tests to occur in isolation prior to deployment. Thanks to its simplified interface, OpenShift makes CI/CD pipelines even more efficient by reducing the risk of human error and helping developers maintain consistent processes.

Research shows that even though the benefits of CI/CD are clear, not all organizations are confident of their ability to make this shift. OpenShift could help businesses maximize their digital transformation efforts by empowering developers to embrace the CI/CD culture and get apps to users faster.

OpenShift provides templates for objects and utilizes Jenkins jobs and pipelines to improve automation and promote CI/CD for all application development and deployment. For those comparing Jenkins tools for CI/CD, this article on Jenkins vs. Jenkins X can help clarify which solution best fits your needs.

How to set up and deploy using an OpenShift cluster

Firstly, a developer or DevOps professional needs to get access to OpenShift. You can download and manage the free version yourself, but the fully managed version needs to be purchased from Red Hat. When you subscribe to a hosted version of OpenShift, you’ll get the secure credentials needed to deploy the OpenShift environment.

The simplest way to interact with OpenShift is via the web console. There is also an oc command-line tool.

Before deploying any application, you must create a “project.” This contains everything related to the application.

At this point, you can also use the web console to add collaborators.

You can deploy applications to OpenShift clusters via various methods, including:

- Using an existing container image hosted outside the OpenShift cluster

- Importing an existing container image into an image registry within the OpenShift cluster

- Using source code from a Git repository hosting service

OpenShift also provides templates to simplify the deployment of apps with multiple components. Within the template, you can set your own parameters to exercise complete control over the deployment process. To access these, use the console’s “Add to Project” function. There’s a whole section here dedicated to CI/CD.

To enter image stream tags, use the “Deploy Image” tab in the console or “oc new-app” in the CLI. You can monitor or even scale up from here by adding more instances of that container image.

Wrapping up

Red Hat provides extensive resources to support teams deploying and optimizing OpenShift, making it easier to get the best from this platform. With robust automation and security features and its support for hybrid and multi-cloud environments, OpenShift proves to be a powerful solution for modern app development and deployment. OpenShift enables you to confidently scale, secure, and streamline applications, creating an agile and resilient infrastructure that meets today’s evolving demands.

NetApp, formerly Network Appliance Inc., is a computer technology company specializing in data storage and management software.

Known for its innovative approach to data solutions, NetApp provides comprehensive cloud data services to help businesses efficiently manage, secure, and access their data across diverse environments. Alongside data storage, NetApp offers advanced management solutions for applications, enabling organizations to streamline operations and enhance data-driven decision-making across hybrid and multi-cloud platforms.

What is NetApp?

NetApp is a computer technology company that provides on-premises storage, cloud services, and hybrid data services in the cloud. Its hardware includes storage systems for file, block, and object storage. It also integrates its services with public cloud providers. NetApp’s services offer solutions for data management, enterprise applications, cybersecurity, and supporting AI workloads. Some of its main products include different storage software and servers.

NetApp has developed various products and services, and according to Gartner, was ranked the number one storage company in 2019. The following includes detailed definitions of NetApp’s key terms and services.

Azure NetApp is a popular shared file-storage service used for migrating POSIX-compliant Linux and Windows applications, HPC infrastructure, databases, SAP HANA, and enterprise web applications.

Why choose NetApp?

NetApp provides organizations with advanced data storage and management solutions designed to support diverse IT environments, from on-premises to multi-cloud. For businesses looking to enhance their infrastructure, NetApp offers several key advantages:

- Cost efficiency: NetApp’s tools help optimize cloud expenses, enabling companies to manage data growth without excessive costs, while its efficient storage solutions reduce overall spending.

- Scalability: Built to grow with your business, NetApp’s services seamlessly scale across cloud, hybrid, and on-premises environments, making it easier to expand as data needs evolve.

- Enhanced security: NetApp prioritizes data protection with features designed to defend against cyber threats, ensuring high levels of security for critical information.

- Seamless cloud integration: With strong support for leading cloud providers like Google Cloud Platform (GCP) and AWS, NetApp simplifies hybrid cloud setups and provides smooth data migration across platforms.

Understanding the dos and don’ts of NetApp monitoring can help you maximize its benefits. By selecting NetApp, IT professionals and decision-makers can leverage streamlined data management, improved performance, and flexible integration options that fit their organization’s unique needs.

What are NetApp’s key services?

NetApp offers several important services and products to help customers meet their data storage and management goals.

Ansible

Ansible is a platform for automating networking, servers, and storage. This configuration management system enables arduous manual tasks to become repeatable and less susceptible to mistakes. The biggest selling points are that it’s easy to use, reliable, and provides strong security.

CVO

CVO (Cloud Volumes ONTAP) is a type of storage delivering data management for block and file workloads. This advanced storage allows you to make the most of your cloud expenses while improving application performance. It also helps with compliance and data protection.

Dynamic Disc Pool

Dynamic Disc Pool technology (DDP) addresses the problem of RAID rebuild times and the potential increase in disk failure and reduced performance this may cause. DDP delivers prime storage solutions while maintaining performance. The technology can rebuild up to four times more quickly while featuring exceptional data protection. DDP allows you to group similar disks in a pool topology with faster rebuilds than RAID 5 or 6.

For more on monitoring disk performance and latency in NetApp environments, explore how LogicMonitor visualizes these metrics to optimize storage efficiency.

FAS

FAS (Fabric Attached Storage) is a unified storage platform in the cloud. FAS is one of the company’s core products. NetApp currently has six models of storage to choose from, allowing users to select the best model that meets their organization’s storage needs. These products consist of storage controllers with shelves made of hard disk enclosures. In some entry-level products, the storage controller contains the actual drives.

Flexpod

Flexpod is a type of architecture for network, server, and storage components. The components of a Flexpod consist of three layers: computing, networking, and storage. Flexpod allows users to select specific components, making it ideal for almost any type of business. Whether you’re looking for rack components or optimizing for artificial intelligence, Flexpod can help you put together the architecture your organization needs.

FlexCache

FlexCache offers remote simplified file distribution. It can also improve WAN usage with lower bandwidth costs and latency. You can distribute through multiple sites. FlexCache provides a more significant storage system ROI, improves the ability to handle workload increases, and limits remote access latency. It’s also easier to scale out storage performance with read-heavy applications. ONTAP Select running on 9.5 versions or later, FAS, and AFF support FlexCache.

OnCommand (OCI)

An OnCommand Insight Server (OCI) provides access to storage information and receives updates involving environment changes from acquisition units. The updates pass through a secure channel and then go to storage in the database. OCI can simplify virtual environments and manage complex private cloud systems. OCI allows analysis and management across networks, servers, and storage in both virtual and physical environments. It specifically enables cross-domain management.

OnCommand has two different Acquisition units. These are the Local Acquisition Unit (LAU), which you can install along with the OnCommand Insight Server, and the Remote Acquisition Unit (RAU). This one is optional. You can install it on a single remote server or several servers.

ONTAP

This is the operating system for hybrid cloud enhancement that helps with staffing, data security, and promoting future growth. New features for ONTAP include greater protection from ransomware, simplification for configuring security profiles, and more flexibility for accessing storage.

StorageGRID

If your organization has large data sets to store, StorageGrid is a solution that can help you manage the data cost-efficiently. StorageGrid offers storage and management for large amounts of unstructured data. You can reduce costs and optimize workflows when you place content in the correct storage tier. Some of the reviews for NetApp StorageGRID state that three of its best features are its valuable backup features, easy deployment, and cost-effectiveness.

Snapshot

Snapshots are designed to help with data protection but can be used for other purposes. NetApp snapshots are for backup and restoring purposes. When you have a snapshot backup, you save a specific moment-in-time image of the Unified database files in case your data is lost or the system fails. The Snapshot backup is periodically written on an ONTAP cluster. This way, you’ll have an updated copy.

Solidfire

Solidfire is one of NetApp’s many acquisitions, as it took over the company in January 2016. Solidfire uses the Element operating system for its arrays. This NetApp product provides all-flash storage solutions. SolidFire is not as successful as other products at NetApp; ONTAP, in particular, overshadows SolidFire. Some industry professionals may question how long SolidFire will continue as a NetApp product. So far, SolidFire is still a private cloud hardware platform.

Trident

Trident is an open-source project that can meet your container application demands. It utilizes Kubernetes clusters as pods. This offers exceptional storage services and allows containerized apps to consume storage from different sources. Trident provides full support as an open-source project and uses industry-standard interfaces. These interfaces include the Container Storage Interface.

NetApp’s integration with public cloud platforms

NetApp’s solutions are designed to support organizations working across hybrid and multi-cloud environments, offering seamless compatibility with major cloud providers like GCP and AWS. NetApp’s tools, including CVO and StorageGRID, enable efficient data management, transfer, and protection, ensuring that businesses can maintain control of their data infrastructure across platforms.

- CVO: CVO offers data management across cloud environments by combining NetApp’s ONTAP capabilities with public cloud infrastructure. This solution allows organizations to optimize cloud storage costs and manage data protection, compliance, and performance. For example, a company using CVO with GCP can streamline its data backup processes, reducing both costs and latency by storing frequently accessed data close to applications.

- StorageGRID: Designed for storing large amounts of unstructured data, StorageGRID enables multi-cloud data management with the flexibility to tier data across cloud and on-premises environments. By supporting object storage and efficient data retrieval, StorageGRID allows businesses to manage compliance and access requirements for big data applications. A healthcare organization, for example, could use StorageGRID to store and retrieve extensive medical records across both private and public cloud environments, ensuring secure and compliant access to data when needed.

With NetApp’s hybrid and multi-cloud capabilities, businesses can reduce the complexity of managing data across cloud platforms, optimize storage expenses, and maintain compliance, all while ensuring data accessibility and security across environments.

What are NetApp’s key terms?

To understand how NetApp works, it’s necessary to know some of its terminology and product selections. The following are some basic terms and products with brief definitions.

Aggregate

An aggregate is a collection of physical disks you can organize and configure to support various performance and security needs. According to NetApp, if your environment contains certain configurations, you’ll need to create aggregates manually. A few of these configurations include flash pool aggregates and MetroCluster configurations.

Cluster MTU

This feature enables you to configure MTU size by using an ONTAP Select multi-node cluster. An MTU is the maximum transmission unit size that specifies the jumbo frame size on 10 Gigabit interfaces as well as 1 Gigabit Ethernet. Using the ifconfig command, you can select the particular MTU size for transmission between a client and storage.

FlexVol Volume

FlexVol volumes are a type of volume that generally connects to each of its containing aggregates. Several FlexVol volumes can receive their storage sources from a single aggregate. Since these volumes are separate from the aggregates, you can dynamically change the size of each FlexVol volume without a disruption in the environment.

Initiator

An initiator is a port for connecting with a LUN. You can select an iSCSI hardware or software adapter or an FC. The ONTAP System Manager enables you to manage initiator groups. If you want to control which LIFs each initiator has access to, you can do this with portsets.

IOPS

IOPS measures how many Input/Output operations per second occur. You would generally use IOPS to measure your storage performance in units of bytes for read or write operations. You’ll sometimes need different IOP limits in various operations that are in the same application.

License Manager

This software component is part of the Deploy administration utility. This is an API you can use to update an IP address when the IP address changes. To generate a file, you need to use the License Lock ID (LLID) and the capacity pool license serial number.

LUN

LUNs are block-based storage objects that you can format in various ways. They work through the FC or iSCSI protocol. ONTAP System Manager is able to help you create LUNS if there is available free space. There are many ways you can use LUNs; for example, you might develop a LUN for a QTree, volume, or aggregate that you already have.

Multiple Cluster Systems

If you need an at-scale system for a growing organization, you’ll want to consider NetApp systems that have multiple clusters. A cluster consists of grouped nodes to create scalable clusters. This is done primarily to use the nodes more effectively and distribute the workload throughout the cluster. An advantage of having clusters is to provide continuous service for users even if an individual node goes offline.

ONTAP Select Cluster

You can create clusters with one, two, four, six, or even eight nodes. A cluster with only one node doesn’t produce any HA capability. Clusters with more than one node, however, will have at least one HA pair.

ONTAP Select Deploy

You can use this administration utility to deploy ONTAP Select clusters. The web user interface provides access to the Deploy utility. The REST API and CLI management shell also provide access.

Qtrees

Qtrees are file systems that are often subdirectories of a primary directory. You might want to use qtrees if you’re managing or configuring quotas. You can create them within volumes when you need smaller segments of each volume. Developing as many as 4,995 qtrees in each internal volume is possible. Internal volumes and qtrees have many similarities. Primary differences include that qtrees can’t support space guarantees or space reservations. Individual qtrees also can’t enable or disable snapshot copies. Clients will see the qtree as a directory when they access that particular volume.

Snapshot Copy

Snapshot copy is a read-only image that captures a moment-in-time of storage system volume. The technology behind ONTAP Snapshot enables the image to take up a minimum of storage space. Instead of copying data blocks, ONTAP creates Snapshot copies by referencing metadata. You can recover LUNS, contents of a volume, or individual files with a Snapshot copy.

SnapMirror

This replication software runs as a part of the Data ONTAP system. SnapMirror can replicate data from a qtree or a source volume. It’s essential to establish a connection between the source and the destination before copying data with SnapMirror. After creating a snapshot copy and copying it to the destination, the result is a read-only qtree or volume containing the same information as the source when it was last updated.

You will want to use SnapMirror in asynchronous, synchronous, or semi-synchronous mode. If at the qtree level, SnapMirror runs only in asynchronous mode. Before setting up a SnapMirror operation, you need a separate license and must enable the correct license on the destination and source systems.

Storage Pools

Storage pools are data containers with the ability to hide physical storage. Storage pools increase overall storage efficiency. The benefit is that you may need to buy fewer disks. The drawback is disk failure can have a ripple effect when several are members of the same storage pool.

System Manager

If you’re just beginning to use NetApp and need a basic, browser-based interface, you may want to consider the OnCommand System Manager. System Manager includes detailed tables, graphs, and charts for tracking past and current performance.

Discover the power of NetApp with LogicMonitor

NetApp provides valuable data and storage services to help your organization access and manage data throughout multi-cloud environments more efficiently. With various products and services, NetApp enables you to put together the data management and storage solutions that meet your organization’s needs.

As a trusted NetApp technology partner, LogicMonitor brings automated, insightful monitoring to your NetApp environment. Transition seamlessly from manual tracking to advanced automated monitoring and gain access to essential metrics like CPU usage, disk activity, and latency analysis—all without configuration work.

With LogicMonitor’s platform, your team can focus on strategic goals, while LogicMonitor ensures efficient and precise monitoring across your NetApp systems, including ONTAP.

Monitoring once provided straightforward insights into IT health: you collected data, identified metrics to monitor, and diagnosed issues as they arose. However, as IT infrastructure evolves with cloud, containerization, and distributed architectures, traditional monitoring can struggle to keep pace. Enter observability, a methodology that not only enhances visibility but also enables proactive issue detection and troubleshooting.

Is observability simply a buzzword, or does it represent a fundamental shift in IT operations? This article will explore the differences between monitoring and observability, their complementary roles, and why observability is essential for today’s IT teams.

In this blog, we’ll cover:

- What is monitoring?

- What is observability?

- Key differences between monitoring vs. observability

- How observability and monitoring work together

- Steps for transitioning from monitoring to full observability

What is monitoring?

Monitoring is the practice of systematically collecting and analyzing data from IT systems to detect and alert on performance issues or failures. Traditional monitoring tools rely on known metrics, such as CPU utilization or memory usage, often generating alerts when thresholds are breached. This data typically comes in the form of time-series metrics, providing a snapshot of system health based on predefined parameters.

Key characteristics of monitoring:

- Reactive by nature: Monitoring often triggers alerts after an issue has already impacted users.

- Threshold-based alerts: Notifications are generated when metrics exceed specified limits (e.g., high memory usage).

- Primary goal: To detect and alert on known issues to facilitate quick response.

An example of monitoring is a CPU utilization alert that may notify you that a server is under load, but without additional context, it cannot identify the root cause, which might reside elsewhere in a complex infrastructure.

What is observability?

Observability goes beyond monitoring by combining data analysis, machine learning, and advanced logging to understand complex system behaviors. Observability relies on the three core pillars—logs, metrics, and traces—to provide a holistic view of system performance, enabling teams to identify unknown issues, optimize performance, and prevent future disruptions.

Key characteristics of observability:

- Proactive approach: Observability enables teams to anticipate and prevent issues before they impact users.

- Unified data collection: Logs, metrics, and traces come together to offer deep insights into system behavior.

- Root cause analysis: Observability tools leverage machine learning to correlate data, helping identify causation rather than just symptoms.

An example of observability: In a microservices architecture, if response times slow down, observability can help pinpoint the exact microservice causing the issue, even if the problem originated from a dependency several layers deep.

For a deeper understanding of what observability entails, check out our article, What is O11y? Observability explained.

Key differences of monitoring vs. observability

Monitoring and observability complement each other, but their objectives differ. Monitoring tracks known events to ensure systems meet predefined standards, while observability analyzes outputs to infer system health and preemptively address unknown issues.

| Aspect | Monitoring | Observability |

| Purpose | To detect known issues | To gain insight into unknown issues and root causes |

| Data focus | Time-series metrics | Logs, metrics, traces |

| Approach | Reactive | Proactive |

| Problem scope | Identifies symptoms | Diagnoses causes |

| Example use case | Alerting on high CPU usage | Tracing slow requests across microservices |

Monitoring vs. observability vs. telemetry vs. APM

Monitoring and observability are not interchangeable terms, but they do work together to achieve a common goal. Monitoring is an important aspect of an observability workflow, as it allows us to track the state of our systems and services actively. However, monitoring alone cannot provide the complete picture that observability offers.

Observability encompasses both monitoring and telemetry as it relies on these components to gather data and analyze it for insights into system behavior. Telemetry provides the raw data that feeds into the analysis process, while monitoring ensures that we are constantly collecting this data and staying informed about any changes or issues in our systems. Without telemetry and monitoring, observability cannot exist.

Application Performance Monitoring (APM) tools give developers and operations teams real-time insights into application performance, enabling quick identification and troubleshooting of issues. Unlike traditional monitoring, APM offers deeper visibility into application code and dependencies.

How monitoring and observability work together

Monitoring and observability are complementary forces that, when used together, create a complete ecosystem for managing and optimizing IT systems. Here’s a step-by-step breakdown of how these two functions interact in real-world scenarios to maintain system health and enhance response capabilities.

Monitoring sets the foundation by tracking known metrics

Monitoring provides the essential baseline data that observability builds upon. Continuously tracking known metrics ensures that teams are alerted to any deviations from expected performance.

- Example: Monitoring tools track key indicators like CPU usage, memory consumption, and response times. When any of these metrics exceed set thresholds, an alert is generated. This serves as the initial signal to IT teams that something may be wrong.

Observability enhances monitoring alerts with contextual depth

Once monitoring generates an alert, observability tools step in to provide the necessary context. Instead of simply reporting that a threshold has been breached, observability digs into the incident’s details, using logs, traces, and correlations across multiple data sources to uncover why the alert occurred.

- Example: If monitoring triggers an alert due to high response times on a specific service, observability traces can reveal dependencies and interactions with other services that could be contributing factors. Analyzing these dependencies helps identify whether the latency is due to a database bottleneck, network congestion, or another underlying service.

Correlating data across monitoring and observability layers for faster troubleshooting

Monitoring data, though essential, often lacks the detailed, correlated insights needed to troubleshoot complex, multi-service issues. Observability integrates data from various layers—such as application logs, user transactions, and infrastructure metrics—to correlate events and determine the root cause more quickly.

- Example: Suppose an e-commerce application shows a spike in checkout failures. Monitoring flags this with an error alert, but observability allows teams to correlate the error with recent deployments, configuration changes, or specific microservices involved in the checkout process. This correlation can show, for instance, that the issue started right after a specific deployment, guiding the team to focus on potential bugs in that release.

Machine learning amplifies alert accuracy and reduces noise

Monitoring generates numerous alerts, some of which are not critical or might even be false positives. Observability platforms, particularly those equipped with machine learning (ML), analyze historical data to improve alert quality and suppress noise by dynamically adjusting thresholds and identifying true anomalies.

- Example: If monitoring detects a temporary spike in CPU usage, ML within the observability platform can recognize it as an expected transient increase based on past behavior, suppressing the alert. Conversely, if it identifies an unusual pattern (e.g., sustained CPU usage across services), it escalates the issue. This filtering reduces noise and ensures that only critical alerts reach IT teams.

Observability enhances monitoring’s proactive capabilities

While monitoring is inherently reactive—alerting when something crosses a threshold—observability takes a proactive stance by identifying patterns and trends that could lead to issues in the future. Observability platforms with predictive analytics use monitoring data to anticipate problems before they fully manifest.

- Example: Observability can predict resource exhaustion in a specific server by analyzing monitoring data on memory usage trends. If it detects a steady increase in memory use over time, it can alert teams before the server reaches full capacity, allowing preventive action.

Unified dashboards combine monitoring alerts with observability insights

Effective incident response requires visibility into both real-time monitoring alerts and in-depth observability insights, often through a unified dashboard. By centralizing these data points, IT teams have a single source of truth that enables quicker and more coordinated responses.

- Example: In a single-pane-of-glass dashboard, monitoring data flags a service outage, while observability insights provide detailed logs, traces, and metrics across affected services. This unified view allows the team to investigate the outage’s impact across the entire system, reducing the time to diagnosis and response.

Feedback loops between monitoring and observability for continuous improvement

As observability uncovers new failure modes and root causes, these insights can refine monitoring configurations, creating a continuous feedback loop. Observability-driven insights lead to the creation of new monitoring rules and thresholds, ensuring that future incidents are detected more accurately and earlier.

- Example: During troubleshooting, observability may reveal that a certain pattern of log events signals an impending memory leak. Setting up new monitoring alerts based on these log patterns can proactively alert teams before a memory leak becomes critical, enhancing resilience.

Key outcomes of the monitoring-observability synergy

Monitoring and observability deliver a comprehensive approach to system health, resulting in:

- Faster issue resolution: Monitoring alerts IT teams to problems instantly, while observability accelerates root cause analysis by providing context and correlations.

- Enhanced resilience: Observability-driven insights refine monitoring rules, leading to more accurate and proactive alerting, which keeps systems stable under increasing complexity.

- Operational efficiency: Unified dashboards streamline workflows, allowing teams to respond efficiently, reduce mean time to resolution (MTTR), and minimize service disruptions.

In short, monitoring and observability create a powerful synergy that supports both reactive troubleshooting and proactive optimization, enabling IT teams to stay ahead of potential issues while maintaining high levels of system performance and reliability.

Steps for transitioning from monitoring to observability

Transitioning from traditional monitoring to a full observability strategy requires not only new tools but also a shift in mindset and practices. Here’s a step-by-step guide to help your team make a seamless, impactful transition:

1. Begin with a comprehensive monitoring foundation

Monitoring provides the essential data foundation that observability needs to deliver insights. Without stable monitoring, observability can’t achieve its full potential.

Set up centralized monitoring to cover all environments—on-premises, cloud, and hybrid. Ensure coverage of all critical metrics such as CPU, memory, disk usage, and network latency across all your systems and applications. For hybrid environments, it’s particularly important to use a monitoring tool that can handle disparate data sources, including both virtual and physical assets.

Pro tip:

Invest time in configuring detailed alert thresholds and suppressing false positives to minimize alert fatigue. Initial monitoring accuracy reduces noise and creates a solid base for observability to build on.

2. Leverage log aggregation to gain granular visibility

Observability relies on an in-depth view of what’s happening across services, and logs are critical for this purpose. Aggregated logs allow teams to correlate patterns across systems, leading to faster root cause identification.

Choose a log aggregation solution that can handle large volumes of log data from diverse sources. This solution should support real-time indexing and allow for flexible querying. Look for tools that offer structured and unstructured log handling so that you can gain actionable insights without manual log parsing.

Pro tip:

In complex environments, logging everything indiscriminately can quickly lead to overwhelming amounts of data. Implement dynamic logging levels—logging more detail temporarily only when issues are suspected, then scaling back once the system is stable. This keeps log data manageable while still supporting deep dives when needed.

3. Add tracing to connect metrics and logs for a complete picture

In distributed environments, tracing connects the dots across services, helping to identify and understand dependencies and causations. Tracing shows the journey of requests, revealing delays and bottlenecks across microservices and third-party integrations.

Adopt a tracing framework that’s compatible with your existing architecture, such as OpenTelemetry, which integrates with many observability platforms and is widely supported. Configure traces to follow requests across services, capturing data on latency, error rates, and processing times at each stage.

Pro tip:

Start with tracing critical user journeys—like checkout flows or key API requests. These flows often correlate directly with business metrics and customer satisfaction, making it easier to demonstrate the value of observability to stakeholders. As you gain confidence, expand tracing coverage to additional services.

4. Introduce machine learning and AIOps for enhanced anomaly detection

Traditional monitoring relies on static thresholds, which can lead to either missed incidents or alert fatigue. Machine learning (ML) in observability tools dynamically adjusts these thresholds, identifying anomalies that static rules might overlook.

Deploy an AIOps (Artificial Intelligence for IT Operations) platform that uses ML to detect patterns across logs, metrics, and traces. These systems continuously analyze historical data, making it easier to spot deviations that indicate emerging issues.

Pro tip:

While ML can be powerful, it’s not a one-size-fits-all solution. Initially, calibrate the AIOps platform with supervised learning by identifying normal versus abnormal patterns based on historical data. Use these insights to tailor ML models that suit your specific environment. Over time, the system can adapt to handle seasonality and load changes, refining anomaly detection accuracy.

5. Establish a single pane of glass for unified monitoring and observability

Managing multiple dashboards is inefficient and increases response time in incidents. A single pane of glass consolidates monitoring and observability data, making it easier to identify issues holistically and in real-time.

Choose a unified observability platform that integrates telemetry (logs, metrics, and traces) from diverse systems, cloud providers, and applications. Ideally, this platform should support both real-time analytics and historical data review, allowing teams to investigate past incidents in detail.

Pro tip:

In practice, aim to customize the single-pane dashboard for different roles. For example, give SREs deep trace and log visibility, while providing executive summaries of system health to leadership. This not only aids operational efficiency but also allows stakeholders at every level to see observability’s value in action.

6. Optimize incident response with automated workflows

Observability is only valuable if it shortens response times and drives faster resolution. Automated workflows integrate observability insights with incident response processes, ensuring that the right people are alerted to relevant, contextualized data.

Configure incident response workflows that trigger automatically when observability tools detect anomalies or critical incidents. Integrate these workflows with collaboration platforms like Slack, Teams, or PagerDuty to notify relevant teams instantly.

Pro tip:

Take the time to set up intelligent incident triage. Route different types of incidents to specialized teams (e.g., network, application, or database), each with their own protocols. This specialization makes incident handling more efficient and prevents delays that could arise from cross-team handoffs.

7. Create a feedback loop to improve monitoring with observability insights

Observability can reveal recurring issues or latent risks, which can then inform monitoring improvements. By continually refining monitoring based on observability data, IT teams can better anticipate issues, enhancing the reliability and resilience of their systems.

Regularly review observability insights to identify any new patterns or potential points of failure. Set up recurring retrospectives where observability data from recent incidents is analyzed, and monitoring configurations are adjusted based on lessons learned.

Pro tip:

Establish a formal feedback loop where observability engineers and monitoring admins collaborate monthly to review insights and refine monitoring rules. Observability can identify previously unknown thresholds that monitoring tools can then proactively track, reducing future incidents.

8. Communicate observability’s impact on business outcomes

Demonstrating the tangible value of observability is essential for maintaining stakeholder buy-in and ensuring continued investment.

Track key performance indicators (KPIs) such as MTTR, incident frequency, and system uptime, and correlate these metrics with observability efforts. Share these results with stakeholders to highlight how observability reduces operational costs, improves user experience, and drives revenue.

Pro tip:

Translating observability’s technical metrics into business terms is crucial. For example, if observability helped prevent an outage, quantify the potential revenue saved based on your system’s downtime cost per hour. By linking observability to bottom-line metrics, you reinforce its value beyond IT.

Embrace the power of observability and monitoring

Observability is not just an extension of monitoring—it’s a fundamental shift in how IT teams operate. While monitoring is essential for tracking known issues and providing visibility, observability provides a deeper, proactive approach to system diagnostics, enabling teams to innovate while minimizing downtime.

To fully realize the benefits of observability, it’s important to combine both monitoring and observability tools into a cohesive, holistic approach. By doing so, businesses can ensure that their systems are not only operational but also resilient and adaptable in an ever-evolving digital landscape.

Logging is critical for gaining valuable application insights, such as performance inefficiencies and architectural structure. But creating reliable, flexible, and lightweight logging solutions isn’t the easiest task—which is where Docker helps.

Docker containers are a great way to create lightweight, portable, and self-contained application environments. They also give IT teams a way to create impermanent and portable logging solutions that can run on any environment. Because logging is such a crucial aspect of performance, Docker dual logging is more beneficial in complex, multi-container setups that depend on reliable log management for troubleshooting and auditing.

Docker dual logging allows the capture of container logs in two separate locations at the same time. This approach ensures log redundancy, improved compliance, and enhanced operating system (like Windows, Linux, etc.) observability by maintaining consistent log data across distributed environments.

This guide covers the essentials of Docker logging, focusing on implementing Docker dual logging functionality to optimize your infrastructure.

What is a Docker container?

A Docker container is a standard unit of software that wraps up code and all its dependencies so the program can be moved from one environment to another, quickly and reliably.

Containerized software, available for Linux and Windows-based applications, will always run the same way despite the infrastructure.

Containers encapsulate software from its environment, ensuring that it performs consistently despite variations between environments — for example, development and staging.

Docker container technology was introduced as an open-source Docker Engine in 2013.

What is a Docker image?

A Docker image is a lightweight, standalone, executable software package that contains everything required to run an application: code, system tools, system libraries, and settings.

In other words, an image is a read-only template with instructions for constructing a container that can operate on the Docker platform. It provides an easy method to package up programs and preset server environments that you can use privately or openly with other Docker users.

What is Docker logging?

Docker logging refers to the process of capturing and managing logs generated by containerized applications. Logs provide critical insights into system behavior, helping you troubleshoot issues, monitor performance, and ensure overall application log health.

Combined with monitoring solutions, you can maintain complete visibility into your containerized environments, helping you solve problems faster and ensure reliability. Using other data insights, you can examine historical data to find trends and anticipate potential problems.

Docker container logs

What are container logs?

Docker container logs, in a nutshell, are the console output of running containers. They specifically supply the stdout and stderr streams running within a container.

As previously stated, Docker logging is not the same as logging elsewhere. Everything that is written to the stdout and stderr streams in Docker is implicitly forwarded to a driver, allowing accessing and writing logs to a file.

Logs can also be viewed in the console. The Docker logs command displays information sent by a currently executing container. The docker service logs command displays information by all containers members of the service.

What is a Docker logging driver?

The Docker logging drivers gather data from containers and make it accessible for analysis.

If no additional log-driver option is supplied when a container is launched, Docker will use the json-file driver by default. A few important notes on this:

- Log-rotation is not performed by default. As a result, log files kept using the json-file logging driver can consume a significant amount of disk space for containers that produce a large output, potentially leading to disk space depletion.

- Docker preserves the json-file logging driver — without log-rotation — as the default to maintain backward compatibility with older Docker versions and for instances when Docker is used as a Kubernetes runtime.

- The local driver is preferable because it automatically rotates logs and utilizes a more efficient file format.

Docker also includes logging drivers for sending logs to various services — for example, a logging service, a log shipper, or a log analysis platform. There are many different Docker logging drivers available. Some examples are listed below:

- syslog — A long-standing and widely used standard for logging applications and infrastructure.

- journald — A structured alternative to Syslog’s unstructured output.

- fluentd — An open-source data collector for unified logging layer.

- awslogs — AWS CloudWatch logging driver. If you host your apps on AWS, this is a fantastic choice.

You do, however, have several alternative logging driver options, which you can find in the Docker logging docs.

Docker also allows logging driver plugins, enabling you to write your Docker logging drivers and make them available over Docker Hub. At the same time, you can use any plugins accessible on Docker Hub.

Logging driver configuration

To configure a Docker logging driver as the default for all containers, you can set the value of the log-driver to the name of the logging driver in the daemon.json configuration file.

This example sets the default logging driver to the local driver:

{

“log-driver”: “local”

}Another option is configuring a driver on a container-by-container basis. When you initialize a container, you can use the –log-driver flag to specify a different logging driver than the Docker daemon’s default.

The code below starts an Alpine container with the local Docker logging driver:

docker run -it –log-driver local alpine ashThe docker info command will provide you with the current default logging driver for the Docker daemon.

Docker Logs With Remote Logging Drivers

Previously, the Docker logs command could only be used with logging drivers that supported containers utilizing the local, json-file, or journald logging drivers. However, many third-party Docker logging drivers did not enable reading logs from Docker logs locally.

When attempting to collect log data automatically and consistently, this caused a slew of issues. Log information could only be accessed and displayed in the format required by the third-party solution.

Starting with Docker Engine 20.10, you can use docker logs to read container logs independent of the logging driver or plugin that is enabled.

Dual logging requires no configuration changes. Docker Engine 20.10 later allows double logging by default if the chosen Docker logging driver does not support reading logs.

Where are Docker logs stored?

Docker keeps container logs in its default place, /var/lib/docker/. Each container has a log that is unique to its ID (the full ID, not the shorter one that is generally presented), and you may access it as follows:

/var/lib/docker/containers/ID/ID-json.log

docker run -it –log-driver local alpine ashWhat are the Docker logging delivery modes?

Docker logging delivery modes refer to how the container balances or prioritizes logging against other tasks. The available Docker logging delivery modes are blocking and non-blocking. Both the options can be applied regardless of what Docker logging driver you selected.

Blocking mode

When in blocking mode, the program will be interrupted whenever a message needs to be delivered to the driver.

The advantage of the blocking mode is that all logs are forwarded to the logging driver, even though there may be a lag in your application’s performance. In this sense, this mode prioritizes logging against performance.

Depending on the Docker logging driver you choose, your application’s latency may vary. For example, the json-file driver, which writes to the local filesystem, produces logs rapidly and is unlikely to block or create a significant delay.

On the contrary, Docker logging drivers requiring the container to connect to a remote location may block it for extended periods, resulting in increased latency.

Docker’s default mode is blocking.

When to use the blocking mode?

The json-file logging driver in blocking mode is recommended for most use situations. As mentioned before, the driver is quick since it writes to a local file. Therefore it’s generally safe to use it in a blocking way.

The blocking mode should also be used for memory-hungry programs requiring the bulk of the RAM available to your containers. The reason is that if the driver cannot deliver logs to its endpoint due to a problem such as a network issue, there may not be enough memory available for the buffer if it’s in non-blocking mode.

Non-blocking

The non-blocking Docker logging delivery mode will not prevent the program from running to provide logs. Instead of waiting for logs to be sent to their destination, the container will store logs in a buffer in its memory.

Though the non-blocking Docker logging delivery mode appears to be the preferable option, it also introduces the possibility of some log entries being lost. Because the memory buffer in which the logs are saved has a limited capacity, it might fill up.

Furthermore, if a container breaks, logs may be lost before being released from the buffer.

You may override Docker’s default blocking mode for new containers by adding an log-opts item to the daemon.json file. The max-buffer-size, which refers to the memory buffer capacity mentioned above, may also be changed from the 1 MB default.

{

“log-driver”: “local”,

“log-opts”: {

“mode”: “non-blocking”

}

}Also, you can provide log-opts on a single container. The following example creates an Alpine container with non-blocking log output and a 4 MB buffer:

docker run -it –log-opt mode=non-blocking –log-opt max-buffer-size=4m alpineWhen to use non-blocking mode?

Consider using the json-file driver in the non-blocking mode if your application has a big I/O demand and generates a significant number of logs.

Because writing logs locally is rapid, the buffer is unlikely to fill quickly. If your program does not create spikes in logging, this configuration should handle all of your logs without interfering with performance.

For applications where performance is more a priority than logging but cannot use the local file system for logs — such as mission-critical applications — you can provide enough RAM for a reliable buffer and use the non-blocking mode. This setting should ensure the performance is not hampered by logging, yet the container should still handle most log data.

Why Docker logging is different from traditional logging

Logging in containerized environments like Docker is more complex than in traditional systems due to the temporary and distributed nature of containers. Docker containers generate multiple log streams, often in different formats, making standard log analysis tools less effective and debugging more challenging compared to single, self-contained applications.

Two key characteristics of Docker containers contribute to this complexity:

- Temporary containers: Docker containers are designed to be short-lived, meaning they can be stopped or destroyed at any time. When this happens, any logs stored within the container are lost. To prevent data loss, it’s crucial to use a log aggregator that collects and stores logs in a permanent, centralized location. You may use a centralized logging solution to aggregate log data and use data volumes to store persistent data on host devices.

- Multiple logging layers: Docker logging involves log entries from individual containers and the host system. Managing these multi-level logs requires specialized tools that can gather and analyze data from all levels and logging formats effectively, ensuring no critical information is missed. Containers may also generate large volumes of log data, which means traditional log analysis tools may struggle with the sheer amount of data.

Understanding Docker dual logging

Docker dual logging involves sending logs to two different locations simultaneously. This approach ensures that log data is redundantly stored, reducing the risk of data loss and providing multiple sources for analysis. Dual logging is particularly valuable in environments where compliance and uptime are critical.

Benefits of Docker dual logging

- Redundancy: Dual logging ensures that log messages are preserved even if one logging system fails and logging continues in case of service failure.

- Enhanced troubleshooting: With logs available in two places, cross-referencing data leads to diagnosing issues more effectively.

- Compliance: For industries with strict data retention and auditing requirements, dual logging helps meet these obligations by providing reliable log storage across multiple systems.

Docker dual logging in action

Docker dual logging is widely implemented in various industries to improve compliance, security, and system reliability. By implementing Docker dual logging, you can safeguard data, meet regulatory demands, and optimize your infrastructure. Below are some real-world examples of how organizations benefit from dual logging:

- E-commerce compliance: A global e-commerce company uses dual logging to meet data retention laws by storing log files both locally and in the cloud, ensuring regulatory compliance (such as GDPR and CCPA) and audit readiness.

- Financial institution security: A financial firm uses dual logging to enhance security by routing logs to secure on-premise and cloud systems, quickly detecting suspicious activities, aiding forensic analysis, and minimizing data loss.

- SaaS uptime and reliability: A SaaS provider leverages dual logging to monitor logs across local and remote sites, minimizing downtime by resolving issues faster and debugging across distributed systems to ensure high service availability.

How to implement Docker dual logging

Implementing dual logging in the Docker engine involves configuring containers to use multiple logging drivers. For example, logs can be routed to both a local JSON file and a remote logging service like AWS CloudWatch. Here’s a simple configuration file example:

bash

copy code

docker run -d \

--log-driver=json-file \

--log-driver=fluentd \

--log-opt fluentd-address=localhost:24224 \

your-container-imageThe specific logging driver and other settings will vary based on your specific configuration. Look at your organization’s infrastructure to determine the driver names and the address of the logging server.

This setup ensures that logs are stored locally while also being sent to a centralized log management service. If you’re using Kubernetes to manage and monitor public cloud environments, you can benefit from the LogicMonitor Collector for better cloud monitoring.

Docker Daemon Logs

What are Daemon logs?

The Docker platform generates and stores logs for its daemons. Depending on the host operating system, daemon logs are written to the system’s logging service or a log file.

If you only collected container logs, you would gain insight into the state of your services. On the other hand, you need to be informed of the state of your entire Docker platform, and the daemon logs exist for that reason as they provide an overview of your whole microservices architecture.

Assume a container shuts down unexpectedly. Because the container terminates before any log events can be captured, we cannot pinpoint the underlying cause using the docker logs command or an application-based logging framework.

Instead, we may filter the daemon log for events that contain the container name or ID and sort by timestamp, which allows us to establish a chronology of the container’s life from its origin through its destruction.

The daemon log also contains helpful information about the host’s status. If the host kernel does not support a specific functionality or the host setup is suboptimal, the Docker daemon will note it during the initialization process.

Depending on the operating system settings and the Docker logging subsystem utilized, the logs may be kept in one of many locations. In Linux, you can look at the journalctl records:

sudo journalctl -xu docker.serviceAnalyzing Docker logs

Log data must be evaluated before it can be used. When you analyze log data, you’re hunting for a needle in a haystack.

You’re typically hunting for that one line with an error among thousands of lines of regular log entries. A solid analysis platform is required to determine the actual value of logs. Log collecting and analysis tools are critical. Here are some of the options.

Fluentd

Fluentd is a popular open-source solution for logging your complete stack, including non-Docker services. It’s a data collector that allows you to integrate data gathering and consumption for improved data utilization and comprehension.

ELK

ELK is the most widely used open-source log data analysis solution. It’s a set of tools:ElasticSearch for storing log data, Logstash for processing log data, and Kibana for displaying data via a graphical user interface.

ELK is an excellent solution for Docker log analysis since it provides a solid platform maintained by a big developer community and is free.

Advanced log analysis tools

With open-source alternatives, you must build up and manage your stack independently, which entails allocating the necessary resources and ensuring that your tools are highly accessible and housed on scalable infrastructure. It can necessitate a significant amount of IT resources as well.

That’s where more advanced log analysis platforms offer tremendous advantages. For example, tools like LogicMonitor’s SaaS platform for log intelligence and aggregation can give teams quick access to contextualized and connected logs and metrics in a single, unified cloud-based platform.

These sophisticated technologies leverage the power of machine learning to enable companies to reduce troubleshooting, streamline IT operations, and increase control while lowering risk.

Best practices for Docker dual logging

Docker dual logging offers many benefits. But to get the most out of it, you’ll need to implement best practices to build a reliable logging environment. Use the best practices below to get started.

- Monitor log performance: Regularly check the performance impact of dual logging on containers by gathering metrics like CPU usage and network bandwidth, and adjust configurations as necessary.

- Ensure log security: Use encryption and secure access controls when transmitting logs to remote locations, and verify your controls comply with regulations.

- Automate log management: implement automated processes to manage, review, and archive logs from devices ingesting logs to prevent storage issues.

Analyzing Docker logs in a dual logging setup

When logs are stored in two places, analyzing them becomes more complicated. Using log aggregation tools like Fluentd or ELK to collect and analyze logs from both sources provides a comprehensive view of a system’s behavior. This dual approach can significantly increase the ability to detect and resolve your issues quickly.

Overview of Docker logging drivers

Docker supports various logging drivers, each suited to different use cases. Drivers can be mixed and matched when implementing dual logging to achieve the best results for whole environments. Common drivers include:

- json-file: Stores logs in a JSON format on the local filesystem

- Fluentd: Sends logs to a Fluentd service, ideal for centralized logging

- awslogs: Directs logs to AWS CloudWatch, suitable for cloud-based monitoring

- gelf: Sends logs to in Graylog Extended Log Format for GrayWatch and Logstash endpoints

Tools and integration for Docker dual logging

To fully leverage Docker dual logging, integrating with powerful log management tools is essential. These popular tools enhance Docker dual logging by providing advanced features for log aggregation, analysis, and visualization.

- ELK Stack: An open-source solution comprising Elasticsearch, Logstash, and Kibana, ideal for collecting, searching, and visualizing log data.

- Splunk: A platform offering comprehensive log analysis and real-time monitoring capabilities suitable for large-scale environments.

- Graylog: A flexible, open-source log management tool that allows centralized logging and supports various data sources.

Conclusion

Docker dual logging is a powerful strategy for ensuring reliable, redundant log management in containerized environments. Implementing dual logging enhances your system’s resilience, improves troubleshooting capabilities, and meets compliance requirements with greater ease. As containerized applications continue to grow in complexity and scale, implementing dual logging will be critical for maintaining efficient infrastructures.

Familiarity with key abbreviations for incident management KPIs (Key Performance Indicators) is essential for effective performance analysis. In this article, we’ll explore calculating metrics like MTTR and MTBF, compare different metrics, and consider the role of software tools, such as CMMS and EAM systems, in managing and improving metrics like MTBF and MTTR.

Definitions of reliability metrics

What is MTTF?

MTTF stands for mean time to failure. It is the average lifespan of a given device. The mean time to failure is calculated by adding up the lifespans of all the devices and dividing it by their count.

MTTF = total lifespan across devices / # of devices

MTTF is specific to non-repairable devices, like a spinning disk drive; the manufacturer would talk about its lifespan in terms of MTTF.

For example, consider three dead drives pulled out of a storage array. S.M.A.R.T. indicates that they lasted for 2.1, 2.7, and 2.3 years, respectively.

(2.1 + 2.7 + 2.3) / 3 = ~2.37 years MTTF

We should probably buy some different drives in the future.

MTTF alternatively stands for mean time to fix, but it seems that “failure” is the more common meaning.

Related:

What is MTBF?

MTBF stands for the mean time between failures. MTBF is used to identify the average time between failures of something that can be repaired.

The mean time between failures is calculated by adding up all the lifespans of devices and dividing by the number of failures:

MTBF = total lifespan across devices / # of failures

The total lifespan does not include the time it takes to repair the device after a failure.

An example of MTBF would be how long, on average, an operating system stays up between random crashes.

Related:

What is MTTR?

MTTR stands for mean time to repair, mean time to recovery, mean time to resolution, mean time to resolve, mean time to restore, or mean time to respond. Mean time to repair and mean time to recovery seem to be the most common.

The mean time to repair (and restore) is the average time it takes to repair a system once the failure is discovered. It is calculated by adding the total time spent repairing and dividing that by the number of repairs.

MTTR (repair) = total time spent repairing / # of repairs

For example, let’s say three drives we pulled out of an array, two of which took 5 minutes to walk over and swap out a drive. The third one took 6 minutes because the drive sled was a bit jammed. So:

(5 + 5 + 6) / 3 = 5.3 minutes MTTR

The mean time to repair assumes that the failed system is capable of restoration and does not require replacement. It is synonymous with the mean time to fix.

Mean time to recovery, resolution, and resolve is the time it takes from when something goes down to when it is back and at full functionality. This includes everything from finding the problem, fixing it, and using technology (like CMMS and EAM systems) to analyze historical data and current assets to develop your maintenance strategy. In DevOps and ITOps, keeping MTTR to an absolute minimum is crucial.

MTTR (recovery) = total time spent discovery & repairing / # of repairs