Microservices are the future of software development. This approach serves as a server-side solution to development where services remain connected but work independently. More developers are using microservices to improve performance, precision, and productivity, and analytical tools provide them with valuable insights about performance and service levels.

The argument for microservices is getting louder: Different teams can work on services without affecting overall workflows, something that’s not possible with other architectural styles. In this guide, we’ll take a deep dive into microservices by learning what they are, what they do, and how they benefit your team.

What are microservices?

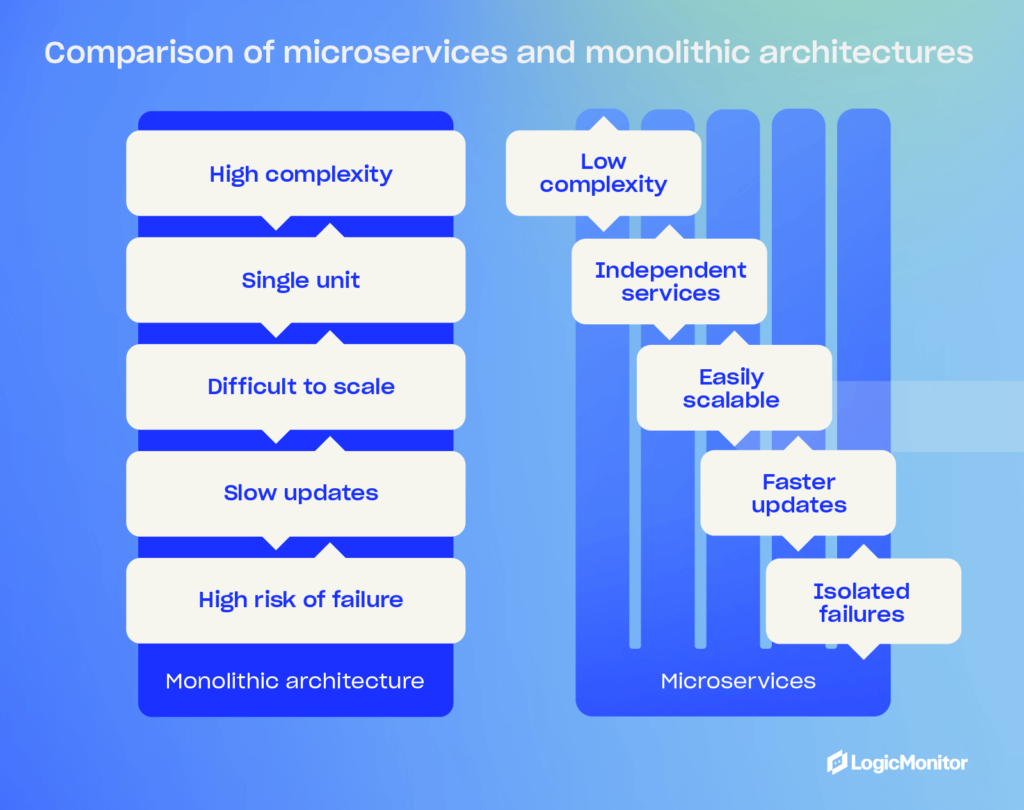

In software development, microservices are an architectural style that structures applications as a collection of loosely connected services. This approach makes it easier for developers to build and scale apps. Microservices differ from the conventional monolithic style, which treats software development as a single unit.

The microservices method breaks down software development into smaller, independent “chunks,” where each chunk executes a particular service or function. Microservices utilize integration, API management, and cloud deployment technologies.

The need for microservices has come out of necessity. As apps become larger and more complicated, developers need a novel approach to development—one that lets them quickly expand apps as user needs and requirements grow.

Did you know that more than 85 percent of organizations with at least 2,000 employees have adopted microservices since 2021?

Why use microservices?

Microservices bring multiple advantages to teams like yours:

Scalability

Microservices are much easier to scale than the monolithic method. Developers can scale specific services rather than an app as a whole and execute bespoke tasks and requests together with greater efficiency. There’s less work involved because developers concentrate on individual services rather than the whole app.

Faster development

Microservices lead to faster development cycles because developers concentrate on specific services that require deployment or debugging. Speedier development cycles positively impact projects, and developers can get products to market quicker.

Improved data security

Microservices communicate with one another through secure APIs, which might provide development teams with better data security than the monolithic method. Because teams work somewhat in silos (though microservices always remain connected), there’s more accountability for data security because developers handle specific services. As data safety becomes a greater concern in software development, microservices could provide developers with a reliable security solution.

Better data governance

Just like with data security, where teams handle specific services rather than the entire app, microservices allow for greater accountability when complying with data governance frameworks like GDPR and HIPAA. The monolithic method takes more of a holistic approach to data governance, which can cause problems for some teams. With microservices, there’s a more specific approach that benefits compliance workflows.

Multiple languages and technologies

Because teams work somewhat independently of each other, microservices allow different developers to use different programming languages and technologies without affecting the overall architectural structure of software development. For example, one developer might use Java to code specific app features, while another might use Python. This flexibility results in teams that are programming and technology “agnostic.”

For example, see how we scaled a stateful microservice using Redis.

Did you know 76 percent of organizations believe microservices fulfill a crucial business agenda?

Microservices architecture

Microservice architecture sounds a lot more complicated than it is. In simple terms, the architecture comprises small independent services that work closely together but ultimately fulfill a specific purpose. These services solve various software development problems through unique processes.

A good comparison is a football team, where all players share the same objective: To beat the other team. However, each player has an individual role to play, and they fulfill it without impacting any of the other players. Take a quarterback, for example, who calls the play in the huddle. If the quarterback performs poorly during a game, this performance shouldn’t affect the other team members. The quarterback is independent of the rest of the players but remains part of the team.

Unlike monolithic architectures, where every component is interdependent, microservices allow each service to be developed, deployed, and scaled independently.

Did you know the cloud microservices market was worth 1.63 billion in 2024?

Microservices vs. monolithic architectures

When you’re considering a microservices architecture, you’ll find that they offer a lot of benefits compared to a traditional monolithic architecture approach. They will allow your team to build agile, resilient, and flexible software. On the other hand, monolithic software is inherently complex and less flexible—something it pays to avoid in today’s world of increasingly complex software.

So, let’s look at why businesses like yours should embrace microservices, and examine a few challenges to look out for.

Microservices architecture advantages

- Agility and speed: By breaking your applications into smaller, independent services, you can speed up development cycles by allowing your teams to work on various services simultaneously, helping them perform quicker updates and release features faster.

- Resilience: Service independence and isolation improve your software’s overall stability since a service going offline is less likely to disrupt other services, meaning your application continues running while you troubleshoot individual service failures.

- Flexibility: Your team can use different technologies and frameworks for different services, allowing them to choose the best tools for specific tasks instead of sticking with a few specific technologies that may not be the right choice.

Monolithic architecture disadvantages

- Complexity and risk: When you build applications as single, cohesive units, your team needs to modify existing codes across several layers, including databases, front-ends, and back-ends—even when you only require a simple change. The process is time-consuming and risky, which adds stress to your team if they need to make regular changes, and a single change can impact entire systems.

- High dependency: When your application is made of highly dependent components, isolating services is more challenging. Changes to one part of a system can lead to unintended consequences across your entire application, resulting in downtime, impacted sales, and negative customer experience.

- Cost and inflexibility: Making changes to monolithic applications requires coordination and extensive testing across your teams, which means you’ll see a slower development process, potentially hindering your ability to respond quickly to problems and market demands.

Microservices in the cloud (AWS and Azure)

Perhaps the cloud is the most critical component of the microservices architecture. Developers use Docker containers for packaging and deploying microservices in private and hybrid cloud environments (more on this later.) Microservices and cloud environments are a match made in technological heaven, facilitating quick scalability and speed-to-market. Here are some benefits:

- Microservices run on different servers, but developers can access them from one cloud location.

- Developers make back-end changes to microservices via the cloud without affecting other microservices. If one microservice fails, the entire app remains unaffected.

- Developers create and scale microservices from any location in the world.

Various platforms automate many of the processes associated with microservices in the cloud. However, there are two developers should consider:

Once up and running, these systems require little human intervention from developers unless debugging problems occur.

AWS

Amazon pioneered microservices with service-based architecture many years ago. Now its AWS platform, available to developers worldwide, takes cloud microservices to the next level. Using this system, developers can break down monolithic architecture into individual microservices via three patterns: API-driven, event-driven, and data streaming. The process is much quicker than doing it manually, and development teams can create highly scalable applications for clients.

Azure

Azure is another cloud-based system that makes microservices easier. Developers use patterns like circuit breaking to improve reliability and security for individual services rather than tinkering with the whole app.

Azure lets you create APIs for microservices for both internal and external consumption. Other benefits include authentication, throttling, monitoring, and caching management. Like AWS, Azure is an essential tool for teams that want to improve agile software development.

Did you know the global cloud microservices market is expected to grow from USD 1.84 billion in 2024 to USD 8.33 billion by 2032, with a CAGR of 20.8%?

How are microservices built?

Developers used to package microservices in VM images but now typically use Docker containers for deployment on Linux systems or operating systems that support these containers.

Here are some benefits of Docker containers for microservices:

- Easy to deploy

- Quick to scale

- Launched in seconds

- Can deploy containers after migration or failure

Microservices in e-Commerce

Retailers used to rely on the monolithic method when maintaining apps, but this technique presented various problems:

- Developers had to change the underlying code of databases and front-end platforms for customizations and other tweaks, which took a long time and made some systems unstable.

- Monolithic architecture requires services that remain dependent on one another, making it difficult to separate them. This high dependency meant that some developers couldn’t change services because doing so would affect the entire system, leading to downtime and other problems that affected sales and the customer experience.

- Retailers found it expensive to change applications because of the number of developers that required these changes. The monolithic model doesn’t allow teams to work in silos, and all changes need to be tested several times before going ‘live.’

Microservices revolutionized e-commerce. Retailers can now use separate services for billing, accounts, merchandising, marketing, and campaign management tasks. This approach allows for more integrations and fewer problems. For example, developers can debug without affecting services like marketing and merchandising if there’s an issue with the retailer’s payment provider. API-based services let microservices communicate with one another but act independently. It’s a much simpler approach that benefits retailers in various niches.

Real-world examples of microservices in e-commerce

If you aren’t sure if microservers are the best choice for your company, just look at some of the big players that use microservices to serve their customers worldwide. Here are a few examples that we’ve seen that demonstrate how you can use microservices to build and scale your applications.

Netflix

Netflix began transitioning to microservices after a major outage due to a database failure in 2008 that caused four days of downtime, which exposed the limitations of its monolithic architecture. Netflix started the transition to microservices in 2009 and completed the migration to microservices in 2011. With microservices performing specific functions, such as user management, recommendations, streaming, and billing, Netflix can deploy new features faster, scale services independently based on demand, and improve the overall resilience of its platform.

Amazon

Amazon shifted to microservices in the early 2000s after moving to service-oriented architecture (SOA) to manage its large-scale e-commerce platform. Amazon’s microservices helped it handle different aspects of the company’s platform, such as order management, payment processing, inventory, and customer service. This helped Amazon innovate rapidly, handle massive traffic, and maintain uptime—even during peak shopping periods like Black Friday.

Spotify

Spotify uses microservices to support its platform features like playlist management, search functionality, user recommendations, and music streaming. Spotify’s approach allows the company to innovate quickly, scale individual services based on user demand, and improve the resilience of its platform against failures. Spotify implemented microservices between 2013 and 2014 to handle increasing user demand and feature complexity as it expanded globally.

Airbnb

Airbnb employs microservices to manage its booking platform services for property listings, user authentication, search, reservations, and payments. Implemented between 2017 and 2020, microservices helped Airbnb scale its services as the company experienced massive growth. Airbnb was able to improve performance based on user demand and deploy features more quickly.

PayPal

Since early 2013, PayPal has used microservices to handle payment processing, fraud detection, currency conversion, and customer support services. Microservices helps PayPal offer high availability, improve transaction processing times, and scale its services across different markets and geographies.

How do you monitor microservices?

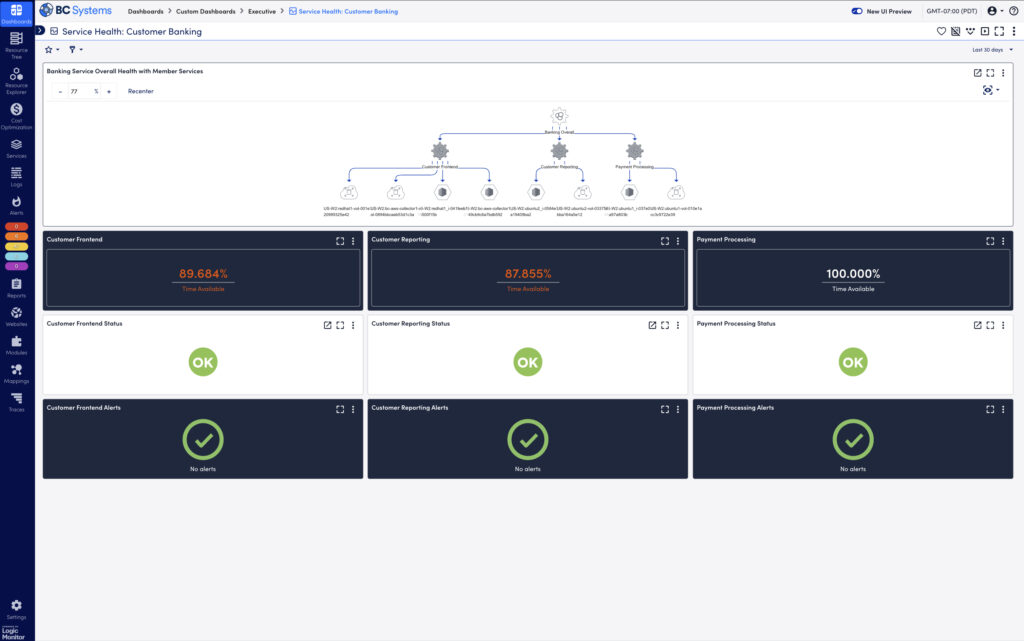

Various platforms automate the processes associated with microservices, but you will still need to monitor your architecture regularly. As you do, you’ll gain a deeper understanding of software development and how each microservice works with the latest application metrics. Use them to monitor key performance indicators like network and service performance and improve debugging.

Here’s why you should monitor microservices:

- Identify problems quickly and ensure microservices are functioning correctly.

- Share reports and metrics with other team members and measure success over time.

- Change your architecture to improve application performance.

The best monitoring platforms will help you identify whether end-user services are meeting their SLAs and help teams drive an optimized end-user experience.

Tools like LM Envision provide comprehensive monitoring solutions that help you maintain high-performance levels across all your services.

Did you know the cloud microservices market could reach $2.7 billion by 2026?

Best practices for implementing microservices

As you’ve seen above, microservices will offer many benefits to your business. But they aren’t something you can just substitute in and expect to run flawlessly. Here are a few best practices that will help you implement microservices in your application:

- Start small: Switching to microservices isn’t something you should do all at once. Start small by breaking your application down into smaller components and do it a little at a time. This approach will allow your team to learn and adapt as they go.

- Use automation tools: Use CI/CD pipelines to automate the deployment and management of microservices. Automation reduces the chance of your team making mistakes and speeds up repetitive deployment processes to reduce development time.

- Ensure robust monitoring and logging: Implement comprehensive monitoring and logging solutions so your team can track the performance of each microservice and quickly identify any issues.

- Prioritize security: Each service can become a potential attack entry point with microservices and may be harder to secure and monitor for your team because of the more distributed architecture. Implement strong authentication, encryption, and other security measures to protect the system.

- Maintain communication between teams: Since microservices allow your team to work independently on different parts of the application, it’s crucial to maintain clear communication and collaboration to ensure that all services work together seamlessly.

What are the benefits of microservices? Why do they exist today?

Now that we’ve looked at microservices and a few primary benefits, let’s recap some of them to learn more about why they exist today.

- Greater scalability: Although microservices are loosely connected, they still operate independently, which allows your team to modify and scale specific services without impacting other systems.

- Faster development: Your team can move faster by working on individual services instead of a monolithic application, meaning you can focus on specific services without worrying as much about how changes will impact the entire application.

- Better performance: You can focus on the performance of individual services instead of entire applications, changing and debugging software at a smaller level to learn how to optimize for the best performance.

- Enhanced security and data governance: Using secure APIs and isolated services will help your team improve security by controlling access to data, securing individual services, and monitoring sensitive services—helping facilitate compliance with security regulations like HIPPA and GDRP.

- Flexible technology: You aren’t tied to specific programming languages, frameworks, databases, and other technologies with microservices, which means you can pick the best tool for the job for specific tasks.

- Cloud-native capabilities: Platforms like AWS and Azure make creating and managing microservices easier, which means your team can build, deploy, and manage software from anywhere in the world without having a physical infrastructure.

- Quick deployment: Software like Docker is available to make deploying microservices easy for your team, helping them streamline deployment and ensure microservices run in the exact environment they were built in.

The future of microservices

Microservices are a reliable way to build and deploy software, but they are still changing to meet the evolving needs of businesses. Let’s look at what you can expect to see as microservices continue to evolve in the future.

Serverless Architecture

Serverless architecture allows you to run microservices without managing any other infrastructure. AWS is already developing this technology with its Lambda platform, which takes care of all aspects of server management.

PaaS

Microservices as a Platform as a Service (PaaS) combines microservices with monitoring. This revolutionary approach provides developers with a centralized application deployment and architectural management framework. Current PaaS platforms that are well-suited for microservices are Red Hat OpenShift and Google App Engine.

In the future, PaaS could automate even more processes for development teams and make microservices more effective.

Multi-Cloud Environments

Developers can deploy microservices in multiple cloud environments, which provides teams with enhanced capabilities. This can mean using multiple cloud providers, and even combining cloud services with on-prem infrastructure (for cases when you need more control over the server environment and sensitive data).

“Microservices related to database and information management can utilize Oracle’s cloud environment for better optimization,” says technology company SoftClouds. “At the same time, other microservices can benefit from the Amazon S3 for extra storage and archiving, all the while integrating AI-based features and analytics from Azure across the application.”

Service mesh adoption

Service meshes are becoming critical for managing more complex microservice ecosystems. They will provide your team with a dedicated infrastructure for handling service-to-service communication. This infrastructure will help improve monitoring, incident response, and traffic flow.

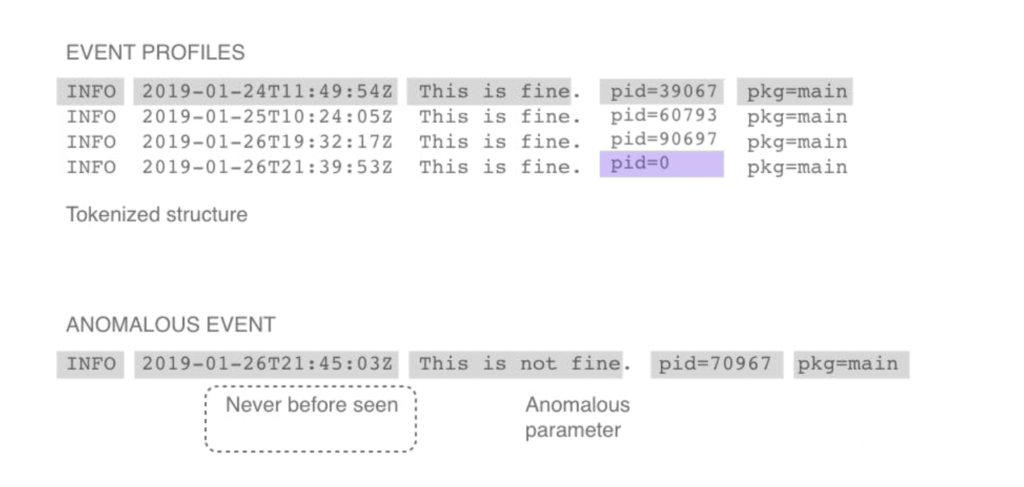

DevOps and AIOps

The integration of DevOps and AIOps with microservices and ITOps will help streamline development and operations. For example, new DevOps tools will help developers automate many deployment tasks instead of manually configuring individual environments. AIOps will also help your team, as it uses AI and machine learning to improve monitoring and reduce the time your team needs to look through data to find problems.

Event-driven architecture

Event-driven architecture is gaining more popularity among microservices because it allows for more de-coupled, reactive systems that are easier to manage. It allows them to process real-time data and complex event sequences more efficiently.

Advanced observability

As multi-cloud environments become more common, more advanced tools are needed to monitor these environments. Hybrid observability solutions will help your team manage hybrid environments to gather performance metrics (CPU usage, memory usage) about your services in a central location and send alerts when something goes wrong. Advanced observability solutions also use AI to monitor environments to ensure your team only sees the most relevant events and trace information that indicates a potential problem.

Before You Go

Microservices have had an immeasurable impact on software development recently. This alternative approach to the monolithic architectural model, which dominated software development for years, provides teams a streamlined way to create, monitor, manage, deploy, and scale all kinds of applications via the cloud. Platforms like AWS and Azure facilitate this process.

As you learn more about software development and microservices, you’ll discover new skills and become a more confident developer who solves the bespoke requirements of your clients. However, you should test your knowledge regularly to make every development project successful.

Do you want to become a more proficient software developer? Microservices Architecture has industry-leading self-assessments that test your microservice readiness, applicability, and architecture. How well will you do? Get started now.

Using multiple cloud environments in overly complex networks with outdated architectures puts tremendous strain on infrastructures. One of the best solutions for combating overworked architectures is Catalyst SD-WAN, Cisco’s new and improved version of Viptela SD-WAN.

Unveiled in 2023, Catalyst SD-WAN (an updated and rebranded version of Viptela SD-WAN technology) is one of the first Software-Defined Wide Area Network solutions. It provides increased infrastructure speeds and several other key features, like centralized management and security integration. The many benefits of SD-WAN technology are discussed in detail below.

Is Cisco Catalyst SD-WAN the same as Viptela SD-WAN?

Founded in 2012, Cisco Viptela was one of the first vendors to provide SD-WAN solutions. The Viptela SD-WAN platform gained such popularity that Cisco acquired the brand along with Viptela SD-WAN in 2017 and integrated the Viptela technology into its product portfolio.

In 2023, Cisco introduced Cisco Catalyst SD-WAN, an updated version of Viptela SD-WAN that not only rebrands the Viptela technology package but also offers advanced capabilities, like improved network performance, better security, and increased cost efficiency. Available as a PDF download, the Cisco SD-WAN Design Guide provides a deeper look into the evolution of Cisco’s SD-WAN platform.

The need for Catalyst SD-WAN

As network infrastructures become more complex and challenging to manage, traditional Wide Area Network (WAN) architectures struggle with scalability, flexibility, and cost-efficiency. Having an efficient, secure, and scalable network solution is no longer a luxury but a necessity, and Catalyst SD-WAN is one of the most effective solutions available. Using software-defined networking principles, Catalyst SD-WAN helps manage and control network traffic in a more agile and cost-effective manner.

How Catalyst SD-WAN works

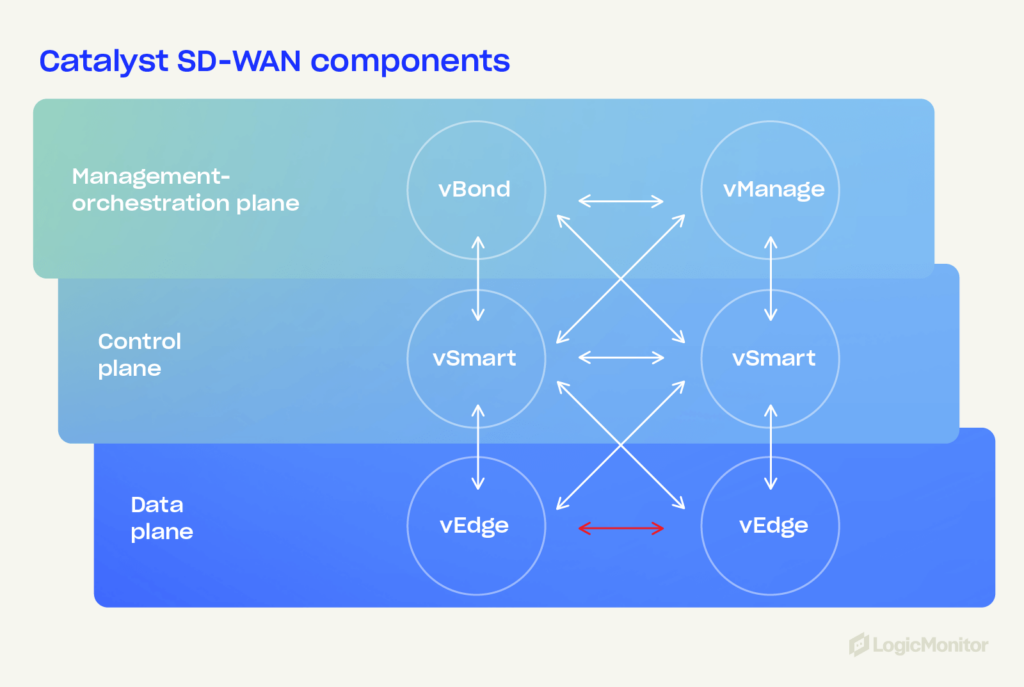

Cisco Catalyst SD-WAN is built on a flexible architecture that includes several key components—vEdge routers, vSmart controllers, vManage, and vBond orchestrators. These components create a secure, scalable, and efficient network environment.

Architecture overview

- vEdge routers: Can be physical or virtual and are deployed at a network’s edge to provide secure connectivity and create an overlay network. They support various transport methods like MPLS, broadband, and LTE, ensuring reliable data transfer.

- vSmart controllers: Manage the control plane, handling policy enforcement and route distribution across a network. They ensure that a network’s operational policies are applied consistently and securely.

- vManage: Allows administrators to configure, monitor, and manage an SD-WAN environment, providing a unified network view by integrating data center analytics, automation, and real-time monitoring. The LogicMonitor monitoring platform for Cisco SD-WAN solutions uses vManage API to monitor performance and availability metrics for various edge devices across networks.

- vBond orchestrators: Responsible for initial device authentication and establishing secure connections between vEdge routers, vSmart controllers, and vManage. It plays a critical role in maintaining on-premises and cloud network security and reliability.

Data plane and control plane separation

Separating the data plane (data forwarding) from the control plane (network control) optimizes traffic flow and allows the application of security policies across networks.

Secure overlay network

An encrypted overlay network over existing transport mediums, such as MPLS, broadband, and LTE, ensures consistent and secure connectivity across all locations and provides a more reliable network foundation for business operations.

Policy enforcement mechanisms

Centralized policies that govern traffic flow, security rules, and application performance can be easily defined and updated based on business needs.

8 key features of Cisco Catalyst SD-WAN

Cisco Catalyst SD-WAN provides a powerful suite of features that streamline network management, bolster security, and optimize performance. From centralized control to comprehensive analytics, these eight key features are designed to address the complexities of modern network environments.

- Centralized management and policy enforcement: Centralized management through a single dashboard enables administrators to make real-time adjustments from anywhere and centralizes security policy enforcement across entire networks. It also provides uniform applications of security standards, reducing the risk of misconfigurations. LogicMonitor’s platform supports SD-WAN monitoring and offers a free downloadable solution brief to get started.

- Integrated security and threat protection: Provides end-to-end encryption and advanced threat protection, allowing for seamless management of both network and security policies through a single interface. Cisco Catalyst SD-WAN supports secure connectivity across multiple transport methods, including broadband, LTE, and MPLS.

- Zero-trust security model: Continuously verifies the identity of every device and user, reducing the risk of breaches. Complemented by secure device onboarding, the zero-trust security model of Catalyst SD-WAN automates the integration of new devices with Zero-Touch Provisioning (ZTP) and automated certificate management.

- Advanced threat protection: Includes advanced threat protection mechanisms, such as intrusion prevention systems (IPS), anti-malware, and URL filtering, which detect and block sophisticated threats in real time before they impact networks. Integrating Cisco Talos Intelligence Group, one of the world’s largest commercial threat intelligence teams, ensures that Catalyst SD-WAN stays updated with the latest threat intelligence.

- Scalability and flexibility: Easily adapts to growing network demands and integrates seamlessly with other security solutions, particularly Cisco Umbrella for cloud-delivered security and Cisco Secure Network Analytics (formerly Stealthwatch) for network visibility and security analytics. Simple integration abilities extend security beyond the SD-WAN to provide comprehensive protection against threats throughout a network.

- Application-aware routing: Optimizes data traffic by tracking path characteristics and selecting the best routes for different applications. This ensures that critical applications receive the bandwidth and quality of service they need, reducing issues like jitter and packet loss.

- Network segmentation: Allows businesses to create multiple virtual networks over a single physical infrastructure. By isolating different types of traffic, critical applications remain unaffected by less important traffic.

- Comprehensive analytics and visibility: Offers real-time monitoring and reporting for more comprehensive visibility into network performance. With predictive analytics, administrators can proactively manage networks, identify potential issues, and prevent downtime.

Benefits of using Catalyst SD-WAN

Catalyst SD-WAN is an attractive solution for network management due to its potential for enhanced application performance. Reducing a network’s complexity reduces the risk of latency and connectivity issues and improves consumers’ user experience.

- Improved network performance: Optimizes network performance by reducing latency and increasing throughput for critical applications. By leveraging multiple transport services, the solution ensures businesses can maximize their available bandwidth.

- Enhanced security: Offers robust security protocols that protect data in transit and at rest. With integrated security features, businesses can simplify compliance with industry regulations and safeguard their networks against modern threats.

- Cost efficiency: Leveraging cost-effective transport options, such as broadband and Cisco Catalyst SD-WAN, reduces operational costs and lowers hardware and maintenance expenses through centralized management and automation.

- Simplified network management: Simplifies the deployment and management of complex networks, allowing businesses to adapt quickly to changing network demands. With minimal manual effort required, IT teams can focus on strategic initiatives rather than routine network maintenance.

- Greater agility and flexibility: Enables rapid provisioning of new sites and services through seamless integration with cloud services and third-party applications, allowing businesses to remain agile and responsive to market changes.

- Enhanced user experience: Delivers consistent and reliable application performance, leading to improved end-user satisfaction. By prioritizing critical applications, business continuity is maintained and the overall user experience is enhanced.

Cisco Catalyst SD-WAN vs. traditional WAN solutions

Traditional WANs, rooted in hardware-centric models, struggle with scalability, flexibility, and the ability to adapt to modern network demands. Cisco Catalyst SD-WAN introduces a software-defined approach that addresses these challenges head-on. Upgrading network infrastructures requires understanding the distinct differences between SD-WAN and traditional WAN architecture.

Architecture comparison

Traditional WAN operations rely heavily on hardware, which isn’t always flexible and difficult to scale. In contrast, Cisco Catalyst SD-WAN uses a software-defined approach that separates network control from hardware, offering greater flexibility and scalability.

Performance and reliability

Cisco Catalyst SD-WAN offers superior performance compared to traditional WANs, with enhanced redundancy and failover capabilities. The solution’s dynamic routing and real-time traffic management features—compared to fixed pathways for data transmission—ensure that critical applications remain accessible even in the event of network disruptions.

Security considerations

While traditional WANs often rely on perimeter-based security models that offer limited visibility into network traffic, Cisco Catalyst SD-WAN integrates security into every layer of the network. This approach addresses modern security threats more effectively, providing a comprehensive defense against potential vulnerabilities with features like encryption, advanced threat detection, malware sandboxing, and centralized security policy management.

Cost and ROI

Catalyst SD-WAN offers a lower total cost of ownership (TCO) compared to traditional WANs by reducing hardware dependencies and leveraging cost-effective transport options. Because of centralized management, an SD-WAN can also lead to faster deployment (and lower operational costs), allowing businesses to achieve a higher return on investment (ROI).

Scalability and flexibility

Traditional WANs can struggle to adapt to changing network scales and configurations because they often require on-site physical configuration changes and struggle to integrate with new technology. Catalyst SD-WAN, however, is designed for scalability, allowing businesses to integrate new technologies—both on-prem and cloud services—as needs evolve.

Real-world applications of Catalyst SD-WAN

Understanding the practical applications of Cisco Catalyst SD-WAN can help illustrate its benefits for various industries. Here are some real-life scenarios that showcase how organizations leverage SD-WAN to enhance their network performance, security, and scalability.

1. Retail chain with distributed branch locations

Challenge: A large retail chain with hundreds of branch locations across different regions faces the challenge of managing network connectivity and security consistently across all stores. This means employees may not be able to do their jobs or serve customers. The traditional WAN infrastructure is complex and costly to maintain, and it is limited in its ability to prioritize critical applications like point-of-sale systems and inventory management.

Solution: By implementing Cisco Catalyst SD-WAN, the retail chain can centralize network management across all branches to gain complete visibility into the IT infrastructure and make changes on both high and low levels. The solution provides secure connectivity, application-aware routing, and network segmentation, ensuring that critical applications receive priority while maintaining robust security standards. With Zero-Touch Provisioning (ZTP), new branch locations can be brought online quickly, reducing setup time and operational costs.

2. Global financial services firm

Challenge: A global financial services company needs to ensure secure, high-performance connectivity for its offices and remote workers worldwide. Lost connectivity means customers may not be able to access their funds, leading to panic and lost revenue for the firm. The traditional WAN setup struggles with latency and security concerns, particularly as the firm expands into new markets and increases its reliance on cloud services.

Solution: Cisco Catalyst SD-WAN offers a scalable solution that enhances the firm’s global connectivity through optimized routing and integrated security features, ensuring fast online services for customers (something especially important for trading services like high-frequency trading). The firm can securely connect its offices and remote workers, leveraging multiple transport methods (like MPLS, broadband, and LTE) while ensuring compliance with stringent financial industry regulations—protecting customer data and avoiding hefty legal fees. The centralized management provided by vManage allows the IT team to monitor and manage the network in real time, responding quickly to any issues or threats.

3. Healthcare provider with multiple facilities

Challenge: A healthcare organization with multiple hospitals, clinics, and remote care facilities needs reliable, secure connectivity to support critical applications like electronic health records (EHR), telemedicine, and real-time patient monitoring. Traditional WAN solutions struggle to deliver the necessary performance and security, particularly as the organization expands its services.

Solution: With Cisco Catalyst SD-WAN, the healthcare service provider can create a secure, high-performance network that supports critical applications across all facilities. The solution’s integrated security features, including end-to-end encryption and advanced threat protection, ensure that patient data is protected, while application-aware routing optimizes the performance of essential healthcare applications. The SD-WAN’s scalability also allows the organization to quickly integrate new facilities, services, and technology (like IoT devices) into the network as it grows.

4. Manufacturing company with a global supply chain

Challenge: A manufacturing company with a global supply chain needs efficient and secure communication between its production facilities, suppliers, and distribution centers. Traditional WAN solutions can’t keep up with the dynamic nature of modern manufacturing, where real-time data and agile responses are critical for maintaining inventory and delivery schedules.

Solution: Cisco Catalyst SD-WAN enables the manufacturing company to establish a secure and agile network that connects all elements of its global supply chain. Using application-aware routing and network segmentation, the company can prioritize and protect critical communications and data transfers, giving each location the information it needs to make decisions and serve customers. This setup improves operational efficiency, reduces downtime, and supports the company’s digital transformation initiatives, such as the adoption of IoT and smart manufacturing technologies.

5. Educational institution with multiple campuses

Challenge: A university with multiple campuses and remote learning centers must provide reliable, high-performance connectivity to support online learning platforms, campus security systems, and administrative applications. The traditional WAN infrastructure is not flexible enough to adapt to the growing demand for bandwidth from student activity, new educational resources, and security.

Solution: Cisco Catalyst SD-WAN provides the university with a flexible, scalable network solution that ensures consistent connectivity across all campuses and remote learning centers. The centralized management platform allows the IT team to deploy security policies uniformly, optimize bandwidth allocation for critical applications, and monitor network performance in real time. The SD-WAN’s ability to integrate with cloud-based learning platforms also supports the university’s digital education initiatives.

Conclusion

By addressing the limitations of traditional WAN architecture, Catalyst SD-WAN enhances application performance, reduces costs, and simplifies network management. Its flexible and secure architecture enables organizations across various industries to remain agile, protect their data, and efficiently manage their infrastructures, whether for cloud migration, distributed systems, or global operations.

LogicMonitor’s tools help businesses improve network visibility while obtaining the maximum amount of benefits from Catalyst SD-WAN.

Network bandwidth is the maximum rate at which data can be transmitted over a network connection in a given time. Essentially, it’s the highway for your data. Bandwidth is often measured in bits per second (bps), but in larger systems, you’ll see measurements like megabits per second (Mbps) or gigabits per second (Gbps).

Bandwidth plays a critical role in IT infrastructure because it determines how quickly data can move. If you think of it like a road, bandwidth dictates how many cars can travel at once. This capacity lets IT teams decide how much traffic their systems can handle. Managing bandwidth helps you avoid congestion, ensuring that applications run smoothly and efficiently.

In this guide, we’ll explore network bandwidth, its impact on your infrastructure, and ways to optimize its usage to keep your systems running smoothly.

Basic concepts

To understand network bandwidth, learning its basic concepts and associated terms is important. Let’s break down these terms:

- Data rate: The speed at which data moves across a network

- Capacity: The maximum amount of data a network can handle

- Throughput: The actual amount of data that successfully travels across the network

- Bandwidth utilization: The percentage of total available bandwidth currently in use

- Packet: A small unit of data sent across the network

- Latency: The delay between sending and receiving data

- Jitter: Variability in packet delivery timing, which can cause delays or disruptions

Pro tip:

When dealing with latency issues, especially in real-time applications, aim to minimize traffic during peak hours and optimize your Quality of Service (QoS) settings to prioritize essential data streams.

How network bandwidth works

When data is transferred over a network, it gets broken into smaller units called packets. These packets are sent through the network to their destination, where they’re reassembled. However, various factors—such as infrastructure quality and network congestion—affect how smoothly this process happens.

Think of bandwidth as the width of a pipe: a larger pipe allows more water (data) to pass through, but obstructions (network issues) can slow everything down.

A customer I worked with, a mid-sized financial firm, was experiencing frequent network slowdowns, especially during peak business hours. After an assessment, they discovered that their network relied on outdated routers and switches that couldn’t handle the increased traffic from newer applications and employee devices.

By upgrading their equipment to support modern network standards (including WiFi 6 and gigabit ethernet switches), they were able to increase bandwidth efficiency dramatically. This upgrade reduced bottlenecks and allowed critical applications, like their real-time financial tracking systems, to run smoothly even during heavy traffic periods.

Pro tip:

When facing network congestion, assess your hardware first. Outdated equipment can be a hidden cause of slowdowns, and upgrading to the latest technology often delivers significant improvements in both bandwidth and overall performance.

Factors affecting network bandwidth and performance

Many factors impact network bandwidth and performance. These include internal factors within your organization and external factors that may be outside of your control.

Internal factors:

- Network hardware and configuration: The quality and configuration of network equipment like routers and modems

- Software and protocol efficiency: Applications and protocols used to transmit data across networks, including hardware firmware, device applications, and protocols (TCP, UDP, HTTP, FTP)

External factors:

- ISP limitations: Sometimes, your internet service provider’s infrastructure is the bottleneck

- Environmental interference: Environmental problems, such as physical obstacles, electromagnetic interference (disrupting WiFi), and distance, may degrade performance

Understanding network performance

Several factors impact network performance, and they often show up as issues like latency—one of the most common culprits. While bandwidth indicates how much data can be transferred, latency refers to how long it takes for that data to travel. This delay is especially problematic in real-time applications, like video conferencing or online gaming, where even small delays can cause noticeable disruptions.

Factors that increase latency include:

- High volumes of data, especially when nearing bandwidth limits.

- Type of internet connection (Cable, Fiber, DSL, Satellite).

- Network traffic volume during peak times.

- Quality of Service (QoS) settings that prioritize certain types of traffic.

- Distance between your device and the server.

- Network design and topology.

- Device performance, such as outdated routers or network switches.

Bandwidth vs. throughput vs. speed

Though bandwidth, throughput, and speed are often used interchangeably, they refer to different aspects of network performance.

Bandwidth is the maximum capacity—the total amount of data your network can handle at once. Think of it as the number of lanes on a highway. Throughput is the actual amount of data that reaches its destination. It’s affected by factors like network congestion and distance, making it the real-world measure of your network’s performance. Speed is how fast data moves from one point to another. While bandwidth defines potential, speed represents how quickly data can travel under current conditions.

Imagine bandwidth as the width of the road and speed as how fast cars are traveling. Throughput is how many cars (or data packets) actually make it to the end of the highway without hitting traffic jams.

Throughput in networking

Throughput gives you a clear picture of your network’s real-world performance—how much data is making it to its destination. Monitoring throughput can help identify issues such as:

- Extra data being transmitted, reducing efficiency

- Too much traffic slowing things down

- Older routers or switches struggling to keep up with demand

- Poorly optimized applications slowing down data transfer

Common bandwidth bottlenecks

Bandwidth bottlenecks can occur at various points in your network, from internal devices to external ISP limitations. Common causes include:

- Device limitations: Too many devices on a network can overwhelm available bandwidth, especially in large organizations where workstations, servers, and personal devices all compete for resources

- Old equipment: Outdated routers, switches, or network cables can limit your available bandwidth, slowing down data flow.

- Insufficient capacity: Sometimes, internet service providers don’t deliver enough bandwidth to meet your needs, particularly in small businesses or homes

- Peak usage times: During high-traffic periods, shared connections—whether at home or in the office—can become congested, causing slowdowns.

Recognizing these factors can help you start troubleshooting and optimizing your network performance more effectively.

Monitoring network bandwidth

Effective network monitoring helps you maintain performance and ensures your organization’s employees can access the resources they need to do their jobs.

You likely have a range of tools available to help with this, like network monitoring software, Simple Network Management Protocol (SNMP), and traffic analyzers. Here’s how you monitor your bandwidth in the most effective way possible:

- Establish a baseline by measuring your current network performance

- Reduce interference by testing under ideal conditions

- Connect a device directly to a modem or router to eliminate variability from WiFi interference

- Conduct tests during both peak and off-peak hours to identify patterns of network congestion

- Use your tool to breakdown bandwidth by application to understand what’s consuming the most resources

- Run multiple tests to gather reliable data, then compare against your expected performance benchmarks

- Rerun tests in different environments to look for differences in network speed based on different factors

- Document results and create a plan to improve network bandwidth, which may include tweaking QoS settings or addressing peak-time congestion

Pro tip:

Don’t just measure speed—track latency and packet loss, as these affect performance even when bandwidth seems sufficient

Monitoring network bandwidth with this approach helps with immediate issue detection, resulting in less downtime and improved operations—letting you do more strategic work rather than putting out fires. It also allows you to find network congestion areas and reallocate resources for more efficient operations. Lastly, you can detect unusual traffic patterns that may indicate threats, keeping you ahead of any security incidents.

Optimizing network bandwidth monitoring

Although your organization can use tools to monitor network traffic, a comprehensive tool allows you to combine multiple data sources in one solution and perform advanced analysis.

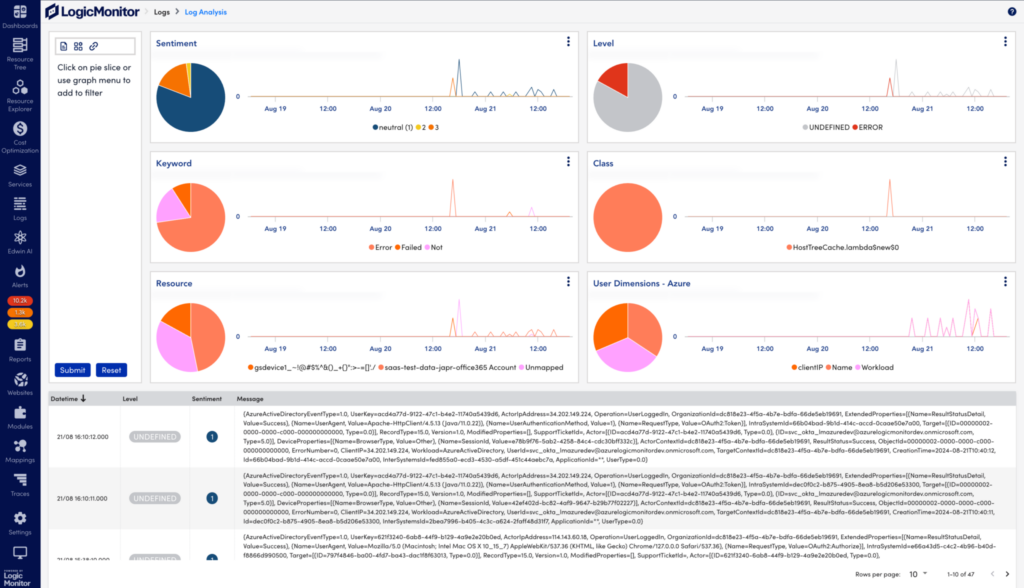

LogicMonitor offers the LM Envision platform, a complete solution for hybrid observability powered by AI. Here are a few features that can help you monitor network traffic:

- Monitoring of historical network performance to check for usage patterns that may indicate problems

- AI features to automatically look for anomalies instead of manually inspecting traffic logs and getting caught in the noise

- Reporting tools to plan the network for performance, capacity planning, and improved bandwidth usage

- Predictive analytics to get in front of problems proactively instead of reacting after they happen

- Security improvement through the detection of unusual bandwidth patterns that indicate potential security breaches

Improving and optimizing bandwidth

In my experience, optimizing bandwidth isn’t just about increasing capacity—it’s about making sure the network is designed and managed efficiently. Here are some strategies that I’ve found to be particularly effective when improving network performance.

Improve network design and architecture:

One of the quickest wins I’ve seen when optimizing bandwidth is improving network design. A well-planned architecture ensures data flows efficiently, and investing in modern equipment pays off almost immediately.

- Upgrade equipment: Outdated routers and switches are often the hidden culprits of slow networks. Moving to newer models, especially those supporting WiFi 6, made a huge difference in several projects I’ve worked on.

- Optimize topology: I once worked with a company where removing just a few unnecessary network hops drastically reduced latency and increased throughput. Simplifying your network’s layout is a low-cost way to free up bandwidth.

Manage traffic

Traffic management is critical. In large networks, I’ve seen bottlenecks occur simply because non-essential traffic was eating up precious bandwidth. By prioritizing the right services, you can avoid that.

- Set QoS policies: In a financial firm I worked with, implementing Quality of Service (QoS) ensured that mission-critical apps like trading platforms got the bandwidth they needed while less urgent traffic was deprioritized.

- Traffic shaping: Another useful trick is rate-limiting. We limited bandwidth for non-essential services like video streaming during peak hours, ensuring that critical operations weren’t affected.

Control bandwidth usage

You don’t always need more bandwidth. Sometimes, it’s about controlling when and how it’s used. I’ve seen great results by scheduling tasks that eat up bandwidth—like backups or updates—outside of peak times.

- Schedule intensive tasks: With one customer, simply running updates after hours dramatically reduced congestion during the workday.

- Set quotas: For some customers, setting bandwidth quotas for non-essential users prevented individual teams from consuming too much bandwidth and ensured more important processes always had enough to function smoothly.

- Educate users: I always recommend educating users. When employees understand that bandwidth isn’t infinite, they’re more likely to limit unnecessary activities like streaming or large file downloads during business hours.

Regularly monitor bandwidth usage

From my experience, regular monitoring is one of the most important steps for keeping a network running smoothly. You need to have visibility into what’s happening on your network in real-time and historically.

- Monitor in real-time: I’ve seen businesses thrive using tools like LM Envision, which allow you to identify issues as they happen. You can set alerts for unusual bandwidth usage, preventing problems before they affect users.

- Historical data analysis: Reviewing historical trends has helped many of my customers identify and resolve recurring bandwidth bottlenecks. By looking at when spikes occur, we were able to make smarter decisions about bandwidth allocation.

Planning for network bandwidth

Network demands don’t stay static, and I always encourage businesses to plan for growth. Your current setup might be fine now, but you need to think about what’s coming next.

Assess your needs

Start by assessing what you have now. I usually begin by looking at which applications are using the most bandwidth and how much traffic flows through both wired and wireless networks.

Remember, peak times matter. I’ve worked with customers who didn’t realize how much their network was struggling during peak hours until we analyzed the data. Knowing when your network is busiest helps you make smarter infrastructure decisions.

Plan for future growth

Planning ahead has saved many of my customers from network slowdowns. Look at your projected business growth—whether it’s more employees, new technologies, or cloud expansion—and make sure your network can handle the extra load.

The biggest mistake I see is companies waiting until they hit a bandwidth limit before upgrading. Planning for future growth prevents sudden crashes and keeps your business running smoothly.

Add redundancies

No matter how much planning you do, things can still go wrong. I always advise building redundancies into your network to avoid downtime when issues arise.

- Backup ISP: Having a secondary internet provider has saved several of my customers during outages. It’s a small investment for the peace of mind it brings.

- Load balancing: I’ve worked with teams that use load balancing to distribute traffic across servers, ensuring that no one server gets overwhelmed, especially during peak times.

- SD-WAN: For businesses that deal with a lot of traffic across multiple locations, SD-WAN has been a game changer. It automatically reroutes traffic to the best available path, reducing congestion and improving performance.

Applications of network bandwidth

Bandwidth isn’t just about the raw numbers; it’s about what you’re using it for. I’ve worked with customers across industries, and their bandwidth needs can vary dramatically depending on their use cases.

- Everyday tasks: For basic internet use—email, browsing, and remote work—the bandwidth requirements are modest

- Business applications: However, more advanced applications like ERP systems, cloud services, or data centers require significantly more bandwidth

- Advanced tech: For businesses dealing with AI, big data, or IoT services, planning for high bandwidth is crucial

Assessing how you use bandwidth today and how that might change in the future helps you design an IT infrastructure that supports growth.

Wrapping up

Understanding and managing network bandwidth is one of the most impactful things you can do to keep your IT infrastructure running smoothly. It’s not just about having enough bandwidth—it’s about optimizing how you use it. By controlling traffic, staying ahead of bottlenecks, and planning for future growth, you’ll set your business up for long-term success. I’ve seen firsthand how businesses that prioritize bandwidth management experience fewer slowdowns, better performance, and the ability to scale effortlessly. With the right approach, you’ll not only meet today’s needs but also be ready for whatever comes next.

In recent years, Software-Defined WAN Technology (SD-WAN) has changed the way networking professionals secure, manage, and optimize connectivity. As organizations continue to implement cloud applications, conventional backhaul traffic processes are now inefficient and can cause security concerns.

SD-WAN is a virtual architecture that enables organizations to use different combinations of transport services that can connect users to applications. Sending traffic from branch offices to data centers using SD-WAN provides consistent application performance, better security, and automates traffic based on application needs. It also delivers an exceptional user experience, increases productivity, and can reduce tech costs.

What is SD-WAN?

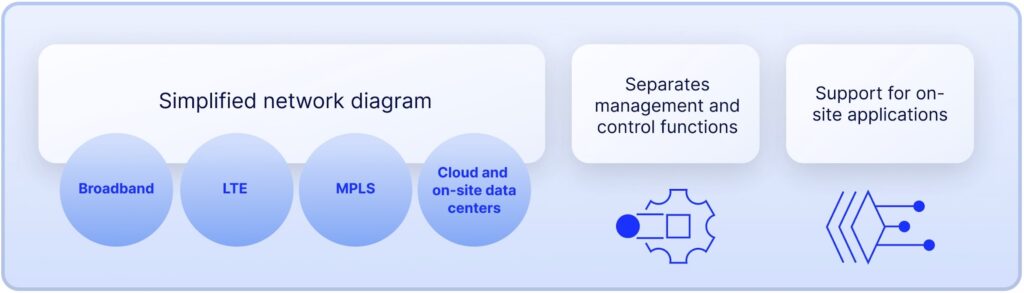

SD-WAN implements software to safely and effectively manage the services between cloud resources, data centers, and offices. It does this by decoupling the data plane and the control plane. The deployment process often includes vCPE (virtual customer premise equipment) and existing switches and routers. These run software that control most management functions, such as networking, policy, and security.

Until recently, a Wide Area Network (WAN) was the best method for connecting users to applications on data center servers. This would typically include Multiprotocol Label Switching (MPLS) circuits for secure connections. But today, MPLS is no longer adequate if you’re dealing with large amounts of data and working in the cloud. Backhauling from branch offices to corporate headquarters impairs performance. Gone are the days of connecting to corporate data centers to use business applications.

With SD-WAN, it’s now easier for you to deliver exceptional network experiences with less operational responsibility for IT staff.

What is the SD-WAN architecture?

Traditional WANs can limit growth and productivity due to their dependence on total hardwire network devices. SD-WAN depends on software to provide a virtual approach while implementing traditional technologies such as broadband connections.

The traditional architecture with conventional routers was not created for the cloud. Backhauling traffic was required from branch offices to data centers so detailed security inspection could occur. This method often hinders performance, causing a loss in productivity and a poor user experience.

SD-WAN, however, can fully support applications in on-site data centers. This includes SaaS services such as Microsoft 365 and Dropbox. The architecture can separate management and control functions, WAN transport services, and all applications. With centralized control, you can store and control all the data on the applications. The control plane can adapt traffic to fit application demands and provide a high-quality user experience.

How does SD-WAN work?

SD-WAN uses communication tunnels, network encryption, and firewall software to manage and safeguard computer networks across several locations. SD-WAN can distinguish and separate network hardware from central controls and streamline operations. A business that uses SD-WAN can create higher-performance WANS by using the internet instead of MPLS.

Traffic flows through a specific SD-WAN appliance, with each appliance centrally controlled and managed. This enables the consistent enforcement of policies. SD-WAN can determine each application traffic and has the ability to route each one to the correct destination. These machine learning-based capabilities enable the software to base destination routes on existing policies.

Because SD-WAN is built to work efficiently, these solutions generally offer greater bandwidth efficiency, increased application performance, and easy access to the cloud. Users enjoy all these benefits without sacrificing data privacy or security. This can also improve customer satisfaction and business productivity.

Furthermore, SD-WAN can identify different applications and provide specific security enforcement. This means that business needs are met, and the business is protected from threats. One of the reasons SD-WAN is so effective is because it can leverage new software technologies while implementing machine learning.

There are a few specific aspects of SD-WAN that enable it to work so well:

Ability to self-learn and adapt

SD-WAN normally guides traffic according to programmed templates and predefined rules. It has the ability to continuously self-monitor and learn. This is done by adapting to various changes in the network. These changes could include transport outages, network congestion, or brownouts. This adaptation occurs automatically and in real time. This limits the amount of manual technical intervention that is needed.

Ability to simultaneously use multiple forms of WAN transport

If a particular path is congested or fails, the system can implement solutions to redirect traffic to another link. SD-WAN can manage each transport service seamlessly and intelligently. The primary purpose of SASE is to provide the best experience possible for cloud applications. The ultimate goal is to be high quality for the user. The advanced capabilities provided by SD-WAN are necessary to enable optimum SASE and find solutions for these purposes in the event of technical problems.

How does SD-WAN and automation work?

SD-WAN already provides a certain amount of automation. To improve this process, each of the SD-WAN elements needs to communicate through APIs. Improving the communication will also enhance the changes the system can make to WAN edge devices. This affects the configuration of resources such as AWS, Google Cloud, and Microsoft Azure. This way, automation works through the entire system, not just in individual components.

Real-time path selection is an example of automation. As communication within the systems improves, the ability to increase the speed and precision of automated decisions will also improve. Insights based on instantaneous data collection will continue to increase efficiency and precision. You will want to continually integrate and update SD-WAN solutions with various machine learning forms to improve manual tasks’ automation. This will enable you to simplify and scale your system to meet the specific needs of each business operation.

Several SD-WAN benefits result from improved automation. These include less human error, faster operations, and improving quality of service. In the long run, the more automation you have, the more likely you will reduce overall operating costs. Automation means reducing the need to hire more engineers and other IT professionals. A self-learning network will increasingly automate many tasks currently done by humans.

What are the benefits of SD-WAN?

SD-WAN is able to offer solutions to many of the challenges you will likely experience when using traditional WAN. The many benefits of SD-WAN include:

Greater agility

While MPLS is good at routing traffic when there are only a few static locations, it’s certainly not as effective when doing business on the cloud. Policy-based routing is the key to SD-WAN’s agility. Traffic is sent through a network focusing on the needs of each basic application. You can use several different transport structures in the WAN. SD-WAN provides predictable agility while supporting cloud migration. This agility includes the ability to use a variety of connections interchangeably, including MPLS, LTE, and broadband.

Increased efficiency

Sending traffic from remote offices to primary data hubs can cause delays. SD-WAN can effectively tie in cloud services. As the use of cloud applications and containers that need edge access increases, so does the need to implement SD-WAN technology. Cloud resources are easily connected with the data hubs in a fast and cost-effective manner. This enables private data centers to grow while organizations can still efficiently expand their use of public cloud services. There is also a reduction in latency issues, which means greater application performance.

Improved security

SD-WAN allows security specification for individual customers that is scalable. Organizations can set up secure zones to guide traffic based on their business policies. A company can protect critical assets with specific partitions while also using firewalls as part of the security process. You can create partitioned areas, basing them on particular roles or identities. You can also monitor network connections, enable deep packet inspection, add data encryptions, and log all security events.

Reduced costs

Backhauling is not only more time-consuming, it’s also costly. MPLS connections between offices and data centers cost more than wireless WAN links or internet broadband. It may take weeks or longer to supply new MPLS links, and MPLS bandwidth is potentially expensive. The same process takes only days when using SD-WAN. In many ways, particularly when it comes to expense, SD-WAN is superior to MPLS. It can also save money by lowering maintenance and equipment costs.

Increased simplification

SD-WAN simplifies turning up new links to remote offices while managing how each link is used more effectively. There is sometimes the need to use several stand-alone appliances with MPLS. You’re able to centralize operations and more easily scale a growing network when using SD-WAN.

Better app performance

Supporting cloud usage and SaaS apps is a necessary part of the digital progress. Applications generally need a lot of bandwidth. SD-WAN provides adequate support with high priority for critical applications. The network hardware separates from the control pane using an overlay network. Network connections then determine the best paths for every application in real time.

Remote access

Cloud access is the primary reason many organizations choose SD-WAN. No matter where your branch or office is, you can easily access all available cloud applications. You can also direct traffic through the data center for critical business applications.

What are the drawbacks of SD-WAN?

SD-WAN has some disadvantages, but the correct tools can overcome many of these drawbacks. Some disadvantages include:

Providing security

Because of how network security is set up, a breach could occur in several remote locations throughout the organization if a hacker breaches security and gains access to the central data branch. This type of connectivity could affect an entire company.

Training staff

Adapting to SD-WAN is not always easy if you’re running or working for a smaller business. Your current staff may not have adequate training to understand and implement this particular technology. In some cases, you may find it counterproductive to hire new IT personnel or train existing staff to build and maintain SD-WAN systems.

Supporting WAN routers

Your SD-WAN system may not support WAN routers. An ethernet connection is likely to interfere with the WAN architecture. You’ll have to come up with a method to eliminate this potential problem. Time-division multiplexing is one option.

How do you select the best SD-WAN?

You’ll want to consider several factors when selecting any SD-WAN model:

- The SD-WAN you select should have the ability to collect real-time statistics.

- The model should connect with all endpoints from any software and application.

- Your selection must be able to encrypt all traffic over the network.

- You should choose a model that provides policy-driven solutions.

- You’ll want an SD-WAN with advanced security that meets your organization’s needs.

- You’ll want to select an SD-WAN that can efficiently utilize bandwidth.

- Your selection should have mobility features, including access control and automatic ideal route selection.

- Your selection should be able to connect with several stations with various internet data services.

What SD-WAN choices are available?

The following are a few of the best-rated SD-WAN solutions:

- Cisco Meraki SD-Wan – This model provides visibility and connects to any application.

- Oracle SD-WAN – Besides routing and firewall, Oracle provides cost-efficient internet connections and high bandwidth.

- CenturyLink SD-WAN – This will help you create a more agile and wide network. It also gives users data reports and analytics.

- Fortinet FortiGate SD-WAN – This solution offers next-generation firewall and advanced routing.

- Wanify SD-WAN – This model delivers VeloCloud SD-WAN through a partnership with VeloCloud. You’ll have end-to-end process management and Wanify’s customer support.

- Aruba Edge Connect – Ratings state that this software is one of the easier types to use. It focuses on reducing costs while simplifying the process.

- Masergy SD-WAN – Masergy has built-in Fortinet security. It also uses AI for its IT operations.

If your organization is using the cloud and subscribing to SaaS, connecting back to a central data center to access applications is no longer efficient or cost-effective. SD-WAN provides a software-centric process that will give your organization optimal access to cloud applications from all remote locations. Your team can create a network that relates to the company’s business policies and promotes the long-term goals of the organization.

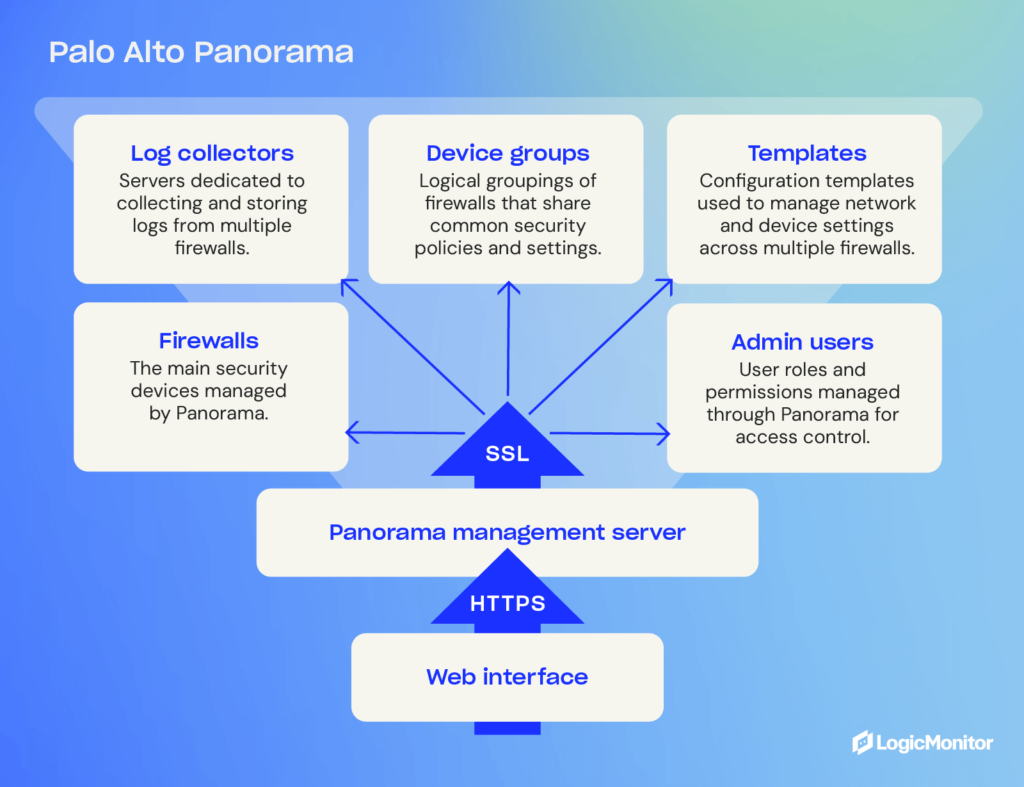

Palo Alto Panorama is an advanced management platform that streamlines the oversight of Palo Alto Networks firewalls. It offers centralized control, real-time insights, and simplified security management through a user-friendly web interface.

Implementing Palo Alto Panorama reduces administrator workload by building a real-time dashboard where you can monitor all of your IT operations in one place. It also alerts users of an impending threat so that they can protect themselves before an attack penetrates their network.

You can customize this centralized management to analyze and report specific logistics of interest to your company. It is a network security system that will enhance your security and protect your company.

The role of Palo Alto Panorama in network security

Palo Alto Panorama is essential to your company’s network security, as it gives you an easy way to protect sensitive information. You can manage all your firewall gateways, even in different regions. Since next-generation firewalls prevent unauthorized access, they must be managed effectively to maintain network security.

By using Palo Alto Networks Panorama, users can avoid duplication of work on their network security, especially if they need similar configurations on multiple firewalls—it continuously monitors the status of these firewalls. Palo Alto Panorama is excellent for large companies that have numerous firewall systems.

Palo Alto Panorama also monitors hardware firewalls, tracking traffic to and from all devices connected to the network. This monitoring helps enforce access controls and security policies, ensuring the network boundary remains secure.

A centralized management interface allows a company to observe, control, and review every firewall it wants to monitor. Palo Alto Panorama is crucial for businesses that must continuously monitor their network security, ensuring it operates correctly and preventing cyber-attacks or data center breaches.

How Palo Alto Panorama enhances network security

On top of the benefits mentioned above, Palo Alto Panorama has several benefits that help enhance network security, such as:

- Centralized updates: Allow simultaneous updates to the firewall and network settings for improved efficiency

- Automation: Automate specific processes for monitoring and updating specific security factors

- Comprehensive overview: Get a more complete view of your network security state and how it performs

- Customization: Use specific features to monitor parameters tailored to your needs instead of using pre-defined configurations

Features of Palo Alto Panorama

Palo Alto Panorama offers several features, including:

- Manages all firewalls and security tools

- Ensures consistency in firewall, threat prevention, URL filtering, and user identification rules

- Provides visibility into network traffic

- Offers actionable insights when threats are detected

- Identifies malicious behavior, viruses, malware, and ransomware

- Organizes firewall management with preconfigured templates for efficiency

- Implements the latest security updates with a single update

- Maintains a consistent web-based interface

- Performs analysis, reporting, and forensics on stored security data

Benefits of using Palo Alto Panorama

Palo Alto Panorama provides a centralized management system that simplifies network security management. Key benefits include:

- Monitors and updates applications automatically and efficiently

- Configures firewalls by group for simultaneous updates or changes

- Enables quick response to network threats

- Scales easily to grow with your company

- Supports additional services like data loss prevention and web protection

- Reduces time spent on network security by up to 50%

- Streamlines network security operations for better efficiency

- Adds an extra layer of security for more consistent management

How to set up and configure Palo Alto Panorama

You can set up Palo Alto Panorama by following a few simple steps. Use the small guide below to deploy it in your organization and get it ready to customize for your unique needs.

- Installation: Read the minimum requirements for Panorama and find dedicated hardware or virtual appliances that meet those specifications. Deploy on your chosen environment once chosen.

- Initial configuration: Connect to the Panorama web interface (192.168.1.1 by default). Set up the system settings (like IP address, DNS, and NTP) and activate the licenses. Then, update the software to the latest version.

- Set up managed devices: Connect your Palo Alto Network firewalls and other devices to Panorama and verify the device management connection.

- Create device groups and templates: Organize firewalls into logical groups. Once done, create configuration templates to ensure consistent policies across devices.

- Define policies: Define policies—such as NAT rules—and other device configurations. Use Panorama to push your configuration to managed devices.

- Configure logging and reporting: Configure log collector settings based on your organization’s needs. Set up custom reports and alerts to get notifications about potential issues.

- Set up User Access Controls (UAC): Create administrative accounts with proper permissions to control access to Panorama. Increase security by using multi-factor authentication.

- Test and verify: Conduct thorough testing to ensure all firewalls are properly managed. Check individual devices to verify policies are applied across the network.

Panorama Management Architecture

Panorama is a centralized management platform that offers simplified policy deployment, visibility and control over multiple firewalls within an organization. It offers a hierarchical structure for managing firewalls, with the ability to create templates and push policies across all devices, reducing the risk of misconfiguration and ensuring consistent security.

Device Groups

Device groups are logical groupings of firewalls within an organization that share similar characteristics. They can be created based on location, function or department.

These groups allow administrators to apply policies, templates and configurations to multiple firewalls simultaneously, saving time and effort. Device groups also provide a way to logically organize firewall management tasks and delegate responsibilities to different teams.

Templates

Templates in Panorama are preconfigured sets of rules and settings that can be applied to device groups or individual devices. This allows for consistent policy enforcement across all managed firewalls.

Templates can be created for different purposes, such as network security, web filtering, or threat prevention. They can also be customized to meet the specific needs of each device group or individual firewall. This level of flexibility and control ensures that policies are tailored to the unique requirements of each network segment.

Advanced capabilities of Palo Alto Panorama

Palo Alto Panorama offers a range of advanced features that make it useful for a range of applications and work environments. These capabilities can help your organization address specific security challenges and compliance requirements.

Some features you may find useful include:

- Application-layer visibility: Get deep insights into application usage across the network to gain a more granular level of control and policy enforcement

- Threat intelligence: Integrate with external threat intelligence feeds to boost detection and response capabilities

- Action automation: Automatic actions based on specific security events to lock down threats and improve incident response time

- Cloud support: Support both on-prem and cloud integration, allowing your organization to enforce consistent policies in every environment

- API support: Extensive API capabilities to integrate with other security tools to enhance capabilities, reporting, and automation

- Log correlation: Advanced log analysis to identify complex attack patterns across multiple devices

These features allow companies in any industry to meet their security requirements. Here are a few examples of how specific industries can put them to use.

Finance

Financial industries have strict requirements to protect customer data. They are required to comply with privacy rules like PCI DSS and OCX and held accountable if they don’t. Financial companies can use Palo Alto to detect potential intrusions that put customer records and funds at risk. It also helps segment specific banking services to put the most strict policies on the data that matters most.

Healthcare