There’s a common misconception in IT operations that mastering DevOps, AIOps, or MLOps means you’re “fully modern.”

But these aren’t checkpoints on a single journey to automation.

DevOps, MLOps, and AIOps solve different problems for different teams—and they operate on different layers of the technology stack. They’re not stages of maturity. They’re parallel areas that sometimes interact, but serve separate needs.

And now, a new frontier is emerging inside IT operations itself: Agentic AIOps.

It’s not another dashboard or a new methodology. It’s a shift from detection to autonomous resolution—freeing teams to move faster, spend less time firefighting, and focus on what actually moves the business forward.

In this article, we’ll break down:

- What DevOps, MLOps, AIOps, and agentic AIOps actually mean

- How they fit into modern IT (and where they don’t overlap)

- Why agentic AIOps marks a transformational leap for IT operations

Let’s start by understanding what each “Ops” term means on its own.

Why “Ops” Matters in IT Today

Modern IT environments are moving targets. More apps. More data. More users. More cloud. And behind it all is a patchwork of specialized teams working to keep everything running smoothly.

Each “Ops” area (DevOps, MLOps, AIOps, and now agentic AIOps) emerged to solve a specific bottleneck in how systems are built, deployed, managed, and scaled, and in how different technology professionals interact with them.

Notably, they aren’t layers in a single stack. They aren’t milestones on a maturity curve. They are different approaches, designed for different challenges, with different users in mind.

- DevOps bridges development and operations to accelerate application delivery.

- MLOps operationalizes the machine learning lifecycle at scale.

- AIOps brings intelligence into IT incident management and monitoring.

- Agentic AIOps pushes operations further—moving from insights to autonomous action.

Understanding what each “Ops” area does—and where they intersect—is essential for anyone running modern IT. Because if you’re managing systems today, odds are you’re already relying on several of them.

And if you’re planning for tomorrow, it’s not about stacking one on top of the other. It’s about weaving them together intelligently, so teams can move faster, solve problems earlier, and spend less time stuck in reactive mode.

DevOps, MLOps, AIOps, and Agentic AIOps: Distinct Terms, Different Challenges

Each “Ops” area emerged independently, to solve different challenges at different layers of the modern IT stack. They’re parallel movements in technology—sometimes overlapping, sometimes interacting, but ultimately distinct in purpose, users, and outcomes.

Here’s how they compare at a high level:

| Term | Focus Area | Primary Users | Core Purpose |
| --- | --- | --- | --- |
| DevOps | Application delivery automation | Developers, DevOps teams | Automate and accelerate code releases |
| MLOps | Machine learning lifecycle management | ML engineers, data scientists | Deploy, monitor, and retrain ML models |
| AIOps | IT operations and incident intelligence | IT Ops teams, SREs | Reduce alert fatigue, detect anomalies, predict outages |
| Agentic AIOps | Autonomous incident response | IT Ops, platform teams | Automate real-time resolution with AI agents |

What is DevOps?

DevOps is a cultural and technical movement that brings together software development and operations to streamline the process of building, testing, and deploying code. It replaced many slow, manual handoffs with automated pipelines. Practices and tools such as CI/CD, Infrastructure as Code (IaC), and container orchestration became the new standard.

Bringing these functions together led to faster releases, fewer errors, and more reliable deployments.

DevOps is not responsible for running machine learning (ML) workflows or managing IT incidents. Its focus is strictly on delivering application code and infrastructure changes with speed and reliability.

- Used by: Software developers, DevOps engineers

- Purpose: Automate and accelerate the software delivery pipeline

- Key Tools: Jenkins, GitLab CI/CD, Terraform, Kubernetes

Why DevOps Matters:

DevOps automates the build-and-release cycle. It reduces errors, accelerates deployments, and helps teams ship with greater confidence and consistency.

How DevOps Interacts with Other Ops:

- MLOps adapts DevOps principles—like CI/CD and pipeline automation—to machine learning workflows.

- AIOps consumes the telemetry—metrics, events, logs, and traces—that DevOps pipelines generate to power incident detection and analysis.

What is MLOps?

As machine learning moved from research labs into enterprise production, teams needed a better way to manage it at scale. That became MLOps.

MLOps applies DevOps-style automation to machine learning workflows. It standardizes how models are trained, validated, deployed, monitored, and retrained. What used to be a one-off, ad hoc process is now governed, repeatable, and production-ready.

MLOps operates in a specialized world. It’s focused on managing the lifecycle of ML models—not the applications they power, not the infrastructure they run on, and not broader IT operations.

MLOps helps data scientists and ML engineers move faster, but it doesn’t replace or directly extend DevOps or AIOps practices.

- Used by: ML engineers, data scientists

- Purpose: Automate and govern the ML model lifecycle

- Key Tools: MLflow, Kubeflow, TFX, SageMaker

Why MLOps Matters:

MLOps ensures machine learning models stay accurate, stable, and useful over time.
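As a minimal sketch of the "monitor and retrain" part of that lifecycle, the check below tracks prediction accuracy over a sliding window and signals when retraining looks warranted. It assumes ground-truth labels eventually become available for comparison. The class name `AccuracyMonitor`, the window size, and the 0.9 threshold are illustrative assumptions, not features of any tool named above.

```python
from collections import deque


class AccuracyMonitor:
    """Track recent prediction outcomes and signal when retraining may be needed."""

    def __init__(self, window: int = 100, min_accuracy: float = 0.9) -> None:
        self.outcomes = deque(maxlen=window)  # True means the prediction was correct
        self.min_accuracy = min_accuracy

    def record(self, predicted, actual) -> None:
        self.outcomes.append(predicted == actual)

    def accuracy(self) -> float:
        # With no observations yet, report 1.0 so an empty window never triggers.
        if not self.outcomes:
            return 1.0
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        # Decide only once the window is full, so a few early misses do not trigger.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.min_accuracy
```

In practice a pipeline would likely call `needs_retraining()` on a schedule and start a retraining job when it returns `True`. Real deployments often watch input drift as well as accuracy.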

How MLOps Interacts with Other Ops:

- Adapts DevOps principles, borrowing ideas like pipeline automation and versioning for model management.

- Supports AIOps use cases by providing trained models that can detect patterns, anomalies, and trends across IT environments. MLOps and AIOps can work together, but they solve very different problems for different practitioners.

- MLOps is not an extension of DevOps, nor is it a prerequisite for AIOps. It addresses a unique set of needs and typically operates in its own pipeline and toolchain.

What is AIOps?

AIOps brought artificial intelligence directly into IT operations. It refers to software platforms that apply machine learning and analytics to IT operations data to detect anomalies, reduce alert noise, and accelerate root cause analysis. It helps IT teams manage the growing complexity of modern hybrid and cloud-native environments.

It marked a shift from monitoring everything to understanding what matters.

But even the most advanced AIOps platforms often stop short of action. They surface the problem, but someone still needs to decide what to do next. AIOps reduces the workload, but it doesn’t eliminate it.

- Used by: IT operations, SREs, NOC teams

- Purpose: Improve system reliability and reduce mean time to resolution (MTTR)

- Key Capabilities: Correlation engines, anomaly detection, predictive analytics

Why AIOps Matters:

AIOps gives IT operations teams a critical edge in managing complexity at scale.

By applying machine learning and advanced analytics to vast streams of telemetry data, it cuts through alert noise, accelerates root cause analysis, and helps teams prioritize what matters most.
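To make "cutting through alert noise" concrete, here is a deliberately simple sketch that groups alerts on the same resource into one incident when they arrive close together in time. It is a time-window heuristic rather than a machine learning model, and the `Alert` and `Incident` types and the 300-second window are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    timestamp: float  # seconds since epoch
    resource: str
    message: str


@dataclass
class Incident:
    resource: str
    alerts: list = field(default_factory=list)


def correlate(alerts, window_seconds: float = 300.0):
    """Group alerts on one resource that arrive within window_seconds of the previous one."""
    incidents = []
    open_incident = {}  # resource -> (incident, timestamp of its latest alert)
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        current = open_incident.get(alert.resource)
        if current and alert.timestamp - current[1] <= window_seconds:
            incident = current[0]  # still part of the ongoing incident
        else:
            incident = Incident(resource=alert.resource)
            incidents.append(incident)
        incident.alerts.append(alert)
        open_incident[alert.resource] = (incident, alert.timestamp)
    return incidents
```

Five raw alerts can collapse into two or three incidents this way. Production AIOps platforms typically correlate on topology and learned patterns as well, which this sketch does not attempt.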

How AIOps Interacts with Other Ops:

- Ingests telemetry from across the IT environment, including metrics, events, logs, and traces from systems managed by DevOps, but operates independently of DevOps workflows.

- May use machine learning models—whether built-in, third-party, or homegrown—to improve anomaly detection and predictions, but does not rely on an internal MLOps process or teams.

What is Agentic AIOps?

Agentic AIOps is the next evolution inside IT operations: moving from insight to action.

These aren’t rule-based scripts or rigid automations. Agentic AIOps uses AI agents that are context-aware, goal-driven, and capable of handling common issues on their own. Think scaling up resources during a traffic spike. Isolating a faulty microservice. Rebalancing workloads to optimize cost.

Agentic AIOps isn’t about replacing IT teams. It’s about removing the repetitive, low-value tasks that drain their time, so they can focus on the work that actually moves the business forward. With Agentic AIOps, teams spend less time reacting and more time architecting, scaling, and innovating. It’s not human vs. machine. It’s humans doing less toil—and more of what they’re uniquely great at.

- Used by: IT operations, SREs, NOC teams

- Purpose: Close the loop between detection and resolution; enable self-managing systems

- Key Capabilities: Intelligent automation, safe autonomy, policy-driven guardrails

Why Agentic AIOps Matters:

Agentic AIOps closes the loop between detection and resolution. It can scale resources during a traffic spike, isolate a failing service, or rebalance workloads to cut cloud costs, all without waiting on human input.
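The policy-driven guardrails listed above can be pictured as a check that runs before an agent acts. The sketch below is a hypothetical illustration: the `Action` and `Policy` types, the action names, and the "execute" or "escalate" outcomes are assumptions, not the interface of any specific product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    kind: str           # for example "scale_up" or "restart_service"
    target: str         # resource the action applies to
    magnitude: int = 1  # for example the number of instances to add


@dataclass(frozen=True)
class Policy:
    allowed_kinds: frozenset
    max_magnitude: int
    protected_targets: frozenset


def evaluate(action: Action, policy: Policy) -> str:
    """Return "execute", or "escalate" when the action falls outside the policy."""
    if action.kind not in policy.allowed_kinds:
        return "escalate"  # unknown or disallowed action type
    if action.target in policy.protected_targets:
        return "escalate"  # sensitive systems always need a human
    if action.magnitude > policy.max_magnitude:
        return "escalate"  # change is larger than the agent may make alone
    return "execute"
```

The design choice here is to default to escalation: anything the policy does not explicitly permit goes to a person, which keeps autonomy bounded to routine, low-risk actions.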

How Agentic AIOps Interacts with Other Ops:

- Extends AIOps capabilities, taking incident insights and acting on them autonomously.

- Operates on telemetry from across the IT environment, including systems built and managed with DevOps practices.

- May incorporate ML models to inform decision-making, whether those models are homegrown, third-party, or built into the platform.

Agentic AIOps is not a convergence of DevOps, MLOps, and AIOps. It is a visionary extension of the AIOps category—focused specifically on automating operational outcomes, not software delivery or ML workflows.

These “Ops” Areas Solve Different Problems—Here’s How They Overlap

Modern IT teams don’t rely on just one “Ops” methodology, and they don’t move through them in a straight line. Each “Ops” area solves a different part of the technology puzzle, for a different set of users, at a different layer of the stack.

- DevOps accelerates application delivery.

- MLOps manages the machine learning model lifecycle.

- AIOps brings intelligence into IT monitoring and incident management.

- Agentic AIOps pushes IT operations toward autonomous resolution.

They can overlap. They can support each other. But critically, they remain distinct—operating in parallel, not as steps on a single roadmap.

Here’s how they sometimes interact in a real-world environment:

DevOps and MLOps: Shared ideas, different domains

DevOps builds the foundation for fast, reliable application delivery. MLOps adapts some of those automation principles—like CI/CD pipelines and version control—to streamline the machine learning model lifecycle.

They share concepts, but serve different teams: DevOps for software engineers; MLOps for data scientists and ML engineers.

Example:

A fintech company uses DevOps pipelines to deploy new app features daily, while separately running MLOps pipelines to retrain and redeploy their fraud detection models on a weekly cadence.

AIOps: Using telemetry from DevOps-managed environments (and beyond)

AIOps ingests operational telemetry from across the IT environment, including systems managed via DevOps practices. It uses pattern recognition and machine learning (often built-in) to detect anomalies, predict issues, and surface root causes.

AIOps platforms typically include their own analytics engines; they don’t require enterprises to run MLOps internally.

Example:

A SaaS provider uses AIOps to monitor cloud infrastructure. It automatically detects service degradations across multiple apps and flags issues for the IT operations team, without depending on MLOps workflows.

Agentic AIOps: Acting on insights

Traditional AIOps highlights issues. Agentic AIOps goes further—deploying AI agents to make real-time decisions and take corrective action automatically. It builds directly on operational insights, not DevOps or MLOps pipelines. Agentic AIOps is about enabling true autonomous response inside IT operations.

Example:

A cloud platform experiences a sudden traffic spike. Instead of raising an alert for human review, an AI agent automatically scales up infrastructure, rebalances workloads, and optimizes resource usage—before users notice an issue.
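One way an agent might decide how far to scale in a scenario like this is a proportional rule: grow capacity in step with the ratio of observed load to target load, then clamp the result to safe bounds. This is the same general rule that the Kubernetes Horizontal Pod Autoscaler documents. The function name, parameters, and default bounds below are illustrative assumptions.

```python
import math


def desired_replicas(current: int, observed_load: float, target_load: float,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Proportional scaling: ceil(current * observed / target), clamped to bounds."""
    if current < 1 or target_load <= 0:
        raise ValueError("current must be >= 1 and target_load must be positive")
    raw = math.ceil(current * observed_load / target_load)
    # The clamp acts as a simple guardrail against runaway scaling.
    return max(min_replicas, min(max_replicas, raw))
```

For example, four instances running at 90% utilization against a 60% target would scale to six.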

Bottom Line: Understanding the “Ops” Landscape

DevOps, MLOps, AIOps, and Agentic AIOps aren’t milestones along a single maturity curve. They’re distinct problem spaces, developed for distinct challenges, by distinct teams.

In modern IT, success isn’t about graduating from one to the next; it’s about weaving the right approaches together intelligently.

Agentic AIOps is the next frontier specifically within IT operations: closing the loop from detection to real-time resolution with autonomous AI agents, freeing human teams to focus where they drive the most value.

Your tech stack is growing, and with it, the endless stream of log data from every device, application, and system you manage. It’s a flood—one growing 50 times faster than traditional business data—and hidden within it are the patterns and anomalies that hold the key to the performance of your applications and infrastructure.

But here’s the challenge you know well: with every log, the noise grows louder, and manually sifting through it is no longer sustainable. Miss a critical anomaly, and you’re facing costly downtime or cascading failures.

That’s why log analysis has evolved. AI-powered log intelligence isn’t just a way to keep up—it’s a way to get ahead. By detecting issues early, cutting through the clutter, and surfacing actionable insights, it’s transforming how fast-moving teams operate.

The stakes are high. The question is simple: are you ready to leave outdated log management behind and embrace the future of observability?

Why traditional log analysis falls short

Traditional log analysis methods struggle to keep pace with the complexities of modern IT environments. As organizations scale, outdated approaches relying on manual processes and static rules create major challenges:

- Overwhelming log volumes: Exponential growth in log data makes manual analysis slow and inefficient, delaying issue detection and resolution.

- Inflexible static rules: Predefined rules cannot adapt to dynamic workloads or detect previously unknown anomalies, leading to blind spots.

- Resource-intensive and prone to errors: Manual query matching requires significant time and effort, increasing the likelihood of human error.

These limitations become even more pronounced in multicloud environments, where resources are ephemeral, workloads shift constantly, and IT landscapes evolve rapidly. Traditional tools lack the intelligence to adapt, making it difficult to surface meaningful insights in real time.

How AI transforms log analysis

AI-powered log analysis addresses these shortcomings by leveraging machine learning and automation to process vast amounts of data, detect anomalies proactively, and generate actionable insights. Unlike traditional methods, AI adapts dynamically, ensuring organizations can stay ahead of performance issues, security threats, and operational disruptions.

The challenge of log volume and variety

If you’ve ever tried to make sense of the endless stream of log data pouring in from hundreds of thousands of metrics and data sources, you know how overwhelming it can be. Correlating events and finding anomalies across such a diverse and massive dataset isn’t just challenging—it’s nearly impossible with traditional methods.

As your logs grow exponentially, manual analysis can’t keep up. AI log analysis offers a solution, enabling you to make sense of vast datasets, identify anomalies as they happen, and reveal critical insights buried within the noise of complex log data.

So, what is AI log analysis?

AI log analysis builds on log analysis by using artificial intelligence and automation to simplify and interpret the increasing complexity of log data.

Unlike traditional tools that rely on manual processes or static rules, AI log analysis uses machine learning (ML) algorithms to dynamically learn what constitutes “normal” behavior across systems, proactively surfacing anomalies, pinpointing root causes in real time, and even preventing issues by detecting early warning signs before they escalate.

In today’s dynamic, multicloud environments—where resources are often ephemeral, workloads shift constantly, and SaaS sprawl creates an explosion of log data—AI-powered log analysis has become essential. An AI tool can sift through vast amounts of data, uncover hidden patterns, and find anomalies far faster and more accurately than human teams. And so, AI log analysis not only saves valuable time and resources but also ensures seamless monitoring, enhanced security, and optimized performance.

With AI log analysis, organizations can move from a reactive to a proactive approach, mitigating risks, improving operational efficiency, and staying ahead in an increasingly complex IT landscape.

How does it work? Applying machine learning to log data

The goal of any AI log analysis tool is to upend how organizations manage the overwhelming volume, variety, and velocity of log data, especially in dynamic, multicloud environments.

With AI, log analysis tools can proactively identify trends, detect anomalies, and deliver actionable insights with minimal human intervention. Here’s how machine learning is applied to log analysis tools:

Step 1 – Data collection and learning

AI log analysis begins by collecting vast amounts of log data from across your infrastructure, including applications, network devices, and cloud environments. Unlike manual methods that can only handle limited data sets, machine learning thrives on data volume. The more logs the system ingests, the better it becomes at identifying patterns and predicting potential issues.

To ensure effective training, models rely on real-time log streams to continuously learn and adapt to evolving system behaviors. For large-scale data ingestion, a data lake platform can be particularly useful, enabling schema-on-read analysis and efficient processing for AI models.
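Schema-on-read means raw lines are stored as they arrive and only interpreted when they are read. A rough sketch of that idea follows, assuming just two input shapes: JSON objects and a hypothetical plain-text layout of timestamp, level, and message. Real collectors handle many more formats.

```python
import json
import re

# Hypothetical plain-text layout: "<timestamp> <LEVEL> <message>"
PLAIN = re.compile(r"^(?P<timestamp>\S+)\s+(?P<level>[A-Z]+)\s+(?P<message>.*)$")


def parse_line(line: str) -> dict:
    """Interpret a raw log line at read time; unknown formats are kept, not dropped."""
    line = line.strip()
    if line.startswith("{"):
        try:
            record = json.loads(line)
            if isinstance(record, dict):
                return {**record, "format": "json"}
        except json.JSONDecodeError:
            pass  # not valid JSON after all; try the other formats
    match = PLAIN.match(line)
    if match:
        return {**match.groupdict(), "format": "plain"}
    # Keep unrecognized lines so later models can still learn from them.
    return {"format": "unparsed", "message": line}
```

Keeping unparsed lines rather than discarding them matters for the later steps: a format nobody anticipated is often exactly where a new anomaly shows up.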

Step 2 – Define normal ranges and patterns

Once enough log data has accumulated to show trends over time, the next step is to determine what falls within a “normal” range. This means identifying baseline trends across metrics such as usage patterns, error rates, and response times. The system can then detect deviations from these baselines without requiring manual rule-setting. Not every deviation is a problem: some anomalies are expected or even beneficial. The key is to establish a baseline and then interpret it in context.

In multicloud environments, where workloads and architectures are constantly shifting, this step ensures that AI log analysis tools remain adaptive, even when the infrastructure becomes more complex.
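A very simple version of a learned baseline is the mean and standard deviation of a metric's history, with a "normal" band a few standard deviations wide. The sketch below assumes that approach. The 3-sigma width and the names `Baseline` and `learn_baseline` are illustrative, and production tools generally use richer models that account for seasonality.

```python
import statistics
from dataclasses import dataclass


@dataclass(frozen=True)
class Baseline:
    mean: float
    stdev: float
    width: float = 3.0  # half-width of the normal range, in standard deviations

    @property
    def low(self) -> float:
        return self.mean - self.width * self.stdev

    @property
    def high(self) -> float:
        return self.mean + self.width * self.stdev

    def is_deviation(self, value: float) -> bool:
        # A deviation is only "outside normal"; whether it is bad needs context.
        return value < self.low or value > self.high


def learn_baseline(history, width: float = 3.0) -> Baseline:
    """Derive a normal range from historical values of one metric."""
    if len(history) < 2:
        raise ValueError("need at least two observations to estimate spread")
    return Baseline(statistics.fmean(history), statistics.stdev(history), width)
```

Note that `is_deviation` flags values on both sides of the band. An unusually low error rate is still a deviation, even though it may be good news.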

Step 3 – Deploy algorithms for proactive alerts

With established baselines, machine learning algorithms can monitor logs in real time, detecting anomalies that could indicate potential configuration issues, system failures, or performance degradation. These anomalies are flagged when logs deviate from expected behavior, such as:

- Unusual spikes in network latency that may signal resource constraints.

- New log patterns appearing for the first time, which may indicate an emerging issue.

- Rising error rates in application logs, which may indicate an impending outage or ongoing performance issues.

- A sudden increase in failed login attempts, which may suggest a security breach.

Rather than simply reacting to problems after they occur, machine learning enables predictive log analysis, identifying early warning signs and reducing Mean Time to Resolution (MTTR). This proactive approach supports real-time monitoring, capacity planning, and operational efficiency. It also leads to fewer outages, because healthier logs contain fewer errors, helping infrastructure remain resilient and optimized.

By continuously refining its understanding of system behaviors, machine learning-based log analysis eliminates the need for static thresholds and manual rule-setting, allowing organizations to efficiently manage log data at scale while uncovering hidden risks and opportunities.
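One of the signals listed above, a log pattern appearing for the first time, can be approximated by reducing each message to a template and remembering which templates have been seen. The sketch below masks variable parts with a few regular expressions. That is a simplification of how log-template mining usually works, and the mask list and class name are assumptions for illustration.

```python
import re

# Order matters: mask structured tokens before masking bare digits.
_MASKS = [
    (re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b"), "<ip>"),
    (re.compile(r"\b0x[0-9a-fA-F]+\b"), "<hex>"),
    (re.compile(r"\d+"), "<num>"),
]


def template(message: str) -> str:
    """Replace variable fields so messages with the same shape compare equal."""
    for pattern, token in _MASKS:
        message = pattern.sub(token, message)
    return message


class PatternTracker:
    """Remember seen templates and report the first occurrence of each."""

    def __init__(self) -> None:
        self.seen = set()

    def is_new(self, message: str) -> bool:
        key = template(message)
        if key in self.seen:
            return False
        self.seen.add(key)
        return True
```

Because the user ID is masked, "user 42 logged in" and "user 97 logged in" count as the same pattern, so only the first one is reported as new.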

Step 4 – Maintain accuracy with regular anomaly profile resets

Regularly resetting the log anomaly profile is essential for ensuring accurate anomaly detection and maintaining a relevant baseline as system behaviors evolve. If the profile is never reset, behavior that was once flagged as negative may never be flagged again for the remaining history of that log stream, because the system has already learned it. Resetting machine learning or anomaly algorithms allows organizations to test new log types or resources, validate alerts against anomalies or “never before seen” conditions, and reset specific resources or groups after a major outage to clear outdated anomalies.

Additional use cases include transitioning from a trial environment to production, scheduled resets to maintain accuracy on a monthly, quarterly, or annual basis, and responding to infrastructure changes, new application deployments, or security audits that require a fresh anomaly baseline.

To maximize effectiveness, best practices recommend performing resets at least annually to ensure anomaly detection remains aligned with current system behaviors. Additionally, temporarily disabling alert conditions that rely on “never before seen” triggers during a reset prevents unnecessary alert floods while the system recalibrates. A structured approach to resetting anomaly profiles ensures log analysis remains relevant, minimizes alert fatigue, and enhances proactive anomaly detection in dynamic IT environments.
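The reset-and-recalibrate behavior described above can be sketched as a profile that forgets what it has learned and then stays quiet while it relearns. The class below is hypothetical: a time-based quiet period is one possible way to model "temporarily disabling" the never-before-seen alerts, and the one-hour default is an arbitrary assumption.

```python
class AnomalyProfile:
    """Hypothetical anomaly profile with a reset and a post-reset quiet period."""

    def __init__(self, quiet_period: float = 3600.0) -> None:
        self.quiet_period = quiet_period  # seconds to suppress alerts after a reset
        self.known = set()
        self.reset_at = None

    def reset(self, now: float) -> None:
        """Forget all learned patterns and start recalibrating."""
        self.known.clear()
        self.reset_at = now

    def observe(self, pattern: str, now: float) -> bool:
        """Learn the pattern; return True only if a never-before-seen alert should fire."""
        first_time = pattern not in self.known
        self.known.add(pattern)
        relearning = (self.reset_at is not None
                      and now - self.reset_at < self.quiet_period)
        # During the quiet period the profile still learns, but does not alert.
        return first_time and not relearning
```

Patterns observed during the quiet period are learned silently, which avoids the alert flood that would otherwise follow a reset.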

Benefits of AI for log analysis

Raw log data is meaningless noise until transformed into actionable insights. Modern AI-powered log analysis delivers crucial advantages that fundamentally change how we handle system data:

Immediate impact

- Sort through data faster. AI automatically clusters and categorizes incoming logs, making critical information instantly accessible without manual parsing.

- Detect issues automatically. Unlike static thresholds that can’t keep up with changing environments, AI learns and adjusts in real time. It recognizes shifting network behaviors, so anomalies are detected as they emerge—even when usage patterns evolve.

- Only be alerted to important information. Alerts from logs, like many alerts in IT, are prone to “boy who cried wolf syndrome.” When a log analysis tool creates too many alerts, no single alert stands out as the cause of an issue, if there even is an issue at all. With AI, you can move towards only being alerted when something worth your attention is happening, clearing the clutter and skipping the noise.

- Detect anomalies before they create issues. In most catastrophic events, there’s typically a chain reaction that occurs because an initial anomaly wasn’t addressed. AI allows you to remove the cause, not the symptom.

Strategic benefits

- Know the root cause: AI doesn’t just flag an issue—it understands the context, helping you pinpoint the root cause before small issues escalate into major disruptions.

- Enhance security: Sensitive data is safeguarded with AI-enabled privacy features like anonymization, masking, and encryption. This not only protects your network but also ensures compliance with security standards.

- Allocate resources faster and more efficiently: By automating the heavy lifting of log analysis, AI frees up your team to focus on higher-priority tasks, saving both time and resources.
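The masking and anonymization mentioned under “Enhance security” can be illustrated with a small, rule-based sketch. It uses regular expressions rather than AI, covers only email addresses and IPv4 addresses, and replaces emails with a salted hash so the same user still correlates across lines. Strictly speaking, that is pseudonymization rather than full anonymization. The function names and the default salt are assumptions.

```python
import hashlib
import re

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
IPV4 = re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b")


def pseudonym(value: str, salt: str) -> str:
    """Stable, non-reversible token for a sensitive value."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return digest[:12]


def mask(line: str, salt: str = "change-me") -> str:
    """Mask emails (as stable pseudonyms) and IPv4 addresses in a log line."""
    line = EMAIL.sub(lambda m: f"<email:{pseudonym(m.group(), salt)}>", line)
    return IPV4.sub("<ip>", line)
```

The salt should be kept secret and set per environment. With a known salt, common email addresses could be recovered by hashing guesses.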

Measurable results

- Reduce system downtime. Quick identification of error sources leads to faster resolution and improved system reliability.

- Reduce noisy alerts. Regular anomaly reviews result in cleaner logs and more precise monitoring.

- Prevent issues proactively. Early detection of unusual patterns helps prevent minor issues from escalating into major incidents.

Why spend hours drowning in raw data when AI log analysis can do the hard work for you? It’s smarter, faster, and designed to keep up with the ever-changing complexity of modern IT environments. Stop reacting to problems—start preventing them.

How LM Logs uses AI for anomaly detection

When it comes to AI log analysis, one of the most powerful applications is anomaly detection. Real-time detection of unusual events is critical for identifying and addressing potential issues before they escalate. LM Logs, a cutting-edge AI-powered log management platform, stands out in this space by offering advanced anomaly detection features that simplify the process and enhance accuracy.

Let’s explore how LM Logs leverages machine learning to uncover critical insights and streamline log analysis.

To start, not every anomaly signals trouble; some simply reflect new or unexpected behavior. However, these deviations from the norm often hold the key to uncovering potential problems or security risks, making it critical to flag and investigate them. LM Logs uses machine learning to make anomaly detection more effective and accessible. Here’s how it works:

- Noise reduction: By filtering out irrelevant log entries, LM Logs minimizes noise, enabling analysts to focus on the events that truly matter.

- Unsupervised learning: Unlike static rule-based systems, LM Logs employs unsupervised learning techniques to uncover patterns and detect anomalies without requiring predefined rules or labeled data. This allows it to adapt dynamically to your environment and identify previously unseen issues.

- Highlighting unusual events: LM Logs pinpoints deviations from normal behavior, helping analysts quickly identify and investigate potential problems or security breaches.

- Contextual analysis: LM Logs combines infrastructure metric alerts and anomalies into a single view. This integrated approach streamlines troubleshooting, allowing operators to focus on abnormalities with just one click.

- Flexible data ingestion: Whether structured or unstructured, LM Logs can ingest logs in nearly any format and apply its anomaly detection analysis, ensuring no data is left out of the process.

By leveraging AI-driven anomaly detection, LM Logs transforms how teams approach log analysis. It not only simplifies the process but also ensures faster, more precise identification of issues, empowering organizations to stay ahead in an ever-evolving IT landscape.

Case Study: How AI log analysis helped teams respond to the 2024 CrowdStrike incident

In 2024, a faulty update to CrowdStrike’s Falcon security software caused a global outage, crashing millions of Windows machines. Organizations leveraging AI-powered log analysis through LM Logs were able to pinpoint the root cause and respond faster than traditional methods allowed, avoiding the chaos of prolonged outages.

Rapid identification

When the incident began, LM Logs anomaly detection flagged unusual spikes in log activity. The first anomaly, a surge of new, unexpected behavior, was linked directly to the push of the Falcon update. The second, far larger spike occurred as system crashes, reboots, and error logs flooded in, triggering monitoring alerts. By correlating these anomalies in real time, LM Logs immediately highlighted the faulty update as the source of the issue, bypassing lengthy war room discussions and saving IT teams critical time.

Targeted remediation

AI log analysis revealed that the update impacted all Windows servers where it was applied. By drilling into the affected timeslice and filtering logs for “CrowdStrike,” administrators could quickly identify the common denominator in the anomalies. IT teams immediately knew which servers were affected, allowing them to:

- Isolate problematic systems.

- Initiate targeted remediation strategies.

- Avoid finger-pointing between teams and vendors by quickly escalating the issue to CrowdStrike.

This streamlined approach ensured organizations could contain the fallout and focus on mitigating damage while awaiting a fix from CrowdStrike.
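The drill-down described above, narrowing to a time slice and filtering on a keyword to find the common denominator, can be sketched generically. This is not the LM Logs query interface. The record layout (dictionaries with `timestamp`, `host`, and `message` keys) and the function name are assumptions for illustration.

```python
from collections import Counter
from datetime import datetime


def affected_hosts(records, start: datetime, end: datetime, keyword: str) -> Counter:
    """Count matching log lines per host inside the [start, end) time slice."""
    needle = keyword.lower()
    hits = Counter()
    for record in records:
        in_slice = start <= record["timestamp"] < end
        # Case-insensitive match so "CrowdStrike" and "crowdstrike" both count.
        if in_slice and needle in record["message"].lower():
            hits[record["host"]] += 1
    return hits
```

The keys of the returned counter give the list of hosts to isolate, and `hits.most_common()` orders them by how many matching lines each produced.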

Learning in progress

One of the most remarkable aspects of this case was the machine learning in action. For instance:

- LM Logs flagged the first occurrence of the system reboot error, “the system has rebooted without cleanly shutting down first,” as an anomaly.

- Once this behavior became repetitive, the system recognized it as learned behavior and stopped flagging it as an anomaly, allowing teams to focus on new, critical issues instead.

This adaptive capability highlights how AI log analysis evolves alongside incidents, prioritizing the most pressing data in real time.

Results

Using LM Logs, IT teams quickly:

- Pinpointed the root cause of the outage.

- Determined the scope of the impact across servers.

- Avoided wasting valuable time and resources on misdirected troubleshooting.

In short, AI log analysis put anomaly detection at the forefront, turning what could have been days of confusion into rapid, actionable insights.

AI log analysis is critical for modern IT

In today’s multicloud environments, traditional log analysis simply can’t keep up with the volume and complexity of data. AI solutions have become essential, not optional. They deliver real-time insights, detect anomalies before they become crises, and enable teams to prevent issues rather than just react to them.

The CrowdStrike incident of 2024 demonstrated clearly how AI log analysis can transform crisis response—turning what could have been days of debugging into hours of targeted resolution. As technology stacks grow more complex, AI will continue to evolve, making log analysis more intelligent, automated, and predictive.

Organizations that embrace AI log analysis today aren’t just solving current challenges—they’re preparing for tomorrow’s technological demands. The question isn’t whether to adopt AI for log analysis, but how quickly you can integrate it into your operations.

Every minute of system downtime costs enterprises a minimum of $5,000. With IT infrastructure growing more complex by the day, companies are put at risk of even greater losses.

Adding insult to injury, traditional operations tools are woefully out of date. They can’t predict failures fast enough. They can’t scale with growing infrastructure. And they certainly can’t prevent that inevitable 3 AM crisis—the one where 47 engineers and product managers flood the war room, scrambling through calls and documentation to resolve a critical production issue.

Agentic AIOps flips the script. Unlike passive monitoring tools, it actively hunts down potential failures before they impact your business. It learns. It adapts. And most importantly, it acts—without waiting for human intervention.

This blog will show you how agentic AIOps transforms IT from reactive to predictive, why delaying implementation could cost millions, and how platforms like LogicMonitor Envision—the core observability platform—and Edwin AI can facilitate this transformation.

You’ll learn:

- What agentic AIOps is

- The core components driving agentic AIOps

- How agentic AIOps works

- A step-by-step guide to implementing agentic AIOps

- How agentic AIOps compares to traditional AIOps and related concepts

- Real-world use cases where agentic AIOps delivers measurable value

- The key benefits of agentic AIOps

- How LogicMonitor enables agentic AIOps success

What is agentic AIOps?

Agentic AIOps redefines IT operations by combining generative AI and agentic AI with cross-domain observability to autonomously detect, diagnose, and resolve infrastructure issues.

For IT teams floundering in alerts, juggling tools, and scrambling during incidents, this shift is transformative. Unlike traditional tools that merely detect issues, agentic AIOps understands them. It doesn’t just send alerts—it actively hunts down root causes across your entire IT ecosystem, learning and adapting to your environment in real time.

Agentic AIOps is more than a monitoring tool; it’s a paradigm shift. It unifies observability, resolves routine issues automatically, and surfaces strategic insights your team would otherwise miss. This is achieved through:

- Operating autonomously, learning and adapting in real time.

- Unifying observability across the entire infrastructure, minimizing blind spots.

- Automatically resolving routine issues, while surfacing critical insights.

With its zero-maintenance architecture, there’s no need for constant rule updates or alert tuning. The generative interface simplifies troubleshooting by transforming complex issues into actionable steps and clear summaries.

Agentic AIOps isn’t just a tool—it’s essential for the future of IT operations.

Why is agentic AIOps important?

As IT systems grow more complex—spanning hybrid environments, cloud, on-premises, and third-party services—the challenges of managing them multiply. Data gets scattered across platforms, causing fragmentation and alert overload.

Traditional AIOps can’t keep up. Static rules and predefined thresholds fail to handle the dynamic nature of modern IT. These systems:

- Require constant manual tuning

- Struggle to connect disparate data

- Are reactive, not proactive

As a result, IT teams waste time piecing together data, hunting down issues, and scrambling to prevent cascading failures. Every minute spent is costly.

Agentic AIOps changes that. By shifting to a proactive approach, it automatically detects and resolves issues before they escalate. This not only reduces downtime but also cuts operational costs.

With agentic AIOps, IT teams are freed from routine firefighting and can focus on driving innovation. By unifying observability and automating resolutions, it removes the noise, enhances efficiency, and supports smarter decision-making.

| Traditional AIOps | Agentic AIOps |
| --- | --- |
| Relies on static rules | Learns and adapts in real time |
| Requires constant updates to rules and thresholds | Zero-maintenance |
| Data is often siloed and hard to connect | Comprehensive view across all systems |
| Reactive | Proactive |
| Time-consuming troubleshooting | Actionable, clear next steps |
| Teams are overwhelmed with alerts and firefighting | Automates routine issue resolution, freeing teams for higher-value tasks |
| Struggles with cross-functional visibility | Cross-tool integration |
| Noisy alerts | Filters out noise |

Key components of agentic AIOps

Enterprise IT operations are trapped in a costly paradox: despite pouring resources into monitoring tools, outages continue to drain millions, and digital transformation often falls short. The key to breaking this cycle lies in two game-changing components that power agentic AIOps:

Generative AI and agentic AI power autonomous decision-making

Agentic AIOps is powered by the complementary strengths of generative AI and agentic AI.

While generative AI creates insights, content, and recommendations, agentic AI takes the critical step of making autonomous decisions and executing actions in real-time. Together, they enable a level of proactive IT management previously beyond reach.

Here’s how the two technologies work in tandem:

- Generative AI: This component generates meaningful content from raw data, such as plain-language summaries, root cause analyses, and step-by-step guides for remediation. It transforms complex technical data into easily digestible insights and recommendations. In short, generative AI clarifies the situation, offering valuable context and potential solutions.

- Agentic AI: Once insights are generated by the system, agentic AI takes over. It doesn’t simply offer suggestions; it actively makes decisions and implements them based on real-time data. This allows the system to autonomously resolve issues, such as rolling back configurations, scaling resources, or initiating failovers without human intervention.

By combining the strengths of both, agentic AIOps transcends traditional IT monitoring. It enables the system to shift from a reactive stance—where IT teams only respond to problems—to a proactive approach where it can predict and prevent issues before they affect operations.

Why this matters

Instead of simply alerting IT teams when something goes wrong, generative AI sifts through data to uncover the underlying cause, offering clear, actionable insights. For example, if an application begins to slow down, generative AI might pinpoint the bottleneck, suggest the next steps, and even generate a root cause analysis.

But it’s agentic AI that takes the reins from there, autonomously deciding how to respond—whether by rolling back a recent update, reallocating resources, or triggering a failover to ensure continuity.

This ability to act as well as detect reduces downtime, cuts operational costs, and enhances system reliability. IT teams are freed from the constant cycle of firefighting and can instead manage and prevent issues before they impact business operations.
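The handoff described above, where analysis produces a diagnosis and an agent chooses a response, can be sketched as a small decision function. This is an illustrative sketch only, not how any particular platform works: the `Diagnosis` fields, the `Action` names, the ordering of the checks, and the confidence cutoff are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ROLLBACK = "roll back the recent change"
    SCALE_OUT = "add capacity"
    FAILOVER = "fail over to standby"
    ESCALATE = "hand off to a human"


@dataclass
class Diagnosis:
    """Hypothetical output of the analysis step."""
    summary: str
    recent_change: bool     # was a deployment or config change correlated?
    saturation: float       # resource utilization, 0.0 to 1.0
    primary_healthy: bool   # is the primary instance still responding?
    confidence: float       # how sure the analysis is, 0.0 to 1.0


def choose_action(d: Diagnosis, min_confidence: float = 0.8) -> Action:
    """Pick a remediation; fall back to a human when unsure."""
    if d.confidence < min_confidence:
        return Action.ESCALATE
    if not d.primary_healthy:
        return Action.FAILOVER
    if d.recent_change:
        return Action.ROLLBACK
    if d.saturation > 0.9:
        return Action.SCALE_OUT
    return Action.ESCALATE


# Hypothetical diagnosis for the slow-application example.
slow_app = Diagnosis(
    summary="Checkout latency rose after the latest config push",
    recent_change=True,
    saturation=0.55,
    primary_healthy=True,
    confidence=0.93,
)
print(choose_action(slow_app).value)
```

The escalation branch is a design choice in this sketch: when the diagnosis is uncertain or matches no known remedy, the decision goes to a person rather than to an automated action.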

Cross-domain observability provides complete operational visibility

Fragmented visibility creates significant business risks, but cross-domain observability mitigates these by integrating data across all IT environments—cloud, on-prem, and containerized—while breaking down silos and providing real-time, actionable insights. This capability is essential for agentic AIOps, transforming IT from a reactive cost center to a proactive business driver.

Here’s how it works:

- Data integration: Cross-domain observability connects structured data (like metrics and logs) with unstructured data (such as team conversations and incident reports) into a unified stream, ensuring no critical data is missed. This complete integration empowers agentic AIOps to detect and resolve issues across your entire IT ecosystem without human intervention.

- Dynamic response: Unlike traditional systems that wait for manual adjustments, agentic AIOps continually adapts to evolving conditions in real-time. Through intelligent event correlation and predictive modeling, it autonomously adjusts operations to mitigate risks as they arise.

With agentic AIOps, you gain what traditional IT operations can’t offer: autonomous, intelligent operations that scale with your business, delivering both speed and efficiency.

Why this matters

Cross-domain observability is essential to unlocking the full potential of agentic AIOps. It goes beyond data collection by providing real-time insights into the entire IT landscape, integrating both structured and unstructured data into a unified platform. This gives agentic AIOps the context it needs to make swift, autonomous decisions and resolve issues without manual oversight.

By minimizing blind spots, offering real-time system mapping, and providing critical context for decision-making, it enables agentic AIOps to act proactively, preventing disruptions before they escalate. This shift from reactive to intelligent, autonomous management creates a resilient and scalable IT environment, driving both speed and efficiency.

How does agentic AIOps work?

Agentic AIOps simplifies complex IT environments by processing data across the entire infrastructure. It uses AI to detect, diagnose, and predict issues, enabling faster, smarter decisions and proactive management to optimize performance and reduce downtime.

Comprehensive data integration

Modern IT infrastructures generate an overwhelming amount of data, from application logs to network metrics and security alerts. Agentic AIOps captures and integrates both structured (metrics, logs, traces) and unstructured data (like incident reports and team communications) across all operational domains. This unified, cross-domain visibility ensures no area is overlooked, eliminating blind spots and offering a comprehensive, real-time view of your entire infrastructure.
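One way to picture this integration is as several time-ordered feeds merged into a single timeline. The sketch below is a simplified assumption, not a description of any product's pipeline: the `Event` shape, the source names, and the sample records are hypothetical.

```python
import heapq
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Event:
    timestamp: datetime
    source: str   # e.g. "metrics", "logs", "chat"
    kind: str     # "structured" or "unstructured"
    body: str


def unify(*streams):
    """Merge several time-sorted event streams into one ordered timeline."""
    return list(heapq.merge(*streams, key=lambda e: e.timestamp))


def at(hhmm):
    """Build a timestamp on an arbitrary example date."""
    return datetime.fromisoformat(f"2025-01-01T{hhmm}:00")


# Hypothetical feeds, each already sorted by time.
metrics = [
    Event(at("09:00"), "metrics", "structured", "db_latency_ms=40"),
    Event(at("09:05"), "metrics", "structured", "db_latency_ms=95"),
]
logs = [Event(at("09:04"), "logs", "structured", "config v42 applied")]
chat = [Event(at("09:06"), "chat", "unstructured", "Anyone else seeing slow checkouts?")]

timeline = unify(metrics, logs, chat)
for event in timeline:
    print(event.timestamp.time(), event.source, event.body)
```

Read in order, the merged timeline puts the configuration change directly before the latency jump and the first user report, which is the kind of context a siloed view would hide.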

Real-time intelligent analysis

While traditional systems bombard IT teams with alerts, agentic AIOps uses generative and agentic AI to go beyond simply detecting patterns. It processes millions of data points per second, predicting disruptions before they occur. With continuous, autonomous learning, it adapts to changes without needing manual rule adjustments, offering smarter insights and more precise solutions.

Actionable intelligence generation

Unlike standard monitoring tools, agentic AIOps doesn’t just flag problems—it generates actionable, AI-powered recommendations. Using large language models (LLMs), it provides clear, contextual resolutions in plain language, easily digestible by both technical and non-technical users. Retrieval-augmented generation (RAG) ensures these insights are drawn from the most up-to-date and relevant data.

Autonomous resolution

This is where agentic AIOps stands apart: when it detects an issue, it takes action. Whether it’s scaling resources, rerouting traffic, or rolling back configurations, the system acts autonomously to prevent business disruption. This eliminates the need for manual intervention, allowing IT teams to focus on higher-level strategy.

Imagine that, during a product launch, the agentic AIOps system detects a 2% degradation in database performance. It could immediately correlate the issue with a recent change, estimate the potential impact at $27,000 per minute, and autonomously roll back the change. The system would then document the incident for future prevention. Within seconds, the problem would be resolved with minimal business impact.
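To put the $27,000-per-minute figure in perspective, the arithmetic below compares an automated rollback with a slower manual response. Only the per-minute cost comes from the scenario above; the 30-second and 20-minute resolution times are assumptions chosen purely for illustration.

```python
COST_PER_MINUTE = 27_000  # dollars per minute, from the scenario above


def exposure(minutes_unresolved, cost_per_minute=COST_PER_MINUTE):
    """Estimated business impact while the issue stays unresolved."""
    return minutes_unresolved * cost_per_minute


automated = exposure(0.5)  # assumed: rollback completes in 30 seconds
manual = exposure(20)      # assumed: manual triage and fix take 20 minutes

print(f"automated: ${automated:,.0f}")
print(f"manual:    ${manual:,.0f}")
print(f"avoided:   ${manual - automated:,.0f}")
```

Under these assumed timings the exposure is $13,500 for the automated path and $540,000 for the manual one.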

Agentic AIOps stands out by shifting IT operations from constant firefighting to proactive, intelligent management. By improving efficiency, reducing downtime, and bridging the IT skills gap, it ensures your IT infrastructure stays ahead of disruptions and scales seamlessly with your evolving business needs.

Implementing agentic AIOps

Implementing agentic AIOps requires a strategic approach to ensure that your IT operations become more efficient, autonomous, and proactive.

Here’s a step-by-step framework for getting started:

- Assess your current IT infrastructure: Begin by understanding the complexity and gaps in your existing systems. Identify the areas where you’re struggling with scalability, visibility, or reliability. This will help you pinpoint where agentic AIOps can drive the most impact.

- Identify pain points: Take a deep dive into the challenges your IT team faces daily. Whether it’s alert fatigue, delayed incident resolution, or inadequate cross-domain visibility, recognize where agentic AIOps can make the biggest difference. The goal is to streamline processes and reduce friction in areas that are stalling progress.

- Choose the right tools and platforms: Select a platform that integrates observability and AIOps. For example, LogicMonitor Envision offers an all-in-one solution to bring together cross-domain observability with intelligent operations. Additionally, consider tools like Edwin AI for AI-powered incident management to automate and prioritize issues based on business impact.

- Plan a phased implementation strategy: Start with a pilot project to test the solution in a controlled environment. Use this phase to refine processes, iron out any issues, and collect feedback. Then, roll out the solution in stages across different parts of the organization. This phased approach reduces risk and ensures smooth adoption.

- Monitor and refine processes: Once your solution is live, continuously monitor its impact on IT efficiency and business outcomes. Track key metrics such as incident resolution time, alert volume, and downtime reduction. Be prepared to adjust processes as needed to ensure maximum effectiveness.

- Foster a culture of innovation and agility: For agentic AIOps to succeed, it’s important to build a culture that values continuous improvement and agility. Encourage your team to embrace new technologies and adapt quickly to evolving needs. This mindset will optimize the value of agentic AIOps, ensuring your IT operations stay ahead of disruptions.
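The metrics named in the monitoring step, such as incident resolution time and alert volume, are straightforward to compute once incidents are recorded consistently. The following is a minimal sketch with hypothetical records and figures; the function names are illustrative and not part of any product API.

```python
from datetime import datetime, timedelta

# Hypothetical incident records: (opened, resolved).
incidents = [
    (datetime(2025, 1, 6, 9, 0), datetime(2025, 1, 6, 9, 45)),
    (datetime(2025, 1, 7, 14, 0), datetime(2025, 1, 7, 14, 15)),
    (datetime(2025, 1, 9, 2, 30), datetime(2025, 1, 9, 3, 30)),
]


def mean_time_to_resolve(records):
    """Average time between an incident opening and its resolution."""
    total = sum((resolved - opened for opened, resolved in records), timedelta())
    return total / len(records)


def percent_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100


mttr = mean_time_to_resolve(incidents)
print("mean time to resolve:", mttr)

# Hypothetical weekly alert volumes before and after the pilot.
print("alert volume change (%):", percent_change(before=1200, after=300))
```

Capturing a baseline for these numbers before the pilot starts makes the later comparison meaningful.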

Getting started is often the hardest step, especially with something as transformative as agentic AIOps. But you can’t afford to ignore the “why” behind the change. Without a clear plan, these innovations are just shiny tools that won’t stick. How you introduce agentic AIOps to your IT infrastructure is the difference between success and one more failed change initiative.

Comparing agentic AIOps to related concepts

When you’re diving into the world of IT operations, it’s easy to get lost in the sea of buzzwords. Terms like AIOps, DevOps, and ITSM can blur together, but understanding the distinctions is crucial for making informed decisions about your IT strategy. Let’s break down agentic AIOps and see how it compares to some of the most common concepts in the space.

Agentic AIOps vs. traditional AIOps

Traditional AIOps typically relies on predefined rules and static thresholds to detect anomalies or failures. When these thresholds are crossed, human intervention is often required to adjust or respond. It’s reactive at its core, often requiring manual adjustments to keep the system running smoothly.

On the other hand, agentic AIOps takes autonomy to the next level. It learns from past incidents and adapts automatically to changes in the IT environment. This means it can not only detect problems in real time but also act proactively, providing insights and recommendations without the need for manual intervention. It’s the difference between being reactive and staying ahead of potential issues before they become full-blown problems.
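The difference between a static threshold and a baseline that adapts can be shown in a few lines. This is a minimal sketch, not how any particular product detects anomalies: the rolling z-score technique, the window size, the cutoff, and the sample latency readings are all assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, stdev


def static_alerts(values, threshold):
    """Flag every reading above a fixed, hand-tuned threshold."""
    return [i for i, v in enumerate(values) if v > threshold]


def adaptive_alerts(values, window=5, z_limit=3.0):
    """Flag readings that deviate sharply from a rolling baseline.

    The baseline is the mean and standard deviation of the previous
    `window` readings, so it follows gradual shifts in normal behavior.
    """
    history = deque(maxlen=window)
    flagged = []
    for i, v in enumerate(values):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            # Skip a perfectly flat window, where sigma would be zero.
            if sigma > 0 and abs(v - mu) / sigma > z_limit:
                flagged.append(i)
        history.append(v)
    return flagged


# Hypothetical latency readings (ms): a slow upward drift, then one spike.
latency_ms = [100, 102, 101, 103, 104, 106, 107, 109, 110, 180, 112, 113]

print("static:  ", static_alerts(latency_ms, threshold=105))
print("adaptive:", adaptive_alerts(latency_ms))
```

On this sample the fixed threshold fires on seven readings once the drift crosses 105 ms, while the rolling baseline flags only the spike at index 9. A production system would also need to keep outliers from contaminating the baseline, which this sketch does not attempt.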

Agentic AIOps vs. DevOps

DevOps is all about breaking down silos between development and operations teams to speed up software delivery and improve collaboration. It focuses on automating processes in the development lifecycle, making it easier to release updates and maintain systems.

Agentic AIOps, while complementary to DevOps, adds another layer to the IT operations landscape. It enhances DevOps by automating and optimizing IT operations, providing real-time, intelligent insights that can drive more informed decision-making. Instead of just focusing on collaboration, agentic AIOps automates responses to incidents and continuously improves systems, allowing DevOps teams to focus more on innovation and less on firefighting.

Agentic AIOps vs. MLOps

MLOps focuses on managing the lifecycle of machine learning models, from training to deployment and monitoring. It’s designed to streamline machine learning processes and ensure that models perform as expected in real-world environments.

Agentic AIOps also uses machine learning but applies it in a different context. It doesn’t just manage models; it’s geared toward optimizing IT operations. By leveraging AI, agentic AIOps can automatically detect, respond to, and prevent incidents in your IT infrastructure. While MLOps focuses on the performance of individual models, agentic AIOps focuses on the larger picture—improving the overall IT environment through AI-driven automation.

Agentic AIOps vs. ITSM

ITSM (IT Service Management) is about ensuring that IT services are aligned with business needs. It focuses on managing and delivering IT services efficiently, from incident management to change control, and typically relies on human intervention to resolve issues and improve service delivery.

Agentic AIOps enhances ITSM by bringing automation and intelligence into the equation. While ITSM handles service management, agentic AIOps can automate the detection and resolution of incidents, improving efficiency and dramatically reducing resolution times. It makes IT operations smarter by predicting problems and addressing them before they impact users or business outcomes.

By comparing agentic AIOps to these related concepts, it becomes clear that it stands out as a transformative force in IT operations. While other systems may focus on specific aspects of IT management or software development, agentic AIOps brings automation, intelligence, and proactive management across the entire IT ecosystem—making it a game-changer for businesses looking to stay ahead in the digital age.

Agentic AIOps use cases

When it comes to implementing agentic AIOps, the possibilities are vast. From reducing downtime to driving proactive infrastructure management, agentic AIOps has the potential to transform IT operations across industries. Let’s dive into some specific use cases where this technology shines, showcasing how it can solve real-world problems and drive value for businesses.

Incident response and downtime reduction

One of the core strengths of agentic AIOps is its ability to detect performance degradation in real time. When an issue arises, agentic AIOps doesn’t wait for a human to notice the problem. It immediately analyzes the situation, correlates relevant data, and generates a root cause analysis. The system can then recommend solutions to restore performance before end users are affected. Because it works swiftly, downtime stays short and disruption to the business is minimal.

Predictive maintenance and asset management

Asset management can be a challenge when it comes to proactively monitoring IT infrastructure. Agentic AIOps addresses this by analyzing performance data and detecting early signs of degradation in hardware or software. By identifying these issues before they become critical, the system can suggest optimal maintenance schedules or even recommend parts replacements to prevent failures. This predictive capability helps reduce unplanned downtime and ensures smooth operations.

Security incident management

In today’s digital landscape, cybersecurity is more important than ever. Agentic AIOps plays a vital role in enhancing security by identifying unusual network activity that may indicate a potential threat. It can match this activity to known threats, isolate the affected areas, and provide step-by-step guides for IT teams to contain the threat. The system’s proactive approach reduces the likelihood of security breaches and accelerates the response time when incidents occur.

Digital transformation and IT modernization

As organizations modernize their IT infrastructure and embrace digital transformation, cloud migration becomes a key challenge. Agentic AIOps streamlines this process by analyzing dependencies, identifying migration issues, and even automating parts of the data migration process. By ensuring a smooth transition to the cloud, businesses can maintain operational continuity and achieve greater flexibility in their infrastructure.

Better customer experience

The customer experience often hinges on the reliability and performance of the underlying IT systems. Agentic AIOps monitors infrastructure to ensure optimal performance, identifying and resolving bottlenecks before they affect users. By optimizing resources and automating issue resolution, businesses can ensure a seamless user experience that builds customer satisfaction and loyalty.

Proactive infrastructure optimization

As organizations scale, managing cloud resources efficiently becomes more critical. Agentic AIOps continuously monitors cloud resource usage, identifying underutilized instances and recommending adjustments to workloads. By optimizing infrastructure usage, businesses can reduce costs, improve resource allocation, and ensure that their IT environment is always running at peak efficiency.
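Spotting underutilized instances can be as simple as comparing average utilization against a cutoff. The sketch below assumes hypothetical instance names, hourly CPU samples, and a 20% cutoff; a real assessment would also weigh memory, network, and peak load.

```python
from statistics import mean

# Hypothetical hourly CPU utilization samples (percent) per instance.
cpu_samples = {
    "web-1": [62, 70, 65, 71],
    "batch-7": [4, 6, 3, 5],
    "cache-2": [18, 22, 15, 19],
}


def underutilized(samples, cpu_limit=20.0):
    """Return instances whose average CPU sits below `cpu_limit` percent."""
    return sorted(
        name for name, values in samples.items() if mean(values) < cpu_limit
    )


print(underutilized(cpu_samples))
```

Here `batch-7` and `cache-2` fall under the cutoff and become candidates for downsizing or consolidation, while `web-1` does not.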

Hybrid and multi-cloud management

For companies using hybrid or multi-cloud environments, managing a complex IT ecosystem can be overwhelming. A hybrid observability platform gathers real-time data from on-premises systems and cloud environments, while agentic AIOps analyzes patterns, detects anomalies, and automates responses. Together, they deliver a unified, intelligent view of the entire infrastructure. With this comprehensive visibility, organizations can optimize resources across their IT landscape and ensure that security policies remain consistent, regardless of where their data or workloads reside.

Data-driven decision making

Agentic AIOps empowers IT teams with data-driven insights by aggregating and analyzing large volumes of performance data. This intelligence can then be used for informed decision-making, helping businesses with capacity planning, resource allocation, and even forecasting future infrastructure needs. By providing actionable insights, agentic AIOps helps organizations make smarter, more strategic decisions that drive long-term success.

These use cases illustrate just a fraction of what agentic AIOps can do. From improving operational efficiency to enhancing security, this technology can bring measurable benefits across many aspects of IT management. By proactively addressing issues, optimizing resources, and providing intelligent insights, agentic AIOps empowers organizations to stay ahead of disruptions and position themselves for long-term success in an increasingly complex IT landscape.

Benefits of agentic AIOps

Let’s face it: there’s no time for fluff when it comes to business decisions. If your IT operations aren’t running efficiently, it’s not just a minor inconvenience—it’s a drain on resources, a threat to your bottom line, and a barrier to growth. Agentic AIOps isn’t just about solving problems—it’s about preventing them, optimizing resources, and driving smarter business decisions. Here’s how agentic AIOps transforms your IT landscape and delivers measurable benefits.

Improved efficiency and productivity

In an age where time is money, agentic AIOps excels at cutting down the noise. By filtering alerts and reducing unnecessary notifications, the system helps IT teams focus on what truly matters, saving valuable time and resources. It also automates root cause analysis, enabling teams to resolve issues faster and boosting overall productivity. With agentic AIOps, your IT operations become leaner and more efficient, empowering teams to act with precision.
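A simple form of noise reduction is to collapse alerts that fire repeatedly on the same resource within a short window. The sketch below is one illustrative approach under stated assumptions, not the method any specific product uses: the tuple layout, the five-minute window, and the sample alerts are hypothetical.

```python
from datetime import datetime, timedelta


def group_alerts(alerts, window=timedelta(minutes=5)):
    """Collapse alerts on the same resource that arrive within `window`.

    `alerts` is an iterable of (timestamp, resource, message) tuples.
    Returns a list of groups, each a list of alerts in time order.
    """
    groups = []
    last_group = {}  # resource -> most recent group for that resource
    for alert in sorted(alerts, key=lambda a: a[0]):
        stamp, resource, _ = alert
        group = last_group.get(resource)
        if group is not None and stamp - group[-1][0] <= window:
            group.append(alert)
        else:
            group = [alert]
            groups.append(group)
            last_group[resource] = group
    return groups


t0 = datetime(2025, 1, 1, 3, 0)
raw = [
    (t0, "db-1", "high latency"),
    (t0 + timedelta(minutes=1), "db-1", "connection pool exhausted"),
    (t0 + timedelta(minutes=2), "web-3", "5xx rate up"),
    (t0 + timedelta(minutes=3), "db-1", "high latency"),
    (t0 + timedelta(minutes=30), "db-1", "high latency"),
]
grouped = group_alerts(raw)
print(len(raw), "alerts ->", len(grouped), "groups")
```

Five raw alerts become three groups here: the three closely spaced `db-1` alerts merge, while the `web-3` alert and the later `db-1` alert stay separate.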

Reduced incident risks

Every minute spent resolving critical incidents costs your business. Agentic AIOps significantly reduces response times for high-priority incidents (P0 and P1), ensuring that issues are identified, analyzed, and addressed swiftly. By preventing service disruptions and reducing downtime, agentic AIOps helps you maintain business continuity and minimize the impact of incidents on your operations.

Reduced war room time

When disaster strikes, teams often scramble into “war rooms” to fix the problem. These high-stress environments can drain energy and focus. Agentic AIOps streamlines this process by quickly diagnosing issues and providing actionable insights, reducing the need for lengthy, high-pressure meetings. With less time spent managing crises, your IT teams can redirect their focus to strategic, value-driving tasks that move the business forward.

Bridging the IT skills gap

The demand for specialized IT skills often exceeds supply, leaving organizations scrambling to fill critical positions. Agentic AIOps alleviates this challenge by automating complex tasks that once required deep expertise. With this level of automation, even teams with limited specialized skills can handle sophisticated IT operations and manage more with less. This ultimately reduces reliance on niche talent and ensures your IT team can operate at full capacity.

Cost savings

Cost control is always top of mind for any organization, and agentic AIOps delivers on this front. By automating routine tasks and improving response times, the platform helps reduce labor costs and increase overall productivity. Additionally, its ability to prevent costly outages and minimize downtime contributes to a more cost-effective IT operation, offering significant savings in the long run.

In short, agentic AIOps doesn’t just make IT operations more efficient—it transforms them into a proactive, intelligent force that drives productivity, reduces risks, and delivers lasting cost savings. In a world where the competition is fierce, this level of optimization gives organizations the edge they need to stay ahead and scale effortlessly.

How LogicMonitor enables agentic AIOps success

Let’s be honest for a moment: the path to operational excellence isn’t paved with half-measures. It’s paved with the right tools—tools that not only keep the lights on but that proactively prevent the lights from ever flickering.

LogicMonitor is one such tool that enables agentic AIOps to thrive. By integrating observability with intelligence, LogicMonitor creates the foundation for successful AIOps implementation, making your IT operations smarter, more agile, and more efficient.

LM Envision: Comprehensive observability across hybrid environments

When it comes to achieving true agentic AIOps success, visibility is everything. LM Envision provides comprehensive, end-to-end observability across your entire hybrid IT environment. It delivers real-time data collection and analysis, empowering proactive insights that help you stay ahead of issues before they escalate. As the foundation of your agentic AIOps strategy, LM Envision enables seamless integration, providing the visibility and insights needed to optimize system performance and reduce downtime.

The scalability and flexibility of LM Envision ensure that as your business grows and IT complexity increases, your ability to monitor and manage your infrastructure grows with it. Whether you’re operating on-premises, in the cloud, or in hybrid environments, LM Envision adapts, feeding your agentic AIOps system with the critical data it needs to function at peak performance. With LM Envision, you’re always a step ahead, shifting from reactive to proactive IT management and making smarter decisions based on real-time data.

Edwin AI: AI-powered incident management

In the world of agentic AIOps, speed and accuracy are paramount when it comes to incident management. That’s where Edwin AI comes in. As an AI-powered incident management tool, Edwin AI makes agentic AIOps possible by streamlining event intelligence, troubleshooting, and incident response. It automates critical processes, consolidating data from multiple sources to offer real-time incident summaries, auto-correlation of related events, and actionable insights—all while cutting through the noise.

With Edwin AI, teams no longer waste time dealing with irrelevant alerts. By filtering out the noise and presenting the most pertinent information, it speeds up incident resolution and minimizes downtime. One of its standout features is its ability to integrate with a variety of other tools, creating cross-functional visibility and enabling smarter decision-making.

Moreover, Edwin AI offers customizable models, ensuring that its insights are tailored to the unique needs of your organization. It simplifies complex technical details into plain language, enabling all team members—regardless of technical expertise—to understand the situation and take swift action. With Edwin AI, your teams can move faster, more confidently, and with greater precision, all while minimizing the risk of service disruption.

Together, LM Envision and Edwin AI form the ultimate platform for driving agentic AIOps success. By pairing observability with intelligent, autonomous incident management, these tools enable businesses to optimize operations, improve efficiency, and ultimately ensure a more proactive and resilient IT infrastructure.

Why enterprises must act now

Here’s the hard truth: if you don’t act now, you’ll fall behind. The future of IT operations is here, and it’s powered by agentic AIOps. The age of generative AI (GenAI) is reshaping everything, and companies that don’t harness its power risk being left in the dust.

Early adopters have the chance to redefine performance and cost efficiency. Agentic AIOps isn’t just about keeping up—it’s about staying ahead. Those who implement it today will not only meet the demands of tomorrow, they’ll shape them.

No more chasing buzzwords or empty promises. Organizations are looking for practical, scalable solutions that work. Agentic AI automates the routine so your teams can focus on what truly matters: innovation and strategic impact.

IT leaders know this: the future isn’t waiting. Adapt now or risk being irrelevant.

With the rapid growth of data, sprawling hybrid cloud environments, and ongoing business demands, today’s IT landscape demands more than troubleshooting. Successful IT leaders are proactive, aligning technology with business objectives to transform their IT departments into growth engines.

At our recent LogicMonitor Analyst Council in Austin, TX, Chief Customer Officer Julie Solliday led a fireside chat with IT leaders across healthcare, finance, and entertainment. Their insights highlight strategies any organization can adopt to turn IT complexity into business value. Here are five key takeaways:

1. Business value first: Align IT with core organizational goals

Rafik Hanna, SVP at Topgolf, emphasizes, “The number one thing is business value.” For Hanna, every tool, and every process, must directly enhance the player experience. As an entertainment destination, Topgolf’s success depends on delivering superior experiences that differentiate them from competitors and drive continued business growth. This focus on outcomes serves as a reminder for IT leaders to ask:

- How does this initiative impact our core business objectives? Every IT action should enhance the end-user experience, whether it’s for customers, clients, or internal users. At Topgolf, Hanna translates IT decisions directly to their “player experience,” ensuring every technology choice meets customer satisfaction and engagement goals.

- Are we measuring what matters? Key performance indicators (KPIs) should reflect business value, not just technical outputs. Hanna’s team, for instance, closely monitors engagement metrics to directly connect IT performance to customer satisfaction.

- Is the ROI on IT investments clear? Clear metrics and ROI assessments make the case for IT spending. For Hanna, measurable gains in customer satisfaction justify the IT budget, shifting it from a cost center to a driver of business value.

Executive insight: Aligning IT goals with organizational objectives not only secures executive buy-in but also positions IT as a strategic partner, essential to achieving broader company success.

2. Streamline your toolset: Consolidate for clarity and efficiency

Andrea Curry, a former Marine and Director of Observability at McKesson, inherited a landscape of 22 monitoring and management tools—each with overlapping functions and costs. Her CTO asked, “Why do we have so many tools?” she recalls. This sparked a consolidation effort from 22 to 5 essential solutions. Curry’s team reduced both complexity and redundancy, ultimately enhancing visibility and response time. Key lessons include:

- Inventory first: Conduct a comprehensive assessment of all current solutions and their roles. Curry’s team mapped out each tool’s purpose and cost, laying the groundwork for informed decisions.

- Eliminate redundancies: Challenge the necessity of every tool. Can one solution handle multiple functions? Curry found that eliminating overlapping tools streamlined support needs and freed resources for higher-value projects.

- Prioritize high-impact solutions: Retain tools that directly contribute to organizational goals. With fewer, more powerful tools, her team reduced noise and gained clearer insights into their environments.

Executive insight: Consolidating tools isn’t just about saving costs; it’s about building a lean, focused IT function that empowers staff to tackle higher-priority tasks, strengthening operational resilience.

3. Embrace predictive power: Harness AI for enhanced observability

With 13,000 daily alerts, Shawn Landreth, VP of Networking and NetDevOps at Capital Group, faced an overwhelming workload for his team. By implementing AI-powered monitoring with LogicMonitor Edwin AI, Capital Group’s IT team cut alerts by 89% and saved $1 million annually. Landreth’s experience underscores:

- AI is a necessity: Advanced AI tools are no longer a luxury but a necessity for managing complex IT environments. For Landreth, Edwin AI is transforming monitoring from reactive to proactive by detecting potential issues early.

- Proactive monitoring matters: AI-driven insights allow teams to maintain uptime and reduce costly incidents by identifying and addressing potential failures before they escalate. This predictive capability saves time and empowers the team to focus on innovation.

- Reduce alert fatigue: AI filters out low-priority alerts, ensuring the team focuses on the critical few. In Capital Group’s case, reducing daily alerts freed up resources for high-value projects, enabling the team to be more strategic.
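To put the Capital Group figures in concrete terms, here is a small worked calculation. It assumes the 89% reduction applies evenly to the 13,000 daily alerts quoted above, which is a simplification; the function name is ours, not part of any product.

```python
DAILY_ALERTS = 13_000    # figure quoted in the article
REDUCTION_PERCENT = 89   # figure quoted in the article

def alerts_after_reduction(daily_alerts: int, reduction_percent: int) -> int:
    """Alerts left per day after a percentage reduction, using integer arithmetic."""
    suppressed = daily_alerts * reduction_percent // 100
    return daily_alerts - suppressed

remaining = alerts_after_reduction(DAILY_ALERTS, REDUCTION_PERCENT)
print(remaining)  # 1430
```

Under that assumption, the team goes from reviewing 13,000 alerts a day to roughly 1,430.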

Executive insight: Embracing AI-powered observability can streamline operations, enhance service quality, and lead to significant cost savings, driving IT’s value beyond technical performance to real business outcomes.

4. Stay ahead: Adopt new technology proactively

When Curry took on her role at McKesson, she transitioned from traditional monitoring to a comprehensive observability model. This strategic shift from a reactive approach to proactive observability reflects the adaptive mindset required for modern IT leadership. Leaders aiming to stay competitive should consider:

- Continuously upskill: Keep pace with evolving technologies to ensure the team’s relevance and competitiveness. Curry regularly brings in training on emerging trends to ensure her team stays at the leading edge of technology.

- Experiment strategically: Curry pilots promising new technologies to assess their value before large-scale deployment. This experimental approach enables a data-backed strategy for technology adoption.

- Cultivate a culture of innovation: Foster an environment where team members feel encouraged to explore and embrace new ideas. Curry’s team has adopted a mindset of continual improvement, prioritizing innovation in their daily workflows.

Executive insight: Proactive technology adoption positions IT teams as innovators, empowering them to drive digital transformation and contribute to competitive advantage.

5. Strategic partnerships: Choose vendors invested in your success

Across the board, our panelists emphasized the importance of strong relationships. Landreth puts it simply, “Who’s going to roll their sleeves up with us? Who’s going to jump in for us?” The right partnerships can transform IT operations by aligning vendors with organizational success. When evaluating partners, consider:

- Shared goals: A successful vendor relationship aligns with your organizational vision, whether for scalability, cost-efficiency, or innovation. Landreth’s team prioritizes vendors that actively support Capital Group’s long-term objectives.

- Proactive support: A valuable partner offers prompt, ongoing support, not just periodic check-ins. For example, Curry’s vendors provide tailored, in-depth support that addresses her team’s specific needs.

- Ongoing collaboration: Partnerships that prioritize long-term success over quick wins foster collaborative innovation. Vendors who integrate their solutions with internal processes build stronger, more effective alliances.

Executive insight: Building partnerships with committed vendors drives success, enabling IT teams to achieve complex objectives with external expertise and support.

Wrapping up

Our panelists’ strategies—from tool consolidation to AI-powered monitoring and strategic partnerships—all enable IT teams to move beyond reactive firefighting into a proactive, value-driven approach.

By implementing these approaches, you can transform your IT organization from a cost center into a true driver of business value, turning the complexity of modern IT into an opportunity for growth and innovation.

The cloud has revolutionized the way businesses operate. It allows organizations to access computing resources and data storage over the internet instead of relying on on-premises servers and infrastructure. While this flexibility is one of the main benefits of using the cloud, it can also create security and compliance challenges for organizations. That’s where cloud governance comes in.

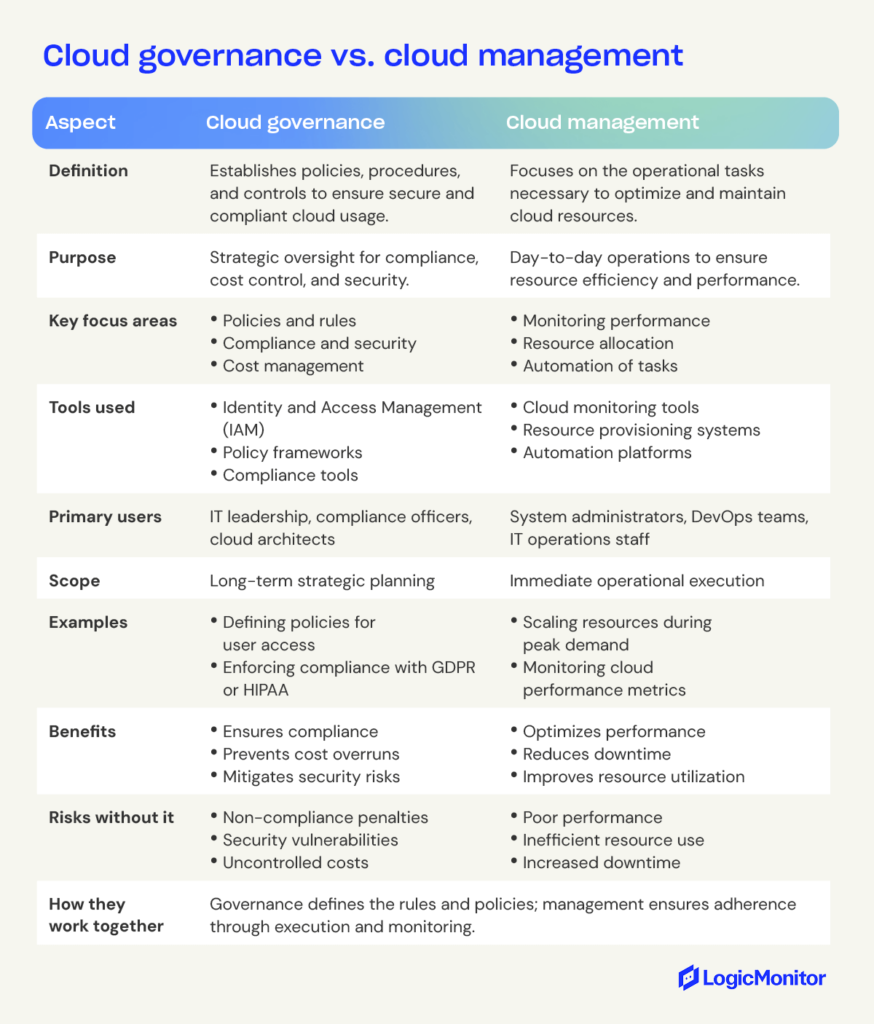

Cloud governance establishes policies, procedures, and controls to ensure cloud security, compliance, and cost management. In contrast, cloud management focuses on operational tasks like optimizing resources, monitoring performance, and maintaining cloud services.

Here, we’ll compare cloud governance vs. cloud management and discuss how you can use them to improve your organization’s cybersecurity posture.

What is cloud governance?

Cloud governance is the practice of managing and regulating how an organization uses cloud computing technology. It includes developing policies and procedures related to cloud services and defining roles and responsibilities for those using them.

Cloud governance aims to ensure that an organization can realize the benefits of cloud computing while minimizing risks. This includes ensuring compliance with regulatory requirements, protecting data privacy, and maintaining security.

These policies and procedures should be designed to meet the organization’s specific needs and be reviewed regularly.

Why is cloud governance important?

Cloud governance is important because it provides a framework for setting and enforcing standards for cloud resources. This helps ensure that data is adequately secured and service levels are met. Additionally, cloud governance can help to prevent or resolve disputes between different departments or business units within an organization.

When developing a cloud governance strategy, organizations should consider the following:

- The types of data that will be stored in the cloud and the sensitivity of that data

- The regulations that apply to the data and the organization’s compliance obligations

- The security risks associated with storing data in the cloud

- The organization’s overall security strategy

- The costs associated with using cloud services

An effective cloud governance strategy will address all of these factors and more. It should be tailored to the organization’s specific needs and reviewed regularly. Additionally, the strategy should be updated as new technologies and regulations emerge.

Organizations without a cloud governance strategy are at risk of data breaches, regulatory non-compliance, and disruptions to their business operations. A well-designed cloud governance strategy can help mitigate these risks and keep an organization’s data safe and secure.

What are the principles of cloud governance?

Cloud governance is built on principles essential for ensuring that cloud resources are used securely, efficiently, and according to business objectives. Sticking to these principles lets your organization set clear expectations, streamline processes, and minimize the risks associated with cloud computing. By implementing these guidelines, you can establish a robust governance framework for secure and effective cloud operations:

- Defining roles and responsibilities: Clearly define who is responsible for managing cloud services and what each role is accountable for.

- Establishing policies and procedures: Develop policies and procedures for using cloud services, including how to provision and de-provision them, how to monitor and audit usage, and how to handle data security.

- Ensuring compliance: Make sure your cloud services comply with all relevant laws and regulations.

- Managing risk: Identify and assess risks associated with using cloud services and put controls in place to mitigate those risks.

- Monitoring and auditing: Monitor your use of cloud services and audit them regularly to ensure compliance with policies and procedures.
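One way to make these principles tangible is to express a policy as data and audit resources against it. The sketch below is purely illustrative: the `Resource` fields, the required tags, and the allowed regions are hypothetical choices, and a real audit would read resources from your cloud provider's inventory rather than from hand-written objects.

```python
from dataclasses import dataclass, field

@dataclass
class Resource:
    """A simplified, hypothetical view of one cloud resource."""
    name: str
    region: str
    encrypted: bool
    tags: dict = field(default_factory=dict)

# Hypothetical policy values; substitute your organization's own rules.
REQUIRED_TAGS = {"owner", "cost_center"}
ALLOWED_REGIONS = {"us-east-1", "eu-west-1"}

def audit(resource: Resource) -> list:
    """Return a list of policy violations for one resource (empty if compliant)."""
    violations = []
    missing = REQUIRED_TAGS - set(resource.tags)
    if missing:
        violations.append(f"missing tags: {', '.join(sorted(missing))}")
    if resource.region not in ALLOWED_REGIONS:
        violations.append(f"region not allowed: {resource.region}")
    if not resource.encrypted:
        violations.append("encryption disabled")
    return violations

resources = [
    Resource("db-1", "us-east-1", True, {"owner": "data", "cost_center": "42"}),
    Resource("vm-7", "ap-south-1", False, {"owner": "web"}),
]
for resource in resources:
    print(resource.name, audit(resource))
```

Keeping the rules in plain data structures means the policy can be reviewed and updated without touching the audit logic.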

What is the framework for cloud governance?

A framework for cloud governance provides guidelines and best practices for managing data and applications in the cloud. It can help ensure your data is secure, compliant, and aligned with your business goals.

When choosing a framework for cloud governance, consider a few key factors:

- First, you must decide how much control you want over your data and applications. Do you want complete control, or will you delegate some responsibility to a third-party provider?

- Next, you need to consider the size and complexity of your environment. A smaller organization may only need a few simple rules around data storage and access, while a larger enterprise may require a more comprehensive approach.

- Finally, you need to consider your budget. Many different cloud governance frameworks are available, so it’s essential to find one that fits within your budget.

How do you implement a cloud governance framework?

Implementing a cloud governance framework can be challenging, but it’s essential to have one in place to ensure the success of your cloud computing initiative. Here are a few tips to help you get started:

Define your goals and objectives

Before implementing a cloud governance framework, you need to know what you want to achieve. Do you want to improve compliance? Reduce costs? Both? Defining your goals will help you determine which policies and procedures need to be implemented.

Involve all stakeholders

Cloud governance affects everyone in an organization, so it’s crucial to involve all stakeholders, including upper management, IT staff, and business users. Getting buy-in from all parties will make implementing and following the governance framework easier.

Keep it simple

Don’t try to do too much with your cloud governance framework. Start small and gradually add more policies and procedures as needed. Doing too much at once will only lead to confusion and frustration.

Automate where possible

Many cloud governance tools can automate tasks such as compliance checks and cost reporting. These tools can reduce the burden on your staff and make it easier to enforce the governance framework.
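As a rough illustration of what automated cost reporting involves, the sketch below totals spend per team and flags anything over budget. The line items, team names, and budget figures are invented for the example; in practice the input would come from your provider's billing export.

```python
from collections import defaultdict

# Hypothetical billing line items: (team, service, monthly cost in USD).
line_items = [
    ("platform", "compute", 1200.0),
    ("platform", "storage", 300.0),
    ("analytics", "compute", 2500.0),
    ("analytics", "database", 900.0),
]

# Hypothetical monthly budgets per team, in USD.
budgets = {"platform": 2000.0, "analytics": 3000.0}

def cost_report(line_items, budgets):
    """Total spend per team and flag teams whose total exceeds their budget."""
    totals = defaultdict(float)
    for team, _service, cost in line_items:
        totals[team] += cost
    report = {}
    for team, total in totals.items():
        budget = budgets.get(team)  # None when no budget has been set
        report[team] = {
            "total": total,
            "budget": budget,
            "over_budget": budget is not None and total > budget,
        }
    return report

report = cost_report(line_items, budgets)
for team, row in report.items():
    print(team, row)
```

Running a report like this on a schedule turns a manual spreadsheet exercise into a routine check, which is the kind of burden automation is meant to lift.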

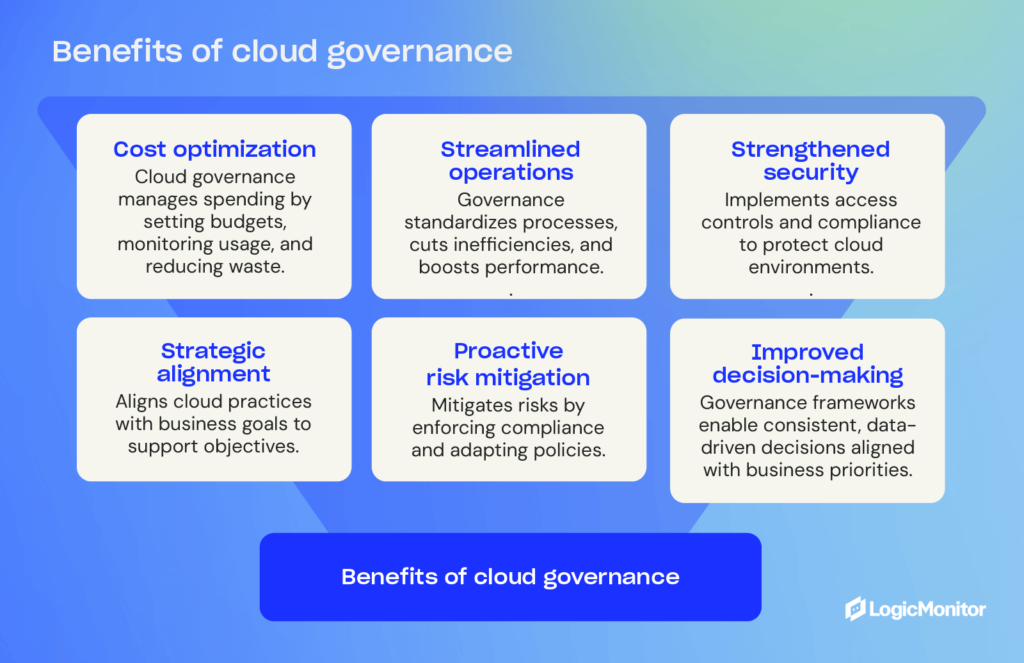

What are the benefits of implementing cloud governance?

Implementing cloud governance gives organizations a structured approach to managing cloud resources, keeping cloud management aligned with business goals, and reducing risks. Using this framework, you can gain better control over your cloud environments so they operate more efficiently while safeguarding sensitive data. Cloud governance offers the following advantages for navigating the complexities of modern cloud computing:

- Improved security: One of the main concerns businesses have regarding cloud computing is security. By implementing governance structures, companies can ensure their data is safe and secure. Cloud governance can help prevent data breaches and unauthorized access to sensitive information.

- Increased transparency: Cloud governance can help increase transparency within an organization. Clear policies and procedures help ensure that everyone knows what is happening with their data, reducing the risk of fraud and corruption.

- Better resource management: Cloud governance can help businesses manage their resources better. Establishing structured guidelines allows companies to use their resources efficiently, which saves money and improves the organization’s overall efficiency.

What is cloud management?

Cloud management is the process of governing and organizing cloud resources within an enterprise IT infrastructure. It includes the policies, procedures, processes, and tools used to manage the cloud environment.

Cloud management aims to provide a centralized platform for provisioning, monitoring, and managing cloud resources. This helps ensure that all resources are utilized efficiently and that the environment complies with corporate governance policies.

Why is cloud management important?

Cloud management is critical for businesses because it helps them optimize their cloud resources, control costs, and facilitate compliance with regulatory requirements. Cloud management tools help companies automate the provisioning, monitoring, and maintenance of their cloud infrastructure and applications.

How does cloud management work?

Organizations increasingly turn to cloud-based solutions to help them run their businesses, so it’s crucial to understand how cloud management works. By definition, cloud management is the administration and organization of cloud computing resources. It includes everything from provisioning and monitoring to security and compliance.

Several different tools and technologies can be used for cloud management, but they all share a common goal: to help organizations maximize their investment in cloud computing.

The first step in effective cloud management is understanding the different types of clouds and how they can be used to meet your organization’s needs. There are three main types of clouds: public, private, and hybrid.

- Public clouds are owned and operated by a third-party service provider. They’re the most popular cloud type, typically used for applications that don’t require a high level of security or performance.

- Private clouds are owned and operated by a single organization. They offer more control and security than public clouds but are also more expensive. Private clouds are often used for mission-critical applications.