Multitenancy is one of the core concepts of cloud computing. As organizations continue to move more and more of their tech stacks to the cloud, understanding multitenancy, or how architecture is able to share environments together, is one of the most crucial topics to learn. This article will break down the intricacies of multitenancy, how it stacks up against other tenancy models, and its benefits.

Contents

- What Is Multitenancy?

- Single Tenancy vs. Multitenancy

- Why the Shift to Multitenancy?

- Multitenant Databases

- Access Control for Multitenant Environments

- Benefits of Multitenancy

- The Future of Multitenancy

What Is Multitenancy?

Multitenancy is an architectural pattern that enables multiple users to share one or more application instances at the same time. Each user is referred to as a tenant; this can be an individual user but is more commonly a group of users, such as a customer company. Each tenant’s data is isolated and invisible to other tenants but runs on the same server.

Single Tenancy vs. Multitenancy

Single tenancy involves a single application instance dedicated to a single customer. The difference between single tenancy and multitenancy can be explained in a real-estate format. Single tenancy can be viewed as a customer buying a single house of their own and customizing it to their needs as they see fit. Multitenancy can be likened to an apartment building with several isolated apartments leased out to individual customers, all sharing the same resources.

Generally, multitenant architecture is preferred because it’s significantly more cost-effective compared to single tenancy. While single tenancy was historically able to offer more control of configuration, increased isolation, and associated security, SaaS-based multi-tenant architecture has now outpaced single tenancy with almost all of these advantages as well.

Why the Shift to Multitenancy?

Traditionally, organizations have maintained on-premise servers with single tenancy. As the landscape has shifted naturally much more into the cloud, multitenancy is now the norm most organizations follow. Cloud-based platforms offer more flexibility, savings on hardware maintenance, and access to cloud technologies, becoming the obvious choice for most options.

There are three major types of cloud computing used by businesses: Software as a Service (SaaS), Platform as a Service (PaaS), and Infrastructure as a Service (IaaS).

Of these, SaaS is the most popular among businesses, and because its core architecture is multi-tenant, the shift to multitenancy has been inevitable for most organizations. In addition, in most cases, cloud-based applications require multitenancy.

Multitenant Databases

When choosing a database for multi-tenant applications, there are a few criteria developers must consider, and they include:

- Scalability, involving number of tenants, storage, and workload

- Development and operational complexity

- Tenant isolation

Outlined below are three major approaches to multitenant database patterns, each with varying levels of complexity and cost.

Single Shared Database and Schema

This is the simplest approach in the initial stages of development. Tenants share the same application instance and database, and each tenant has a table in the shared schema. It allows for easier scaling and larger numbers of tenants, but troubleshooting individual tenants can be difficult. Additionally, it provides very low tenant isolation. When the number of tenants increases exponentially, it will become more difficult to operate the database.

Shared Database, Separate Schemas

Here, there is a single database, but one schema per tenant. This approach is valuable when data from different tenants needs to be treated differently, for example, if it goes through different geographical regulations. It’s also more secure than the single schema approach. However, it doesn’t comply with PCI/HIPAA/FedRAMP regulations.

Separate Database, Separate Schemas

In this approach, computing resources and application code are shared among tenants on a single server, but each tenant has their own set of data that remains logically separated from other tenants’ data. It provides the highest level of tenant and data isolation but is also the costliest approach.

Access Control for Multitenant Environments

The difficulties in access control management in multitenancy lie in:

- Controlling different data and application resources

- Providing different tenants with access to the resources

- Designing an access control mechanism with many authorization rules across conflicting policy domains for a large number of users

The most common access control measure applied in multitenancy is Role-Based Access Control (RBAC). RBAC provides fine-grained authorization to users and builds trust among tenants.

Benefits of Multitenancy

There are quite a number of benefits that have made multitenancy the standard among organizations, and they include the following:

- It is highly cost-effective since cost for the multitenancy environment and resources are shared among tenants.

- It’s low-maintenance for tenants, as the duty of server upgrades and maintenance updates usually fall on the SaaS vendor and, because a single server is serving many tenants, updates can be rolled out more easily at once.

- It offers high scalability that is available on-demand for clients. There are also fewer infrastructure implications because new users get access to the same already existing basic software.

- It’s easy to add new tenants to servers, as the process of signing up and configuration of new domains/subdomains is automated, and integrations with other applications are made easier through the use of APIs.

The Future of Multitenancy

Although businesses are constantly looking for ways to capitalize on the benefits of both single tenancy and multitenancy through avenues like multi-cloud tenancy and hybrid tenancy, multitenancy remains at the forefront of cloud computing. It offers organizations boundless opportunities for horizontal expansion and would be the ideal tool for a business looking to move from on-premise servers to cloud services.

The workflow of IT teams is ever-changing. Businesses must adapt quickly and use safeguards to prevent operation interruptions.

An IT business continuity program ensures normal business functions after a disaster or other disruptive event. Given society’s dependence on IT for daily needs, making sure that your IT infrastructure and systems operate without disruption is crucial in the face of disaster. Without a plan in place, companies risk financial losses, reputational damage, and long recovery times.

How confident are you that your IT department can maintain continuous uptime and availability during a crisis with minimal disruptions? This guide will help IT operatives identify those solutions as they begin developing or strengthening their IT business continuity plans.

What is an IT business continuity plan and why is it essential?

An IT business continuity plan (IT BCP) is a specialized strategy that makes sure IT systems, infrastructure, and data remain resilient during and after major disruptions like natural disasters or cyberattacks. Unlike general business continuity plans that address broader areas like supply chain management, an IT BCP focuses on keeping an organization’s technical systems safe, including networks, servers, cloud services, and applications.

A strong IT BCP is able to:

- Protect mission-critical IT infrastructure: Ensure uninterrupted access to key systems that keep business operations running

- Support operational stability: Minimize downtime and maintain productivity during disruptions

- Prevent financial and reputational risks: Reduce the potential for costly downtime, regulatory fines, and damage to customer trust

IT BCPs protect organizations from risks such as:

- Cyberattacks: Ransomware and data breaches can lock users out of IT systems, causing widespread disruptions and expensive recovery processes.

- Natural disasters: Events like hurricanes or earthquakes can damage data centers, making IT systems inaccessible.

- System failures: Aging hardware, software bugs, or misconfigurations can bring operations to a halt.

An IT BCP also ensures regulatory compliance, such as GDPR, HIPAA, and SOX, which have strict continuity measures. Non-compliance can lead to significant penalties and legal challenges.

For example, the 2024 CrowdStrike outage disrupted 8.5 million Windows devices, causing Fortune 500 companies to collectively incur an estimated $5.4 billion in uninsured damages. This highlights the need for a strong IT BCP to protect systems, maintain compliance, and prevent costly incidents.

Key IT business continuity plan components

An effective IT BCP focuses on key components that strengthen systems and continue operations during disruptions.

Risk assessment

Audits and risk protocols help organizations anticipate disruptions and allocate resources. Risk assessment identifies vulnerabilities like outdated hardware, weak security, and single points of failure.

Dependency mapping

Dependency mapping identifies relationships between IT systems, applications, and processes. For example, replicating databases is critical if failure disrupts multiple services. Understanding IT interconnections helps organizations identify critical dependencies and blind spots so they can plan recovery procedures.

Backup and disaster recovery

Data backup and recovery are crucial for keeping information safe and quickly resuming operations after a significant disruption. Data recovery best practices include:

- Regular backups: Automate and schedule frequent backups to keep the latest data secure.

- Off-site storage: Use secure cloud solutions or off-site data centers in other locations to prevent data loss in localized disasters.

- Testing recovery plans: Periodically test disaster recovery processes to restore backups quickly and without errors.

Failover systems

Failover systems maintain operations by automatically switching to backups during hardware or software failures. Examples of failover systems include:

- Additional servers or storage systems for critical applications

- Secondary internet connections for minimal disruptions during outages

- Load balancers to distribute traffic evenly so there’s no single point of failure

Communication plans

Effective communication allows organizations to respond to an IT crisis. Strong IT BCPs include:

- Crisis roles: Assign clear responsibilities to team members during disruptions.

- Stakeholder communication: Prepare email templates, internal communication playbooks, and chat channels to quickly inform stakeholders, customers, and employees.

- Incident reporting tools: For real-time updates and task tracking, use centralized platforms like Slack, Microsoft Teams, or ServiceNow.

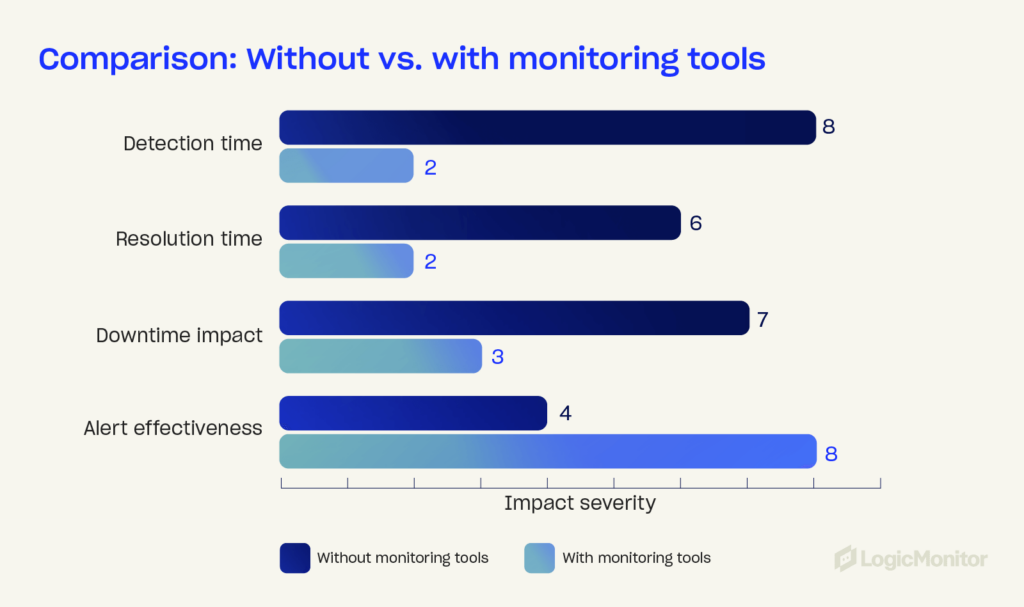

Continuous monitoring and testing

Tools that provide real-time insights and proactive alerts on system performance will find potential disruptions before they escalate. Routine simulation drills prepare employees for worst-case scenarios.

Cybersecurity measures

The rise in cyberattacks makes strong cybersecurity key to an IT BCP. Multi-factor authentication, firewalls, and endpoint protections guard systems against breaches, while incident response plans minimize attack damage.

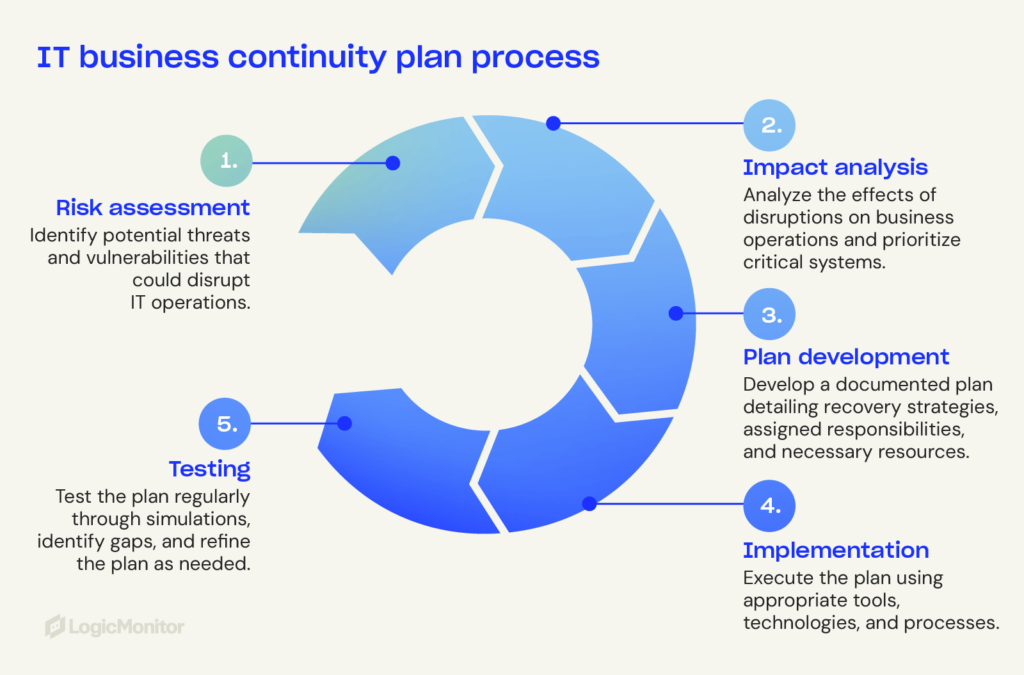

Steps to develop an IT business continuity plan

Protect critical systems and ensure fast disruption recovery with these steps.

1. Assess risks and conduct a business impact analysis

Conduct a business impact analysis (BIA) to evaluate how potential IT risks can affect your operations, finances, and reputation. Key BIA activities include:

- Identifying single points of failure in systems or networks

- Evaluating the impact of downtime on various business functions

- Quantifying the costs of outages to justify investments in continuity plans

Example: A financial services firm simulates a Distributed Denial-of-Service (DDoS) attack on its customer portal and identifies that its firewall rules need adjustment to prevent prolonged outages.

2. Define critical IT assets and prioritize systems

Not all IT systems and assets are equally important. Identify and prioritize systems that are vital in maintaining key business operations, including:

- Core infrastructure components like servers, cloud platforms, and networks

- Applications that support customer transactions or internal workflows

- Databases that hold sensitive or important operational information

Example: A retail company classifies its payment processing systems as a Tier 1 priority, ensuring that redundant servers and cloud-based failovers are always operational.

3. Develop a recovery strategy

Establish clear recovery time objectives (RTO) and recovery point objectives (RPO) to guide your strategy:

- RTO: Defines the maximum acceptable downtime for restoring systems or services

- RPO: Specifies the acceptable amount of data loss measured in seconds, minutes, or hours

Example: A healthcare provider sets an RTO of 15 minutes for its electronic medical records system and configures AWS cross-region replication for failover.

4. Obtain necessary tools

Equip your organization with tools that support continuity and recovery efforts, including:

- Monitoring platforms: Provide real-time insights into system health and performance

- Data backup solutions: Ensure secure storage and rapid data restoration

- Failover mechanisms: Automate transitions to backup systems during outages

- Communication tools: Facilitate seamless crisis coordination across teams

Example: A logistics company integrates Prometheus monitoring with an auto-remediation tool that reboots faulty servers when CPU spikes exceed a threshold.

Hypothetical case study: IT BCP in action

Scenario

An e-commerce company faces a ransomware attack that encrypts critical customer data.

Pre-BCP implementation challenges

- Single data center with no geo-redundancy.

- No air-gapped or immutable backups, making ransomware recovery difficult.

- No automated failover system, leading to prolonged downtime.

Post-BCP implementation

- Risk Assessment: The company identifies ransomware as a high-priority risk.

- System Prioritization: Customer databases and payment gateways are flagged as mission-critical.

Recovery strategy

- Immutable backups stored in AWS Glacier with multi-factor authentication.

- Cloud-based disaster recovery ensures failover to a secondary data center.

Monitoring and response

- AI-based anomaly detection alerts IT teams about unusual encryption activities.

- Automated playbooks in ServiceNow isolate infected systems within 10 seconds of detection.

Outcome

The company recovers operations within 30 minutes, preventing major revenue loss and reputational damage.

IT business continuity tools and technologies

Building an effective IT BCP requires advanced tools and technologies that ensure stability.

Monitoring systems

Modern infrastructure monitoring platforms are vital for detecting and eliminating disruptions. Tools such as AIOps-powered solutions offer:

- Real-time insights into system performance, helping teams to identify and resolve issues quickly

- Root-cause analysis (RCA) to determine why harmful events occur, improving response times

- Anomaly detection to catch irregular activities or performance bottlenecks and correct them

Cloud-based backup and disaster recovery

Cloud solutions offer flexibility and scalability for IT continuity planning. Key benefits include:

- Secure data backups: Backups stored in other geographic locations protect against localized disasters.

- Rapid disaster recovery: Multi-cloud strategies can restore systems quickly.

- Remote accessibility: Employees and IT teams can access critical resources anywhere, speeding up recovery times.

Failover and resource scaling automation tools

Automation streamlines recovery processes and ensures IT infrastructure stays agile during crises. Examples include:

- Automated failover systems: Switch operations to backup servers or connections during outages.

- Resource scaling: Adjust server capacity and network bandwidth to meet changing demands.

- Load balancing: Distribute traffic to prevent overloading and single points of failure.

Cybersecurity solutions to protect IT systems

Robust cybersecurity is essential to IT continuity. Protect your systems with:

- Multi-factor authentication (MFA) to secure user access

- Firewalls and endpoint protection to defend against threats

- Incident response plans to minimize the impact of breaches or ransomware attacks

Common IT business continuity planning challenges

Even well-designed IT BCPs face obstacles. Understanding these common pitfalls will help you proactively address vulnerabilities and maintain operational strength.

Lack of testing and updates

Outdated or untested IT BCPs risk gaps or ineffective processes during a crisis. Regular updates will help you adapt to threats.

Third-party dependencies

Modern IT systems rely heavily on external services like cloud providers, data centers, and software vendors. Failing to account for these dependencies can lead to significant disruptions during third-party outages or delays.

Human error

Even the most advanced IT systems require human intervention during a crisis. Human factors, such as unclear communication protocols and insufficient training, can compromise the execution of an IT BCP. Strategies for reducing human error include:

- Training and refreshers: Make sure employees are familiar with their responsibilities in your IT BCP during a crisis. Include role-specific training and regular simulations to reinforce their knowledge.

- Documentation: Develop quick-reference guides and checklists for team members to easily access during an incident.

- Communication protocols: Establish clear communication channels and use tools like incident response platforms to provide real-time updates and coordinate teams.

- Post-incident reviews: After each drill or real-world incident, evaluate team performance and identify areas for improvement.

Budget constraints

Financial limitations can keep organizations from creating effective continuity measures, like failover systems, backup solutions, or regular testing protocols. To address budget constraints:

- Invest in critical areas with the highest potential impact

- Explore cost-effective solutions, like open-source tools or scalable cloud platforms

- Quantify potential losses resulting from downtime

Complex multi-cloud and hybrid environments

As organizations adopt hybrid and multi-cloud systems, uninterrupted operations become a challenge. Issues like inconsistent configurations and siloed data can prolong disruptions and slow recovery. Regular audits, dependency mapping, and unified monitoring tools simplify crisis management and strengthen continuity.

Lack of executive buy-in

Without support from leadership, BCP efforts can lack funding, strategic alignment, or organizational priority. Secure executive support by:

- Demonstrating the ROI of continuity planning

- Presenting real-world examples of downtime costs and successful recoveries

- Highlighting compliance obligations

Best practices for maintaining IT business continuity

A strong IT BCP requires ongoing effort to remain effective against evolving threats. These practices ensure your plan stays effective during any crisis.

Test and refine

Regular tests can identify weaknesses in your IT BCP. Continuously improve processes to align with your current infrastructure and objectives. Testing methods include:

- Tabletop exercises: Simulate hypothetical scenarios to review decision-making and coordination

- Live drills: Engage teams in real-time responses to assess readiness and identify bottlenecks

- Post-test reviews: Use results to refine workflows and address gaps

Train staff on their crisis roles

Regular training with clear responsibilities ensures team members understand their duties and can act quickly during disruptions.

- Provide training for IT, operations, and leadership teams

- Develop playbooks or quick-reference guides for crisis scenarios

- Regularly update and refresh knowledge to account for staff turnover

Use RTO and RPO metrics to measure success

Set measurable goals to evaluate your strategy’s effectiveness. Track performance against these benchmarks to ensure your plan meets its objectives:

- Recovery Time Objective (RTO): Define how quickly IT systems must be restored after a disruption to minimize downtime.

- Recovery Point Objective (RPO): Specify the maximum acceptable data loss, measured in time, to guide backup frequency.

Collaborate with cross-functional teams

An effective IT BCP must align with organizational goals. By working with teams across departments, you can:

- Ensure all relevant teams understand your IT BCP

- Identify dependencies between IT systems and other functions

- Develop response strategies that integrate with company-wide plans

Leverage technology to automate processes

Automation enhances the speed and efficiency of IT continuity efforts. Tools like monitoring platforms, automated failover systems, and AI-driven analytics reduce manual workloads and allow proactive problem-solving.

Continuously monitor and assess risks

The threat landscape is constantly evolving. Regular risk assessments and real-time monitoring help identify emerging weaknesses before they escalate into major problems.

Emerging Trends in IT Business Continuity Planning

Key trends shaping IT BCP include:

1. AI and Machine Learning

- Predictive Analytics: Identifies potential failures before they occur.

- Automated Incident Response: Triggers failovers and restores backups autonomously.

- AI-Based Risk Assessments: Continuously refines risk models.

2. Cloud-Native Solutions

- Scalability & Redundancy: Cloud solutions offer flexibility and geographic backups.

- Faster Recovery: Minimized downtime with rapid disaster recovery.

3. Compliance and Regulations

Stricter standards like GDPR, CCPA, and supply chain mandates require robust continuity plans.

4. Zero Trust Architecture

Emphasizes restricted access, continuous authentication, and network segmentation to combat cyber threats.

5. Automated Disaster Recovery

- Self-Healing Systems: Auto-reconfigures after failures.

- Blockchain: Ensures data integrity.

- AI Compliance Monitoring: Tracks and reports in real time.

Final thoughts: Strengthening IT resilience

An effective IT BCP is a strategic investment in your organization’s future. Identifying weaknesses, prioritizing critical systems, and using proactive measures reduce risks and maintain operations during disruptions.

Continuity planning isn’t a one-time task, however. As challenges like cyberattacks, regulatory changes, and shifting business needs evolve, an effective plan must adapt. Regular updates, testing, and cross-functional collaboration ensure your plan grows with your organization.

Ultimately, an effective IT BCP supports business success by protecting revenue, maintaining customer trust, and enabling operational stability. Taking these steps will prepare your organization to navigate future challenges confidently.

If you’re working with Kubernetes and the thought of searching for each new term you come across seems exhausting, you’ve come to the right place! This glossary is a comprehensive list of Kubernetes terminology in alphabetical order.

Jump to:

A | C | D | E | F |G | H | I | J | K | L | M | N | O | P | Q | R | S | T | U | V | W

Admission controller – A security feature native to Kubernetes that allows users to configure the objects allowed on a cluster. Admission controllers regulate how each cluster gets used. An admission controller quickly checks each request with the Kubernetes server prior to letting any objects run.

Affinity – A set of rules hinting at the placement of pods. There are two types:

- Node

- Pod-to-pod

Depending on how strict a scheduler must perform, these rules can be either preferred or required.

Aggregation Layer – an aggregation layer lets you extend Kubernetes with other APIs in addition to what’s available in basic Kubernetes APIs. You can use APIs that are tailored and ready-made, like service-catalog, or you can use APIs you’ve developed yourself.

AKS – Azure Kubernetes Service (AKS) is an open-source, fully managed container planning service that debuted in 2018. AKS is offered in the public Microsoft Azure cloud and can be used for deployment, scaling, and management of Docker containers and other containerized apps working in clusters. For all resources, AKS provides as required or on-demand:

- Provisioning

- Scaling

- Upgrading

You’ll see no cluster downtime and you don’t need a deep understanding of container organization to work with AKS.

Amazon EKS (EKS) – Amazon’s Elastic Kubernetes Service, or EKS, lets you run Kubernetes on Amazon Web Service (AWS) without the need for installation, operation, or maintenance of your own control plane/nodes.

Annotation – A value assigned to the metadata of an object.

API group – A group of related paths within the Kubernetes API.

API server – Part of the control plane that reveals the Kubernetes API. The front end of the control plane. This lightweight application allows you to create and extract API data from other data without needing customized development procedures.

Applications – The layer where containerized apps run. Applications are containerized solutions.

cgroup – container group – A group of processes native to Linux that have optional isolation of resources, accounting, and/or limits. A kernel feature limiting, accounting for, or isolating resource use for a specific set of processes. cgroups let you allocate various resources such as:

- System memory

- Bandwidth

- CPU time

- A combination of the above

cgroups also let you allocate these resources amongst any task groups or processes running within the system that have been user-defined. You can also:

- Monitor any cgroups you’ve configured.

- Deny certain resource access to specific cgroups.

- Dynamically reconfigure specific cgroups.

CIDR – Classless Inter-Domain Routing (CIDR) is a mask for IP addresses. Also referred to as supernetting, CIDR assigns IP addresses to improve address distribution efficiency, replacing the earlier system that was based on Class A, B, and C networks.

Cloud-controller manager – A cloud controller is a storage tool that moves data from a physical, on-premises storage location to a cloud location. Cloud controllers use either hard drives, SSDs, or a combination for on-premises storage. The cloud-controller manager is a control plane element that allows you to embed specific control logic into the cloud. This tool is structured with a plugin allowing various cloud service providers to integrate, or link up with, Kubernetes.

Cluster – Kubernetes clusters are sets of node machines that run containerized apps. In other words, if you’re using Kubernetes, you’re using clusters. At the bare minimum, clusters contain the control plane and at least one computing machine, or node. The nodes are responsible for running the apps and workloads.

ConfigMap – A dictionary of your configuration settings. A ConfigMap has strings of key-value pairs. The key-value pairs act as instructions that Kubernetes then provides to your containers. Like other Kubernetes dictionaries, you can see and set the value of the configuration. You can use ConfigMap to keep the application code separate from the configuration. It’s a crucial aspect of 12-factor apps used in creating software-as-a-service applications.

Container – Lightweight, executable image containing software and its dependencies. Containers are software packages that come ready to use. Everything needed to run an app is located in the container, such as:

- The code

- Runtime

- App libraries

- System libraries

- Default values for vital settings

Containerd – Concept of kernel features providing relatively high-level interfaces. Other software can use containerd for running and managing containers and images.

Container environment variables – “Name equals value” pairs that offer insight into the containers that run within a pod. Container environment variables provide application-specific, required information to each container about such resources as:

- System details

- Container information

- Service endpoints

Container network interface (CNI) – a project by the Cloud Native Computing Foundation, a CNI is comprised of libraries and specifications regarding the writing of plugins that configure the network interfaces within Linux containers. In Kubernetes, CNI acts as the interface between a network provider and networking pods.

Container runtime – The software that’s responsible for running the containers. This software executes each container and manages it on a node. Container runtime interface, or CRI, lets Kubernetes use several types of container runtimes without having to recompile. Theoretically, Kubernetes can use any given container runtime that uses CRI to manage its pods, containers, and images.

Container runtime interface (CRI) – A container runtime API that joins with kubelet on a node.

Container storage interface (CSI) – Offers a standardized interface between containers and storage systems, enabling customized storage plugins to cooperate with Kubernetes without the need to add each storage system to the Kubernetes repository.

Control plane – The layer in which container deployment, definition, and management occur. Some of the components of the control plane include etcd, Scheduler, and Controller Manager.

Controller – Loops that oversee the cluster’s state, making and requesting changes as necessary to move the cluster’s current state closer to the cluster’s most desired state. Some controller’s loops may run within the control plane if they are necessary for operations. For instance, the namespace controller runs inside kube-controller-manager.

CRI-O – CRI-O is an operation in Kubernetes’ container runtime interface (CRI) that lets you enable compatible runtimes that use Open Container Initiative, or OCI. If Docker, Moby, and rkt aren’t as lightweight as you need, CRI-O is a good alternative. It lets you use any runtime as the Kubernetes runtime to run pods. It also supports runc and other container runtimes that are OCI-compliant. CRIO sustains any OCI image and it can draw from all container registries as directed.

Custom controller – In some instances, Kubernetes’ core controllers may not provide the necessary control. A custom controller allows the user to extend Kubernetes core functionalities without having to change core code.

CustomResourceDefinition (CRD) – The customized code for a custom controller, used when the Kubernetes core controllers don’t provide the necessary functionality.

DaemonSet – The system’s daemons (log collectors, monitoring agents, etc.) usually must run on each node. A DaemonSet makes sure a Pod copy is functional across a group of nodes within a cluster.

Data plane – The layer that holds such working aspects as the CPU, memory, and storage for the containers to run. The data plane helps containers connect to a specific network.

Deployment – An API resource object providing updates to various applications. The deployment manages pod scheduling, management, updates, rollbacks, horizontal scaling, and lifecycles.

Device plugin – Run on nodes providing pods access to various resources. Specific setups or initializations for vendor-specific applications use device plugins to operate. A device plugin can be deployed as a DaemonSet or installed directly on each necessary node.

Disruption – An event that causes a pod (or multiple pods) to go out of service. This can have negative consequences on deployment and other resources necessary for workloads as it affects the pods required.

Docker – The software technology, Docker, or Docker Engine, provides virtualization at the operating system level in the form of containers.

Dockershim – a legacy term that is allows a Kubelet to communicate with Docker. Dockershim was removed from Kubernetes as of v.1.24.

Dynamic volume provisioning – Using the StorageClass API object, users can automatically provision storage volumes rather than needing pre-provisioned volumes. You can create storage volumes on-demand with dynamic volume provisioning. Without this tool, a cluster administrator must manually call the cloud to create a new storage volume, afterward creating PersistentVolume objects that represent those calls in Kubernetes. Dynamic provisioning eliminates pre-provisioning requirements. It simply provisions storage automatically as requested.

Endpoints – Endpoints are objects to which individual pod IP addresses are assigned. The endpoint’s object is then referenced by Kubernetes so there’s a record of all internal IP addresses for all pods so that communication can occur.

Endpoint slice – Released with Kubernetes Version 1.16. Endpoint slicing lets you group endpoints within the group using Kubernetes resources. The endpoint houses all pods matching a specific service. If there are hundreds of pods within a service and you make any changes, endpoint objects can become large. Prior to the release of endpoint slicing, every change made altered every object, passing that change to all nodes within the cluster, which caused critical performance and stability issues. Endpoint slicing lets you group similar endpoints, therefore no disruptions occur when changing multiple endpoints.

Ephemeral container – A type of container that can be run temporarily within a pod. If a pod shows signs of an issue, running an ephemeral container allows the user to diagnose the problem without affecting the entire workload. An important note is that this type of container has no resource or schedule guarantee and should not be used to run the actual workload.

Etcd – A storage configuration management tool, etcd is crucial to Kubernetes execution. It must always be consistent and available so services are scheduled and operate properly. Etcd data is critical, so having a cluster backup is highly recommended.

Event – a report of an event within a cluster normally showing a chance in a system’s state. Events have a limited retention time and K8s events should be used as supplemental data only.

Extensions – Software elements that “extend”, deeply integrating with the Kubernetes platform in support of new hardware types. Cluster admins can install one or more extensions rather than authoring a brand-new instance.

Finalizer – A key used to inform Kubernetes to wait to delete a specific marked resource until specific conditions are met. Finalizers can be used to clean up resources or infrastructure during the garbage collection process.

Garbage Collection – Garbage collection is used across multiple technologies. For Kubernetes it entails cleaning up resources, including unused containers, pods, completed jobs, and resources that have failed, amongst others.

Google Kubernetes Engine (GKE) – GKE offers a managed environment to deploy, manage, and scale containerized apps using Google’s infrastructure. This environment has several machines, such as Compute Engine that, grouped together, form a cluster.

Helm Chart – Helm Charts are YAML manifests that are combined into one package that can be presented to Kubernetes clusters. After packaging, placing a Helm Chart within your cluster is as simple as executing a helm install. The purpose of a Helm Chart is to simplify the deployment of any containerized apps. Read more about Helm.

Horizontal pod autoscaler – Also known as HPA, a horizontal pod autoscaler automatically scales the number of replica pods. Rather than using CPU power or memory thresholds to control the amount of replicated pods, the autoscaler does so. HPA can also be set for custom thresholds.

Host aliases – If a pod host file is specified, this optional mapping can be input into the host file. It is an optional map between an IP address and a hostname. Host aliases will only work in an non-host network pod.

Image – A stored container instance holding a software set that’s required for an application to run. An image is a packaging method for software allowing it to be stored in the container’s registry, sourced by a local system, and executed as an application. Metadata offers information regarding what to run, who built the application, and other information.

Ingress – This API object exposes both secure and non-secure routes (HTTPS and HTTP, respectively) beginning outside a cluster to the services running inside the cluster. An ingress provides external access to internal services. Types of ingresses include single service and load balancing, among others.

Init container – An initialization, or init container is comparable to a regular container, but an init container must run through to completion prior to another container having the ability to start. An init container must run in sequence with all other init containers before the next container may begin.

Istio – Istio, a service mesh, is a modern networking layer for transparent, language-independent automation applications for network functions. This service mesh sustains microservice communications and allows for data sharing.

Job – A Kubernetes Job object is a basic function or instruction for pod creation and termination. As Jobs are created, a Job controller then creates the pods necessary and makes sure pods are terminated successfully. As each pod terminates, the Job controller tracks the number of successfully completed pod tasks.

Kubeadm – This tool helps you dictate to Kubernetes that kubeadm init and kubeadm join are the best pathways to create Kubernetes clusters. Kubeadm takes care of the necessary actions to get a viable cluster working. Kubeadm was designed to only care about bootstrapping, not machine provisioning.

Kube-controller-manager – A component of the Control Plane running controller processes. To ease complexities, each process is rolled into one binary and run as one.

kube-apiserver – See API server.

kubectl – Command line tool that creates, inspects, updates, and deletes objects by communicating with the Kubernetes API server.

Kube-scheduler – One of the components of the Control Plane responsible for cluster management. Kube-scheduler looks for pods that have yet to be scheduled, assigning them to nodes by certain specifications, such as resource availability. As pods are assigned to nodes, each node’s kubelet gets triggered, creating the pod and its containers.

Kubelet – A mini application running on every node within a cluster. It requires a set of instructions, or PodSpecs, and uses these specifications to ensure each container is working properly. Kubelets execute the actions sent to each container from the master node.

kube-proxy – This is the network proxy for Kubernetes. This service runs on each node, handling request forwarding. kube-proxy’s main role is creating rules for iptables. Pods don’t have the same IP addresses, but with kube-proxy, the user can connect to any specific pod in Kubernetes. This is especially crucial for such actions as loan balancing enablement.

Kubernetes API – The app serving Kubernetes functionality. It operates in a RESTful interface, storing the cluster state. In other words, all Kubernetes resources and intent records get stored as objects and then modified according to RESTful orders to the API. The Kubernetes API lets the user declare management configuration. The user can interact with the API directly or with a tool such as kubectl. The Kubernetes API’s core is flexible and allows for extension into custom resources.

Kubernetes Operations (Kops) – Kops is a fast, easy, open-source method of setting up clusters. Technically, it’s the “kubectl” cluster setup method. Kops lets you deploy available clusters on Amazon Web Service (AWS) and Google clouds (GCP).

Label – Assigns meaningful, relevant identifying attributes to objects. Labels organize objects and select subsets.

Limit range – Assigned within namespace, the limit range allows resource consumption of containers and pods to be constrained, or limited. If only a certain number of object types are needed, limit range limits the number of resources that a container or pod within the namespace can request or consume.

Load Balancing – Load balancing is the process of distributing traffic across multiple servers to prevent specific servers from overloading.

Logging – The recording of events within a cluster or application. System and app logs help the user understand what happens within a cluster. Logs are especially useful in monitoring activity within clusters and debugging problems.

Manifest – A plan for the desired state of a specific object. When the user applies the manifest, Kubernetes maintains that desired object state. Every configuration file can hold several manifests. A manifest in Kubernetes illustrates all resources, you wish to create, such as:

- Deployments

- Services

- Pods

The manifest also lets you dictate how those resources should run within a cluster.

Master node – As the name suggests, this node holds Kubernetes objects in control of the cluster, its data on cluster state, and its configuration. Kubernetes objects in the Master Node include etcd, kube-controller-manager, kube-apiserver, kube-scheduler, and cloud-controller manager.

minikube – Allows users to run Kubernetes locally. A single-node cluster within a virtual memory file on the user’s computer. minikube allows you to run a cluster with just one node on your own PC, whether you’re running, Windows, macOS, or Linux. minikube is perfect for users and developers who simply want to take Kubernetes for a spin or to perform daily developmental work.

Mirror pod – An object used by a kubelet that represents a static pod. If kubelet finds static pods within the configuration, it attempts to create a pod object for it in the Kubernetes API server. The user will be able to see the pod on the API server but will not be able to control it there.

Name – A user-provided string referring to a specific object within a URL. No two objects of the same type can have the same name at the same time. If the user deletes the object, however, a new object using that name can be created.

Namespace – An abstract placeholder name Kubernetes uses to support several virtual clusters atop the same physical cluster. This helps organize cluster objects and lets the user divide that cluster’s resources. Resource names must be unique within a specific namespace in a cluster but can be used in other namespaces.

Network policy – Kubernetes pods have the ability to communicate with other pods and get traffic and information from other sources by default. This Kubernetes resource indicates how groups of pods can communicate with other pods and network endpoints. With the use of labels, pods are chosen and allowable traffic configured.

Node – A worker machine. Nodes can be either virtual or physical, depending on the individual cluster. Nodes have local daemons needed to run the pods. Nodes are managed on the Control Plane. Daemons on nodes include:

- kubelet

- kube-proxy

- Container runtime

Node Pressure Eviction – If a node appears to be failing, node-pressure eviction lets the kubelet terminate pods beforehand so as to reclaim needed node resources. If a workload resource, like StatefulSet, Deployment, or others) manages your pods and checks for pods that need replacing, your control plane (or kube controller manager) will create a new pod for each evicted pod.

Object – A system entity. The entities represent the cluster’s state. Objects are records of intent. Once a user creates an object, the Control Plane ensures the existence of the actual item the object represents. Objects represent to the system what your cluster’s workload should look like or the desired state of the cluster.

Persistent Volume (PV) – A cluster storage resource. Kubernetes doesn’t only manage containers that run an app; it also manages cluster application data. Persistent Volume (PV) abstracts data storage from existing storage infrastructure and can actually outlive the pod itself. Due to this feature, PV can create stateful pods.

Persistent volume claim (PVC) – A request for storage access. PVCs get mounted within a container and specify the storage amount required, how it can be accessed, and how to reclaim the resource.

Pod – The smallest object in Kubernetes representing a set of containers running on a cluster. Typically, a pod runs one primary container, but sidecar containers can also be added for additional features, such as logging. These objects are usually managed by a deployment.

Pod disruption – Pod disruption occurs in one of two ways:

- A user or controller destroys a pod

- There’s been an inevitable hardware, software, or system error

The inevitable, or unavoidable, types of pod disruption are called involuntary disruptions. A few examples of involuntary disruptions include:

- Hardware failure within the actual, physical machine that backs the node.

- A cluster administrator accidentally deletes an instance.

- A failure at the cloud provider occurs.

- A panicked kernel.

- A cluster network partition causes a node to disappear.

- A node runs out of resources and evicts a pod.

Aside from the last example, most users are probably familiar with the other examples. They do not occur only in Kubernetes.

Other disruptions are known as voluntary, and they include actions that can be started by an app’s owner and those started by a cluster admin. Some typical voluntary disruptions include:

- Deleting a deployment or another controller managing the pod.

- Updating the template for a deployment pod, which causes a restart.

- Simply deleting a pod.

Pod disruption budget (PDB) – Lets the user configure how many disruptions a class of pods can tolerate so there is always the correct number available even if a disruption event occurs. When a pod disruption causes a cluster to drop below budget, the operation gets paused till the budget is maintained.

Pod lifecycle – A pod has five distinct phases:

- Pending

- Running

- Succeeded

- Failed

- Unknown

The lifecycle of a pod is the order of these states throughout the pod’s existence.

Pod phase – A high-level summation of the point at which a pod is in its lifecycle. The five potential pod phases defined are:

- Pending: Accepted but waiting for the requisite amount of container images.

- Running: All containers are created, and the pod is attached to a node. One container at minimum is running, working toward starting, or is restarting.

- Succeeded: Each container within the pod has terminated successfully and will not restart.

- Failed: Each container within the pod has terminated, but one or more failed. Exits in non-zero status or system termination.

- Unknown: Pod state cannot be determined. Usually a failure to communicate with the host pod.

Pod priority – In production loads, some pods have a higher level of importance. Pod priority allows a user to give certain pods preference over others with less importance.

Pod preset – An API object that allows the user to put information in pods at the time of creation. Presets are then managed by an Admission Controller. The Admission Controller applies the preset at the time of creation request. Some of the most typical presets include:

- Secrets

- Volumes

- Mounts

- Environmental variables

Pod Security Policy – Pod Security Policies are cluster-level resources controlling sensitive aspects of a pod’s specifications. A PodSecurityPolicy object defines a specific set of conditions that a certain pod must be running for the system to accept it, in addition to defaults for other related fields.

Preemption – Pods are put in a queue after creation. Pod Priority and Preemption are turned on. As the scheduler grabs pods from this queue, it attempts to schedule them to a node within the cluster. If the pod can’t be scheduled, the scheduler uses the preemption logic to temporarily stop low-priority pods and allow the pending pod to be scheduled.

Proxy – The kube-proxy is the network proxy running on every node within a cluster that implements a piece of the Kubernetes Service model. Kube-proxy supports the network rules within each node. Network rules let the network communicate with your pods, whether the network session is in your cluster or not.

Quality of Service (QoS) Class – A concept that helps Kubernetes determine pod priority in terms of scheduling or eviction. These classes are assigned at the time of pod creation and determined by their resource requests and limitations. The three Quality of Service classes that can be assigned are:

- Guaranteed

- Burstable

- BestEffort

RBAC – Through Kubernetes API, RBAC manages authorization decisions. This allows users at the admin level to configure dynamic access policies by role. Roles contain permission rules. Role bindings grant each defined permission to a specific set of users.

ReplicaSet – A core Kubernetes controller that is used to ensure that the right amount of replicas are running.

ReplicationController – Controls the number of exact pod copies that should be allowed to run in a cluster. After the admin defines the number of replicas allowed, the Control Plane maintains cluster compliance even if pods fail, get deleted, or if too many started at deployment.

Resource quotas – Places a limitation on how many objects can be created, by type, within a namespace. Also ensures that the amount of computing resources allowed within a project is not exceeded.

Secrets – Security account credentials. Secrets are objects containing small packets of sensitive data. As the name suggests, Secrets in Kubernetes is a tool that allows you to store and manage information of a sensitive nature, such as:

- Login information

- OAuth tokens

- SSH keys

In the absence of Secrets, this sensitive data might be placed in a pod spec or image.

Security Context – A pod or container’s security context outlines the privileges and access controls. Discretionary Access Control restricts access permissions to certain objects, such as files, and is based on a user’s ID and/or group ID. Security context can be set to run as either with or without privileges.

Selector – Lets users filter lists of resources by labels. A selector in Kubernetes lets you choose resources based on their label values and assigned resource fields. Think of it like the filter you apply when using Excel spreadsheets. Selector lets you filter just the details you want to see of objects with similar labels.

Service – A conceptual method of exposing applications running on pod sets as a network service. Pod sets targeted by services are (normally) chosen by a selector. If the user adds or removes pods, the pod set that matches the selector changes. The service ensures that the network traffic gets directed to the right pod set.

Service Account – Gives an identity to processes running within a pod. Pod processes accessing the cluster are validated by the server as a specific service account, such as “default”. When a user creates a pod, if a particular service account is not specified, it automatically gets assigned as “default” within that namespace.

Service Catalog – This API extension allows apps that run on Kubernetes clusters to use externally managed software, such as datastore services provided by cloud providers. Service Catalog helps users with listing, provisioning, and binding to externally managed services without having to understand how each specific service is managed or was created.

Shuffle sharding – A technique to assign queue requests. Shuffle sharding is a nimble technique that does a better job isolating low-intensity flows from high-intensity flows than hashing the number of queues.

Sidecar container – A support extension for a main container. A sidecar container can be paired with as many main containers as necessary, enhancing the capabilities of the main containers. For instance, a sidecar container is a good addition to a main container to assist in processing or monitoring system logs.

Stateful – A process that saves data and tracks historical sessions and transactions.

StatefulSet – A Kubernetes controller that manages deployment and scaling for a pod set. Pods managed by this controller are provided unique identities. This identity is used if pods need to be rescheduled.

Stateless – A process that does NOT save data or track historical sessions and transactions.

Static pod – Similar to regular pods, static pods are managed on a node directly by kubelet, instead of on the server. The kube-scheduler ignores static pods, meaning they won’t be terminated or evicted if the node fails.

StorageClass – A way for admins to describe various options available for storage. Important fields in StorageClass specs are:

- Provisioner

- Parameters

- ReclaimPolicy

- VolumeBindingMode

If a Persistent Volume needs dynamic provisioning, these StorageClass terms can be used.

Systcl – A way for admins to modify or change kernel attributes or settings. sysctl, short for ‘system control’, is a utility in some operating systems that can read and/or modify system kernel attributes, such as:

- Version number

- Max limits

- Security settings

In compiled programs, it’s available as both a system call and administrator command. Sysctl can be used interactively or for scripting.

Taint – Core Kubernetes object. Consists of three necessary properties:

- Key

- Value

- Effect

Taint prevents pods from getting scheduled on nodes or on node groups. Specifically useful when nodes with special hardware are reserved, are not licensed for software already running within the cluster, and other reasons. Taints alert the scheduler that these nodes aren’t available for scheduling. Taints and Tolerations work together for better control over pod-to-node scheduling.

Toleration – Core Kubernetes object. Consists of three necessary properties:

- Key

- Value

- Effect

Opposite of Taints, Tolerations allow pod scheduling on nodes or on node groups. Tolerations allow pods to ignore Taints and are housed within PodSpec.

UID – A string generated by the Kubernetes system for object identification. A unique identifier.

Vertical Pod Autoscaler – This tool adjusts the CPU and memory reserves of your pods so that each application is exactly the right size. These adjustments help clusters use resources more appropriately and also frees up CPU processing and memory for your other pods.

Volume – A data directory. The volume can be accessed by containers within a pod. Volumes have a lifespan as long as its pod. As a result, volumes outlive containers running in the pod, and the data is preserved throughout container restarts.

Worker node – A Kubernetes node. See node. Kubernetes clusters consist of a worker machine set, also known as nodes. These nodes run the containerized apps. There’s at least one worker node in every cluster. Worker nodes contain the application’s workload pods. The mods and worker nodes in each cluster are managed by the control plane.

Workload – An app that runs on Kubernetes is a workload. It doesn’t matter whether the workload is just one or many components working together. Kubernetes runs the workload within a pod set. A pod is the set of containers running within the cluster. All your clusters represent the workload.

Last updated August 29, 2022

Modern businesses require increasingly complicated tech stacks to keep their teams up and running. What’s more, as businesses try to stay on top of the latest advancements, they wind up with interconnected layers of apps, hardware, and systems that become more and more interdependent on one another. Often, mission-critical technology ends up dependent on various connections, services, and infrastructure components.

This interweaving is difficult to avoid, but what matters most is that businesses take strides to understand it. With application dependency mapping, businesses can recognize how a seemingly simple change in one area could potentially have a ripple effect on the rest of their tech stack, helping them avoid unwanted downtime and complications.

Of course, there’s more than one way to go about application dependency mapping. So, let’s take a closer look at what it is, how to approach it, and where businesses should be looking to go from there.

What Is Application Dependency Mapping?

The purpose of application dependency mapping is to help businesses understand the complex connections between their various hardware, software, and system components. With more businesses using a hybrid collection of local and cloud-based services, finding the right way to approach application dependency mapping is crucial to maintaining uptime, functionality, and ease of use for internal teams.

The benefits of application dependency mapping are far-reaching, but it starts with the improved visibility and observability that these maps create. As a result of the right approach, businesses can unlock real-time notifications when network changes and problems occur, helping them react quickly. They can also speed up problem-solving by easing root cause analysis and other diagnostic processes.

Overall, application dependency mapping helps IT teams save time and money while improving project planning and execution. As a result, entire businesses benefit from reduced downtime and fewer instances of apps and services not functioning as planned. To unlock all of these benefits, you just need to find the right method for your tech stack.

What Are the Four Methods of Application Dependency Mapping?

As with most modern business challenges, there is more than one way to go about application dependency mapping. These four methods are considered the most widely used for Application Discovery and Dependency Mapping (ADDM), so let’s dive into how each one works along with the pros and cons they hold.

1. Sweep and Poll

Sweep and poll is considered the oldest method of application dependency mapping, and it’s fairly lightweight, meaning it won’t put a lot of strain on your IT resources to complete. This method begins with a “sweep” of your company network in which all IP addresses will be pinged. The automated process will work to gather as much information as possible on all devices pinged on the network, helping you understand what server those devices are operating on, along with information about the applications connected to it.

As you can imagine, the sweep and ping method is one of the fastest ways to generate a blueprint of all the devices your company needs to map together. It can also help you start to identify the interdependencies and get the hierarchy and connections in place. The biggest advantage of this method is that it’s so simple, but that’s also its biggest downfall. While you can get a headstart by pinging IPs, you’ll find that this method becomes less and less reliable in more complex environments.

In almost all cases, businesses using the sweep and poll method will want to go back and manually validate the interdependencies (and expand upon them) to ensure that their application dependency map is truly accurate and reliable.

2. Network Monitoring

Another way to start the application dependency mapping process is to analyze the network traffic patterns at the flow level or by capturing packets. This method is ideal for beginning to understand an unexplored system since it does not take any sort of pre-defined blueprint. However, it can be difficult to scale up. You’ll also need to watch for duplicate records.

One of the primary advantages of this method is that the network is monitored in real-time. As a result, you can track changes to your network as they occur, which can help you begin to see how certain applications are dependent on other components of your tech stack. Of course, this requires your IT team to monitor the results or review reports later on, both of which take substantial time and resources for complex environments.

3. Agent on Server

If the idea of monitoring traffic for real-time analysis appeals to you, another method you may pursue involves placing agents at every relevant app, server, or other connected technology. The purpose of these agents is to monitor the inflow and outflow of traffic, helping them identify when changes occur and, therefore, map dependencies across your tech stack.

The primary benefit of this method is that you can easily identify when multiple apps are being used by the same IP address, helping to improve the accuracy of your mapping efforts. However, because you will need to place multiple agents, the cost can quickly increase for more complicated environments.

4. Automated ADDM

For a done-for-you solution that minimizes the burden on your IT team, you might consider relying on an automated ADDM platform. These managed solutions offer a suite of tools that can speed up the discovery of tech stack components and map them together quickly, giving you a full blueprint without straining your internal team.

For businesses big and small, the best feature of ADDM is its ability to save you time and resources. However, there is an added and ongoing cost that comes along with using one of these platforms. Still, with the ability to schedule additional scans periodically, an ADDM platform can put you on the fast track to mapping dependencies and making sure your map remains accurate and up-to-date as changes occur to your infrastructure.

Application Mapping vs. Application Dependency Mapping

While most businesses recognize the need for application dependency mapping, many consider it one and the same as application mapping. However, these processes ultimately have different goals.

Application mapping is often the first step in application dependency mapping as it requires you to discover all applications on your network and the underlying infrastructure. The resulting application map helps you visualize your network along with a simple hierarchy (e.g., this app runs on this server, etc.).

Application dependency mapping takes the process even further. Once you have discovered all components of your tech stack, an application dependency map tasks your business with showing how they all connect. This means discovering the interdependencies that will demonstrate why changing the available resources in this database could negatively impact the functionality of a specific system.

Overall, if you’re looking to better understand your tech stack, reduce downtime, improve functionality, and prepare your team to effectively plan out successful projects without causing unexpected issues on your network, you are looking for an application dependency mapping solution.

Best Practices for ADDM

No matter which of the four methods you choose to utilize to complete your Application Discovery and Dependency Mapping (ADDM) project, it’s critical that you keep these best practices in mind to ensure the accuracy, efficiency, and reliability of your efforts.

Recognize All Types of Dependencies

Before diving into an ADDM project, your team must recognize that you won’t be able to discover all dependencies within the systems themselves, even if you devote endless time and energy trying to do so. This is because there are many types of dependencies that exist in business, including those relating to business cost and culture.

If you fail to recognize these “unseen” dependencies, your application dependency map (no matter how complete it is otherwise) will lead you astray the next time you go to plan a project or make a major change to business infrastructure. For that reason, all tech and non-tech dependencies need to be understood and considered going forward.

Actively Avoid Dependencies When Possible

By far, one of the easiest ways to simplify the application dependency mapping process is to actively work to avoid dependencies. While you will never be able to create an environment that’s void of dependencies, getting in the habit of thinking twice before creating additional dependencies is a smart practice.

As you go about this, remember that you should also choose the lesser of two evils. In other words, when dependency cannot be avoided, take strides to avoid a proprietary dependency that might lock you in with a specific vendor. Additionally, try to write agnostic, durable code when possible to avoid getting tied to a specific operating system or having to rework everything a few versions from now.

While all of this is certainly easier said than done, these are the habits you should begin to get your IT and development teams to follow as you move forward with application dependency mapping (and management).

Strive To Test Everything

Testing all dependencies and the underlying infrastructure is a necessary step to fully understanding how dependencies work and how you might alleviate some of them. Testing is also crucial to gaining insight into the performance of your environment, which can help you simplify, improve, and troubleshoot down the road.

The biggest challenge with testing everything is that third-party applications, such as cloud-based SaaS solutions, often limit what you can see and do when it comes to the underlying infrastructure. Identifying these limitations is yet another important step to help you decide where things should be changed or improved in the future.

Periodically Update Your Map

The single biggest mistake any business can make with application dependency mapping is treating it as an event instead of an ongoing activity. Things change fast in modern business environments. Failing to set up monitoring tools and schedule re-checks will mean that an application dependency map that took so long to create and perfect will rapidly deteriorate.

In general, the more complex your environment is, the more often you should plan to re-check your application dependency map and look for new components and dependencies on your network. You should also make an effort to do so after each new addition, such as when you implement a new application.

While it will take time and resources to maintain your application dependency map, doing so will allow you to unlock and uphold all of the key benefits of this process, like better observability, performance improvements, smoother IT project planning, and reduced downtime across the board.

Conclusion

Application dependency mapping is an ongoing investment of time and resources, but your business has some flexibility when it comes to choosing the path forward. With four methods to pick from, including the option to invest in an automated platform that can do most of the legwork for you, your company can easily get on the path to observability and reap all of the rewards that come along with it.

What matters most as you pursue an application dependency map is that you follow some established best practices. Namely, that means understanding the many technical and non-technical dependencies that will impact your map and how you use it. Additionally, you should seek to improve your processes going forward to help minimize new dependencies and phase out those that currently exist.

All in all, pursuing an application dependency map is practically a must-do for modern businesses to understand their increasingly complex environments. Now, it’s just a matter of deciding where to start.

There are many types of data compatible with SQL Server, and it’s important to understand what they are to avoid issues with non-compatible data types. Understanding the compatible data types is also fundamental to understanding the data type precedence, which determines what type of data will result when working with objects of two different types.

In this guide, we’ll go through all of the data types SQL Server supports, along with the process of defining custom data types using Transact-SQL or the Microsoft .NET Framework.

Contents

- What Is SQL Server?

- Why Are Data Types Important?

- What Are the Different Data Categories?

- Defining Custom Data Types

- Choosing the Right Data Type

What Is SQL Server?

Before we dive into the many data types SQL Server supports, here’s a quick recap on what SQL Server is. Microsoft developed SQL Server to serve as a relational database management system (RDBMS). SQL Server utilizes SQL, the standard language for using relational databases of all kinds.

Microsoft’s SQL Server is no longer exclusive to the Windows environment and is now available on Linux, which is great news for those interested in using SQL Server. Additionally, Microsoft’s cloud platform, known as Azure, supports SQL Server. So, if you need a place to host it, there’s no better place to look than the native solution.

Microsoft markets it as “the cloud that knows SQL Server best,” and Azure SQL Server indeed benefits from seamless integration, simplicity, and reliability since both the server and cloud infrastructure are developed and maintained by the same company.

Regardless of where you host SQL Server, though, it’s critical to note SQL Server uses a slightly different SQL language. Microsoft has developed Transact-SQL (T-SQL), which is very similar to standard SQL but defines a set of proprietary concepts necessary to program SQL Server.

If you are familiar with SQL, using Transact-SQL will not be difficult, and you’ll be able to utilize SQL Server with ease. But another critical aspect of effectively using SQL Server is understanding all of the data types it supports.

Why Are Data Types Important?

The wrong data type can lead to issues with database performance, query optimization, and data truncation. These problems are often first realized by the development team as they are the ones tracking speed and performance. Still, problems can trickle down to the entire organization, causing data integrity issues and other serious challenges.

If you’re new to SQL Server, the sheer number of data types may be overwhelming. However, they are neatly organized and well-documented, making it a bit easier to find what you need as long as you understand what type of data you are planning to store. Of course, while you can reference as you go along, gaining knowledge of SQL Server data types is paramount to efficiency and optimization in the long haul. Once you dive in, you’ll see that there is some overlap, and knowing when to choose float point over decimal or opt for variable-length over fixed is only possible if you fully understand all of your options.

What Are the Different Data Categories?

Below is a look at each category of data within SQL Server, along with all of the data types that fit within each one.

Exact Numerics

When using an exact numeric data type, it’s important to understand your options so that you can select the smallest data type suitable for your use case. It’s also necessary to choose the data type corresponding to the kind of numeral you’re storing—like money or smallmoney for currencies.

- tinyint: The smallest integer storage type, capable of storing numbers between 0 and 255.

- smallint: Integer storage type with twice the size, up to 2 bytes.

- int: Integer storage type with up to 4 bytes of storage.

- bigint: The largest integer storage type capable of holding up to 8 bytes of data.

- decimal and numeric: These synonymous terms refer to the same data type, which is characterized by its fixed scale and precision.

- bit: This data type always has a value of 1, 0, or NULL. You can convert true/false data to a bit, where 1 equals True, and 0 equals False.

- smallmoney: This data type represents monetary values and allows up to two decimals.

- money: This is another monetary data type but allows up to four decimals.

Unicode Character Strings

If you’re unfamiliar with Unicode, it is a universal standard that assigns a unique number to every character, allowing written text to be encoded and represented consistently. For example, “hey” in Unicode would be broken down as follows: U+0048 (“H”), U+0065 (“E”), and U+0059 (“Y”).

SQL Server supports the full range of Unicode character data using these character strings. They can be fixed or variable.

- nchar: Fixed in size, recommended for use when the data sizes in a column are consistent.

- nvarchar: Variable in size, recommended for use when data sizes in a column vary considerably.

- ntext: Scheduled for removal in future versions of SQL Server, designed as a variable-length data type for Unicode. Microsoft recommends nvarchar(max) instead.

Approximate Numerics

When numeric data cannot be represented exactly, it is referred to as “floating point” numeric data, and you would use approximate-number data types to store it. With floating-point data types, the number is written using scientific notation, so 825,000 would be stored as 8.5 x 105.

Floating-point numbers can be incredibly large or incredibly small. Both floating-point and decimal data types can store a number with a decimal—the difference is that floats require less storage space while decimals are more precise. SQL Server supports two kinds of approximate numerics with the float and real data types.

- float: Double precision floating number, which equates to 8 bytes or 64 bits.

real: Single precision floating number, which equates to 4 bytes or 32 bits.

Character Strings

Character strings have a self-explanatory name: These data types are used to store characters. They can be fixed or variable in size.

- char: Fixed-size string data that uses a static memory location. Ideal when you know the length of the string and all strings in a column will be the same

- varchar: Variable-sized string data that uses a dynamic memory location. Use if you are unsure of the length of the string or when the length of strings in a column will vary considerably.

- text: Scheduled for removal in future versions of SQL Server, designed as a variable-length data type for non-Unicode data. Microsoft recommends replacing it with varchar (max).

Binary Strings

Binary data types support either fixed or variable strings of data. The difference between character strings and binary strings is the data they contain: Character strings typically store text but could also store numbers or symbols. Binary strings typically store non-traditional data in the form of bytes, such as pictures.

- binary: Fixed length, ideal to use when the data sizes in a column are consistent.

- varbinary: Variable length is ideal when data sizes in a column vary considerably.

- image: Scheduled for removal in future versions of SQL Server, designed to store variable-length binary data. Microsoft recommends replacing it with varbinary (max).

Date and Time

These data types are explicitly designed for storing dates and times. Some support timezone awareness and others do not. When dealing with dates and times, it’s crucial to choose a data type that keeps entries consistent in format and select a data type that’s flexible enough to support the level of detail you need (i.e., time of day, timezone, etc.).

- date: Defines the date. The default format is YYYY-MM-DD but can be formatted in over 20 different ways, including DMY, DYM, and YMD.

- datetimeoffset: Defines a date and time of day. This data type is timezone aware.

- datetime2: Extension of the above data type with an optional fractional seconds precision.

- datetime: Similar to datetime2 but with less fractional seconds precision.

- smalldatetime: Defines a date and time of day, but seconds are always zero.

- time: Defines a time of day but without timezone awareness.

Other Data Types

Additional data types exist in SQL Server, but they don’t quite fit into any of the above categories. For that reason, these data types simply exist under “other.” The other data types include the following:

- rowversion: Used for version stamping rows within a table. A simple incrementing number that does not preserve date or time.

- hierarchyid: Variable length system data type used to represent the position in a hierarchy.

- uniqueidentifier: A globally unique identifier (GUID) capable of storing up to 16 bytes.

- sql_variant: Stores various data types supported by SQL. The most important part of sql_variant is that it is variable. For example, one sql_variant column could contain an int in one row and a binary value in another. In order for arithmetic operations, like SUM or PRODUCT to be applied, the type must be first cast to something that works with that operation.

- xml: Stores XML data.

- Spatial Geometry Types: Represents data in a flat coordinate system.

- Spatial Geography Types: Represents data in a round-earth coordinate system.

- table: Special data type used to store a result for processing at some point later.

Defining Custom Data Types