LogicMonitor uses the JMX collection method to collect performance and configuration data from Java applications using exposed MBeans (Managed Beans). These MBeans represent manageable resources in the Java Virtual Machine (JVM), including memory, thread pools, and application-specific metrics.

To collect data using the JMX collection method, configure a datapoint that references a specific MBean and one of its attributes. Specify the following:

- MBean ObjectName: A domain and one or more key-value properties. For example, the domain java.lang with the property type=Memory (java.lang:type=Memory).

- Attribute: The attribute to collect from the MBean.

Each MBean exposes one or more attributes that can be queried by name. These attributes return one of the following:

- A primitive Java data type (for example, int, long, double, or String)

- An array of data (for example, an array of primitives or nested objects)

- A hash of data (for example, key-value pairs, including nested structures or attribute sets)

LogicMonitor supports data collection for all of these JMX attribute types, provided the attribute expression resolves to a primitive value.

Note:

LogicMonitor does not support attribute names that contain dots (periods) unless the dots navigate nested structures or are escaped as described in Troubleshooting JMX Data Collection. For example:

- rep.Container is invalid if it is a flat attribute name.

- MemoryUsage.used is valid if MemoryUsage is a composite object and used is a field inside it.

LogicMonitor uses the ObjectName and attribute configured in the JMX datapoint to identify and retrieve the correct value during each collection cycle.

For more technical details on MBeans and the JMX architecture, see Oracle’s JMX documentation.

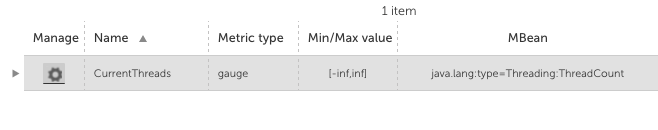

Simple Attribute Example

If the attribute is a top-level primitive:

- MBean ObjectName: java.lang:type=Threading

- MBean Attribute: ThreadCount

LogicMonitor collects the current number of live threads (daemon and non-daemon) in the JVM.
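As an illustration of what this datapoint resolves to, here is a minimal Java sketch that reads the same attribute through the standard javax.management API. It assumes local access to the JVM's own platform MBean server; a Collector would instead connect to a remote JMX endpoint, and this is not LogicMonitor's implementation. The class name ThreadCountReader is hypothetical.

```java
import java.lang.management.ManagementFactory;
import javax.management.JMException;
import javax.management.MBeanServer;
import javax.management.ObjectName;

class ThreadCountReader {
    // Reads ThreadCount from the java.lang:type=Threading MBean of the JVM running this code.
    static int readThreadCount() {
        try {
            MBeanServer server = ManagementFactory.getPlatformMBeanServer();
            ObjectName name = new ObjectName("java.lang:type=Threading");
            // ThreadCount is a top-level primitive (an int, boxed as Integer), so no path navigation is needed.
            return (Integer) server.getAttribute(name, "ThreadCount");
        } catch (JMException e) {
            throw new IllegalStateException("Could not read ThreadCount", e);
        }
    }

    public static void main(String[] args) {
        System.out.println("ThreadCount = " + readThreadCount());
    }
}
```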

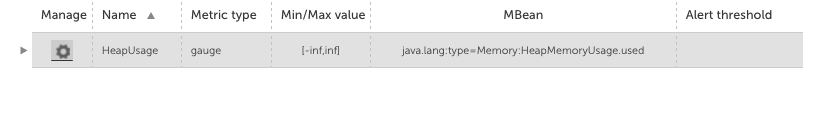

Nested Attribute Example

If the attribute is part of a composite or nested object, use the dot/period separator as follows:

- MBean ObjectName: java.lang:type=Memory

- MBean Attribute: HeapMemoryUsage.used

LogicMonitor collects the amount of heap memory used, in bytes.
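The following sketch shows the same dot navigation in Java, again assuming the local platform MBean server rather than a remote endpoint (HeapUsedReader is a hypothetical name). Through the MBean server, HeapMemoryUsage is delivered as CompositeData, so the part after the dot is looked up as a field of that composite value.

```java
import java.lang.management.ManagementFactory;
import javax.management.JMException;
import javax.management.MBeanServer;
import javax.management.ObjectName;
import javax.management.openmbean.CompositeData;

class HeapUsedReader {
    // Resolves "HeapMemoryUsage.used" against java.lang:type=Memory in the local JVM.
    static long readHeapUsed() {
        try {
            MBeanServer server = ManagementFactory.getPlatformMBeanServer();
            ObjectName name = new ObjectName("java.lang:type=Memory");
            // The attribute arrives as CompositeData rather than as a MemoryUsage object.
            CompositeData usage = (CompositeData) server.getAttribute(name, "HeapMemoryUsage");
            // The segment after the dot ("used") names a field inside that composite value.
            return (Long) usage.get("used");
        } catch (JMException e) {
            throw new IllegalStateException("Could not read HeapMemoryUsage.used", e);
        }
    }

    public static void main(String[] args) {
        System.out.println("Heap used (bytes) = " + readHeapUsed());
    }
}
```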

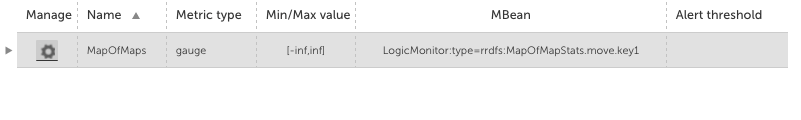

Multi-Level Selector Example

To collect data from a map or nested structure with indexed values:

- MBean ObjectName: LogicMonitor:type=rrdfs

- MBean Attribute: QueueMetrics.move.key1

LogicMonitor retrieves the value stored under key1 in the map at index move of the MBean's QueueMetrics attribute.

CompositeData and Map Support

Some JMX MBean attributes return structured data such as:

- CompositeData: A group of named values, like a mini object or dictionary.

- Map: A collection of key-value pairs.

LogicMonitor supports collecting values from both.

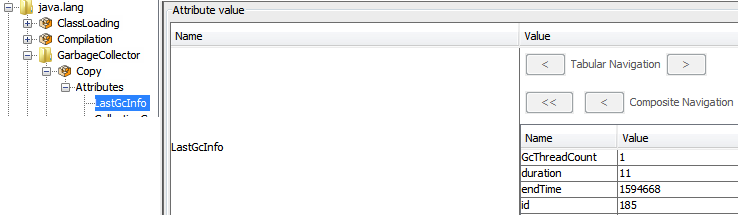

Accessing CompositeData Example

A CompositeData attribute is like a box of related values, where each value has a name (field). To collect a specific field from the structure, use a dot (.) separator.

- MBean: java.lang:type=GarbageCollector,name=Copy

- Attribute: LastGcInfo

- Value Type: CompositeData

To collect the number of GC threads, use: LastGcInfo.GcThreadCount

Note: Maps in JMX behave similarly to CompositeData, but instead of fixed fields, values are retrieved using a key.
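A minimal sketch of one navigation step: a segment is looked up as a field name when the current value is CompositeData and as a key when it is a java.util.Map. Because the Copy collector's LastGcInfo only exists on some JVM configurations, the example builds a hypothetical stand-in CompositeData with the standard javax.management.openmbean classes; the class and type names are illustrative, not LogicMonitor code.

```java
import java.util.Map;
import javax.management.openmbean.CompositeData;
import javax.management.openmbean.CompositeDataSupport;
import javax.management.openmbean.CompositeType;
import javax.management.openmbean.OpenDataException;
import javax.management.openmbean.OpenType;
import javax.management.openmbean.SimpleType;

class CompositeOrMapStep {
    // One path step: a field name for CompositeData, a key for a Map.
    static Object step(Object value, String segment) {
        if (value instanceof CompositeData) {
            return ((CompositeData) value).get(segment);
        }
        if (value instanceof Map) {
            return ((Map<?, ?>) value).get(segment);
        }
        throw new IllegalArgumentException("Cannot navigate into " + value);
    }

    // Hypothetical stand-in for LastGcInfo holding a single GcThreadCount field.
    static CompositeData fakeLastGcInfo(int gcThreadCount) {
        try {
            CompositeType type = new CompositeType(
                "FakeGcInfo", "Hypothetical stand-in for LastGcInfo",
                new String[] {"GcThreadCount"}, new String[] {"Number of GC threads"},
                new OpenType<?>[] {SimpleType.INTEGER});
            return new CompositeDataSupport(type, new String[] {"GcThreadCount"}, new Object[] {gcThreadCount});
        } catch (OpenDataException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("LastGcInfo.GcThreadCount = " + step(fakeLastGcInfo(4), "GcThreadCount"));
        System.out.println("map.key1 = " + step(Map.of("key1", 7L), "key1"));
    }
}
```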

TabularData Support

Some MBean attributes return data in the form of TabularData, a structure similar to a table, with rows and columns. LogicMonitor can extract specific values from these tables.

A TabularData object typically consists of:

- Index columns: Used to uniquely identify each row (like primary keys in a database)

- Value columns: Contain the actual data you want to collect

You can access a value by specifying:

- The row index (based on key columns)

- The column name for the value you want

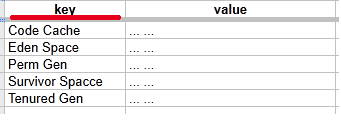

Single-Index TabularData Example

The MBean java.lang:type=GarbageCollector,name=Copy has an attribute LastGcInfo. One of its child values, memoryUsageAfterGc, is a TabularData.

The table has two columns: key and value. The key column indexes the table, so you can uniquely locate a row by specifying an index value.

For example, key="Code Cache" returns the first row.

To retrieve the value from the value column of the row indexed by the key "Eden Space", use the expression: LastGcInfo.memoryUsageAfterGc.Eden Space.value

In this expression, "Eden Space" is the key used to identify the specific row, and value is the column from which the data will be collected.

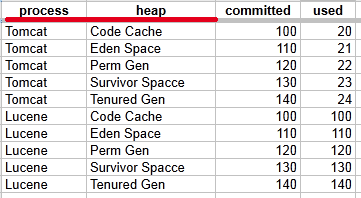

Multi-Index TabularData Example

Some tables use multiple index columns to identify rows.

This TabularData structure has four columns, with process and heap serving as index columns. A unique row is identified by the combination of these index values.

To retrieve the value from the committed column in the row where process=Tomcat and heap=Perm Gen, use the expression: LastGcInfo.memoryUsageAfterGc.Tomcat,Perm Gen.committed

Here, Tomcat,Perm Gen specifies the row, and committed is the column containing the desired value.
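The row-then-column lookup can be sketched with TabularData.get(Object[]), which takes the index column values in order and returns the matching row as CompositeData. The sketch assumes that comma-separated values in the expression map to the index columns in order (so index values that themselves contain commas are not handled), and the tables it builds are hypothetical, with String index columns followed by Long value columns.

```java
import javax.management.openmbean.CompositeData;
import javax.management.openmbean.CompositeDataSupport;
import javax.management.openmbean.CompositeType;
import javax.management.openmbean.OpenDataException;
import javax.management.openmbean.OpenType;
import javax.management.openmbean.SimpleType;
import javax.management.openmbean.TabularData;
import javax.management.openmbean.TabularDataSupport;
import javax.management.openmbean.TabularType;

class TabularLookup {
    // Resolves "<row index>.<column>": the row index is one or more comma-separated index values.
    static Object lookup(TabularData table, String rowIndex, String column) {
        Object[] key = rowIndex.split(",");
        CompositeData row = table.get(key);
        if (row == null) {
            throw new IllegalArgumentException("No row with index " + rowIndex);
        }
        return row.get(column);
    }

    // Builds a hypothetical table: the leading index columns hold Strings, the remaining columns hold Longs.
    static TabularData table(String[] columns, String[] indexColumns, Object[][] rows) {
        try {
            OpenType<?>[] types = new OpenType<?>[columns.length];
            for (int i = 0; i < columns.length; i++) {
                types[i] = i < indexColumns.length ? SimpleType.STRING : SimpleType.LONG;
            }
            CompositeType rowType = new CompositeType("Row", "Table row", columns, columns, types);
            TabularType tableType = new TabularType("Table", "Hypothetical table", rowType, indexColumns);
            TabularDataSupport data = new TabularDataSupport(tableType);
            for (Object[] row : rows) {
                data.put(new CompositeDataSupport(rowType, columns, row));
            }
            return data;
        } catch (OpenDataException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

With a single index column, lookup(table, "Eden Space", "value") returns the value column of the Eden Space row; with process and heap as index columns, lookup(table, "Tomcat,Perm Gen", "committed") returns the committed column of that row.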

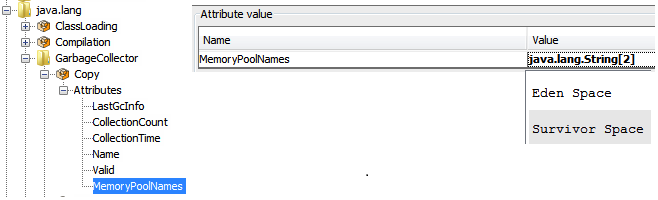

Array or List Support

Some MBean attributes return arrays or lists of values. LogicMonitor supports collecting data from these array or list values using index-based access.

For example, the MBean java.lang:type=GarbageCollector,name=Copy has an attribute MemoryPoolNames of type String[].

To collect the first element of this array, use the expression MemoryPoolNames.0, where 0 is the array index (0-based).

You can access other array elements by changing the index as follows:

- MemoryPoolNames.0 = "Eden Space"

- MemoryPoolNames.1 = "Survivor Space"

The same rule applies if the attribute is a Java List. Use the same dot and index notation.
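A sketch of index-based access, assuming the numeric segment is parsed as a 0-based int and applied to either a Java array or a java.util.List; java.lang.reflect.Array covers arrays of primitives as well as objects. IndexStep is a hypothetical name, and the main method reads a real MemoryPoolNames value from the local JVM's first garbage collector MXBean (pool names vary by collector).

```java
import java.lang.management.ManagementFactory;
import java.lang.reflect.Array;
import java.util.List;

class IndexStep {
    // Resolves a numeric segment such as the "0" in "MemoryPoolNames.0" (0-based).
    static Object step(Object value, String segment) {
        int index = Integer.parseInt(segment);
        if (value != null && value.getClass().isArray()) {
            // java.lang.reflect.Array handles primitive and object arrays alike.
            return Array.get(value, index);
        }
        if (value instanceof List) {
            return ((List<?>) value).get(index);
        }
        throw new IllegalArgumentException("Not an array or List: " + value);
    }

    public static void main(String[] args) {
        // MemoryPoolNames of the first garbage collector in the running JVM.
        String[] pools = ManagementFactory.getGarbageCollectorMXBeans().get(0).getMemoryPoolNames();
        System.out.println("MemoryPoolNames.0 = " + step(pools, "0"));
    }
}
```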

To enable JMX collection, including for array or list attributes:

- Make sure your Java application exposes JMX metrics.

- Confirm that the username and password for JMX access are correctly set as the device properties jmx.user and jmx.pass.

These credentials allow the Collector to connect to the JMX endpoint and retrieve the attribute data, including elements in arrays or lists.
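For reference, this is how credentials such as jmx.user and jmx.pass are typically passed to a standard JMX client: as a String[] {user, password} under the JMXConnector.CREDENTIALS environment key. The RMI service URL format and the host and port arguments are assumptions about how the target application exposes JMX; this does not describe the Collector's internals, and JmxAuthConnect is a hypothetical name.

```java
import java.io.IOException;
import java.util.HashMap;
import java.util.Map;
import javax.management.remote.JMXConnector;
import javax.management.remote.JMXConnectorFactory;
import javax.management.remote.JMXServiceURL;

class JmxAuthConnect {
    // Environment map carrying credentials; the values would come from jmx.user and jmx.pass.
    static Map<String, Object> environment(String user, String pass) {
        Map<String, Object> env = new HashMap<>();
        env.put(JMXConnector.CREDENTIALS, new String[] {user, pass});
        return env;
    }

    // Opens an authenticated connection; assumes the common RMI registry URL layout.
    static JMXConnector connect(String host, int port, String user, String pass) throws IOException {
        JMXServiceURL url = new JMXServiceURL(
            "service:jmx:rmi:///jndi/rmi://" + host + ":" + port + "/jmxrmi");
        return JMXConnectorFactory.connect(url, environment(user, pass));
    }
}
```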

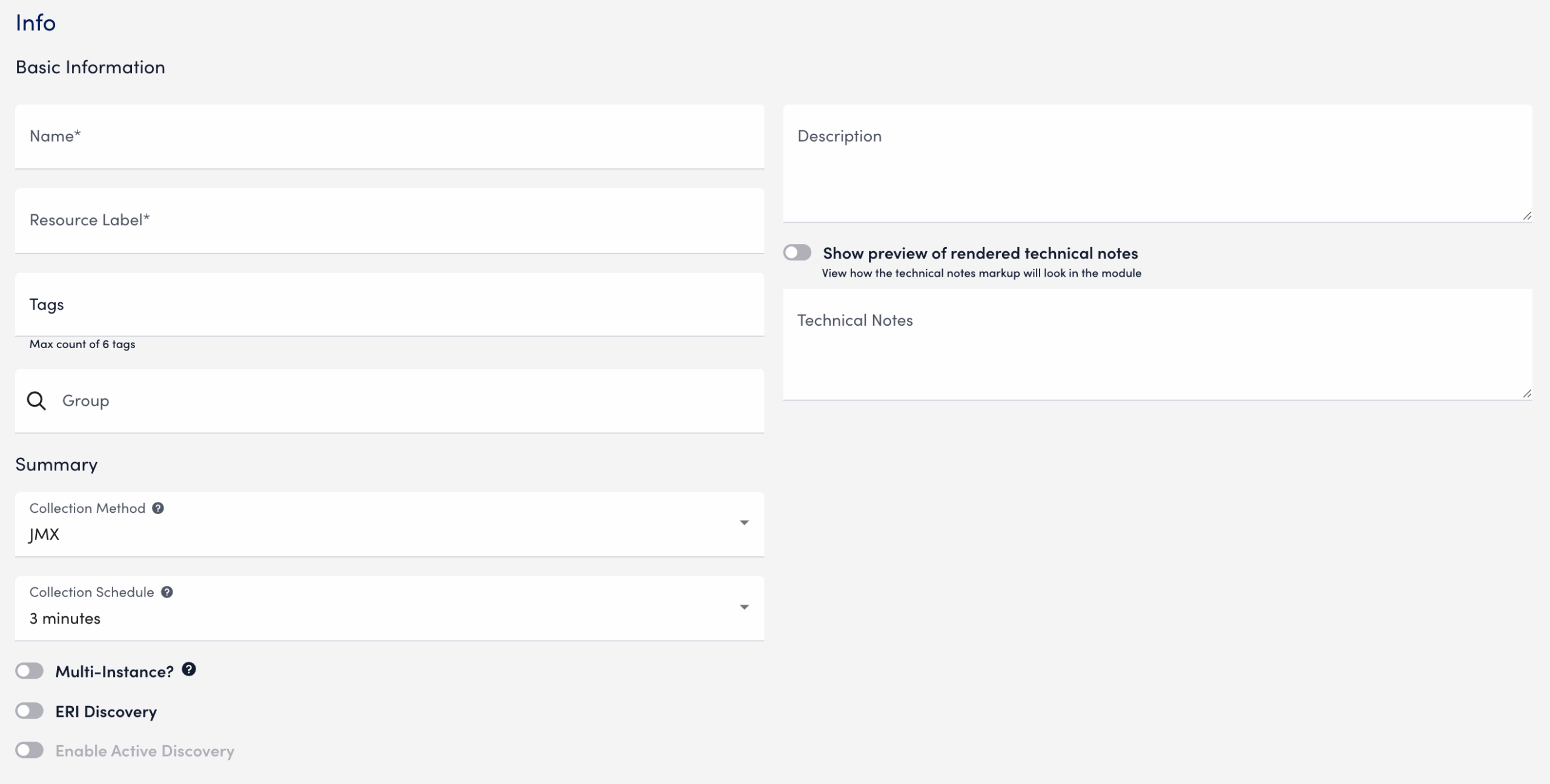

Configuring a Datapoint using the JMX Collection Method

- In LogicMonitor, navigate to Modules. Add a new DataSource or open an existing module to add a datapoint for JMX collection. For more information, see Custom Module Creation or Modules Management in the product documentation.

- In the Collection Method field, select "JMX".

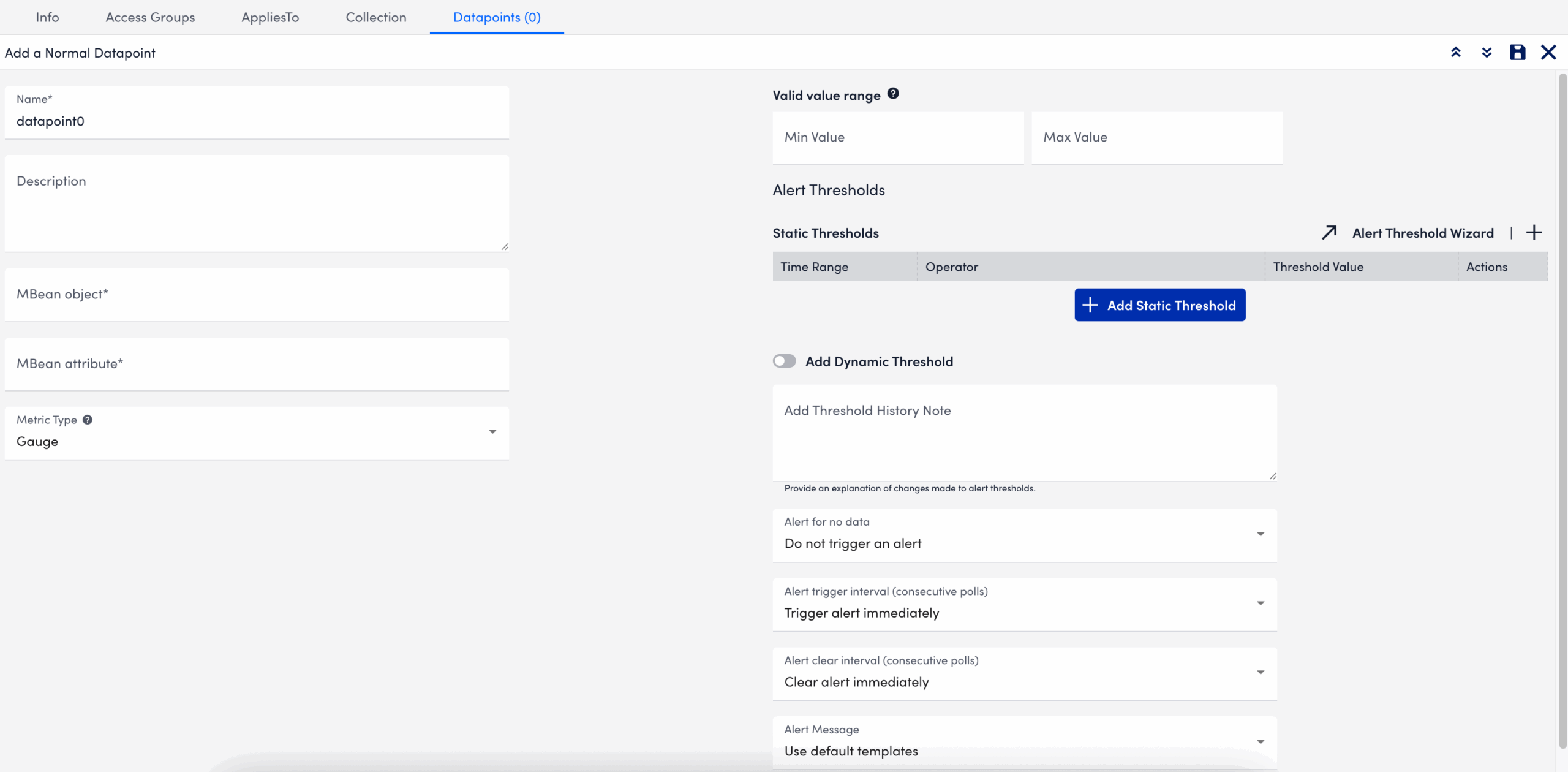

- Select Add a Normal Datapoint.

- In the Name field, enter a name for the datapoint.

- In the Description field, enter a description.

- In the MBean object field, enter the MBean path, including the domain and properties.

- In the MBean attribute field, enter the specific attribute or nested field to collect.

- In the Metric Type field, select the metric type for the response.

- Configure any additional settings, if applicable.

- Select Save.

The datapoint is saved for the module and you can configure additional settings for the module as needed. For more information, see Custom Module Creation or Modules Management.

Troubleshooting JMX Data Collection

The Collector does not support first-level JMX attributes whose names contain dots (.) unless the dots are escaped. By default, the Collector treats dots as path separators for accessing nested data. If a dot is simply part of the attribute's name and does not indicate a hierarchy, it can cause:

- A NullPointerException in the JMX debug window

- NaN (Not a Number) values in the Poll Now results

- Failure to collect data correctly

Mitigating JMX Data Collection Issues

To prevent data collection errors with attributes that include dots, do the following:

- Identify the attribute name in your MBean that contains dots. For example: jira-software.max.user.count

- Determine whether the dots are part of the attribute name or indicate a nested path.

- If the dots are part of the attribute name, escape each dot with a backslash (\.). For example: jira-software\.max\.user\.count

- If the dots indicate navigation inside a structure, do not escape them.

- Enter the attribute in the LogicModule or JMX debug window, using the escaped form only when the dots are part of the attribute name.

- Verify the data collection using the Poll Now feature or the JMX debug window.

Attribute Interpretation Examples

| Attribute Format | Interpreted in Collector Code as |
| --- | --- |
| jira-software.max.user.count | jira-software, max, user, count (incorrect if flat attribute) |
| jira-software\.max\.user\.count | jira-software.max.user.count (correct interpretation) |
| jira-software\.max.user\.count | jira-software.max, user.count |
| jira-software.max.user\.count | jira-software, max, user.count |
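The splitting rules in the table above can be reproduced with a small escape-aware tokenizer: an unescaped dot ends a path segment, and a backslash-dot pair becomes a literal dot inside the segment. This is a sketch that matches the table's interpretations, not the Collector's actual code; AttributePathSplitter is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

class AttributePathSplitter {
    // Splits an attribute expression at unescaped dots; "\." yields a literal dot in the segment.
    // A backslash that is not followed by a dot is kept as an ordinary character.
    static List<String> split(String expression) {
        List<String> segments = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        for (int i = 0; i < expression.length(); i++) {
            char c = expression.charAt(i);
            if (c == '\\' && i + 1 < expression.length() && expression.charAt(i + 1) == '.') {
                current.append('.');
                i++; // consume the escaped dot
            } else if (c == '.') {
                segments.add(current.toString());
                current.setLength(0);
            } else {
                current.append(c);
            }
        }
        segments.add(current.toString());
        return segments;
    }

    public static void main(String[] args) {
        System.out.println(split("jira-software\\.max.user\\.count")); // [jira-software.max, user.count]
    }
}
```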

Introduction

Generally, the pre-defined collection methods such as SNMP, WMI, WEBPAGE, etc. are sufficient for extending LogicMonitor with your own custom DataSources. However, in some cases you may need to use the SCRIPT mechanism to retrieve performance metrics from hard-to-reach places. A few common use cases for the SCRIPT collection method include:

- Executing an arbitrary program and capturing its output

- Using an HTTP API that requires session-based authentication prior to polling

- Aggregating data from multiple SNMP nodes or WMI classes into a single DataSource

- Measuring the execution time or exit code of a process

Script Collection Approach

Creating custom monitoring via a script is straightforward. Here’s a high-level overview of how it works:

- Write code that retrieves the numeric metrics you're interested in. If you have text-based monitoring data, you might consider a Scripted EventSource.

- Print the metrics to standard output, typically as key-value pairs (e.g. name = value) with one per line.

- Create a datapoint corresponding to each metric, and use a datapoint post-processor to capture the value for each key.

- Set alert thresholds and/or generate graphs based on the datapoints.

Script Collection Modes

Script-based data collection can operate in either an “atomic” or “aggregate” mode. We refer to these modes as the collection types SCRIPT and BATCHSCRIPT respectively.

In standard SCRIPT mode, the collection script runs once for each DataSource instance at each collection interval. For example, for a multi-instance DataSource that has discovered five instances, the collection script runs five times at every collection interval. Each of these data collection "tasks" is independent of the others, so the collection script would provide output along the lines of:

key1: value1

key2: value2

key3: value3

Three datapoints would then need to be created, one for each key-value pair.

With BATCHSCRIPT mode, the collection script is run only once per collection interval, regardless of how many instances have been discovered. This is more efficient, especially when you have many instances from which you need to collect data. In this case, the collection script needs to provide output that looks like:

instance1.key1: value1

instance1.key2: value2

instance1.key3: value3

instance2.key1: value1

instance2.key2: value2

instance2.key3: value3

As with SCRIPT collection, three datapoints would need to be created to collect the output values.
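A minimal Java sketch of the two output shapes above: in SCRIPT mode each run prints bare keys, while in BATCHSCRIPT mode one run prints every instance's keys prefixed with the instance name. The ": " separator follows the examples above, and the class and method names are hypothetical. In a real DataSource the script would print this text to standard output.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

class ScriptOutput {
    // SCRIPT mode: the script runs once per instance, so keys carry no instance prefix.
    static String scriptOutput(Map<String, ?> metrics) {
        StringJoiner out = new StringJoiner("\n");
        metrics.forEach((key, value) -> out.add(key + ": " + value));
        return out.toString();
    }

    // BATCHSCRIPT mode: one run covers every instance, so each key is prefixed with "<instance>.".
    static String batchOutput(Map<String, ? extends Map<String, ?>> byInstance) {
        StringJoiner out = new StringJoiner("\n");
        byInstance.forEach((instance, metrics) ->
            metrics.forEach((key, value) -> out.add(instance + "." + key + ": " + value)));
        return out.toString();
    }

    public static void main(String[] args) {
        Map<String, Integer> metrics = new LinkedHashMap<>();
        metrics.put("key1", 1);
        metrics.put("key2", 2);
        metrics.put("key3", 3);
        Map<String, Map<String, Integer>> byInstance = new LinkedHashMap<>();
        byInstance.put("instance1", metrics);
        byInstance.put("instance2", metrics);
        System.out.println(scriptOutput(metrics));
        System.out.println(batchOutput(byInstance));
    }
}
```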

Script Collection Types

LogicMonitor offers support for three types of script-based DataSources: embedded Groovy, embedded PowerShell, and external. Each type has advantages and disadvantages depending on your environment:

- Embedded Groovy scripting: cross-platform compatibility across all Collectors, with access to a broad range of external classes for extended functionality

- Embedded PowerShell scripting: available only on Windows Collectors, but allows for collection from systems that expose data via PowerShell cmdlets

- External Scripting: typically limited to a particular Collector OS, but the external script can be written in whichever language you prefer or you can run a binary directly

Overview

The BatchScript Data Collection method is ideal for DataSources that:

- Will be collecting data from a large number of instances via a script, or

- Will be collecting data from a device that doesn’t support requests for data from a single instance.

The Script Data Collection method can also collect data via script; however, it polls data separately for each discovered instance. For DataSources that collect across a large number of instances, this can be inefficient and place too much load on the device being monitored. For devices that don't support requests for data from a single instance, unnecessary complexity must be introduced to return data per instance. The BatchScript Data Collection method solves these issues by collecting data for multiple instances at once instead of per instance.

Note:

- Datapoint interpretation methods are limited to multi-line key-value pairs and JSON for this collection method. For more information on datapoint interpretation methods, see Normal Datapoints.

- An instance's WILDVALUE cannot contain the following characters: colon (:), pound sign (#), backslash (\), or space. Replace these characters with an underscore or dash in the WILDVALUE in both Active Discovery and collection.
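A sketch of that replacement, assuming an underscore is the chosen substitute; applying the same function in both Active Discovery and collection keeps the WILDVALUEs consistent between the two. WildValueSanitizer is a hypothetical name.

```java
class WildValueSanitizer {
    // Replaces ':', '#', '\' and space with '_' (a dash would work equally well).
    static String sanitize(String raw) {
        StringBuilder out = new StringBuilder(raw.length());
        for (char c : raw.toCharArray()) {
            boolean invalid = c == ':' || c == '#' || c == '\\' || c == ' ';
            out.append(invalid ? '_' : c);
        }
        return out.toString();
    }
}
```

For example, a raw instance identifier of C:\Program Files#1 becomes C__Program_Files_1.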

How the BatchScript Collector Works

Similar to the script collector, the batchscript collector executes the designated script (embedded or uploaded) and captures its output from the program's stdout. If the program finishes correctly (exit status code 0), the post-processing methods are applied to the output to extract values for the DataSource's datapoints, the same as with other collectors.

Output Format

The output of the script should be either JSON or line-based.

Line-based output needs to be in the following format:

instance1.key1: value11

instance1.key2: value12

instance2.key1: value21

instance2.key2: value22

JSON output needs to be in the following format:

{

data: {

instance1: {

values: {

"key1": value11,

"key2": value12

}

},

instance2: {

values: {

"key1": value21,

"key2": value22

}

}

}

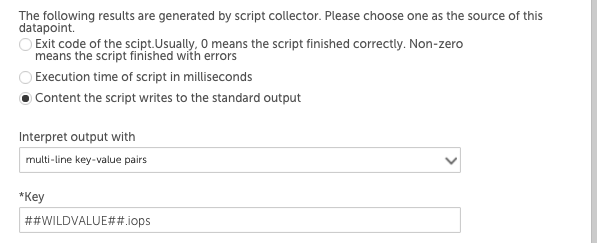

}Since the BatchScript Data Collection method is collecting datapoint information for multiple instances at once, the ##WILDVALUE## token needs to be used in each datapoint definition to pass instance name. “NoData” will be returned if your WILDVALUE contains the invalid characters named earlier in this support article.

Using the line-based output above, the datapoint definitions should use the multi-line key-value pairs post-processing method, with the following keys:

- ##WILDVALUE##.key1

- ##WILDVALUE##.key2

Using the JSON output above, the datapoint definitions should use the JSON/BSON object post-processing method, with the following JSON paths:

- data.##WILDVALUE##.values.key1

- data.##WILDVALUE##.values.key2
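To make the ##WILDVALUE## substitution for line-based output concrete, this sketch parses the output into a key map and resolves a datapoint key template for one instance. It assumes each line is split at its first ':' or '=', and it uses the disk1 and disk2 instance names from the example that follows with a hypothetical iops key; it is not the Collector's post-processor.

```java
import java.util.HashMap;
import java.util.Map;

class BatchKeyLookup {
    // Parses "key: value" or "key=value" lines, splitting each line at its first ':' or '='.
    static Map<String, String> parse(String output) {
        Map<String, String> values = new HashMap<>();
        for (String line : output.split("\\R")) {
            int sep = firstSeparator(line);
            if (sep > 0) {
                values.put(line.substring(0, sep).trim(), line.substring(sep + 1).trim());
            }
        }
        return values;
    }

    private static int firstSeparator(String line) {
        int colon = line.indexOf(':');
        int equals = line.indexOf('=');
        if (colon < 0) return equals;
        if (equals < 0) return colon;
        return Math.min(colon, equals);
    }

    // Substitutes the instance's wildvalue into a key such as "##WILDVALUE##.iops", then looks it up.
    static Double valueFor(Map<String, String> values, String keyTemplate, String wildValue) {
        String raw = values.get(keyTemplate.replace("##WILDVALUE##", wildValue));
        return raw == null ? null : Double.valueOf(raw);
    }
}
```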

BatchScript Data Collection Example

If a script generates the following output:

Then the IOPS datapoint definition may use the key-value pair post processing method like this:

The ##WILDVALUE## token would be replaced with disk1 and then disk2, so this datapoint would return the IOPS values for each instance. The throughput datapoint definition would have ‘##WILDVALUE##.throughput’ in the Key field.

BatchScript Retrieve Wild Values Example

Wild values can be retrieved in the collection script:

def listOfWildValues = datasourceinstanceProps.values().collect { it.wildvalue }datasourceinstanceProps.each { instance, instanceProperties ->

instanceProperties.each

{ it -> def wildValue = it.wildvalue // Do something with wild value }

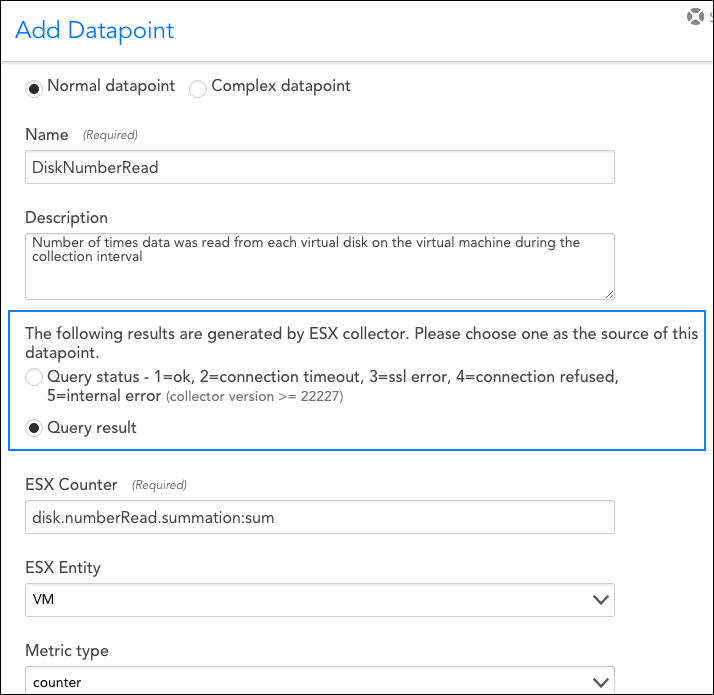

}The ESX collector allows you query data via the VMWare API. When adding or editing a datapoint using the ESX collector, you will see a dialog window similar to the one shown next.

As highlighted in the above screenshot and discussed next, the ESX collector generates two types of results. You’ll need to choose one of these as the source for your datapoint.

Query Status

When selected, the Query status option returns vCenter status. This allows the DataSource to identify the source of an inaccessible VM, whether the issue stems from the VM itself or vCenter.

The following response statuses may be returned:

- 1=ok

- 2=connection timeout (or failed login due to an incorrect user name or password in Collector versions 26.300 or earlier)

- 3=SSL error

- 4=connection refused

- 5=internal error

- 6=failed login due to an incorrect user name or password (Collector versions 26.400 or later)

Note: You must have Collector version 22.227 or later to use the Query status option.

Query Result

When selected, the Query result option returns the raw response of the ESX counter you designate in the following ESX Counter field. The counter to be queried must be one of the valid ESX counters supported by the API. (See vSphere documentation for more information on supported ESX counters.)

As shown in the previous screenshot, a LogicMonitor-specific aggregation method can be appended to the counter API object name in the Counter field. Two aggregation methods are supported:

- :sum: Normally, the ESX API returns the average of summation counters for multiple objects when queried against a virtual machine. When ":sum" is appended, LogicMonitor collects the sum instead. For example, using the counter shown in the above screenshot, a virtual machine with two disks reporting 20 and 40 reads per second respectively would return 60 reads per second for the virtual machine, rather than 30 reads per second.

- :average: Because the summation of counters is not always the average of counters, LogicMonitor also supports ":average" as an aggregation method. For example, the cpu.usagemhz.average counter would normally return the sum of the averages for multiple objects for the collection interval. When ":average" is appended, LogicMonitor divides this sum by the number of instances to return an average.
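The arithmetic behind the two aggregation examples above, as a tiny Java sketch; it only models the described aggregations over per-object values and does not call the vSphere API. EsxAggregation is a hypothetical name.

```java
class EsxAggregation {
    // ":sum" sketch: add the per-object values instead of averaging them.
    static double sum(double... perObject) {
        double total = 0;
        for (double v : perObject) {
            total += v;
        }
        return total;
    }

    // ":average" sketch: divide the summed value by the number of objects.
    static double average(double... perObject) {
        return sum(perObject) / perObject.length;
    }
}
```

With the two disks from the :sum example, sum(20, 40) gives 60 reads per second, while average(20, 40) gives the 30 reads per second the API would otherwise report.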

For more general information on datapoints, see Datapoint Overview.

Note: There is a known bug in VMware's vSphere 4. After the free space of a datastore changes, it may take up to 5 minutes for the change to be reflected in your display. Read more about this bug and potential workarounds here.

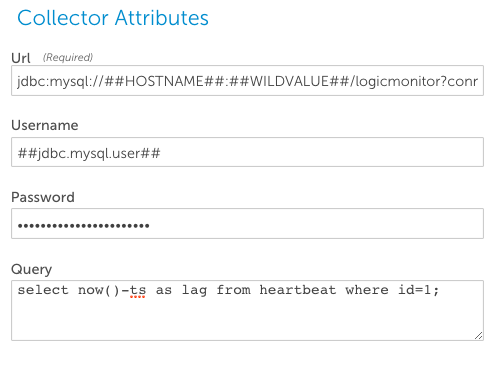

The JDBC collector allows you to create datasources that query databases, collect and store data from a SQL query, alert on them, and graph them.

If you select a Collector type of jdbc (in the datasource General Information section), the form displays a JDBC-specific attributes section:

URL

URL is the JDBC URL used to connect to the database. As with other fields, token substitutions can be used to make the URL generic. Typically, the ##HOSTNAME## token substitutes the host that the datasource is associated with; ##WILDVALUE## is used in Active Discovery datasources and is replaced with the port or ports the database was discovered running on; and ##DBNAME## can define the database to connect to, which may vary from host to host or group to group. Any property can be used anywhere in the string, and a literal string can be used too.

Examples of URLs:

- jdbc:oracle:thin:@//##HOSTNAME##:1521/##DBNAME##

- jdbc:postgresql://##HOSTNAME##:##WILDVALUE##/##DBNAME##

- jdbc:sqlserver://##HOSTNAME##:##DBPORT##;databaseName=##DBNAME##;integratedSecurity=true

- jdbc:mysql://##HOSTNAME##:3306/##DBNAME##?connectTimeout=30000&socketTimeout=30000

- jdbc:sybase:Tds:##HOSTNAME##:##DBPORT##/##DBNAME##

Note that Sybase URLs do not require slashes before the hostname.
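Token substitution in the URLs above can be pictured as replacing each ##NAME## with a property value. This sketch assumes a simple map from token name to value and leaves unknown tokens unchanged; how LogicMonitor actually resolves tokens such as ##HOSTNAME## from device properties is not modeled, and TokenSubstitution is a hypothetical name.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class TokenSubstitution {
    // Matches ##NAME## tokens; NAME may contain dots, as in ##jdbc.mysql.user##.
    private static final Pattern TOKEN = Pattern.compile("##([^#]+)##");

    // Replaces each token with its property value; tokens without a value are left unchanged.
    static String substitute(String template, Map<String, String> props) {
        Matcher m = TOKEN.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String value = props.get(m.group(1));
            m.appendReplacement(out, Matcher.quoteReplacement(value != null ? value : m.group()));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```

For example, with hypothetical values HOSTNAME=db01.example.com and DBNAME=sales, jdbc:mysql://##HOSTNAME##:3306/##DBNAME## becomes jdbc:mysql://db01.example.com:3306/sales.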

Username & Password

Username and password are the credentials used to connect to the database. They can be entered as literals, specific to this datasource, but are typically filled with the same tokens that are used by the rest of the LogicMonitor system – ##jdbc.DBTYPE.user## and ##jdbc.DBTYPE.pass##, where DBTYPE is mysql, oracle, postgres or mssql. This allows credentials to be specified individually for each host or group, if desired, simply by setting the property at the appropriate level.

Notes:

- Microsoft SQL Server offers 2 different authentication modes, Windows and SQL Server authentication. Windows authentication uses the Active Directory user account, while SQL authentication uses an account defined within SQL Server management system. If you want to use Windows authentication, do not define a username or password in the datasource. The collector’s Run as credentials are used to access the database. You cannot override this at the host level. If you use SQL Server authentication, the username and password defined in the datasource must have the rights to execute the query you want to run. For more information about authentication modes, see Choose an Authentication Mode on the MSDN site.

- If you are using a MySQL database, ensure the password does not contain the backslash (\) character.

Query

Query is the SQL statement to be run against the database. We support most valid SQL statements. However, due to the Collector’s use of the executeQuery method, SQL statements which do not return a result set are not supported for JDBC data collection. Such statements include those using ‘INSERT’, ‘DELETE’, or ‘UPDATE’, as well as ‘ALTER’, ‘CREATE’, ‘DROP’, ‘RENAME’, or ‘TRUNCATE’.

Queries ending in semicolons (as shown in the above image) are common, and sometimes required, when executed in Oracle via SQL Developer or the CLI. However, some JDBC drivers reject queries that end with a semicolon, resulting in an error. If your query returns an error when using a JDBC driver, verify whether the driver accepts a trailing semicolon.
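A hedged JDBC sketch of both points: the query goes through Statement.executeQuery, which only works for statements that return a result set, and trailing semicolons are stripped first as a workaround for drivers that reject them. The method returns the named column of the first row, mirroring the simplest post-processor described below. JdbcProbe and its methods are hypothetical, and the URL and credentials would come from the fields described above.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

class JdbcProbe {
    // Removes trailing semicolons (and surrounding whitespace) that some JDBC drivers reject.
    static String stripTrailingSemicolons(String sql) {
        String s = sql.trim();
        while (s.endsWith(";")) {
            s = s.substring(0, s.length() - 1).trim();
        }
        return s;
    }

    // Runs a query with executeQuery, which must produce a result set (so no INSERT, UPDATE, or DDL),
    // and returns the named column of the first row, or null when the result set is empty.
    static Object firstRowColumn(String url, String user, String pass, String sql, String column)
            throws SQLException {
        try (Connection conn = DriverManager.getConnection(url, user, pass);
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery(stripTrailingSemicolons(sql))) {
            return rs.next() ? rs.getObject(column) : null;
        }
    }
}
```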

Defining JDBC Datapoints

Like all datasources, you must define at least one datapoint for JDBC datasources. See Datapoint Overview for more information on configuring datapoints.

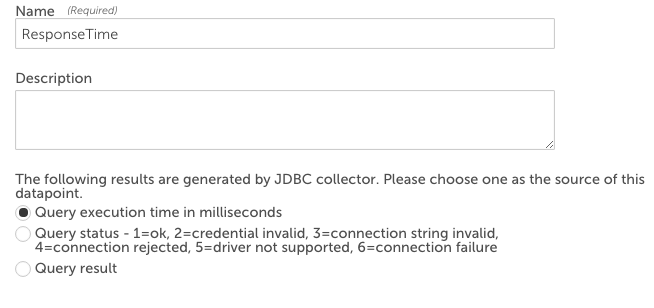

Measuring the Query Response Time

To measure how long a query took to run, add a datapoint and set the source to ‘Query execution time in milliseconds’:

Interpreting the Query Result

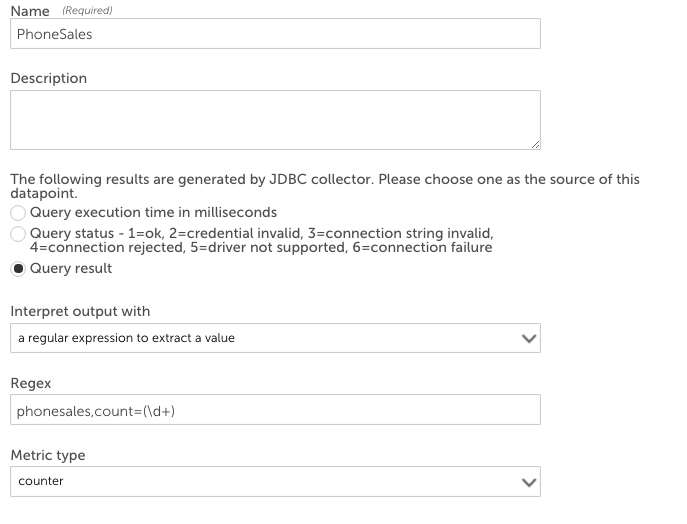

To interpret the query result, set the Use Value Of field to “Output”. There are three post processor methods that can be used to interpret SQL results:

- use the value of a specific column of the first row in the result: this post processor is the simplest to use. It expects a column name as the post-processor parameter, and returns the value of the first row for that column.

- a regular expression to extract a value: if you have multiple rows of output in your returned sql, you will probably need the regex postprocessor. It expects a regular expression as its post-processor parameter, with the expression returning a backreference indicating the numeric data to store.

E.g. a query of the form:

select application from applicationevents, count(*) as count where eventdate > NOW() – INTERVAL 1 hour GROUP BY application could return results such as:

When the logicmonitor collector is processing these results, it will prepend the column name to each value, for ease of regular expression construction. So the above result would be processed as:

The values reported for phonesales could be collected by defining a regular expression in the post-processor parameter of “phonesales,count=(\d+)”.

e.g.

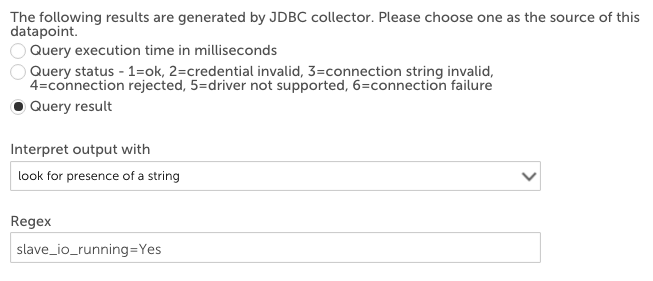

3. look for presence of a string: the text match post processor looks for the presence of the text specified in the post-processor parameter in the SQL result, and returns 1 if the text is present, and 0 if it is not:

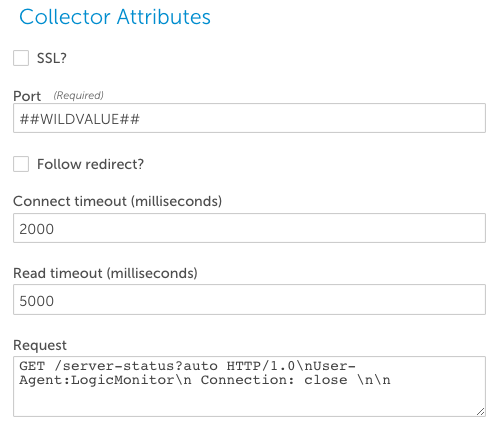
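A sketch of the regex and text match post-processors applied to rows rendered with their column names. The rendering layout (one line per row, such as application=phonesales,count=42) is an assumption chosen so that the expression phonesales,count=(\d+) from above matches; the row values are hypothetical, and SqlPostProcessors is not LogicMonitor code.

```java
import java.util.List;
import java.util.Map;
import java.util.StringJoiner;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class SqlPostProcessors {
    // Renders each row as "column=value,column=value" on its own line (assumed layout).
    static String render(List<Map<String, Object>> rows) {
        StringJoiner lines = new StringJoiner("\n");
        for (Map<String, Object> row : rows) {
            StringJoiner cells = new StringJoiner(",");
            row.forEach((column, value) -> cells.add(column + "=" + value));
            lines.add(cells.toString());
        }
        return lines.toString();
    }

    // Regex post-processor: returns capture group 1 of the first match, or null if nothing matches.
    static String regex(String text, String expression) {
        Matcher m = Pattern.compile(expression).matcher(text);
        return m.find() ? m.group(1) : null;
    }

    // Text match post-processor: 1 if the text is present, 0 otherwise.
    static int textMatch(String text, String needle) {
        return text.contains(needle) ? 1 : 0;
    }
}
```

With a hypothetical phonesales row whose count is 42, the expression phonesales,count=(\d+) captures 42, and a text match on any application name present in the rendered rows returns 1.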

The webpage collector can be used to query data from any system via HTTP or HTTPS.

Note: The webpage collector supports circular redirects, up to a maximum of 3 redirects.

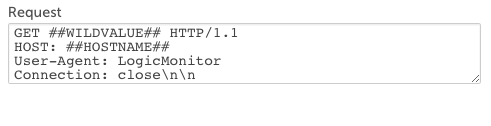

Token substitutions can be used to make the collector generic. Typically, ##WILDVALUE## is used in Active Discovery datasources and is replaced with the port or ports on which the webpage was discovered; ##WILDVALUE## can also be used in the Request section so that the same datasource collects different pages. Any property can be used anywhere in the string, and a literal string can be used too.

Parameters

- SSL: whether to use SSL for the request.

- port: the TCP port to use for the HTTP or HTTPS traffic.

- connectTimeout: time the collector will wait for a connection to be established

- readTimeout: time the collector will wait for data to be returned in response to a request

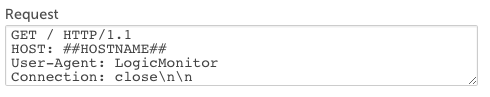

- request: the content to send in the HTTP or HTTPS request. This must be a valid HTTP 1.0 or HTTP 1.1 request.

Use ‘\n’ to send a newline.

Do not include a Content-Length header; it is set automatically.

If sending an HTTP 1.1 request, you must include the Host header. You can use a token for this header, such as ##HOSTNAME##, to substitute the system the datasource is currently collecting against. For example:

If you have a multi-instance datasource with Active Discovery enabled, you can use ##WILDVALUE## in the Request instead of as the Port. For example:

Authentication

The webpage collector supports basic, MD5, and NTLM authentication to access protected web pages. Authentication is configured by defining properties on the host with the website: http.user and http.pass supply the username and password, and, for NTLM authentication, ntlm.domain supplies the NTLM domain. No other configuration is needed. The Collector first requests the page without authentication; if the server responds that authentication is required, the Collector uses the appropriate authentication method with the relevant properties.

Note: NTLM authentication requires HTTP 1.1 requests and no Connection: close header.

It also requires that the collector be running with sufficient privileges – running as Local System is not sufficient.

Datapoints

- Use Value of Status: If Status is selected in the Use Value Of field for a datapoint, select a post-processor of 'None'. The datapoint then contains information about the HTTP response:

- 1 = HTTP returned code 200/OK.

- 2 = HTTP returned code in 300 range

- 3 = HTTP returned code in 400 range

- 4 = HTTP returned code in 500 range

- 5 = Network connection refused

- 6 = invalid SSL certificate

- Use Value of responseTime: a post processor of ‘None’ should be selected. The datapoint will contain the time, in milliseconds, for the http response.

- Use Value of Output: this selection will interpret the output of the web page response. A post processor method appropriate to the page being interpreted should be selected. The most common post processors are:

- NameValue: the Post-processor param column contains a string to look for on the web page. The datapoint is assigned the value found after an equals sign following that string on the web page.

- Regex: returns the value captured by the backreference in the regular expression given as the post-processor parameter.

- textmatch: the text match post-processor looks for the presence of the text specified in the post-processor parameter in the returned web page, and returns 1 if the text is present and 0 if it is not.
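A sketch of the Status mapping and the NameValue post-processor. It assumes NameValue takes the first number after "<name>=" (spaces around the equals sign allowed); codes 5 and 6 come from connection and certificate failures rather than from an HTTP status, so they are not modeled, and returning 0 for any other code is a hypothetical choice. WebpageDatapoints is a hypothetical name.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class WebpageDatapoints {
    // Status values 1-4 from the HTTP status code; 0 is a hypothetical "other" value.
    static int statusValue(int httpCode) {
        if (httpCode == 200) return 1;
        if (httpCode >= 300 && httpCode < 400) return 2;
        if (httpCode >= 400 && httpCode < 500) return 3;
        if (httpCode >= 500 && httpCode < 600) return 4;
        return 0;
    }

    // NameValue sketch: the first number after "<name>=" (spaces around '=' allowed), or null.
    static String nameValue(String page, String name) {
        Matcher m = Pattern.compile(Pattern.quote(name) + "\\s*=\\s*([-+]?\\d+(?:\\.\\d+)?)").matcher(page);
        return m.find() ? m.group(1) : null;
    }
}
```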

The JMX collector is used to collect data from Java applications using exposed MBeans. For issues with configuring JMX collection, see this page.

An MBean is identified by the combination of a domain and a list of properties. For example, the domain java.lang and the property type=Memory identify the MBean that reports on the memory usage of a JVM.

Each MBean exposes one or more attributes that can be queried and collected by name.

An MBean attribute could be:

- A primitive Java data type such as int, long, double, or String

- An array of data ('data' itself could be a primitive data type, an array of data, a hash of data, and so on)

- A hash of data

LogicMonitor can handle all of the above data types, as long as each attribute is fully specified so that it resolves to a primitive data type. However, LogicMonitor does not currently support JMX attribute names that contain a dot (.) when the dot is not part of a path into a nested value such as an array, for example an attribute name of rep.Container.

When specifying which JMX objects to collect, you must specify:

- Name: used to identify the object

- MBean ObjectName: used to specify the JMX domain and path of the MBean

- MBean Attribute: used to specify an attribute to collect

For example, a simple case:

If the attribute to be collected is part of a complex object, it can be qualified by use of period separator:

And if necessary, multiple levels of selectors can be specified. For example, to collect the value of the attribute “key1” for the map with index “move” for the MBean with type rrdfs in the LogicMonitor domain:

CompositeData and Map Support

The MBean attribute expression supports CompositeData and Map. For example, the MBean java.lang:type=GarbageCollector,name=Copy has an attribute LastGcInfo whose value is a CompositeData (see the figure below).

To access the value of GcThreadCount, the attribute expression could be "LastGcInfo.GcThreadCount". The same rule applies if the value is a Map.

TabularData Support

The MBean expression supports TabularData. For example, the MBean java.lang:type=GarbageCollector,name=Copy has an attribute LastGcInfo. One of its child values, memoryUsageAfterGc, is a TabularData. The figure below describes its schema and data.

The table has two columns, 'key' and 'value'. The 'key' column indexes the table, so you can uniquely locate a row by specifying an index value; for instance, key="Code Cache" returns the first row. To retrieve the value of the 'value' column in the second row, the expression could be "LastGcInfo.memoryUsageAfterGc.Eden Space.value", where "Eden Space" is the value of the 'key' column that uniquely locates the row, and "value" is the name of the column whose value you want to access.

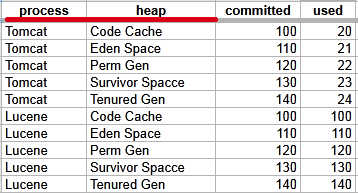

Sometimes, a TabularData may have multiple index columns. Here is an example (note that this example is fictitious):

This TabularData has 4 columns. The column “process” and “heap” are index columns. The combination of their value is used to uniquely locate a row. For example, process=Tomcat,heap=Code Cache will return the first row.

To retrieve the value of the 'committed' column in the third row, the expression could be "LastGcInfo.memoryUsageAfterGc.Tomcat,Perm Gen.committed", where "Tomcat,Perm Gen" are the values of the index columns (separated by a comma), and 'committed' is the name of the column whose value you want to access.

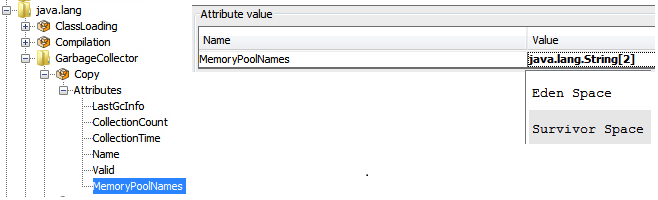

Array or List Support

The MBean expression supports arrays and Lists. For example, the MBean java.lang:type=GarbageCollector,name=Copy has an attribute MemoryPoolNames whose value is a string array (String[], see figure below).

To access the first element of MemoryPoolNames, the expression could be "MemoryPoolNames.0", where "0" is the index into the array (0-based). The same rule applies to elements in a List.

The JMX collector gets username and password information from the device properties jmx.user and jmx.pass.

Adding JMX Attributes to Collector

The Collector does not support first-level JMX attributes that contain dots, for example jira-software.max.user.count. The Collector treats such attributes as composite attributes and splits them at the dots. As a result, you may get a NullPointerException in the debug window and NaN in Poll Now for the DataSource.

To instruct the Collector not to split the attribute, add a backslash (\) before the dot (.). For example, jira-software\.max\.user\.count. The Collector then does not split at dots preceded by the escape character; any other dots in the string are still treated as separators for composite attributes. This also applies to accessing JMX attributes from a debug window.

Additional examples:

| Attribute Format | Attribute Interpreted in Collector Code |
|---|---|
| `jira-software\.max\.user\.count` | jira-software.max.user.count |
| `jira-software\.max.user\.count` | jira-software.max, user.count |
| `jira-software.max.user.count` | jira-software, max, user, count |
| `jira-software.max.user\.count` | jira-software, max, user.count |
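The splitting rule in the table can be sketched as follows. This is an illustration of the documented behavior, not the Collector's source; `split_attribute` is a hypothetical name, and the sketch assumes a backslash acts as an escape only when it directly precedes a dot.

```python
def split_attribute(expression: str) -> list[str]:
    """Split a JMX attribute expression on dots, keeping backslash-escaped dots."""
    parts: list[str] = []
    current: list[str] = []
    i = 0
    while i < len(expression):
        ch = expression[i]
        if ch == "\\" and expression[i + 1:i + 2] == ".":
            current.append(".")  # escaped dot: part of the attribute name
            i += 2
            continue
        if ch == ".":
            parts.append("".join(current))  # unescaped dot: composite separator
            current = []
        else:
            current.append(ch)
        i += 1
    parts.append("".join(current))
    return parts


for expr in [r"jira-software\.max\.user\.count",
             r"jira-software\.max.user\.count",
             "jira-software.max.user.count",
             r"jira-software.max.user\.count"]:
    print(expr, "->", split_attribute(expr))
```

Each printed list corresponds to one row of the table above.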

The SNMP method tries SNMP v3 first, then v2c, then v1. See Defining SNMP Credentials and Properties for more details.

Datasources based on the SNMP data collection method primarily use snmpget to access hosts/devices. Where a device has a multi-instance structure, Active Discovery uses snmpwalk (SNMP GETNEXT requests) to identify the wildcard values for the instances.

Note: Some legacy hardware devices may respond to snmpwalk but not to snmpget, or vice versa, which makes this default approach problematic for multi-instance configurations.

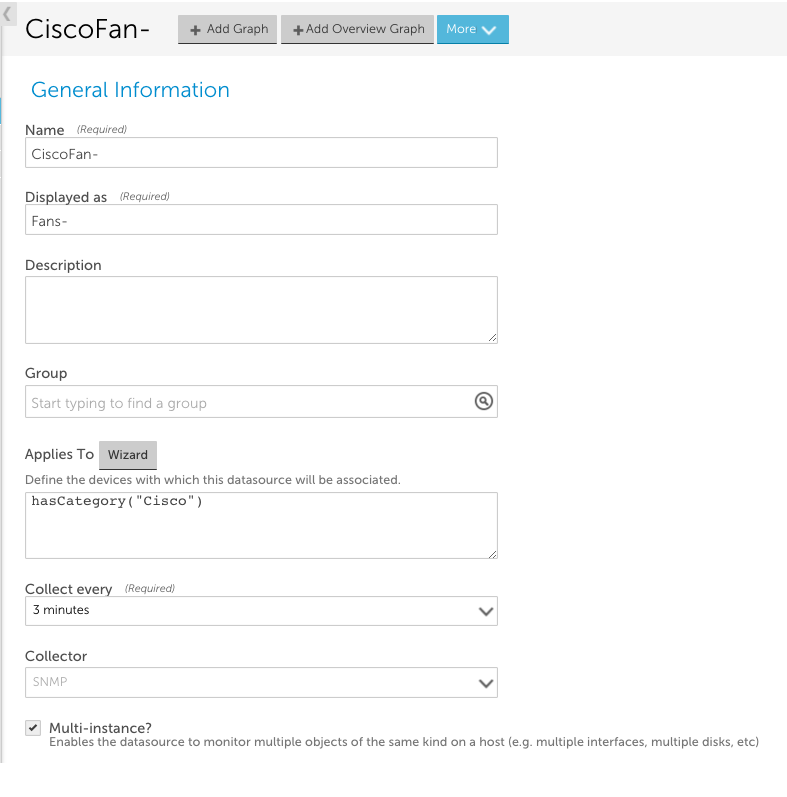

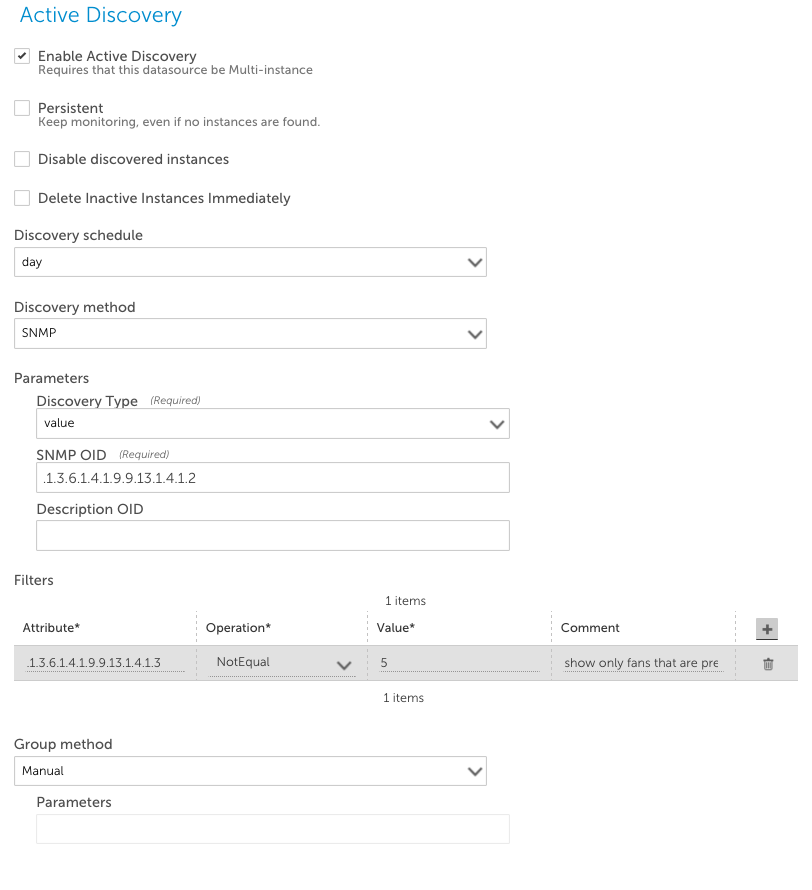

In the screenshot above, the Collector method is set to use SNMP.

Next, the Active Discovery section of the datasource uses SNMP for the AD method:

The Parameters configured above will walk the OIDs and return values identifying each fan instance as follows.

Names of Instances

Discovery Type is set to ‘value’, which defines how instances will be named.

- Value means the value returned from each OID walked

- Wildcard means the index of the OID walked

- Lookup means the value returned from the Lookup OID (referencing another OID which contains the value of interest)

SNMP OID is set to .1.3.6.1.4.1.9.9.13.1.4.1.2, defining the OID for the root of the snmpwalk.
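The Value and Wildcard naming options can be sketched against the result of walking this root OID. This is a simplified model, not LogicMonitor's Active Discovery code: `discover` is a hypothetical helper, the walk result is represented as an OID-to-value dictionary, the fan names are made up, and the Lookup option is omitted.

```python
ROOT_OID = ".1.3.6.1.4.1.9.9.13.1.4.1.2"


def discover(walk: dict[str, str], discovery_type: str = "value") -> list[tuple[str, str]]:
    """Return (wildvalue, instance name) pairs from an snmpwalk of ROOT_OID."""
    prefix = ROOT_OID + "."  # trailing dot avoids matching siblings such as ...1.20
    instances = []
    for oid, value in walk.items():
        if not oid.startswith(prefix):
            continue  # outside the walked subtree
        wildvalue = oid[len(prefix):]  # the index of the walked OID
        name = value if discovery_type == "value" else wildvalue
        instances.append((wildvalue, name))
    return instances


# Hypothetical walk response values.
walk = {
    ROOT_OID + ".1": "Fan 1",
    ROOT_OID + ".2": "Fan 2",
}
print(discover(walk))              # [('1', 'Fan 1'), ('2', 'Fan 2')]
print(discover(walk, "wildcard"))  # [('1', '1'), ('2', '2')]
```

In both cases the wildvalue (the OID index) is what later replaces the ##WILDVALUE## token in datapoint OIDs; only the displayed instance name changes.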

Suppose we perform an snmpwalk of this OID on a device and receive the following response:

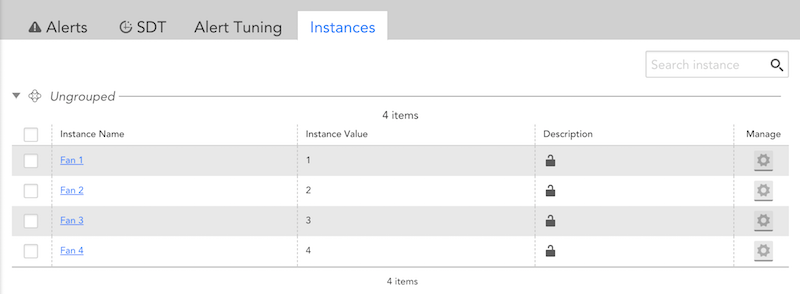

The Active Discovery process will identify four instances with the following names and values:

Datapoints

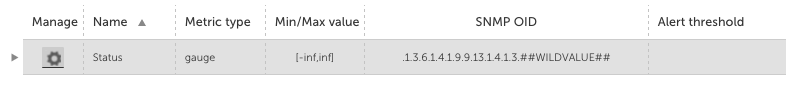

The instance values resulting from Active Discovery are substituted in the datapoint OIDs using the ##WILDVALUE## token as shown below.

In our example, the DataSource retrieves datapoint values for each instance from the following SNMP OIDs:

- .1.3.6.1.4.1.9.9.13.1.4.1.3.1

- .1.3.6.1.4.1.9.9.13.1.4.1.3.2

- .1.3.6.1.4.1.9.9.13.1.4.1.3.3

- .1.3.6.1.4.1.9.9.13.1.4.1.3.4
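The substitution step is plain token replacement. In this sketch the datapoint OID template is inferred from the four OIDs listed above (it is not copied from the DataSource definition), and `datapoint_oids` is a hypothetical helper.

```python
# Inferred from the OIDs listed above: the datapoint OID with the ##WILDVALUE## token.
DATAPOINT_OID = ".1.3.6.1.4.1.9.9.13.1.4.1.3.##WILDVALUE##"


def datapoint_oids(template: str, wildvalues: list[str]) -> list[str]:
    """Substitute each discovered instance's wildvalue into the datapoint OID."""
    return [template.replace("##WILDVALUE##", w) for w in wildvalues]


for oid in datapoint_oids(DATAPOINT_OID, ["1", "2", "3", "4"]):
    print(oid)
```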

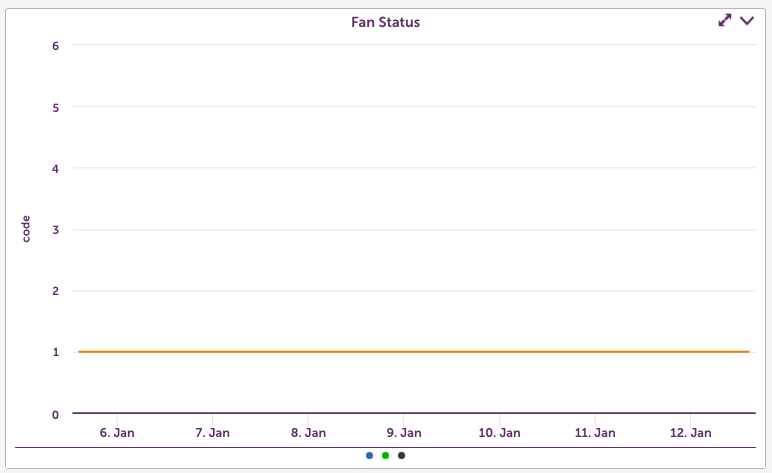

Each instance will have a ‘Status’ value, which is used for the ‘code’ on the Fan Status graph as shown below:

Overview

LogicMonitor allows NetApp data collection via three methods:

- Performance Data

- Request XML

- API Call

Note: NetApp storage arrays will also respond to SNMP, and some datasources employ SNMP to collect NetApp data. This article talks only about using the NetApp API to collect data.

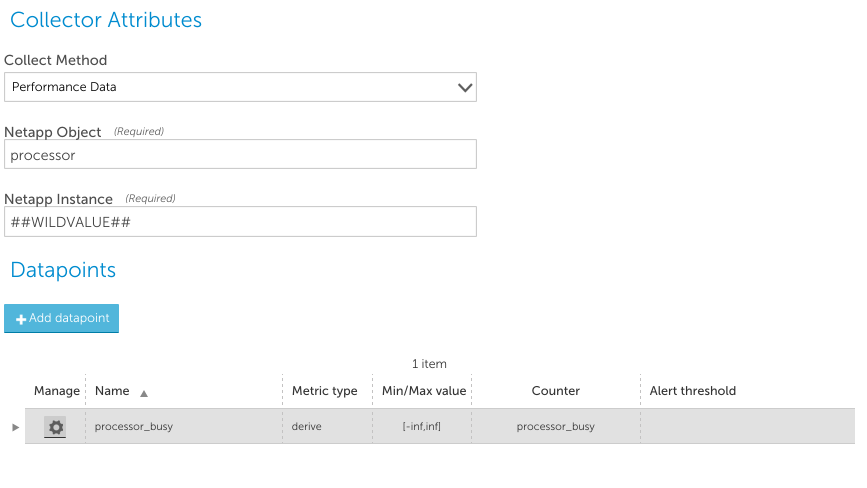

Performance Data

Performance Data Collection makes a perf-object-get-instances request for the specified object type (processor in the image below), and uses the supplied instance identifier (##WILDVALUE## in the image below – which would be replaced at runtime with an instance found in Active Discovery) to obtain a result set of all the performance counters for that object.

Note that this method can identify instances only by the instance field, not by UUID, so it is suitable only where a unique instance name is available.

For example, the data collection configured in the image below would work correctly on 7-mode NetApps, as each instance of the processor class (processor1, processor2, etc.) is unique per device. On cluster mode, however, there may be multiple “processor1” objects on different nodes in the cluster, differentiated by UUID. The Performance Data collection method cannot distinguish between them, because it can request objects only by instance name, not by UUID.

The datapoint Counter field should be the name of a counter returned by the NetApp API for the kind of object requested. The Performance Data collection method automatically uses aggregation: it designates one instance as the master, which makes a single call to collect data about all instances. Collection for the other instances then reads from the aggregation cache.
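Conceptually, aggregation means one API call serves every instance's datapoints. The sketch below models only that idea; `AggregationCache`, the fake API function, and the counter values are hypothetical, and cache expiry between collection cycles is not modeled.

```python
from typing import Callable, Dict, Optional

Counters = Dict[str, float]


class AggregationCache:
    """Conceptual model: one bulk call serves every instance's datapoints."""

    def __init__(self, fetch_all: Callable[[], Dict[str, Counters]]) -> None:
        self._fetch_all = fetch_all
        self._cache: Optional[Dict[str, Counters]] = None
        self.api_calls = 0

    def get(self, instance: str, counter: str) -> float:
        if self._cache is None:
            # The first request (the "master") makes the single call for all instances.
            self._cache = self._fetch_all()
            self.api_calls += 1
        # Every request, including later ones for other instances, reads the cache.
        return self._cache[instance][counter]


def fake_perf_object_get_instances() -> Dict[str, Counters]:
    """Hypothetical stand-in for one perf-object-get-instances call."""
    return {"processor1": {"processor_busy": 12.0},
            "processor2": {"processor_busy": 7.5}}


cache = AggregationCache(fake_perf_object_get_instances)
print(cache.get("processor1", "processor_busy"))  # 12.0
print(cache.get("processor2", "processor_busy"))  # 7.5
print(cache.api_calls)                            # 1
```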

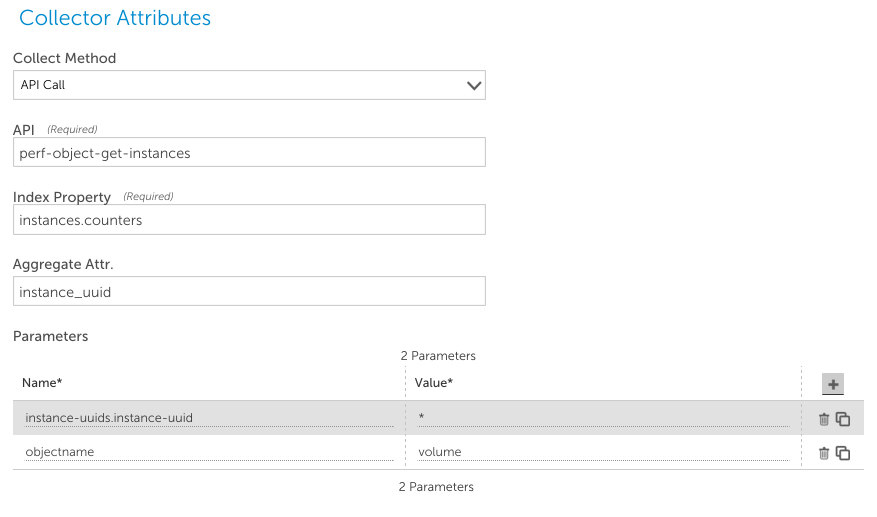

API Call

This method allows you to specify any NetApp API call and interprets the returned data either as name and value attributes or as elements with a value; these are the datapoints that can be collected. For example, to collect volume performance information on a NetApp cluster, you could use this configuration:

- API: the name of the API call to use. In this case it is perf-object-get-instances, the cluster-mode API call that retrieves performance information.

- Index Property: this identifies where in the returned XML the actual results are. In the above example, the returned XML has an instances element. The collector walks this object's sub-elements and looks for a counters sub-element, which is where it retrieves the data from (whether that level contains name and value elements or counter elements).

- Aggregate Attr: this identifies how to locate a specific instance within a returned XML result. It specifies the name/value pair that identifies the instance, so all name/value pairs in the same context relate to the same instance. Because the data in the above response includes name/value pairs such as instance_uuid (7c2ff26c-bd32-4993-bcff-581727bbd522) and internal_msgs (459431), specifying instance_uuid tells the collector that the value of that element contains the instance wild value, as discovered by Active Discovery. This field is necessary when using collection aggregation, in which the collector collects all instances of a class at once, caches them locally, and then answers individual collection requests from the cache.

- Parameters: these are the parameters required by the specified API call. For instance, the performance API call requires an objectname parameter to identify the kind of object that performance information is being requested about. It also requires an identifier of the instance or instances to query. For this call, the identifier is a nested object (here, 7c2ff26c-bd32-4993-bcff-581727bbd522). To add a nested parameter, separate the levels with a period, as shown above. To use aggregating collection, you can use “*”, which the collector replaces with all existing instance IDs.
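The period-separated parameter names and the “*” placeholder can be sketched as building a nested XML body. This is a hypothetical model, not the collector's implementation: `build_request` is an invented helper, and the parameter name instance-uuids.instance-uuid is an assumed example (it mirrors the request structure shown later for the Request XML method), not a value taken from the screenshot.

```python
import xml.etree.ElementTree as ET


def build_request(api: str, params: dict[str, str], instance_ids: list[str]) -> str:
    """Build an API request body; dotted names nest, "*" expands to all instances."""
    root = ET.Element(api)
    for name, value in params.items():
        *levels, leaf = name.split(".")
        node = root
        for tag in levels:  # create or reuse each enclosing element
            child = node.find(tag)
            node = child if child is not None else ET.SubElement(node, tag)
        for v in (instance_ids if value == "*" else [value]):
            ET.SubElement(node, leaf).text = v
    return ET.tostring(root, encoding="unicode")


print(build_request(
    "perf-object-get-instances",
    {"objectname": "volume", "instance-uuids.instance-uuid": "*"},  # hypothetical names
    ["uuid-1", "uuid-2"],
))
```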

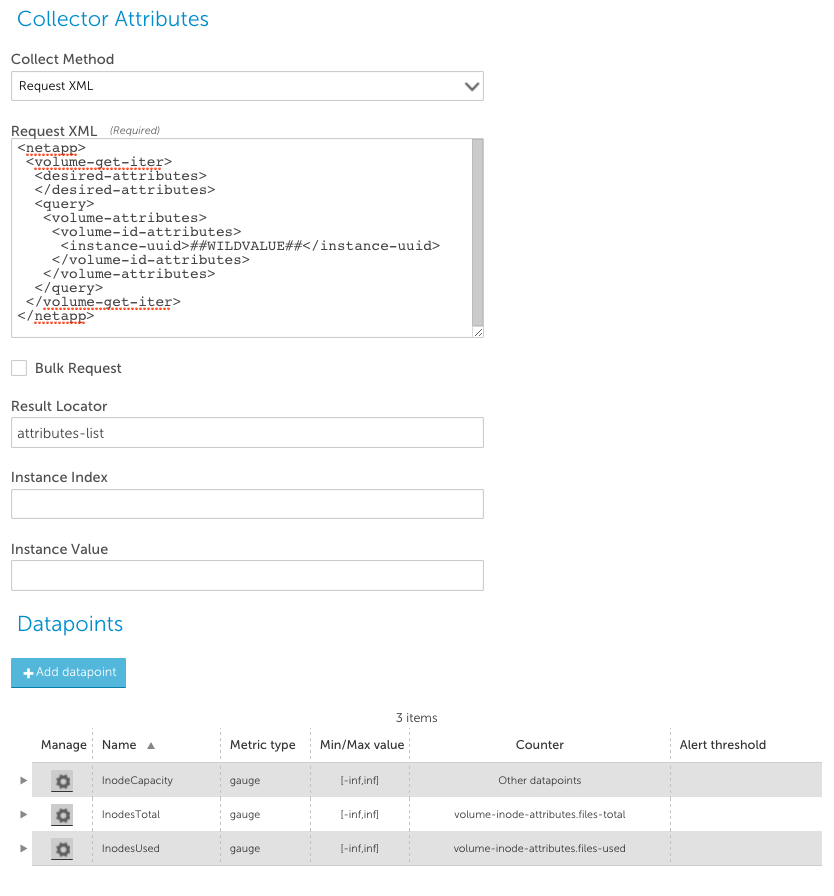

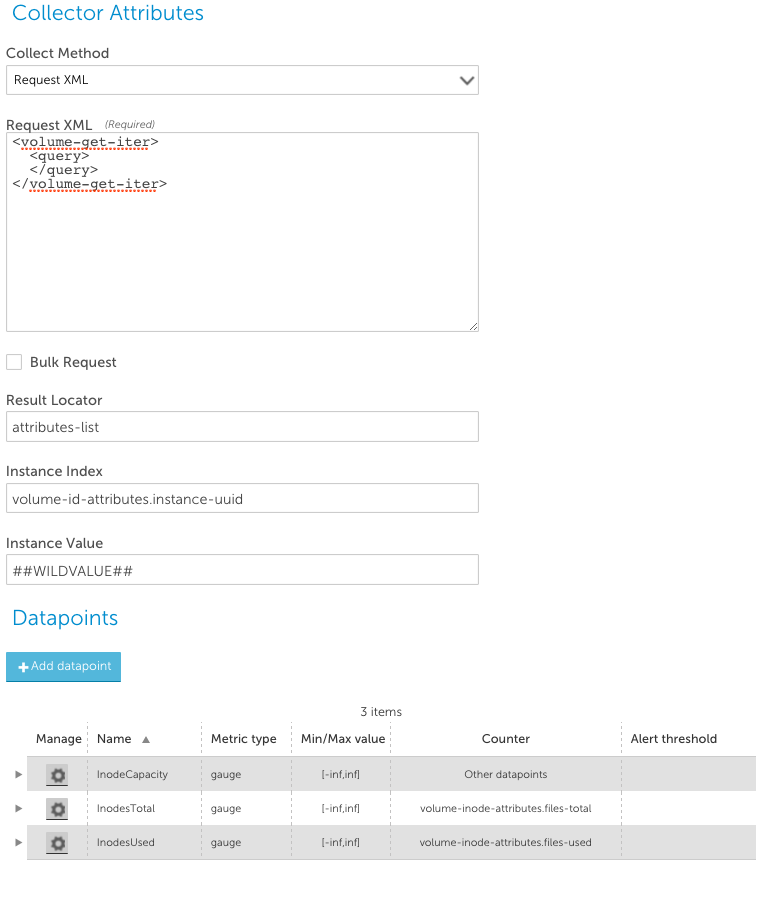

Request XML

The Request XML collection method allows you to send any NetApp API call and interpret the results. It supports aggregated data collection (querying for multiple objects at once) as well as non-aggregated collection (issuing an API call for each object individually). The simplest case is non-aggregated collection, where each collection task collects the data for a single instance. This requires the ##WILDVALUE## token in the XML body to specify which object to collect. For example, when the instances of a datasource are the volume UUIDs, you could collect data about those volumes with the following datasource:

The Result Locator is used to locate the results object in the NetApp XML response. The format is “resultLocator[::attributeLocator]”. The resultLocator field usually points to an array of sub objects, and the counter values for the datapoints are then relative to this array. In non-aggregated collection, the first object in this array is assumed to contain the data. For example, the query from the above datasource may return results like the following:

```xml
<attributes-list>
  <volume-attributes>
    <volume-id-attributes>
      <instance-uuid>53b10a8d-255c-4be9-9078-78b5d9d98e68</instance-uuid>
      <name>server2_root</name>
      <uuid>ccb2d972-7d75-11e3-91bb-123478563412</uuid>
    </volume-id-attributes>
    <volume-inode-attributes>
      <files-private-used>502</files-private-used>
      <files-total>566</files-total>
      <files-used>96</files-used>
    </volume-inode-attributes>
    <...snip.....>
  </volume-attributes>
</attributes-list>
```

Setting the Result Locator to “attributes-list” states that the list elements it contains (volume-attributes) hold the data being collected. Because this is not aggregated collection, the first element at this location (the “volume-attributes” container) is assumed to contain the relevant data, so the path to the actual data to be extracted (e.g. “volume-inode-attributes.files-total”) is relative to that object.

To do aggregated data collection, you need to understand how to request data about multiple objects in the XML query. There are two general classes of API calls used with the XML collection method: those that use a query attribute to select the objects to return, and those that explicitly enumerate the objects to return.
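The non-aggregated lookup can be sketched with Python's standard XML parser: find the Result Locator element, take its first child, and resolve the counter path relative to it. This is an illustration only; `collect` is a hypothetical helper, and the response is a trimmed, well-formed copy of the sample above with the snip marker removed.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the sample response above (the <...snip.....> part removed).
RESPONSE = """<attributes-list>
  <volume-attributes>
    <volume-id-attributes>
      <instance-uuid>53b10a8d-255c-4be9-9078-78b5d9d98e68</instance-uuid>
      <name>server2_root</name>
      <uuid>ccb2d972-7d75-11e3-91bb-123478563412</uuid>
    </volume-id-attributes>
    <volume-inode-attributes>
      <files-private-used>502</files-private-used>
      <files-total>566</files-total>
      <files-used>96</files-used>
    </volume-inode-attributes>
  </volume-attributes>
</attributes-list>"""


def collect(xml_text: str, result_locator: str, counter: str) -> str:
    """Non-aggregated lookup: the counter path is relative to the first result object."""
    root = ET.fromstring(xml_text)
    results = root if root.tag == result_locator else root.find(f".//{result_locator}")
    if results is None or len(results) == 0:
        raise LookupError(f"no results under {result_locator!r}")
    first = results[0]  # assumed to hold the data for the single requested instance
    node = first.find(counter.replace(".", "/"))
    if node is None:
        raise LookupError(f"counter {counter!r} not found")
    return node.text


print(collect(RESPONSE, "attributes-list", "volume-inode-attributes.files-total"))  # 566
```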

Aggregated Collection Using Query Parameters to Identify Instances

For an API call that uses query parameters to select objects, it is generally easy to construct a call that returns a set of objects (see the NetApp SDK for details on the specific call). For example, to get a result set for all volumes, you can simply specify a query with no selectors:

The aggregated results from the above Request XML may look like the following:

```xml
<attributes-list>
  <volume-attributes>
    <volume-id-attributes>
      <instance-uuid>53b10a8d-255c-4be9-9078-78b5d9d98e68</instance-uuid>
      <name>server2_root</name>
      <uuid>ccb2d972-7d75-11e3-91bb-123478563412</uuid>
    </volume-id-attributes>
    <volume-inode-attributes>
      <files-private-used>502</files-private-used>
      <files-total>566</files-total>
      <files-used>96</files-used>
    </volume-inode-attributes>
    <...snip.....>
  </volume-attributes>
  <volume-attributes>
    <volume-id-attributes>
      <instance-uuid>53b10a8d-255c-4be9-9078-1239876123311</instance-uuid>
      <name>server2_data</name>
      <uuid>ccb2d972-7d75-11e3-91bb-a5b7c9911111</uuid>
    </volume-id-attributes>
    <volume-inode-attributes>
      <files-private-used>502</files-private-used>
      <files-total>566</files-total>
      <files-used>96</files-used>
    </volume-inode-attributes>
    <...snip.....>
  </volume-attributes>
</attributes-list>
```

As noted above, the Result Locator is used to locate the results object in the NetApp XML response. The format is “resultLocator[::attributeLocator]”. The resultLocator field usually points to an array of sub-objects, and the counter values for the datapoints are relative to this array. In the above datasource, the Result Locator is attributes-list, so subsequent references and queries are relative to that element. The Instance Index is used to isolate instances from the aggregated query. It must point to a field that uniquely identifies the instance: volume-id-attributes.instance-uuid in the above datasource. All data queried for an instance is collected from the object containing the matching instance index (i.e. the volume-attributes object whose volume-id-attributes.instance-uuid matches the WILDVALUE). Instance Value is the field used to match the Instance Index; it should always be ##WILDVALUE##.

Note that the Bulk Request checkbox does not need to be set to enable Aggregated data collection if the API call supports retrieving multiple objects without enumerating all the object identifiers.
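The Instance Index and Instance Value matching can be sketched as a scan over the result objects. This is a hypothetical helper (`collect_instance`), not the collector's code; the input is a trimmed copy of the aggregated sample above, and the name field is used as the counter because the two sample volumes have identical inode counts.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Trimmed copy of the aggregated sample response above.
AGGREGATED = """<attributes-list>
  <volume-attributes>
    <volume-id-attributes>
      <instance-uuid>53b10a8d-255c-4be9-9078-78b5d9d98e68</instance-uuid>
      <name>server2_root</name>
    </volume-id-attributes>
  </volume-attributes>
  <volume-attributes>
    <volume-id-attributes>
      <instance-uuid>53b10a8d-255c-4be9-9078-1239876123311</instance-uuid>
      <name>server2_data</name>
    </volume-id-attributes>
  </volume-attributes>
</attributes-list>"""


def collect_instance(xml_text: str, result_locator: str, instance_index: str,
                     wildvalue: str, counter: str) -> Optional[str]:
    """Aggregated lookup: pick the result object whose Instance Index matches."""
    root = ET.fromstring(xml_text)
    results = root if root.tag == result_locator else root.find(f".//{result_locator}")
    if results is None:
        return None
    for obj in results:
        key = obj.find(instance_index.replace(".", "/"))
        if key is not None and key.text == wildvalue:  # Instance Value = ##WILDVALUE##
            node = obj.find(counter.replace(".", "/"))
            return None if node is None else node.text
    return None


print(collect_instance(AGGREGATED, "attributes-list",
                       "volume-id-attributes.instance-uuid",
                       "53b10a8d-255c-4be9-9078-1239876123311",
                       "volume-id-attributes.name"))  # server2_data
```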

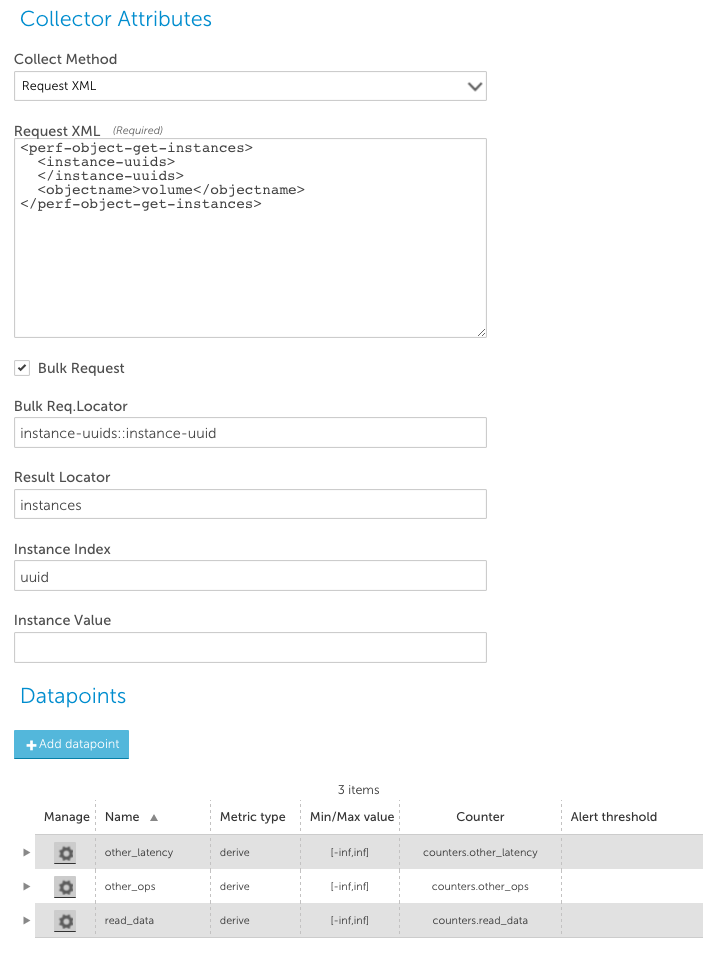

Aggregated Collection Using Enumerated Instances to Collect Data

If the API call requires the instances for which data is to be collected to be identified in the request, then in order to do aggregated collection, the LogicMonitor collector must create a query enumerating all the instances – and it must know how to construct the XML request for the specific API call. For example, to collect volume performance in an aggregated mode, you must list all the volume instance-uuids that you wish to collect data for. The following datasource accomplishes this:

- Bulk Request checkbox: This tells the LogicMonitor collector that it should construct a list of all the possible instance values, to enable aggregated collection.

- Bulk Request Locator: This specifies where in the request XML to insert the list of instances. In the above example, it is set to instance-uuids::instance-uuid, which means: “Insert the instances in instance-uuid fields within the instance-uuids object.” This results in a request like the following:

```xml
<perf-object-get-instances>
  <instance-uuids>
    <instance-uuid>WILDVALUE OF INSTANCE 1</instance-uuid>
    <instance-uuid>WILDVALUE OF INSTANCE 2</instance-uuid>
    <instance-uuid>WILDVALUE OF INSTANCE 3</instance-uuid>
    <snip..>
    <instance-uuid>WILDVALUE OF INSTANCE N</instance-uuid>
  </instance-uuids>
  <objectname>volume</objectname>
</perf-object-get-instances>
```

- Instance Index is used to isolate instances from the aggregated query. It must point to a field that uniquely identifies the instance: volume-id-attributes.instance-uuid in the above datasource. All data queried for an instance is collected from the object containing the matching instance index (i.e. the volume-attributes object whose volume-id-attributes.instance-uuid matches the WILDVALUE).

Note that the Bulk Request Locator supports multi-level objects and can construct the whole XML query: use a double colon (“::”) to separate the elements that surround the instances from the elements used to construct each instance. For example, you could set the Bulk Request Locator to:

level1.level2::sub-level1.sub-level2

The collector then looks for a level2 XML element within a level1 element. For each instance, it inserts a sub-level2 element within a sub-level1 element, located inside the level2 element. For example:

```xml
<level1>
  <level2>
    <sub-level1>
      <sub-level2>WILDVALUE of Instance1</sub-level2>
    </sub-level1>
    <sub-level1>
      <sub-level2>WILDVALUE of Instance2</sub-level2>
    </sub-level1>
  </level2>
</level1>
```
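The expansion of a Bulk Request Locator can be sketched with the standard library XML module. This is a hypothetical model (`insert_instances` is an invented helper) that assumes the locator always contains one “::”; elements before it are created once, and elements after it are created once per instance. Appended elements land after existing children, so element order may differ from the request shown earlier.

```python
import xml.etree.ElementTree as ET


def insert_instances(request: ET.Element, locator: str, wildvalues: list[str]) -> None:
    """Expand a Bulk Request Locator such as 'level1.level2::sub-level1.sub-level2'."""
    outer, inner = locator.split("::")
    node = request
    for tag in outer.split("."):  # elements that surround all instances (created once)
        child = node.find(tag)
        node = child if child is not None else ET.SubElement(node, tag)
    *wrappers, leaf = inner.split(".")
    for value in wildvalues:  # elements built once per instance
        parent = node
        for tag in wrappers:
            parent = ET.SubElement(parent, tag)
        ET.SubElement(parent, leaf).text = value


request = ET.fromstring(
    "<perf-object-get-instances><objectname>volume</objectname></perf-object-get-instances>")
insert_instances(request, "instance-uuids::instance-uuid", ["uuid-1", "uuid-2"])
print(ET.tostring(request, encoding="unicode"))

nested = ET.Element("request")  # hypothetical outer wrapper for the level example
insert_instances(nested, "level1.level2::sub-level1.sub-level2", ["Instance1", "Instance2"])
print(ET.tostring(nested, encoding="unicode"))
```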

Adding Datapoints for NetApp Data Collection

In most cases, datapoints are defined simply by specifying, by name, the object that contains the value to be collected; the value is collected within the context of the result locator, using the instance index. For example, to collect the files-private-used value of 502 from the following result:

```xml
<volume-inode-attributes>
  <files-private-used>502</files-private-used>
</volume-inode-attributes>
```

you would simply create a normal datapoint with the counter volume-inode-attributes.files-private-used. However, if the XML result returns objects where the numeric data you want to collect is not in a distinctly named field but in separate name/value pairs, this method does not work. For example, the result of this query:

```xml
<netapp xmlns="https://www.netapp.com/filer/admin" version="1.20">
  <perf-object-get-instances>
    <instance-uuids>
      <instance-uuid>##WILDVALUE##</instance-uuid>
    </instance-uuids>
    <objectname>system</objectname>
  </perf-object-get-instances>
</netapp>
```

may look like:

```xml
<instances>
  <instance-data>
    <counters>
      <counter-data>
        <name>avg_processor_busy</name>
        <value>24045244730</value>
      </counter-data>
      <counter-data>
        <name>cifs_ops</name>
        <value>0</value>
      </counter-data>
    </counters>
  </instance-data>
</instances>
```

Where the results are name and value counters that are siblings of each other, it is not possible to specify the path to the value directly in the NetApp Counter field. (LogicMonitor does not have access to the raw XML response; otherwise, XPath expressions could be used to get this data.) To get around this, special processing converts any XML sub-element that contains both “name” and “value” sub-elements into an attribute in the result object map. The above output is therefore processed to look like:

```xml
<instances>
  <instance-data>
    <counters>
      <avg_processor_busy>24045244730</avg_processor_busy>
      <cifs_ops>0</cifs_ops>
    </counters>
  </instance-data>
</instances>
```

This allows the counters to be collected as usual.
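The name/value rewrite can be approximated as a recursive tree transform. This sketch is an illustration only: `flatten_name_value` is a hypothetical helper, and it produces a new XML tree rather than LogicMonitor's internal result object map.

```python
import xml.etree.ElementTree as ET


def flatten_name_value(elem: ET.Element) -> ET.Element:
    """Return a copy where each element with <name> and <value> children
    becomes <NAME>VALUE</NAME>, mimicking the special processing described above."""
    name, value = elem.find("name"), elem.find("value")
    if name is not None and value is not None:
        flat = ET.Element(name.text)
        flat.text = value.text
        return flat
    clone = ET.Element(elem.tag, elem.attrib)
    clone.text = elem.text
    for child in elem:
        clone.append(flatten_name_value(child))
    return clone


response = ET.fromstring(
    "<instances><instance-data><counters>"
    "<counter-data><name>avg_processor_busy</name><value>24045244730</value></counter-data>"
    "<counter-data><name>cifs_ops</name><value>0</value></counter-data>"
    "</counters></instance-data></instances>")
print(ET.tostring(flatten_name_value(response), encoding="unicode"))
```

After the transform, each counter is a distinctly named element, so an ordinary path such as instance-data/counters/cifs_ops reaches it.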