Introduction

A datapoint is a piece of data that is collected during monitoring. Every DataSource definition must have at least one configured datapoint that defines what information is to be collected and stored, as well as how to collect, process, and potentially alert on that data.

Normal Datapoints vs. Complex Datapoints

LogicMonitor defines two types of datapoints: normal datapoints and complex datapoints. Normal datapoints represent data you’d like to monitor that can be extracted directly from the raw output collected.

Complex datapoints, on the other hand, represent data that needs to be processed in some way using data not available in the raw output (e.g. using scripts or expressions) before being stored.

For more information on these two types of datapoints, see Normal Datapoints and Complex Datapoints respectively.

Configuring Datapoints

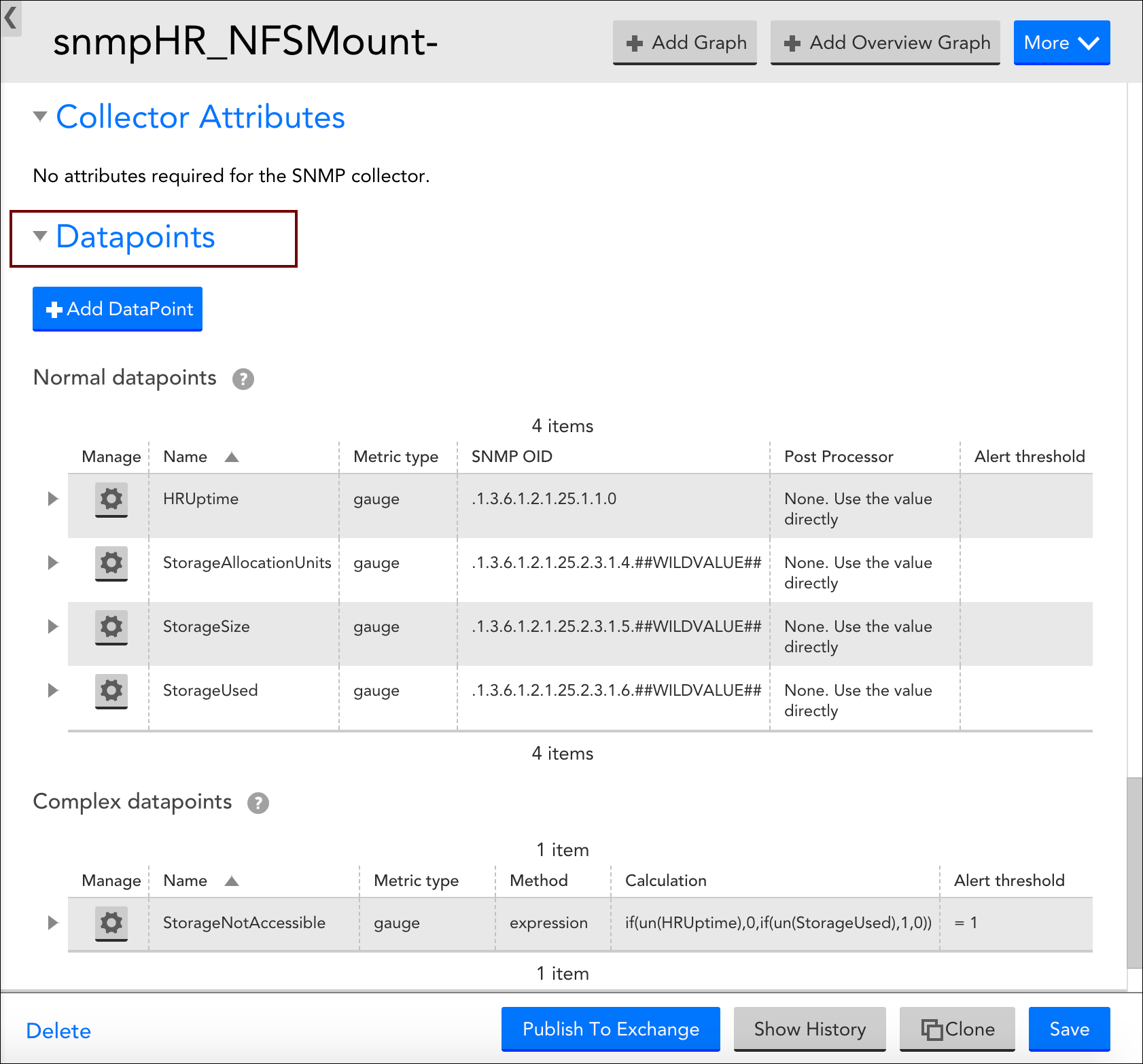

Datapoints are configured as part of the DataSource definition. LogicMonitor does much of the work for you by creating meaningful datapoints for all of its out-of-the-box DataSources. This means that, for the majority of resources you monitor via LogicMonitor, you’ll never need to configure datapoints.

However, there may be occasions where you want to customize an existing DataSource with new or edited datapoints (or are creating a custom DataSource). For these occasions, LogicMonitor does support the creation/editing of datapoints. This is done from the Datapoints area of a DataSource definition (navigate to Settings|DataSources|[DataSource Name]).

When adding a new datapoint or editing an existing datapoint, there are several settings that must be configured. The following three sections categorize these settings according to the type of datapoint being created.

Configurations Common to Normal and Complex Datapoints

Datapoint Type

If you are creating a brand new datapoint, choose whether it is a Normal datapoint or Complex datapoint. These options are only available when creating a new datapoint; existing datapoints cannot have their basic type changed. For more information on the differences between normal and complex datapoints, see the Normal Datapoints vs. Complex Datapoints section of this support article.

Name and Description

Enter the name and description of the datapoint in the Name and Description fields respectively. The name entered here is displayed in the text of any alert notifications delivered for this datapoint, so it is best practice to make the name meaningful. For example, aborted_clients is meaningful whereas datapoint1 is not, as it would not be helpful to receive an alert stating “datapoint1 is over 10 per second”.

Note: To ensure successful DataSource creation and updates for aggregate tracked queries, do not use reserved keywords as metric names.

A metric name (referred to as a datapoint name) is the identifier for a metric extracted from your log query, such as “events”, “anomalies”, or “avg_latency_ms”. Each metric from your logs becomes a datapoint within the created DataSource.

The following reserved keywords must not be used as datapoint names:

SIN, COS, LOG, EXP, FLOOR, CEIL, ROUND, POW, ABS, SQRT, RANDOM

LT, LE, GT, GE, EQ, NE, IF, MIN, MAX, LIMIT, DUP, EXC, POP

UN, UNKN, NOW, TIME, PI, E, AND, OR, XOR, INF, NEGINF, STEP

YEAR, MONTH, DATE, HOUR, MINUTE, SECOND, WEEK, SIGN, RND, SUM2

AVG2, PERCENT, RAWPERCENTILE, IN, NANTOZERO, MIN2, MAX2

Also, tracked query names should follow the same guidelines as DataSource display names. For more information, see Datasource Style Guidelines.

Note: Datapoint names cannot include any of the operators or comparison functions used in datapoint expressions, as listed in Complex Datapoints.

Valid Value Range

If defined, any data reported for this datapoint must fall within the minimum and maximum values entered for the Valid value range field. If data does not fall within the valid value range, it is rejected and “No Data” will be stored in place of the data. As discussed in the Alerting on Datapoints section of this support article, you can set alerts for this No Data condition.

The valid value range functions as a data normalization field to filter outliers and incorrect datapoint calculations. As discussed in Normal Datapoints, it is especially helpful when dealing with datapoints that have been assigned a metric type of counter.
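The filtering described above can be sketched in a few lines of Python. This is only an illustration of the idea, not LogicMonitor's implementation: the function name `apply_valid_range`, the use of NaN to stand in for “No Data”, and the assumption that the range bounds are inclusive are all choices made for this example.

```python
import math


def apply_valid_range(value, minimum=None, maximum=None):
    """Return the value if it lies within the range, otherwise NaN ("No Data").

    Assumption for this sketch: both bounds are inclusive and either may be omitted.
    """
    if value is None or math.isnan(value):
        return math.nan
    if minimum is not None and value < minimum:
        return math.nan
    if maximum is not None and value > maximum:
        return math.nan
    return value


# Hypothetical samples checked against a valid value range of 0 to 100
samples = [12.0, 250.0, -3.0, 99.9]
filtered = [apply_valid_range(v, minimum=0, maximum=100) for v in samples]
# 250.0 and -3.0 are rejected and become NaN; 12.0 and 99.9 are kept
```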

Alert Settings

Once datapoints are identified, they can be used to trigger alerts. These alert settings are common across all types of datapoints and are discussed in detail in the Alerting on Datapoints section of this support article.

Configurations Exclusive to Normal Datapoints

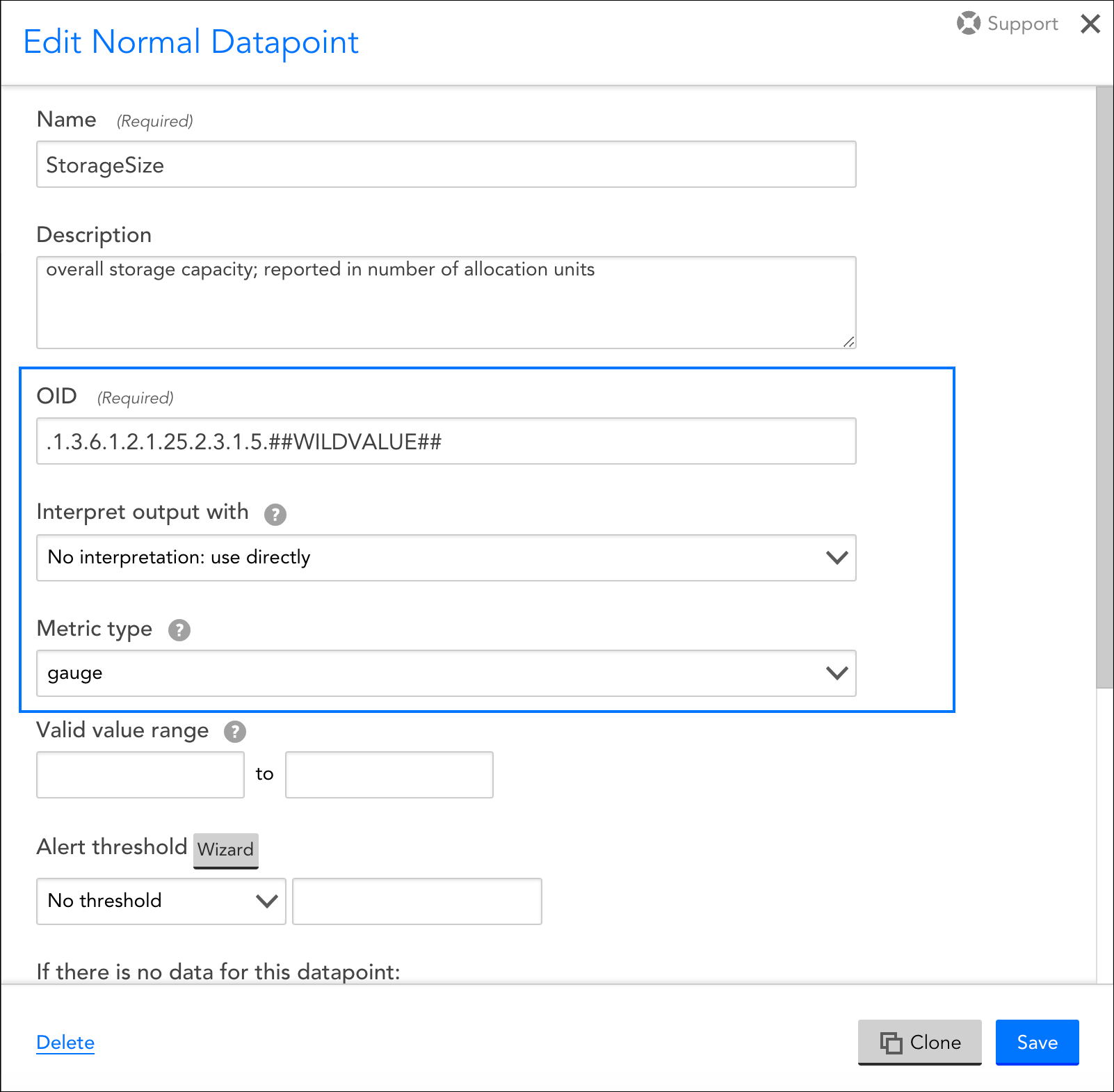

The configurations you need to complete for normal datapoints are highly dependent on the type of collection method being used by the DataSource (as determined by the Collector field, see Creating a DataSource).

For example, the Metric Type and Interpret output with fields are available when using the SNMP and WMI data collection methods, but not when evaluating returned status codes using the script or webpage method. For more information on these fields, see Normal Datapoints.

In addition, there is a field (or set of options) that specifies what raw output should be collected, but the wording for this field (or these options) changes dynamically depending on the collection method being used. For example, this field is titled WMI CLASS attribute when defining datapoints collected via WMI and OID when defining datapoints collected via SNMP. Similarly, if defining datapoints collected via JMX, the fields MBean object and MBean attribute display. As one more example, if defining datapoints collected via script or batchscript, a listing of script source data options displays. For more information on configuring this dynamic raw output field, see the individual support articles available in our Data Collection Methods topic.

These datapoint configurations display for DataSources that use an SNMP collection method, but for other types of collection methods (e.g. WMI, script, JMX, etc.), a different set of configurations displays.

Configurations Exclusive to Complex Datapoints

Method

If you are constructing your complex datapoint as an expression that is based on existing normal datapoints or resource properties (called a standard complex datapoint), select “Use an expression (infix or rpn) to calculate the value” from the Method field’s dropdown menu.

If you need to process the raw collected data in a more sophisticated way that cannot be achieved by manipulating the normal datapoints or assigned resource properties, select “Use groovy script to calculate the value” from the Method field’s dropdown menu. This is called a Groovy complex datapoint.

For more information on these two methods for building complex datapoints, along with sample expressions and Groovy scripts, see Complex Datapoints.

Expression/Groovy Source Code

If the “Use an expression (infix or rpn) to calculate the value” option was chosen from the Method field’s dropdown menu, enter the expression for your standard complex datapoint in the Expression field.

If the “Use groovy script to calculate the value” option was chosen, enter the Groovy script that will be used to process the datapoint in the Groovy Source Code field.

For more information on building complex datapoints using expressions and Groovy scripts, see Complex Datapoints.

Alerting on Datapoints

Once datapoints are configured, they can be used to trigger alerts when data collected exceeds the threshold(s) you’ve specified, or when there is an absence of expected data. When creating or editing a datapoint from the Add/Edit Datapoint dialog, which is available for display from the Datapoints area of the DataSource definition, you can configure several alert settings per datapoint, including the value(s) that signify alert conditions, alert trigger and clear intervals, and the message text that should be used when alert notifications are routed for the datapoint.

Thresholds

Datapoint alerts are based on thresholds. When the value returned by a datapoint exceeds a configured threshold, an alert is triggered. There are two types of thresholds that can be set for datapoints: static thresholds and dynamic thresholds. These two types of thresholds can be used independently of one another or in conjunction with one another.

Static Thresholds

A static threshold is a manually assigned expression or value that, when exceeded in some way, triggers an alert. To configure one or more static thresholds for a datapoint, click the Wizard button located to the right of the Alert Threshold field. For detailed information on this wizard and the configurations it supports, see Tuning Static Thresholds for Datapoints.

Note: When setting static thresholds from the DataSource definition, you are setting them globally for all resources in your network infrastructure (i.e. every single instance to which the DataSource could possibly be applied). As discussed in Tuning Static Thresholds for Datapoints, it is possible to override these global thresholds on a per-resource or -instance level.

Dynamic Thresholds

Dynamic thresholds represent the bounds of an expected data range for a particular datapoint. Unlike static datapoint thresholds which are assigned manually, dynamic thresholds are calculated by anomaly detection algorithms and continuously trained by a datapoint’s recent historical values.

When dynamic thresholds are enabled for a datapoint, alerts are dynamically generated when these thresholds are exceeded. In other words, alerts are generated when anomalous values are detected.

To configure one or more dynamic thresholds for a datapoint, toggle the Dynamic Thresholds slider to the right. For detailed information on configuring dynamic threshold settings, see Enabling Dynamic Thresholds for Datapoints.

Note: When setting dynamic thresholds from the DataSource definition, you are setting them globally for all resources in your network infrastructure (i.e. every single instance to which the DataSource could possibly be applied). As discussed in Enabling Dynamic Thresholds for Datapoints, it is possible to override these global thresholds on a per-resource or -instance level.

No Data Alerting

By default, alerts will not be triggered if no data can be collected for a datapoint (or if a datapoint value falls outside of the range set in the Valid value range field). However, if you would like to receive alerts when no data is collected, called No Data alerts, you can override this default. To do so, select the severity of alert that should be triggered from the If there is no data for this datapoint field’s drop-down menu.

While it’s possible to configure No Data alerts for datapoints that have thresholds assigned (static or dynamic), it is not necessarily best practice. For example, it’s likely you will want different alert messages for these scenarios, as well as different trigger and clear intervals. For these reasons, consider setting the No Data alert on a datapoint that has no thresholds in place so that you can customize the alert’s message, as well as its trigger and clear intervals as appropriate for a no data condition.

In most cases, you can choose to set a No Data alert on any datapoint on the DataSource as it is usually not just one specific datapoint that will reflect a no data condition. Rather, all datapoints will reflect this condition as it is typically the result of the entire protocol (e.g. WMI, SNMP, JDBC, etc.) not responding.

Alert Trigger Interval

The Alert trigger interval (consecutive polls) field defines the number of consecutive collection intervals for which an alert condition must exist before an alert is triggered. The length of one collection interval is determined by the DataSource’s Collect every field, as discussed in Creating a DataSource.

The field’s default value of “Trigger alert immediately” will trigger an alert as soon as the datapoint value (or lack of value if No Data alerting is enabled) satisfies an alert condition.

Setting the alert trigger interval to a higher value helps ensure that a datapoint’s alert condition is persistent for at least two data polls before an alert is triggered. The options available from the Alert trigger interval (consecutive polls) field’s dropdown are based on 0 as a starting point, with “Trigger alert immediately” essentially representing “0”. A value of “1” then, for example, would trigger an alert upon the first consecutive poll (or second poll) that returns a valid value outside of the thresholds. By using the schedule that defines how frequently the DataSource collects data in combination with the alert trigger interval, you can balance alerting on a per-datapoint basis between immediate notification of a critical alert state or quieting of alerting on known transitory conditions.

Each time a datapoint value doesn’t exceed the threshold, the consecutive polling count is reset. But it’s important to note that the consecutive polling count is also reset if the returned value transitions into the territory of a higher severity level threshold. For example, consider a datapoint with thresholds set for warning and critical conditions with an alert trigger interval of three consecutive polls. If the datapoint returns values above the warning threshold for two consecutive polls, but the third poll returns a value above the critical threshold, the count is reset. If the critical condition is sustained for the next two polls (for a total of three polls) a critical alert is what is ultimately generated.

Note: If “No Data” is returned, the alert trigger interval count is reset.

Note: The alert trigger interval set in the Alert trigger interval (consecutive polls) field only applies to alerts triggered by static thresholds. Alerts triggered by dynamic thresholds use a dedicated interval that is set as part of the dynamic threshold’s advanced configurations. See Enabling Dynamic Thresholds for Datapoints for more information.
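The consecutive-poll counting described in this section can be modeled with a small Python simulation. It is a simplified sketch under stated assumptions, not LogicMonitor's alerting engine: the names `severity_of` and `first_alert` are hypothetical, `required_polls` is the total number of consecutive polls that must satisfy the condition (so 1 corresponds to “Trigger alert immediately”), only warning and critical thresholds are modeled, and the count is restarted on any change of severity.

```python
import math


def severity_of(value, warn, crit):
    """Map a polled value to a severity, or None if no threshold is exceeded."""
    if value is None or math.isnan(value):
        return None  # "No Data" satisfies no static threshold
    if value > crit:
        return "critical"
    if value > warn:
        return "warning"
    return None


def first_alert(values, warn, crit, required_polls):
    """Return (poll_number, severity) for the first alert triggered, or None."""
    count = 0
    current = None
    for poll, value in enumerate(values, start=1):
        severity = severity_of(value, warn, crit)
        if severity is None:
            # Value back within thresholds, or No Data: the count is reset
            count, current = 0, None
            continue
        if severity != current:
            # Severity changed (e.g. warning -> critical): counting restarts
            count, current = 1, severity
        else:
            count += 1
        if count >= required_polls:
            return poll, severity
    return None


# Two warning polls followed by three critical polls, three polls required
result = first_alert([85, 86, 95, 96, 97], warn=80, crit=90, required_polls=3)
# result == (5, "critical"): the warning count was discarded at poll 3
```

With these hypothetical thresholds, no warning alert is ever raised because the warning condition lasted only two polls; the critical alert fires once the critical condition has been seen three times in a row.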

Alert Clear Interval

The Alert clear interval (consecutive polls) field defines the number of consecutive collection intervals for which an alert condition must not be present before the alert is automatically cleared. The length of one collection interval is determined by the DataSource’s Collect every field, as discussed in Creating a DataSource.

The field’s default value of “Clear alert immediately” will automatically clear the alert as soon as the datapoint value no longer satisfies the alert criteria.

As with the alert trigger interval, the options available from the Alert clear interval (consecutive polls) field’s dropdown are based on 0 as a starting point, with “Clear alert immediately” essentially representing “0”. Setting the alert clear interval to a higher value helps ensure that a datapoint’s value is persistent (i.e. has stabilized) before an alert is automatically cleared. This can prevent the triggering of new alerts for the same condition.

Note: If “No Data” is returned, the alert clear interval count is reset.

Note: The alert clear interval established here is used to clear alerts triggered by both static and dynamic thresholds.

Alert Message

If the “Use default templates” option is selected from the Alert message field’s dropdown menu, alert notifications for the datapoint will use the global DataSource alert message template that is defined in your settings. For more information on global alert message templates, see Alert Messages.

However, as best practice, it is recommended that any datapoint with thresholds defined have a custom alert message that formats the relevant information in the alert, and provides context and recommended actions. LogicMonitor’s out-of-the-box DataSources will typically already feature custom alert messages for datapoints with thresholds defined.

To add a custom alert message, select “Customized” from the Alert message field’s dropdown and enter notification text for the Subject and Description fields that dynamically appear. As discussed in Tokens Available in LogicModule Alert Messages, you can use any available DataSource tokens in your alert message.

Overview

A datapoint is a piece of data that is collected during monitoring. Every DataSource definition must have at least one configured datapoint that defines what information is to be collected and stored, as well as how to collect, process, and potentially alert on that data. LogicMonitor defines two types of datapoints: normal datapoints and complex datapoints.

Normal datapoints represent data you’d like to monitor that can be extracted directly from the raw output collected. For more information on normal datapoints, see Normal Datapoints.

Complex datapoints, on the other hand, enable post-processing of the data returned by monitored systems using data not available in the raw output. This is useful when the data presented by the target resource requires manipulation. For example, on a storage system you typically want to track and alert on percent utilization, which can be calculated based on total capacity and used capacity.

Since the values of complex datapoints are stored in their calculated forms, they can be used in graphs, reports, dashboards and other places in your portal.

LogicMonitor defines two types of complex datapoints: standard complex datapoints and Groovy complex datapoints. Each is discussed in detail in the following sections.

Note: Complex datapoints are configured from the DataSource definition, as outlined in Datapoint Overview.

Standard Complex Datapoints

Standard complex datapoints use LogicMonitor’s expression syntax to calculate new values based on the values of datapoints or device properties.

For example, the SNMP interface MIB provides an OID that reports the inbound octets on an interface (InOctets). Operations engineers tend to think in terms of Mbps rather than octets, so we can convert the value carried by the InOctets datapoint to Mbps using the following expression:

InOctets*8/1000/1000

Standard complex datapoints can also reference device properties within their expressions; simply enclose the property name in double hash marks (i.e. ##PROPERTYNAME##).

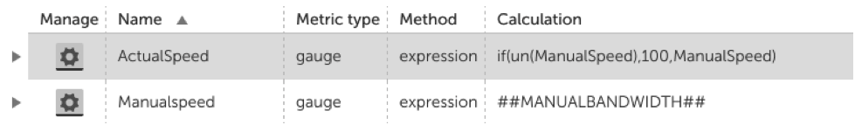

For example, consider that you want to calculate throughput based on a bandwidth limit set for a particular device. You could set a property named “ManualBandwidthLimit”, and then use that property within a standard complex datapoint calculation, as follows:

InOctets*8/##ManualBandwidthLimit##

In this use case, care must be taken to ensure the custom property is defined on all the devices to which the DataSource applies. If the property cannot be found, it will be replaced with an empty string, resulting in an error being returned.

To prevent this issue you could create a standard complex datapoint expression that retrieves the property, and a second standard complex datapoint expression that only uses the property if it has a valid value.

Constructing Standard Complex Datapoints Using Datapoint Expression Syntax

Datapoint expressions can be written in either Infix notation or RPN (reverse Polish notation).

Infix example:

(sentpkts-recdpkts)/sentpkts*100

RPN example:

sentpkts,recdpkts,-,sentpkts,/,100,*

Note: All expression examples in this support article are presented using the Infix notation.
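To make the relationship between the two notations concrete, the following Python sketch evaluates a comma-separated RPN expression with a stack and compares it with the equivalent infix calculation. The evaluator `eval_rpn`, the datapoint names, and the sample values are all illustrative assumptions; it supports only the four basic arithmetic operators and is not LogicMonitor's expression engine.

```python
import operator

# Only the four basic arithmetic operators are handled in this sketch
OPS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}


def eval_rpn(expression, datapoints):
    """Evaluate a comma-separated RPN expression against a dict of datapoint values."""
    stack = []
    for token in expression.split(","):
        token = token.strip()
        if token in OPS:
            right = stack.pop()
            left = stack.pop()
            stack.append(OPS[token](left, right))
        elif token in datapoints:
            stack.append(float(datapoints[token]))
        else:
            stack.append(float(token))  # an arbitrary number
    if len(stack) != 1:
        raise ValueError("malformed RPN expression")
    return stack[0]


# Hypothetical datapoint values
values = {"sentpkts": 200, "recdpkts": 150}

rpn_result = eval_rpn("sentpkts,recdpkts,-,sentpkts,/,100,*", values)
infix_result = (values["sentpkts"] - values["recdpkts"]) / values["sentpkts"] * 100
# Both evaluate to 25.0 (percent of packets lost)
```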

Expression Operands

There are three kinds of operands that can be used in datapoint expressions:

- Datapoint names

- Device property names

- Arbitrary numbers

The following example uses numbers and datapoint names to calculate the percentage of inbound packets discarded on a particular interface:

100 * InDiscards/(InBroadcastPkts+InUcastPkts+InMulticastPkts+InDiscards)

Expression Operators

Datapoint expressions support the typical operators you find in most programming/scripting languages.

Arithmetic Operators

Bitwise Operators

Logical Operators

Note: The Boolean data type is not supported in datapoint expressions. Instead, any non-zero value will be treated as TRUE and zero will be treated as FALSE. So, in the case where both operands are non-zero, both of the following logical operators evaluate as TRUE:

- && / and

- || / or

Expression Functions

In addition to using operators to perform calculations, you can use the following functions in datapoint expressions:

Conditional Syntax

Comparison Functions

The following comparison functions are available. Typically these are used within a conditional (if) statement.

Mathematical Functions

A number of mathematical operations are available:

Constants

The following mathematical constants can be used:

Percentile Functions

Percentile functions are special functions that can be used only in virtual datapoint definitions (within graphs and widgets)—not in complex datapoint expressions. For more information on virtual datapoints, see DataSource Graphs.

Percentile Function Examples

- Consider plotting an hourly graph for bandwidth (bps). In our database, we’ve collected 10 values for bps for this hour: [2, 3, 7, 6, 1, 3, 4, 10, 2, 4]. Sorted, these values are [1, 2, 2, 3, 3, 4, 4, 6, 7, 10]. The percent(bps, 95) function will return 10 (the value at index 9 of the 0-based array), meaning that 95% of the samples are below this value.

- As another example, to calculate the 95% traffic rate (using the max of the in and out 95% rate, as many ISPs do), you could add the following to the Throughput graph of the snmp64_If- datasource:

  - Add a virtual datapoint 95In, with an expression of percent(InMaxBits,95)

  - Add a virtual datapoint 95Out, with an expression of percent(OutMaxBits,95)

  - Add a virtual datapoint 95Percentile, with an expression of if(gt(95In,95Out), 95In, 95Out)

  - Add the graph line 95Percentile to plot the 95% line in whatever color you choose.

- Also, note that percentile functions require that x is a datapoint rather than an expression. This means that percent(InOctets*8, 95) will not work, but percent(InOctets, 95) will. To implement the former, first create a virtual datapoint containing the mathematical expression and use that datapoint as an argument to the percentile function.
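The first example above can be reproduced with a short Python sketch. It assumes a nearest-rank percentile (sort the samples, then take the value at rank ceil(p × n / 100)), which is consistent with the worked example but is not confirmed here as the algorithm LogicMonitor actually uses; the function name `percent` simply mirrors the expression function.

```python
import math


def percent(samples, p):
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    index = max(rank, 1) - 1                  # convert to a 0-based index
    return ordered[index]


bps = [2, 3, 7, 6, 1, 3, 4, 10, 2, 4]
p95 = percent(bps, 95)  # rank 10 -> index 9 of the sorted list -> 10
```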

Special Case: Negative Values

Datapoint expressions cannot start with a negative sign. To use a negative value in an expression, subtract the value from zero (e.g. use 0-2 rather than -2).

Special Case: Unknown Values

Unknown values are dealt with in two ways, as either part of a test condition, or as a result.

Consider the expression:

if(un(DatapointOne),0,DatapointOne)

In this case, the expression will return 0 if the value of DatapointOne is unknown (NaN — not a number, such as absence of data, data in non-numerical format, or infinity). If the value of DatapointOne is anything other than NaN (an actual number) then that number will be returned.

Next, consider:

if(lt(DatapointTwo,5),unkn,DatapointTwo)

This expression will return NaN (which will be displayed as “No Data”) if the value of DatapointTwo is less than 5. If DatapointTwo returns a value of 5 or greater, then that value will be displayed.

NaN values also need special consideration when used with operations that only return true/false. The Java language specification dictates that logical expressions always evaluate to TRUE or FALSE, even if one of the operands is NaN. For example, the expression eq(x,y) will always evaluate to 0 or 1, even if x and/or y is NaN.

To work around this, you can check whether the values are NaN before evaluating the expression:

if(or(un(Datapoint1), un(Datapoint2)), unkn(), <expression to evaluate>)
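The same guard pattern can be illustrated in Python, where comparisons involving NaN also yield a plain true/false result. This is an analogy only: the helper names `un`, `eq`, `zero_if_unknown`, and `guarded` are written for this sketch and are not LogicMonitor APIs.

```python
import math


def un(x):
    """Return 1 if x is unknown (NaN), otherwise 0."""
    return 1 if math.isnan(x) else 0


def eq(a, b):
    """Comparison that always yields 0 or 1, even when an operand is NaN."""
    return 1 if a == b else 0


def zero_if_unknown(x):
    """Mirrors if(un(DatapointOne),0,DatapointOne)."""
    return 0 if un(x) else x


def guarded(a, b, expression):
    """Mirrors if(or(un(a), un(b)), unkn(), <expression to evaluate>)."""
    if un(a) or un(b):
        return math.nan
    return expression(a, b)


nan = float("nan")
unguarded_result = eq(nan, 5)          # 0: the NaN operand is silently hidden
guarded_result = guarded(nan, 5, eq)   # NaN: the unknown input is propagated
```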

Below is a list of operator/function behavior with NaN values:

Groovy Complex Datapoints

Groovy complex datapoints employ a Groovy script to process the raw collected data. In a Groovy complex datapoint, you have access to the raw data payload, but not the actual datapoints (e.g. those calculated as a “counter” or “derive”). Also, Groovy allows for calculations based on both device and instance properties. Typically one would use a Groovy complex datapoint for manipulating data in a way that cannot be achieved with a standard complex datapoint.

In most cases, you can use standard complex datapoints to manipulate collected data. But, when you need to do additional processing not possible with datapoint expressions, you can turn to Groovy scripting for more full-featured datapoint calculations.

When you use Groovy to construct a complex datapoint, the Collector pre-populates Groovy variables with the raw data collected from normal datapoints. The type and syntax of these objects varies based on the collection method employed by the DataSource. See the table below for the variable name(s) assigned based on the collection type.

Note: Groovy complex datapoints reference the raw data exactly as it’s collected (i.e. as a “gauge” datapoint), rather than calculated as a rate such as “counter” or “derive” datapoint.

Once you’ve processed your data in Groovy, you’ll need to use the return(); statement to return the calculated value for the datapoint.

Device and Instance Properties in Groovy Complex Datapoints

You can use the device or instance properties that you’ve collected within a Groovy complex datapoint.

Device or instance properties can be referenced within Groovy as:

- hostProps.get("auto.PropertyName") to retrieve a device property

- instanceProps.get("auto.PropertyName") to retrieve an instance property

- taskProps.get("auto.PropertyName") to retrieve a property of either type

See Embedded Groovy Scripting for more details and examples.

Example: Groovy Complex Datapoint using WMI

Consider a WMI datasource that queries the class Win32_OperatingSystem. If you needed to use the raw date output from the WMI class you would write something like:

    rawDate = output["LOCALDATETIME"];
    return(rawDate);

Example: Groovy Complex Datapoint using SNMP

Consider an SNMP datasource that queries interface data, such as snmp64_If-. If you wanted to perform some calculations on the raw values returned you might do something like the following:

    // initialize the totals so the sum is defined even if an OID is missing
    totalInOctets = 0;
    totalOutOctets = 0;
    // iterate through output array
    output.each { line ->
        def (oid, value) = line.toString().split('=');
        // get inOctet and outOctet values
        if (oid.startsWith(".1.3.6.1.2.1.31.1.1.1.6")) {
            totalInOctets = value.toFloat();
        }
        else if (oid.startsWith(".1.3.6.1.2.1.31.1.1.1.10")) {
            totalOutOctets = value.toFloat();
        }
    }
    // sum the values and return
    totalOctets = totalInOctets + totalOutOctets;
    return(totalOctets);

Example: Groovy Complex Datapoint using JMX

In this example, consider a JMX Mbean that returns a time as a date string. There are many ways of presenting time as a string, so LogicMonitor cannot include a post-processor for all possible formats, but this is easily achieved with a Groovy script. Suppose the following Mbean returns a time in the form “Thu Sep 11 06:40:30 UTC 2016”:

solr/##WILDVALUE2##:type=/replication,id=org.apache.solr.handler.ReplicationHandler:replicationFailedAt

To create a datapoint that reports the time elapsed, in seconds, between the time reported by that Mbean and now, we could use the following Groovy code:

    // read the raw date string reported by the Mbean
    rawDate = output["solr/##WILDVALUE2##:type=/replication,id=org.apache.solr.handler.ReplicationHandler:replicationFailedAt"];
    // parse the string into a Date using its format
    Date fd = Date.parse('EEE MMM dd HH:mm:ss z yyyy', rawDate);
    today = new Date();
    // difference in milliseconds, converted to seconds
    timeDiff = (today.time - fd.time)/1000;
    return(timeDiff);

Example: Groovy Complex Datapoint using HTTP

Here’s a simple example of how you might use Groovy to manipulate the status code returned by the HTTP collection mechanism.

    if (status < 2) {
        myStatus = 5;
    }
    else {
        myStatus = 10;
    }
    return(myStatus);

Overview

A datapoint is a piece of data that is collected during monitoring. Every DataSource definition must have at least one configured datapoint that defines what information is to be collected and stored, as well as how to collect, process, and potentially alert on that data. LogicMonitor defines two types of datapoints: normal datapoints and complex datapoints.

Normal datapoints represent data you’d like to monitor that can be extracted directly from the raw output collected. Complex datapoints, on the other hand, represent data that needs to be processed in some way using data not available in the raw output (e.g. using scripts or expressions) before being stored. For more information on complex datapoints, see Complex Datapoints.

Normal datapoints are configured from the DataSource definition, as outlined in Datapoint Overview. In this support article, we’ll discuss in detail the various configurations that make up a normal datapoint.

Normal Datapoint Metric Types

When configuring a normal datapoint from the DataSource definition, one of three metric types can be assigned: gauge, counter, or derive.

Gauge Metric Type

The gauge metric type stores the reported value directly for the datapoint. For example, data with a raw value of 90 is stored as 90.

Counter Metric Type

The counter metric type interprets collected values as a rate of occurrence and stores data as a rate per second for the datapoint. For example, if an interface counter is sampled once every 120 seconds and reports values of 600, 1800, 2400, and 3600 respectively, the resulting stored values would be 10 = ((1800-600)/120), 5 = ((2400-1800)/120), and 10 = ((3600-2400)/120).

Counters account for counter wraps. For example, if one data sample is 4294967290, and the next data sample, occurring one second later, is 6, the counter will store a value of 12, and assume the counter wrapped on the 32-bit limit. This behavior can lead to incorrect spikes in data if a system is restarted, and counters start again at 0 (i.e. the counter metric type may assume a very rapid rate caused a counter wrap, and therefore stores a huge value). For this reason, it is advisable to set max values for datapoints that are assigned counter metric types. This is accomplished using the Valid Value Range field available when configuring datapoints from the DataSource definition, as discussed in Datapoint Overview.
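The counter arithmetic described above, including the wrap correction, can be sketched as follows. This is a minimal model under stated assumptions rather than the Collector's actual code: the function name `counter_rates` is hypothetical, and a negative difference is always treated as a single wrap at the 32-bit limit.

```python
def counter_rates(samples, interval_seconds, counter_bits=32):
    """Convert successive raw counter samples into per-second rates.

    Assumption for this sketch: a negative difference means the counter
    wrapped exactly once at 2**counter_bits.
    """
    modulus = 2 ** counter_bits
    rates = []
    for previous, current in zip(samples, samples[1:]):
        delta = current - previous
        if delta < 0:
            delta += modulus  # correct for the assumed wrap
        rates.append(delta / interval_seconds)
    return rates


# The 120-second example from the text
normal = counter_rates([600, 1800, 2400, 3600], 120)   # [10.0, 5.0, 10.0]

# The wrap example: 4294967290 followed by 6 one second later
wrapped = counter_rates([4294967290, 6], 1)            # [12.0]

# A restart (counter back to 0) is misread as a wrap and yields a huge rate
spike = counter_rates([5000, 0], 60)[0]
```

The last line shows why a maximum in the valid value range is advisable for counter datapoints: the restart produces a rate of tens of millions per second, which a sensible maximum would reject.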

It is rare that you will have a use case for a counter metric type. Unless you are working with a datapoint that wraps frequently (e.g. a datapoint for a gigabit interface and using a 32-bit counter), you should use a derive metric type instead of counter.

Derive Metric Type

Derive metric types are similar to counters, with the exception that they do not correct for counter wraps. Derives can return negative values.
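For contrast with the counter metric type, here is a minimal Python sketch of the derive calculation: the same per-second rate, but with no wrap correction, so a decreasing raw value produces a negative rate. The function name `derive_rates` and the sample values are illustrative assumptions.

```python
def derive_rates(samples, interval_seconds):
    """Per-second rate of change between successive samples, with no wrap correction."""
    return [
        (current - previous) / interval_seconds
        for previous, current in zip(samples, samples[1:])
    ]


increasing = derive_rates([600, 1800, 2400, 3600], 120)  # [10.0, 5.0, 10.0]
after_restart = derive_rates([5000, 200], 60)            # [-80.0]
```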

Configuring the Raw Output to Be Collected for a Normal Datapoint

When configuring a datapoint from the DataSource definition (as discussed in Datapoint Overview), there is a field (or set of options) that specifies what raw output should be collected. However, the wording for this field (or these options) changes dynamically, depending on the collection method being used by the DataSource (as determined by the Collector field; see Creating a DataSource).

For example, this field is titled WMI CLASS attribute when defining datapoints collected via WMI and titled OID when defining datapoints collected via SNMP. Similarly, if defining datapoints collected via JMX, the fields MBean object and MBean attribute display. And to provide one more example, if defining datapoints collected via script or batchscript, a listing of script source data options displays. For more information on configuring this dynamic raw output field, see the individual support articles available in our Data Collection Methods topic.

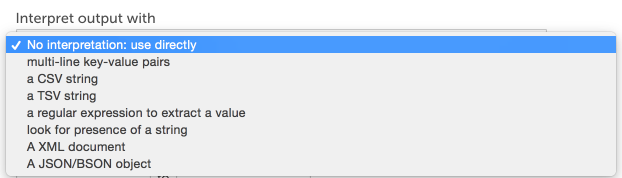

Post Processing Interpretation Methods for Normal Datapoints

The data collected from your devices can sometimes be used directly (e.g. the exit code returned by a script when using the script collection method), but other times requires further interpretation. When further interpretation is available for a collection method, the Interpret output with field becomes available in the datapoint’s configurations (which are found in the DataSource definition).

This field informs LogicMonitor on how to extract the desired values from the raw output collected. If the default “No interpretation: use directly” option is selected, the raw output will be used as the datapoint value; typically, DataSources with an SNMP collection method leave the raw output unprocessed as the numeric output of the OID is the data that should be stored and monitored.

The following sections overview the various interpretation methods available for processing collected data. The methods available for a particular datapoint are dependent on the type of collection method being used by the DataSource (as determined by the Collector field, see Creating a DataSource).

Multi-Line Key-Value Pairs

This interpretation method treats a multi-line string raw measurement as a set of key-value pairs separated by an equal sign or colon.

Buffers=11023

BuffersAvailable=333

heapSize=245MB

For the above multi-line string, you would extract the total number of buffers by specifying “Buffers” as the key, shown next.

Note: Key names should be unique for each key-value pair. If a raw measurement output contains two identical key names paired with different values, where the separating character (equal sign or colon) differs, the Key-Value Pairs Post Processor method will not be able to extract the values.

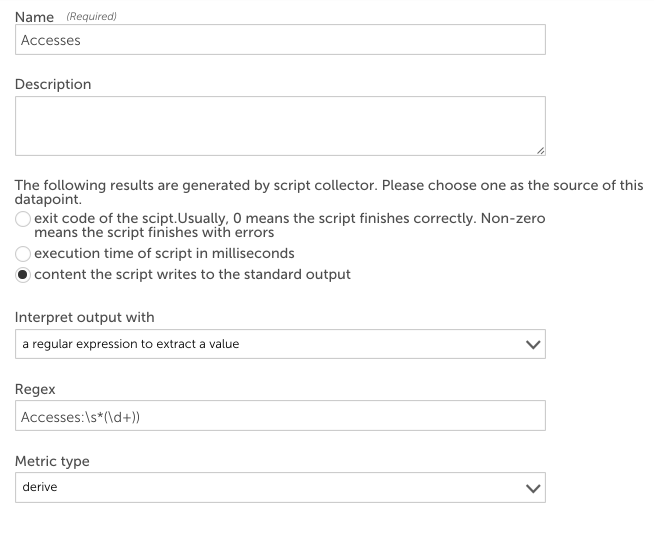
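As a rough illustration of what this interpretation method does, the Python sketch below looks up a key in the sample output above. It is not LogicMonitor's post-processor; the function name `extract_value` and the choice to split on the first equal sign or colon are assumptions made for the example.

```python
import re


def extract_value(raw_output, key):
    """Return the value paired with `key`, or None if the key is absent."""
    for line in raw_output.splitlines():
        # Split on the first equal sign or colon only.
        parts = re.split(r"[=:]", line, maxsplit=1)
        if len(parts) == 2 and parts[0].strip() == key:
            return parts[1].strip()
    return None


raw_output = "Buffers=11023\nBuffersAvailable=333\nheapSize=245MB"
print(extract_value(raw_output, "Buffers"))  # 11023
```

Because the key is compared against the whole key name, “Buffers” does not accidentally match the “BuffersAvailable” line.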

Regular Expressions

You can use regular expressions to extract data from more complex strings. The contents of the first capture group in the regex (i.e. the text matched inside the first pair of parentheses) will be assigned to the datapoint. For example, the Apache DataSource uses a regular expression to extract counters from the server-status page. The raw output of the webpage collection method looks similar to the following:

Total Accesses: 8798099

Total kBytes: 328766882

CPULoad: 1.66756

Uptime: 80462

To extract the Total Accesses, you could define a datapoint as follows:

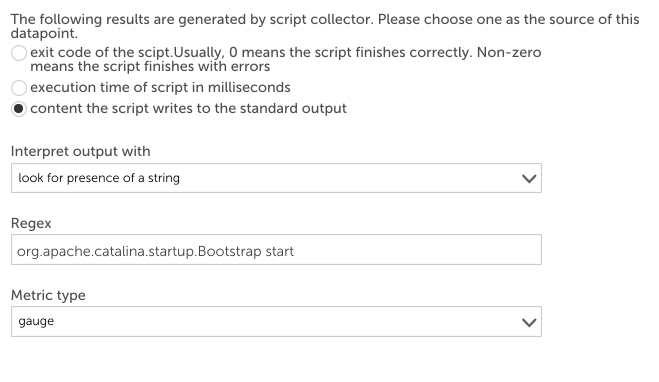
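The first-capture-group behavior can be tried out in Python against the sample output above. This is only a sketch: the pattern `Total Accesses: (\d+)` and the helper name `first_capture_group` are assumptions for illustration, and Python's regex dialect may differ in details from the one LogicMonitor uses.

```python
import re

raw_output = (
    "Total Accesses: 8798099\n"
    "Total kBytes: 328766882\n"
    "CPULoad: 1.66756\n"
    "Uptime: 80462"
)


def first_capture_group(pattern, text):
    """Return the text matched by the first capture group, or None."""
    match = re.search(pattern, text)
    return match.group(1) if match else None


print(first_capture_group(r"Total Accesses: (\d+)", raw_output))  # 8798099
```

Only the text inside the parentheses is returned; the literal “Total Accesses: ” prefix anchors the match but is not part of the value.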

TextMatch

The “Look for presence of a string” (TextMatch) method tests whether a string is present within the raw output. This method supports regular expressions for text matching. If the string is present, the datapoint value is 1; otherwise it is 0.

For example, to check if Tomcat is running on a host, you may have a script DataSource that periodically executes ps -ef | grep java. The output from the pipeline should contain org.apache.catalina.startup.Bootstrap start if Tomcat is running.

The datapoint below checks if the raw measurement output contains the string org.apache.catalina.startup.Bootstrap start. If yes, the datapoint will be 1 indicating Tomcat is running, otherwise the datapoint value will be 0.

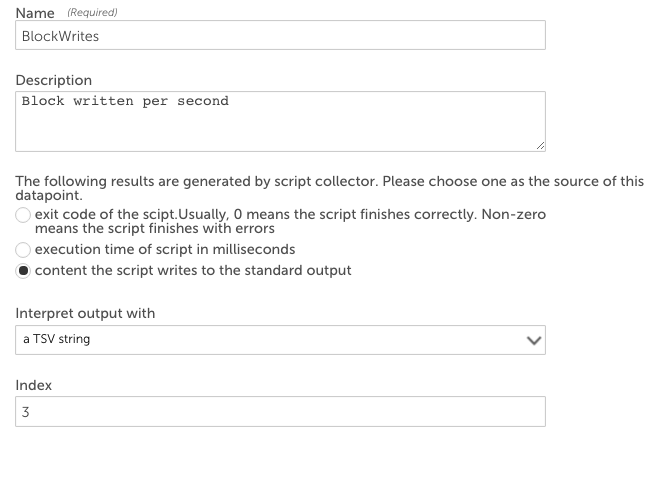
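The 1-or-0 result can be sketched in Python as follows. The helper name `text_match` and the sample process listing are hypothetical and exist only to illustrate the behavior described above.

```python
import re


def text_match(pattern, raw_output):
    """Return 1 if the pattern is found anywhere in the output, else 0."""
    return 1 if re.search(pattern, raw_output) else 0


# Hypothetical output of `ps -ef | grep java` on a host running Tomcat.
running = (
    "tomcat 1234 1 0 10:00 ? 00:01:12 /usr/bin/java "
    "-classpath /opt/tomcat/bin/bootstrap.jar "
    "org.apache.catalina.startup.Bootstrap start"
)
stopped = "root 4321 4000 0 10:05 pts/0 00:00:00 grep java"

pattern = r"org\.apache\.catalina\.startup\.Bootstrap start"
print(text_match(pattern, running), text_match(pattern, stopped))  # 1 0
```

The dots are escaped because the pattern is treated as a regular expression, where an unescaped dot matches any character.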

CSV and TSV

If the raw measurement is an array of comma-separated values (CSV) or of tab-separated values (TSV), you can use the CSV and TSV methods to extract values, respectively.

There are three forms of parameters that the post processor will accept for CSV and TSV methods:

- A simple integer N. Extract the Nth element of the first line of input (elements start at zero)

- Index=Nx,Ny. Extract the Nxth element of the Nyth line of the input

- Line=regex index=Nx. Extract the Nxth element of the first line which matches the given regex

For example, a script DataSource executes iostat | grep 'sda' | head -1 to get the statistics of hard disk “sda”. The output is a TSV array:

sda 33.75 3.92 719.33 9145719 1679687042

The fourth column (719.33) is blocks written per second. Because elements are counted from zero, the fourth column has index 3. To extract this value into a datapoint using the TSV interpretation method, select “a TSV string” as the method to interpret the output and enter “3” as the index.
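The zero-based indexing can be checked with a short Python sketch. It covers only the simple integer form and the line-matching form of the parameter; the function name `extract_field` and its arguments are assumptions for illustration, and the sample line uses explicit tab characters.

```python
import re


def extract_field(raw_output, index, delimiter="\t", line_regex=None):
    """Return the field at a zero-based index.

    Without line_regex, the first line is used; with it, the first
    line matching the regex is used.
    """
    lines = raw_output.splitlines()
    if line_regex is not None:
        lines = [ln for ln in lines if re.search(line_regex, ln)]
    if not lines:
        return None
    fields = lines[0].split(delimiter)
    return fields[index] if 0 <= index < len(fields) else None


raw_output = "sda\t33.75\t3.92\t719.33\t9145719\t1679687042"
print(extract_field(raw_output, 3))  # 719.33
```

For CSV input, the same sketch works with `delimiter=","`.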

HexExpr

The “Hex String with Hex Regex Extract Value” (HexExpr) method only applies to the TCP and UDP data collection methods. It is applied to the TCP and UDP payloads returned by these collection methods.

The payload is treated as a byte array. In the Regex field, you specify an offset and a length in the format offset:length. The length can be 1, 2, 4, or 8 (corresponding to the data types byte, short, int, or long, respectively). HexExpr returns the value of the byte (or short, int, or long) that starts at the given offset of the array.

For interpreting 32-bit values, you can also specify the underlying byte ordering with the following method selections:

- A Big-Endian 32-bit Integer Hex String with Hex Regex Extract Value

- A Little-Endian 32-bit Integer Hex String with Hex Regex Extract Value

The HexExpr method can be very useful if you’d like a datapoint to return the value of a field in binary packets such as DNS.

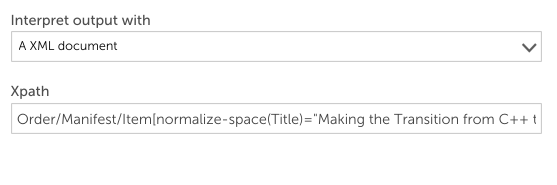
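To make the offset:length idea concrete, the Python sketch below reads an integer from a byte array. It is an illustration under stated assumptions rather than LogicMonitor's implementation: the function name `hex_expr`, the unsigned interpretation, the big-endian default, and the sample bytes (laid out like a DNS header) are all hypothetical.

```python
def hex_expr(payload, spec, byteorder="big"):
    """Read an unsigned integer from `payload` using an 'offset:length' spec."""
    offset_text, length_text = spec.split(":")
    offset, length = int(offset_text), int(length_text)
    if length not in (1, 2, 4, 8):
        raise ValueError("length must be 1, 2, 4, or 8")
    chunk = payload[offset:offset + length]
    if len(chunk) != length:
        raise ValueError("payload is too short for the requested field")
    return int.from_bytes(chunk, byteorder)


# Hypothetical 12-byte DNS header: ID, flags, then four 16-bit counts.
payload = bytes.fromhex("1a2b81800001000200000000")
print(hex_expr(payload, "6:2"))  # 2 (the answer-count field in this sample)
```

Passing `byteorder="little"` shows how the same four bytes yield a different value depending on the byte ordering selected.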

XML

The XML Document interpretation uses XPath syntax to navigate through elements and attributes in an XML document. For example, if the webpage collection method was used to collect the following content…

<Order xmlns="https://www.example.com/myschema.xml">

<Customer>

<Name>Bill Buckram</Name>

<Cardnum>234 234 234 234</Cardnum>

</Customer>

<Manifest>

<Item>

<ID>209</ID>

<Title>Duke: A Biography of the Java Evangelist</Title>

<Quantity>1</Quantity>

<UnitPrice>12.75</UnitPrice>

</Item>

<Item>

<ID>204</ID>

<Title>

Making the Transition from C++ to the Java(tm) Language

</Title>

<Quantity>1</Quantity>

<UnitPrice>10.75</UnitPrice>

</Item>

</Manifest>

</Order>

…you could extract the order ID for the book “Making the Transition from C++ to the Java(tm) Language” from the XML page above by specifying in the datapoint configuration that the HTTP response body should be interpreted as an XML document and entering the following Xpath into the Xpath field:

Order/Manifest/Item[normalize-space(Title)="Making the Transition from C++ to the Java(tm) Language"]/ID

Any Xpath expression that returns a number is supported. Other examples that could be used to extract data from the sample XML above include:

- count(/Order/Manifest/Item)

- sum(/Order/Manifest/Item/UnitPrice)

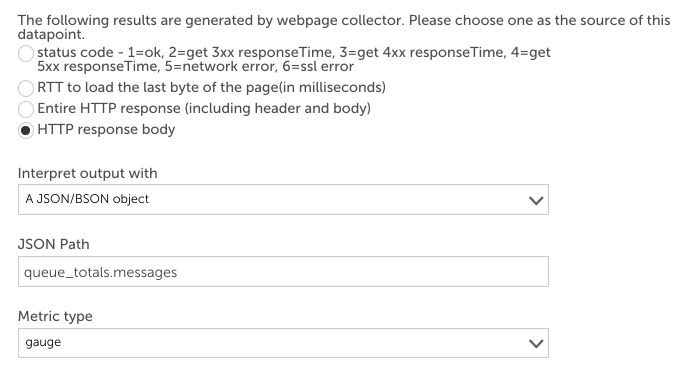
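To see what these three expressions evaluate to, the sketch below reproduces them in Python. The standard library's ElementTree supports only a subset of XPath (no normalize-space, count, or sum), so the functions are emulated in plain Python; the prefix `o` and the helper `normalize_space` are assumptions of this example, and the explicit namespace mapping is a requirement of ElementTree rather than something the article prescribes.

```python
import xml.etree.ElementTree as ET

# ElementTree needs the document's default namespace spelled out.
NS = {"o": "https://www.example.com/myschema.xml"}

xml_text = """<Order xmlns="https://www.example.com/myschema.xml">
  <Customer><Name>Bill Buckram</Name><Cardnum>234 234 234 234</Cardnum></Customer>
  <Manifest>
    <Item><ID>209</ID><Title>Duke: A Biography of the Java Evangelist</Title>
      <Quantity>1</Quantity><UnitPrice>12.75</UnitPrice></Item>
    <Item><ID>204</ID><Title>
      Making the Transition from C++ to the Java(tm) Language
      </Title><Quantity>1</Quantity><UnitPrice>10.75</UnitPrice></Item>
  </Manifest>
</Order>"""


def normalize_space(text):
    """Trim the text and collapse runs of whitespace, like XPath normalize-space()."""
    return " ".join((text or "").split())


root = ET.fromstring(xml_text)
items = root.findall("o:Manifest/o:Item", NS)

wanted = "Making the Transition from C++ to the Java(tm) Language"
order_id = next(
    item.findtext("o:ID", namespaces=NS)
    for item in items
    if normalize_space(item.findtext("o:Title", namespaces=NS)) == wanted
)
item_count = len(items)  # emulates count(/Order/Manifest/Item)
total_price = sum(float(i.findtext("o:UnitPrice", namespaces=NS)) for i in items)

print(order_id, item_count, total_price)  # 204 2 23.5
```

The normalize-space step matters here because the second Title element spans several lines and is padded with whitespace.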

JSON

Some collection methods return a JSON literal string as the value of one of the raw datapoints. For example, you can create a webpage DataSource that sends an HTTP request for /api/overview to a RabbitMQ server to collect its performance information. RabbitMQ returns a JSON literal string like this:

{

"management_version":"2.5.1",

"statistics_level":"fine",

"message_stats":[],

"queue_totals":{

"messages":0,

"messages_ready":0,

"messages_unacknowledged":0

},

"node":"rabbit@labpnginx01",

"statistics_db_node":"rabbit@labpnginx01",

"listeners":[

{

"node":"rabbit@labpnginx01",

"protocol":"amqp",

"host":"labpnginx01",

"ip_address":"::",

"port":5672

}

]

}

You could create a datapoint that uses the JSON/BSON post-processor to extract the number of messages in the queue.

Members can be retrieved using dot or subscript operators. LogicMonitor uses the syntax used in JavaScript to identify objects. For example, “listeners[0].port” will return 5672 for the raw data displayed above.
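The same lookups can be mirrored in Python to confirm which values a path points at. This sketch uses Python's own dictionary and list indexing, not LogicMonitor's path syntax, and the JSON is an abbreviated copy of the sample above.

```python
import json

raw_output = """{
  "management_version": "2.5.1",
  "queue_totals": {"messages": 0, "messages_ready": 0, "messages_unacknowledged": 0},
  "node": "rabbit@labpnginx01",
  "listeners": [
    {"node": "rabbit@labpnginx01", "protocol": "amqp",
     "host": "labpnginx01", "ip_address": "::", "port": 5672}
  ]
}"""

data = json.loads(raw_output)

# Equivalent of the path listeners[0].port
print(data["listeners"][0]["port"])      # 5672
# The message count lives under the queue_totals object.
print(data["queue_totals"]["messages"])  # 0
```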

LogicMonitor supports JSON Path to select objects or elements. A comparison of Xpath and JSON Path syntax elements:

{

"store":{

"book":[

{

"title":"Harry Potter and the Sorcerer's Stone",

"price":"10.99"

},

{

"title":"Harry Potter and the Chamber of Secrets",

"price":"10.99"

},

{

"title":"Harry Potter and the Deathly Hallows",

"price":"9.99"

},

{

"title":"Lord of the Rings: The Return of the King",

"price":"17.99"

},

{

"title":"Lord of the Rings: The Two Towers",

"price":"17.99"

}

]

}

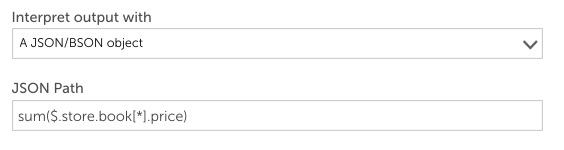

}To retrieve the total price of all the books, you could use the aggregate function of

sum($.store.book[*].price), shown next.

To break this down: sum is the aggregate function. $ is the start of JsonPath. * is a wildcard to return all objects regardless of their names. Then the price element for each object is returned.

The aggregate functions can only be used in the outermost layer.

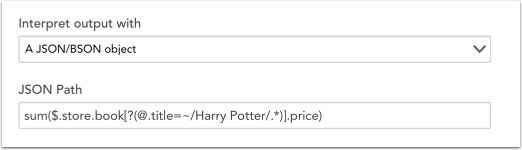
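To check what the sum over all books should come to, the aggregation can be reproduced in Python. This is a sketch, not a JSON Path evaluator: it walks the parsed structure by hand, and converting the quoted price strings to numbers with `float` is an assumption about how the values are coerced.

```python
import json

raw_output = """{"store": {"book": [
  {"title": "Harry Potter and the Sorcerer's Stone", "price": "10.99"},
  {"title": "Harry Potter and the Chamber of Secrets", "price": "10.99"},
  {"title": "Harry Potter and the Deathly Hallows", "price": "9.99"},
  {"title": "Lord of the Rings: The Return of the King", "price": "17.99"},
  {"title": "Lord of the Rings: The Two Towers", "price": "17.99"}
]}}"""

books = json.loads(raw_output)["store"]["book"]

# Counterpart of book[*].price: take the price of every element in the array.
prices = [float(book["price"]) for book in books]
total = sum(prices)

print(round(total, 2))  # 67.95
```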

Regex filter =~

The Regex Filter =~ can be used to select all elements that match the specified regular expression. For example, to calculate the sum of the price of all the books in the Harry Potter series, you could use the following expression:

This expression can be broken down as the sum of the price of all books in the store that pass the filter expression (i.e. those whose title matches the regex “Harry Potter”). You can use regex match only in filter expressions.

“@” means the current object, which represents each book object in the book array in the example.
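The effect of such a filter can be sketched in Python. This is not JSON Path syntax: the list comprehension plays the role of the filter, the loop variable `book` plays the role of “@”, and the use of `re.search` plus `float` conversion of the quoted prices are assumptions of the example. The data mirrors the sample book array above.

```python
import re

books = [
    {"title": "Harry Potter and the Sorcerer's Stone", "price": "10.99"},
    {"title": "Harry Potter and the Chamber of Secrets", "price": "10.99"},
    {"title": "Harry Potter and the Deathly Hallows", "price": "9.99"},
    {"title": "Lord of the Rings: The Return of the King", "price": "17.99"},
    {"title": "Lord of the Rings: The Two Towers", "price": "17.99"},
]

# Keep only the books whose title matches the regex, then sum their prices.
matching = [book for book in books if re.search(r"Harry Potter", book["title"])]
harry_potter_total = sum(float(book["price"]) for book in matching)

print(len(matching), round(harry_potter_total, 2))  # 3 31.97
```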