Why are we still handling IT incident response like it’s 2014?

Every day, ITOps teams are flooded with alerts, spread thin across hybrid systems, and stuck trying to stitch together visibility from solutions that don’t talk to each other. The incidents keep coming, but the tools aren’t getting smarter—and the humans are burned out.

Even with best practices in place, response is often slow, inconsistent, and reactive. You chase symptoms instead of solving problems. You escalate what you can’t decode. And too often, the same issue reappears because the system didn’t learn anything from the last one.

That’s not a people problem; it’s a process problem. And more importantly, it’s a tooling problem.

Manual triage isn’t built for modern infrastructure. Neither are static playbooks or black-box monitoring platforms. What’s needed now is a system that can observe, analyze, and act—with enough context to actually help.

Agentic AIOps makes that shift possible. Edwin AI puts it into practice.

What is incident response?

Incident response is the process ITOps teams follow to detect, investigate, and resolve issues that disrupt normal operations—like outages, performance slowdowns, system errors, or unexpected behavior.

The goal is simple: restore service quickly and prevent the issue from happening again. But in practice, incident response often involves multiple steps and stakeholders, including alert monitoring, root cause analysis, ticketing, escalation, communication, and documentation.

It’s a critical function for keeping systems stable, minimizing downtime, and protecting the business from costly disruptions.

Traditionally, incident response is reactive and manual—driven by processes, playbooks, and on-call rotations. As systems grow more complex, many IT teams are shifting toward automated and intelligent approaches that can help them respond faster and with greater accuracy.

What is an IT incident response plan?

An incident response plan is a documented strategy that outlines how your ITOps team will detect, respond to, and recover from system issues or disruptions.

It typically includes:

- Clear roles and responsibilities: who does what during an incident.

- Step-by-step procedures for identifying, prioritizing, and resolving issues.

- Escalation paths and communication protocols.

- Guidelines for documenting and learning from each incident.

The goal of an incident response plan is to make sure your team can act quickly and consistently, even under pressure. It helps reduce downtime, improve response time, and avoid repeated mistakes.

Who handles incident response?

Incident response is typically handled by a cross-functional team that includes people with different areas of expertise. Who gets involved depends on the size of the organization and the severity of the incident, but common roles include:

- IT operations teams: Often the first to detect and respond to infrastructure issues. They monitor systems, triage alerts, and initiate fixes.

- Site Reliability Engineers (SREs) or DevOps teams: Step in for complex or recurring incidents, especially when root cause analysis or service architecture changes are needed.

- Support and service desk staff: Handle incoming tickets and user reports, escalate issues, and help communicate status updates.

- Incident commander or response lead: In more formal setups, one person owns coordination, makes decisions, and keeps the response on track.

- Communications or stakeholder liaison: For major incidents, someone may be assigned to keep business stakeholders, leadership, or customers informed.

Regardless of structure, the goal is the same: restore service fast, limit impact, and prevent the issue from recurring.

Phases of the incident response life cycle

Incident response is about having a consistent, repeatable process to handle problems efficiently. Most IT teams follow a version of the same core life cycle, whether the issue is a server crash, a misconfigured service, or a performance bottleneck.

Here are the 6 key phases:

1. Detection and alerting

Goal: Spot the incident quickly and trigger a timely response.

The process starts when a system identifies something unusual, such as a spike in latency, a failed service, or a critical error. This might come from monitoring tools, logs, or user reports.
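As a rough illustration of how a monitoring tool might flag "a spike in latency," the sketch below applies a simple rolling z-score rule: a sample is flagged when it sits more than `threshold` standard deviations away from the trailing window. The function name, window size, and threshold are illustrative assumptions, not how any particular product (Edwin AI included) detects anomalies.

```python
from statistics import mean, pstdev


def detect_spikes(samples, window=5, threshold=3.0):
    """Return indices of samples that deviate from the trailing `window`
    samples by more than `threshold` standard deviations (rolling z-score)."""
    spikes = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu = mean(history)
        sigma = pstdev(history)
        if sigma == 0:
            # Perfectly flat history: flag any deviation at all.
            if samples[i] != mu:
                spikes.append(i)
            continue
        if abs(samples[i] - mu) / sigma > threshold:
            spikes.append(i)
    return spikes


# Illustrative latency readings in milliseconds; index 6 is the spike.
latency_ms = [120, 118, 122, 119, 121, 120, 480, 123]
print(detect_spikes(latency_ms))  # [6]
```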

2. Triage and prioritization

Goal: Decide what to fix first—and fast.

Once an alert is triggered, the team assesses its severity. Is it impacting users? Is it isolated or spreading? The goal is to filter signals from noise and focus on what matters most.
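The two triage questions above can be turned into a sort key. This minimal sketch assumes a hypothetical `Alert` record with `severity`, `users_affected`, and `spreading` fields and a made-up severity scale; real tools weigh far more signals.

```python
from dataclasses import dataclass

# Hypothetical severity scale; real tools define their own.
SEVERITY_WEIGHT = {"critical": 3, "major": 2, "minor": 1}


@dataclass
class Alert:
    name: str
    severity: str
    users_affected: int
    spreading: bool


def priority(alert: Alert) -> tuple:
    # Order by: is it impacting users? is it spreading? then severity.
    return (alert.users_affected > 0, alert.spreading, SEVERITY_WEIGHT[alert.severity])


def triage(alerts: list[Alert]) -> list[Alert]:
    """Return alerts sorted from most to least urgent."""
    return sorted(alerts, key=priority, reverse=True)


alerts = [
    Alert("disk-warning", "minor", 0, False),
    Alert("checkout-5xx", "critical", 1200, True),
    Alert("batch-job-slow", "major", 0, True),
]
print([a.name for a in triage(alerts)])
# ['checkout-5xx', 'batch-job-slow', 'disk-warning']
```

Putting user impact first in the tuple means a minor alert that touches users still outranks a critical one that does not; a different ordering is equally defensible.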

3. Investigation and diagnosis

Goal: Find out what’s actually broken and why.

Next, the team works to understand the root cause. That usually means digging into logs, checking system dependencies, and comparing changes or configurations across environments.

4. Containment and resolution

Goal: Stop the bleeding and restore service.

With the cause identified, the team takes action. This could mean restarting services, rolling back code, fixing a configuration, or applying a patch—whatever it takes to get systems back to normal. “Bleeding” here isn’t just metaphorical; it can mean real-world disruptions like delayed patient care, halted payment processing, or critical workflows grinding to a halt. The priority is to minimize impact and restore normalcy as fast as possible.

5. Communication and coordination

Goal: Keep everyone aligned and in the loop.

Throughout the process, teams need to keep stakeholders informed, whether that’s internal leadership, affected users, or customer support teams. Clear, timely updates help manage expectations and reduce chaos.

6. Post-incident review

Goal: Turn incidents into insights.

After resolution, there’s a chance to step back and learn. What caused the issue? How fast did we respond? What can we improve for next time? This stage is where teams build muscle memory and reduce repeat problems.

Modern IT teams are also automating many of these steps—especially triage, diagnosis, and even early-stage resolution—with solutions that bring intelligence into the response flow. (More on that next.)

Use Edwin AI for incident response

That shift toward intelligent automation is where Edwin AI fits in.

Built specifically for IT operations, Edwin is the AI agent for ITOps. Behind that single interface is a system of specialized agents working together in real time. Each one is designed for a specific task (triage, correlation, root cause analysis, resolution), and they operate as a coordinated team, not a monolith.

To your team, Edwin feels like one expert. Under the hood, it’s many agents working in sync to analyze data, surface insights, and take action. Together, they take on the most manual, time-consuming parts of incident response and automate them with speed and context.

Instead of flooding teams with disconnected alerts, Edwin AI connects the dots. It ingests data across your stack—logs, metrics, config data, tickets, change events, etc.—and analyzes that info in real time to surface the problems that matter most, along with what’s likely causing them and what to do next.

Edwin AI is about improving consistency, reducing escalation, and helping teams respond to incidents with more confidence and less guesswork. In environments where manual IT incident response is no longer sustainable, Edwin AI helps teams move faster, with fewer mistakes—and fewer surprises.

What sets Edwin AI apart from traditional AIOps products

Edwin AI doesn’t just detect that “something’s wrong”—it tells you what’s wrong, why it’s happening, whether it’s happened before, and what to do about it. All in near real-time, without waiting for a human to parse logs or search past tickets.

| Capability | Edwin AI | Traditional AIOps |
|---|---|---|
| Generative AI summaries | ✅ Built-in | ❌ Limited or unavailable |
| Hybrid dataset correlation | ✅ Operational + contextual | ⚠ Often siloed |
| Transparent, explainable AI | ✅ Open, configurable | ❌ Often black-box |
| Fast time to value | ✅ Live in days | ⚠ Months or longer |
| Built-in integrations | ✅ 3,000+ with full-stack visibility | ⚠ Requires custom work |

Edwin AI doesn’t replace your team—it amplifies it. It cuts through noise, delivers insights in context, and routes incidents to the right teams automatically. Whether you’re starting with Event Intelligence or implementing the full AI Agent, Edwin AI helps your team shift from reactive triage to strategic ops.

How Edwin AI works

Edwin AI is designed to mirror—and improve—every phase of the incident response lifecycle. Where traditional workflows rely on human effort and coordination, Edwin AI brings speed, consistency, and automation to each step.

1. Detection and alerting → Observe

Edwin AI starts with observability, ingesting alerts, metrics, logs, and events across your hybrid environment. It consolidates these signals from multiple sources, so you don’t miss early warning signs—or waste time chasing noise.

2. Triage and prioritization → Correlate

Instead of treating each alert in isolation, Edwin AI correlates related events using time-series analysis, dependency mapping, and system context. This approach narrows down the scope and identifies high-impact issues automatically.
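To make correlation by time and dependency more concrete, here is a deliberately simple stand-in: a greedy grouping that merges events that occur close together in time on hosts that directly depend on each other. The `DEPENDS_ON` map, the 120-second window, and the greedy strategy are all assumptions for illustration; this is not Edwin AI’s correlation algorithm.

```python
from dataclasses import dataclass


@dataclass
class Event:
    ts: int    # seconds since some reference point
    host: str


# Hypothetical dependency map: host -> hosts it directly depends on.
DEPENDS_ON = {
    "web-1": {"app-1"},
    "app-1": {"db-1"},
    "db-1": set(),
    "batch-7": set(),
}


def related(a: str, b: str) -> bool:
    """Two hosts are related if they are the same or one directly depends on the other."""
    return a == b or b in DEPENDS_ON.get(a, set()) or a in DEPENDS_ON.get(b, set())


def correlate(events: list[Event], window: int = 120) -> list[list[Event]]:
    """Greedily group events in time order: an event joins the first group whose
    latest event is at most `window` seconds older and which contains a related host."""
    groups: list[list[Event]] = []
    for ev in sorted(events, key=lambda e: e.ts):
        for group in groups:
            if ev.ts - group[-1].ts <= window and any(related(ev.host, g.host) for g in group):
                group.append(ev)
                break
        else:
            groups.append([ev])  # nothing matched: start a new group
    return groups


events = [Event(0, "db-1"), Event(30, "app-1"), Event(45, "web-1"),
          Event(50, "batch-7"), Event(1000, "db-1")]
for group in correlate(events):
    print([e.host for e in group])
# ['db-1', 'app-1', 'web-1']
# ['batch-7']
# ['db-1']
```

Five raw events collapse into three groups: the database, app, and web alerts become one incident, while the unrelated batch host and the much later database alert stay separate.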

3. Investigation and diagnosis → Reason

Edwin AI analyzes the incident in context—drawing on historical patterns, recent changes, asset metadata, and known fixes. It identifies likely root causes and explains its reasoning, giving teams the clarity they need to act with confidence.

4. Containment and resolution → Act (or recommend)

Edwin AI can auto-populate tickets with root cause summaries, attach supporting evidence, and route issues to the right team. In environments with pre-defined playbooks, it can even recommend or execute remediation steps.
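A ticket that arrives pre-populated and pre-routed might be assembled roughly like this. The `OWNERS` map, the field names, and the fallback team `noc` are hypothetical; an actual ITSM integration would use its own schema.

```python
# Hypothetical ownership map: service -> team that owns it.
OWNERS = {"payments-api": "payments-sre", "postgres-main": "dba-team"}


def build_ticket(incident: dict, default_team: str = "noc") -> dict:
    """Assemble an illustrative ticket with a root cause summary, supporting
    evidence, and an assignment based on the service believed to be at fault."""
    return {
        "title": f"[{incident['severity'].upper()}] {incident['title']}",
        "root_cause_summary": incident["root_cause"],
        "evidence": list(incident.get("evidence", [])),
        "assigned_team": OWNERS.get(incident["root_cause_service"], default_team),
    }


ticket = build_ticket({
    "title": "Checkout latency above SLO",
    "severity": "major",
    "root_cause": "Connection pool exhausted on postgres-main",
    "root_cause_service": "postgres-main",
    "evidence": ["db connections at max", "p99 query time elevated"],
})
print(ticket["assigned_team"])  # dba-team
```

Routing on the suspected root-cause service, rather than on whichever host alerted first, is what sends this ticket to the database team instead of the checkout team.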

5. Communication and coordination → Summarize

Using generative AI, Edwin AI produces clear, human-readable summaries of the incident: what happened, what caused it, and what should happen next. This context travels with the ticket, keeping everyone, from on-call engineers to execs, informed.

6. Post-incident review → Continuous learning

Every time Edwin AI observes, correlates, or resolves an issue, it gets smarter. It builds a knowledge graph of incident fingerprints, asset behaviors, and successful resolutions—enabling it to improve its recommendations over time.
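One way to picture incident fingerprints tied to successful resolutions is sketched below. Volatile details such as numbers are normalized away so that recurring incidents share a key, and each key remembers which fixes have worked. The `fingerprint` function and `ResolutionMemory` class are hypothetical, and a plain dictionary stands in for what the text describes as a knowledge graph.

```python
import re
from collections import Counter, defaultdict


def fingerprint(service: str, alert_type: str, message: str) -> tuple:
    """Normalize volatile details (numbers such as IDs, percentages, host indexes)
    so that recurrences of the same problem share one key."""
    return (service, alert_type, re.sub(r"\d+", "<n>", message.lower()))


class ResolutionMemory:
    """Toy stand-in for a knowledge graph: fingerprint -> how often each fix worked."""

    def __init__(self) -> None:
        self._fixes: defaultdict = defaultdict(Counter)

    def record(self, fp: tuple, fix: str, succeeded: bool) -> None:
        if succeeded:  # only successful resolutions are worth recommending
            self._fixes[fp][fix] += 1

    def recommend(self, fp: tuple):
        """Return the fix that resolved this fingerprint most often, or None."""
        fixes = self._fixes.get(fp)
        return fixes.most_common(1)[0][0] if fixes else None


memory = ResolutionMemory()
past = fingerprint("checkout", "disk_full", "Volume sda1 at 97% on node 12")
memory.record(past, "rotate logs", True)
memory.record(past, "rotate logs", True)
memory.record(past, "expand volume", True)
memory.record(past, "restart service", False)

new = fingerprint("checkout", "disk_full", "Volume sda1 at 99% on node 40")
print(new == past, memory.recommend(new))  # True rotate logs
```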

Edwin AI doesn’t force you to rethink your entire workflow. It builds on what already works, removes what slows you down, and makes every phase faster, clearer, and more consistent.

Where agentic AIOps wins

Traditional tools were built to notify you when something breaks. Agentic AIOps is built to help you fix it—faster, smarter, and with less guesswork.

After walking through how Edwin AI mirrors and enhances each phase of the incident response lifecycle, it’s worth zooming in on where those improvements have the biggest impact. These are the moments where automation is a force multiplier.

1. Get to the “why” faster

Manual triage and inconsistent root cause analysis slow everything down. Engineers waste hours stitching together logs and metrics, only to escalate what they can’t fully explain.

What Edwin AI does:

- Clusters noisy alerts into meaningful event groups.

- Maps dependencies and timelines to understand causal flow.

- Highlights the most likely root cause with supporting evidence.

Why it matters:

- Reduces investigation time significantly.

- Empowers junior team members to handle complex incidents.

- Improves signal-to-noise ratio across sprawling environments.
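Mapping dependencies to find causal flow can be illustrated with a common heuristic: among the alerting components, the likeliest root causes are the ones whose own dependencies are not alerting. The sketch below assumes a hypothetical `depends_on` map and checks direct dependencies only. It illustrates the reasoning and is not Edwin AI’s root cause model.

```python
def root_cause_candidates(alerting: set, depends_on: dict) -> list:
    """Return alerting components whose direct dependencies are not alerting:
    the most upstream failures, which likely explain alerts further downstream."""
    return sorted(
        node for node in alerting
        if not (depends_on.get(node, set()) & alerting)
    )


# Hypothetical service map: component -> components it depends on.
deps = {"web": {"api"}, "api": {"db", "cache"}, "db": set(), "cache": set()}

print(root_cause_candidates({"web", "api", "db"}, deps))  # ['db']
print(root_cause_candidates({"web", "cache"}, deps))      # ['cache', 'web']
```

The second call shows the limit of checking only direct dependencies. `web` is also flagged because its direct dependency `api` is quiet, even though `cache` sits upstream of it. Walking the full dependency chain and weighing timing and recent changes would narrow this further.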

“Edwin AI started correlating and delivering value within an hour, even before we put it into production.” — Kris Manning, Global Head of IT Networks, Syngenta

See how Syngenta used Edwin AI to correlate alerts in real time.

2. Turn repetitive incidents into fast fixes

Too many teams treat recurring incidents like new problems. Fixes live in tribal knowledge, and past context is rarely reused efficiently.

What Edwin AI does:

- Learns from past incidents and their resolutions.

- Matches new issues to historical patterns.

- Recommends validated fixes with context attached.

Why it matters:

- Speeds up resolution by applying known solutions.

- Delivers more consistent responses, regardless of who’s on call.

- Converts one-off knowledge into institutional memory.

“We were seeing more than 1,000 alerts a day—30,000 a month. That’s too much for any team to manage manually. Edwin AI helps us focus on what actually matters.” — Shawn Landreth, VP of Networking and Reliability Engineering, Capital Group

Learn from Capital Group’s Shawn Landreth how AI-driven insights can transform your IT operations.

3. Proactively detect systemic risk

Recurring alerts often point to deeper systemic problems, but without time to step back, teams miss the big picture until it’s too late.

What Edwin AI does:

- Analyzes long-term patterns and event timelines

- Flags recurring issues by service group, asset class, or dependency layer

- Correlates problems with changes, deployments, and config drift

Why it matters:

- Helps identify root-level infrastructure or design flaws

- Reduces repeated incidents and unplanned downtime

- Enables teams to shift from reactive triage to proactive reliability work
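Flagging recurring issues by service group and lining them up with recent changes can be approximated with two small helpers. The incident and change record shapes, the `min_count` threshold, the one-hour lookback, and the `CHG-…` IDs are assumptions chosen for illustration.

```python
from collections import Counter


def recurring_groups(incidents: list[dict], min_count: int = 3) -> dict:
    """Count incidents per service group and keep groups at or above `min_count`."""
    counts = Counter(inc["group"] for inc in incidents)
    return {group: n for group, n in counts.items() if n >= min_count}


def changes_preceding(incident: dict, changes: list[dict], lookback: int = 3600) -> list[str]:
    """IDs of changes to the same group made up to `lookback` seconds before the incident."""
    return [
        c["id"] for c in changes
        if c["group"] == incident["group"] and 0 <= incident["ts"] - c["ts"] <= lookback
    ]


incidents = [
    {"group": "storage", "ts": 5_000},
    {"group": "storage", "ts": 9_000},
    {"group": "storage", "ts": 20_000},
    {"group": "network", "ts": 7_000},
]
changes = [{"id": "CHG-1", "group": "storage", "ts": 4_000},
           {"id": "CHG-2", "group": "network", "ts": 100}]

print(recurring_groups(incidents))                # {'storage': 3}
print(changes_preceding(incidents[0], changes))   # ['CHG-1']
print(changes_preceding(incidents[3], changes))   # []
```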

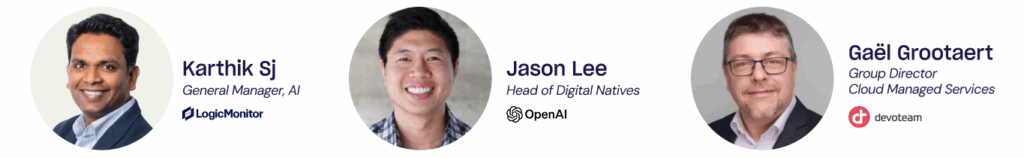

“We’re firefighters sometimes… AI helps us mitigate everything that has an impact on the customer side.” — Gaël Grootaert, Group Director, Devoteam Managed Services

Rethinking incident response starts here

Incident response hasn’t kept up with the systems it supports.

Most teams are still dealing with alert storms, manual triage, and inconsistent resolution paths. Even with good people and solid processes, the old way just can’t scale.

What we’ve seen from teams using Edwin AI—across industries, team sizes, and use cases—is this: When incident response is handled by agents that understand context, history, and impact, the work gets faster. More consistent. Less reactive. And a whole lot less exhausting.

If you’re still stitching together dashboards and parsing logs by hand, it might be time to rethink how your team operates. Not by starting over—but by upgrading what’s already there.

You don’t need to solve everything all at once. But you can start solving the stuff that slows you down most.

Edwin AI is one way to do that. And it’s working—for real teams, right now.

Sometimes the biggest transformations begin with what sounds like the worst possible news. One day, this Fortune 500 technology company’s observability platform was running smoothly. The next, they learned their critical monitoring solution would be discontinued as part of a corporate buyout.

For a leading global IT vendor in data infrastructure serving customers across storage, cloud, and managed services, this was a potential catastrophe. Two of their most critical ITOps teams suddenly faced the prospect of operating blind, with no visibility into the systems that kept customer environments running.

The clock was ticking. They had weeks to find a replacement solution and deploy it before their existing platform went dark.

Within three weeks, they had deployed LogicMonitor Envision and Edwin AI. Within 72 hours of activation, they had eliminated 93% of their incident volume—turning what could have been an operational disaster into a dramatic improvement over their previous state.

“Too Many Alerts, Not Enough Signal”

Before the crisis forced their hand, this tech giant was already drowning in operational noise. Their engineering teams were trapped in a vicious cycle that will sound familiar to anyone managing complex IT environments at scale. They were generating thousands of incidents a day, and engineers spent their mornings sifting through a digital avalanche of duplicates, false positives, and context-free notifications.

Fragmentation was killing their effectiveness. Internal systems generated one stream of alerts, customer-facing environments produced another, and the complete lack of correlation meant engineers were constantly switching contexts as they tried to piece together the bigger picture. A single underlying issue might trigger dozens of separate alerts across different systems, each treated as an independent incident requiring individual investigation.

“We were overwhelmed,” admitted one senior team member. “Too many alerts, not enough signal. We needed help focusing on what actually mattered.”

The distinction between monitoring and observability had never been more critical. Their existing setup could tell them when something crossed a threshold, but it couldn’t explain why systems were failing or how incidents connected across their hybrid infrastructure. When problems cascaded through their environment, engineers burned precious time assembling clues from disparate sources while customer-facing services remained compromised.

Even worse, this noise was actively undermining their ability to deliver on their commitments. SLAs were slipping as real problems got buried under false alarms. Service continuity suffered when critical issues went unnoticed in the flood of notifications. Customer experience degraded as engineers remained trapped in reactive mode.

Ironically, in an age of unprecedented visibility into system performance, they were essentially operating blind.

Why LogicMonitor + Edwin AI

With their existing platform’s expiration date looming and operational chaos mounting, the technology leader faced an impossible timeline. They needed to evaluate, select, and deploy a replacement solution in weeks—all while maintaining service levels for critical customer environments.

The evaluation process revealed a harsh reality. Most enterprise monitoring solutions required lengthy implementations that could stretch for quarters. Complex integrations, extensive customization, and drawn-out deployment cycles were luxuries they simply couldn’t afford. They needed something that could deliver immediate value without the traditional overhead of enterprise software rollouts.

LogicMonitor Envision and Edwin AI emerged as the clear choice, but not just because of their technical capabilities. The combination offered something their crisis demanded: speed without compromise. LogicMonitor’s hybrid observability platform could provide the comprehensive visibility they needed across their complex infrastructure, while Edwin AI promised to solve their most pressing problem—the overwhelming noise that was paralyzing their operations.

What sealed the decision was Edwin AI’s precision approach to incident response. Unlike traditional rule-based systems that simply filtered alerts, Edwin AI could actually understand the relationships between incidents, automatically correlate related events, and accelerate root cause analysis.

The scalability factor proved equally crucial. Their organization needed to support two distinct but interconnected teams: internal engineering focused on infrastructure operations and managed services responsible for customer-facing environments. Most solutions would have required separate deployments with limited coordination between them. LogicMonitor and Edwin AI offered the flexibility to create synchronized deployments that could operate independently while sharing intelligence and insights.

Within days of their initial evaluation, it became clear that this combination could help them regain control of their operations while actually improving their capabilities beyond what they’d lost in the acquisition.

The 72-Hour Transformation

What happened after deployment defied even their most optimistic expectations. Within 72 hours of Edwin AI going live, the same teams that had been drowning were witnessing a complete transformation of their incident management reality.

The numbers were staggering. Using external resolution signals and intelligent correlation, Edwin AI automatically cut the volume of incidents flowing into ServiceNow by 93%. The digital avalanche that had consumed their days vanished, replaced by a manageable stream of genuinely critical issues that actually required human intervention.
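The two mechanisms credited here, external resolution signals and correlation, can be sketched as a filter in front of the ticketing system. It drops alerts that the source has already reported as recovered, then collapses duplicates that share a correlation key. The record fields (`id`, `correlation_key`) and the function name are hypothetical, and the sketch does not reproduce how Edwin AI decides which incidents to suppress.

```python
def incidents_to_open(alerts: list[dict], resolved_ids: set) -> list[dict]:
    """Filter alerts before ticketing: skip ones the source already reported as
    recovered, then keep only the first alert for each correlation key."""
    seen_keys = set()
    to_open = []
    for alert in alerts:
        if alert["id"] in resolved_ids:
            continue  # external resolution signal: recovered, no ticket needed
        if alert["correlation_key"] in seen_keys:
            continue  # duplicate of an incident already being opened
        seen_keys.add(alert["correlation_key"])
        to_open.append(alert)
    return to_open


alerts = [
    {"id": "a1", "correlation_key": "db-latency"},
    {"id": "a2", "correlation_key": "db-latency"},
    {"id": "a3", "correlation_key": "db-latency"},
    {"id": "a4", "correlation_key": "vpn-down"},
    {"id": "a5", "correlation_key": "disk-full"},
]
opened = incidents_to_open(alerts, resolved_ids={"a1", "a5"})
print([a["id"] for a in opened])  # ['a2', 'a4']
print(f"{1 - len(opened) / len(alerts):.0%} fewer tickets")  # 60% fewer tickets
```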

But the real breakthrough was in speed and precision. Edwin AI cleared 1,300 false positives in under 10 minutes, eliminating hours of investigative work that would have pulled engineers away from real problems. Another 1,650 incidents were resolved within a single hour, turning what used to be day-long troubleshooting marathons into quick, focused responses.

The transformation extended far beyond the numbers. Engineers who had started each day bracing for an overwhelming barrage of notifications were suddenly able to focus on strategic work. SLA adherence improved dramatically as real issues were no longer buried under false alarms. Customer experience benefited as problems were identified and resolved before they could cascade into service disruptions.

“We’ve seen a dramatic reduction in alert noise and faster resolution times,” said one engineering lead. “Edwin AI helped our teams stay focused, maintain SLAs, and deliver a better experience to both internal and external users.”

Perhaps most remarkably, this wasn’t the result of months of fine-tuning and optimization. This was day-one performance from an AI system that understood their environment well enough to immediately distinguish noise from signal, solving in hours a problem that had built up over years under their previous approach.

Enterprise Deployment at Startup Speed

For the managed services team responsible for maintaining customer-facing environments, the deployment timeline was ambitious. Any disruption to their monitoring capabilities could cascade into service outages affecting dozens of enterprise customers. They needed a migration that was both lightning-fast and completely seamless.

Traditional enterprise software deployments are measured in quarters. Complex integrations, extensive testing phases, and gradual rollouts are standard practice for a reason: they minimize risk. But this team didn’t have the luxury of a cautious approach. Their existing platform’s shutdown date was fixed, and customers couldn’t experience even momentary gaps in service monitoring.

What followed was a masterclass in streamlined implementation. LogicMonitor and Edwin AI were deployed, configured, and activated in just three weeks—a timeline that would be aggressive for a greenfield deployment, let alone a critical system migration under pressure. The deployment required minimal configuration overhead, with Edwin AI’s intelligent capabilities adapting to their environment rather than demanding extensive customization.

Most importantly, the transition happened invisibly to their customers. Service monitoring continued uninterrupted throughout the migration, with no gaps in coverage or temporary blind spots that could have put customer environments at risk. The managed services team maintained their SLA commitments while simultaneously overhauling their entire observability infrastructure.

“We didn’t have time for a long rollout,” explained one team lead. “LogicMonitor and Edwin AI were up and running fast with zero disruption to our customers.”

The speed of deployment proved that modern AI-driven solutions could deliver enterprise-grade reliability without enterprise-typical implementation overhead. In an era where business moves faster than traditional IT deployment cycles, this kind of rapid, low-risk implementation capability represents a fundamental shift in how organizations can adopt new technology.

Beyond the Quick Wins: Building a Predictive Operations Engine

The dramatic success of their initial deployment represented a fundamental shift in how this company approaches IT operations. Having tasted the power of AI-driven incident management, they’re now positioning themselves at the forefront of a broader transformation in enterprise ITOps.

The immediate wins were impressive, but the real opportunity lies in what comes next. With Edwin AI proving its value in their most critical environments, the organization is now expanding their AI strategy across multiple dimensions. The reactive approach that had defined their operations for years is giving way to something far more sophisticated: truly predictive operations.

Their roadmap reveals the full potential of AI-powered ITOps. Generative and agentic capabilities are being activated to provide auto-summarization of complex incidents, accelerated root cause analysis that can trace problems across interconnected systems, and predictive insights that identify potential issues before they manifest as outages. These are improvements that represent a complete reimagining of how IT operations can function.

The expansion is equally ambitious. Having proven the concept with two critical teams, they’re now scaling agentic AI across additional internal and customer-facing environments. Each new deployment builds on the intelligence gathered from previous implementations, creating a network effect where the AI becomes more effective as it monitors more systems and learns from more incidents.

But perhaps the most transformative element is their shift from reactive to preventive operations. Using pattern recognition and historical data analysis, Edwin AI is helping them anticipate disruptions before they impact operations. Instead of waiting for alerts to fire, they’re identifying the conditions that typically precede problems and taking action while issues are still manageable.

“Our goal is to anticipate challenges before they happen,” explained one senior leader. “Edwin AI is a key partner in making that vision a reality.”

This evolution from crisis management to strategic advantage illustrates a broader truth about AI in enterprise operations: the organizations that will thrive aren’t just those that deploy AI to solve today’s problems, but those that leverage it to prevent tomorrow’s challenges entirely.

The New Reality of Enterprise IT Operations

We’ve reached an inflection point where AI has moved from experimental technology to operational necessity. This company’s story is a preview of how modern ITOps will function in an increasingly complex digital landscape.

And the broader implications are impossible to ignore. Enterprise IT leaders everywhere are grappling with the same fundamental challenges that nearly overwhelmed this organization: hybrid environments that span multiple clouds and on-premises systems, shrinking IT budgets that demand more efficiency from smaller teams, and user expectations that continue to expand while tolerance for downtime approaches zero.

Traditional approaches to IT operations simply can’t scale to meet these demands. The manual processes, reactive workflows, and human-intensive troubleshooting that defined IT operations for decades are breaking down under the weight of modern complexity. This company’s pre-AI state represents the inevitable outcome of trying to manage 21st-century infrastructure with 20th-century methods.

Edwin AI and similar intelligent automation platforms represent a fundamental evolution in how IT operations function. Engineers freed from the constant noise of false alarms can focus on strategic initiatives, complex problem-solving, and the kind of high-value work that actually moves organizations forward. The result is operations teams that can do more with less while maintaining higher service levels than ever before.

Twelve months ago, we shipped Edwin AI with a specific hypothesis: AI agents could handle the operational drudgery slowing down ITOps teams.

It was a deliberate bet against the cautious consensus that AI should act only as a copilot, limited to offering suggestions. Most AIOps tools still follow that script. They’re stuck surfacing insights and stop short of action. Edwin was built differently. It was designed to make decisions, correlate events, and execute fixes.

A year later, we know our bet paid off.

Edwin is now running in production across global retailers, financial institutions, managed service providers, and more. The results validate something important about how AI can change ITOps work by eliminating the noise that buries it.

Here’s what Edwin AI accomplished in its first year.

How Teams Transformed with Edwin AI

Edwin’s first year delivered results across remarkably diverse environments, each presenting unique operational challenges.

Chemist Warehouse operates more than 600 retail locations around the globe with complex multi-datacenter infrastructure. With Edwin AI Event Intelligence, their ITOps team achieved an 88% reduction in alert noise while maintaining full visibility into critical systems. Engineers shifted from constant, reactive firefighting to strategic infrastructure improvements.

The Capital Group, one of the world’s largest investment management firms, processes over 30,000 alerts monthly across regulated financial systems. Edwin enabled their teams to move from volume-based triage to impact-based operations, focusing resources on business-critical issues while handling routine incidents automatically.

Nexon, managing multi-tenant infrastructure for clients across ANZ, saw a 91% reduction in alert noise and 67% fewer ServiceNow incidents. Edwin’s ability to maintain context across client boundaries while acting autonomously improved SLA performance across their entire client base.

A global retailer supported by Devoteam went from managing 3,000+ incidents monthly to fewer than 400, with correlation models delivering accurate results within the first hour of deployment.
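Because “3,000+” is a floor and “fewer than 400” is a ceiling, these two figures imply a drop of at least roughly 87% in monthly incident volume. The arithmetic:

```python
def reduction_pct(before: float, after: float) -> float:
    """Percentage drop from `before` to `after`."""
    return (before - after) / before * 100


# 3,000+ incidents a month before, fewer than 400 after (figures from the text).
print(f"{reduction_pct(3000, 400):.1f}%")  # 86.7%
```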

Across all deployments, Edwin delivered consistent operational improvements:

- Up to 91% alert noise reduction

- 30–60% faster resolution times

- 67% fewer ITSM incidents

- 20% increase in engineering productivity

- 342% ROI over three years

The Architecture That Makes Edwin AI Work

Edwin’s impact was driven by key technical advances that pushed the boundaries of what’s possible in agentic AIOps. Our modular architecture matured rapidly, enabling specialized AI agents to handle correlation, root cause analysis, and remediation—each operating with shared context via a unified infrastructure knowledge graph.

This foundation allowed agents to reason in context, collaborate across workflows, and take targeted action.

Key technical milestones included:

- Agent orchestration: Edwin is now able to chain actions across multiple agents—correlating events, analyzing root causes, and executing remediation—without human handoffs between steps.

- Inference speed: Response times under high-load scenarios dropped significantly, making Edwin viable for frontline operations teams dealing with active incidents.

- Expanded integration: Support grew to over 3,000 tools across observability, ITSM, and CMDB systems, with particularly strong advances in hybrid cloud and modern observability stack integration.

- Enhanced root cause analysis: Integration with change management systems, security tools, and historical incident response data improved accuracy and provided clearer explanations of complex failure scenarios.

- Workflow automation: Edwin gained the ability to execute remediation through built-in runbooks and suggest automated responses based on historical patterns and current context.
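Agent orchestration of the kind described above can be pictured as a chain of functions that pass one shared context object along, so no human handoff is needed between steps. The three agents here are hypothetical placeholders with trivial logic. They show the shape of the handoff, not Edwin AI’s agents or their reasoning.

```python
from typing import Callable

Context = dict
Agent = Callable[[Context], Context]


def correlation_agent(ctx: Context) -> Context:
    # Placeholder: treat all incoming alerts as one incident on the first alert's service.
    ctx["incident"] = {"service": ctx["alerts"][0]["service"], "alerts": ctx["alerts"]}
    return ctx


def rca_agent(ctx: Context) -> Context:
    # Placeholder: blame the most recent change, if any is known.
    changes = ctx.get("recent_changes", [])
    ctx["root_cause"] = changes[-1] if changes else None
    return ctx


def remediation_agent(ctx: Context) -> Context:
    # Act only when a root cause was found; otherwise hand off to a human.
    cause = ctx["root_cause"]
    ctx["action"] = f"roll back {cause}" if cause else "escalate to on-call"
    return ctx


def run_chain(agents: list[Agent], ctx: Context) -> Context:
    """Pass one shared context through each agent in order."""
    for agent in agents:
        ctx = agent(ctx)
    return ctx


pipeline = [correlation_agent, rca_agent, remediation_agent]
result = run_chain(pipeline, {"alerts": [{"service": "checkout"}],
                              "recent_changes": ["deploy v41", "deploy v42"]})
print(result["action"])  # roll back deploy v42
```

Because each agent reads what earlier agents wrote to the shared context, a step can be swapped or added without changing the others. The fallback to "escalate to on-call" keeps a human in the loop when the chain cannot reach a conclusion.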

Most significantly, Edwin proved it could deliver value immediately and across a wide range of use cases. Many teams saw working correlation models within hours of deployment, with full operational benefits appearing within the first week.

Expanding Through Strategic Partnerships

Edwin’s first year included strategic partnerships that expanded its operational reach. LogicMonitor’s collaboration with OpenAI brought purpose-built generative AI capabilities directly into the agent framework, enabling clear explanations of complex infrastructure behavior in natural language.

The partnership with Infosys integrated Edwin with AIOps Insights, extending correlation capabilities across multiple data planes and observability stacks without duplicating monitoring logic.

Deep ServiceNow integration evolved beyond simple ticket sync to enable true multi-agent collaboration between Edwin and Now Assist, allowing both systems to contribute to faster triage and more intelligent incident handling.

Product Evolution Based on Real Usage

Edwin’s development throughout the year was driven by feedback from teams running it in production under pressure. Every deployment, support interaction, and correlated alert contributed to system improvements.

New AI Agent capabilities launched in beta included chart and data visualization agents, public knowledge retrieval agents, and guided runbook generation—all responding to specific operational needs identified by customer teams.

ITSM integration improvements delivered better field-level enrichment, more reliable bidirectional sync, and clearer handoff traceability to downstream systems.

The continuous feedback loop between operators, telemetry, and product development shaped Edwin’s evolution toward practical operational value rather than theoretical capability.

What’s Next: Building on Edwin AI’s Early Success

Year two is about building on what’s working. Our development priorities focus on expanding proven capabilities rather than experimental features.

- Predictive automation will leverage the patterns Edwin has learned from a year of live telemetry to prevent problems before they impact users.

- Domain-specific agents for SecOps and DevOps will extend Edwin’s proven agent architecture into adjacent operational domains.

- Explainability will make Edwin’s root cause analysis and impact assessments even more transparent, supporting better decision-making under pressure.

- Cross-platform orchestration will improve Edwin’s ability to coordinate with existing IT tools and workflows.

The roadmap follows the natural adoption curve many teams experienced in year one: starting with alert correlation and noise reduction, adding root cause analysis and automated workflows, then expanding into predictive operations.

A Year In, Proven in the Field

A year ago, we hypothesized that AI agents could handle operational complexity. The evidence is now clear: they can, and teams that deploy them gain a significant competitive advantage.

Edwin’s success across diverse environments validates a broader principle about AI in operations. The technology works best when it operates autonomously.

The teams running Edwin today are solving different problems than they were a year ago. They’ve moved beyond alert fatigue into predictive operations, automated remediation, and strategic infrastructure planning.

The technology works. The results are measurable. The transformation is real.


Today’s IT environments span legacy infrastructure, multiple cloud platforms, and edge systems, each producing fragmented data, inconsistent signals, and hidden points of failure. This scale brings opportunity, but also operational strain: siloed visibility, overwhelming alert noise, and slower time to resolution.

With good reason, public and private sector organizations alike are moving beyond basic visibility, demanding hybrid observability that’s context-aware and action-oriented.

To meet this need, LogicMonitor and Infosys have partnered to integrate Edwin AI with Infosys AIOps Insights, part of the Infosys Cobalt suite. The collaboration combines LogicMonitor’s AI-driven hybrid observability platform with Infosys’ strength in automation and enterprise transformation.

“The scale and complexity of today’s enterprise IT environments demand a unified, AI-powered observability platform that can intelligently monitor, analyze, and automate across hybrid infrastructures,” said Michael Tarbet, Global Vice President, MSP & Channel, LogicMonitor. “Our collaboration with Infosys reflects our shared goal of equipping organizations with the tools they need to anticipate problems, reduce downtime, and keep mission-critical systems performing at their peak.”


The Challenge of Complex IT Environments

Enterprises today are operating in environments where no single system tells the whole story.

Critical infrastructure spans data centers, public and private clouds, and edge devices—all generating massive volumes of telemetry. But without a unified lens, teams are left stitching together siloed data, reacting to symptoms instead of solving root issues.

Legacy monitoring tools weren’t built for this level of complexity. What’s needed is an observability platform that both spans these hybrid environments and applies AI to interpret signals in real time, surface what matters, and drive faster, smarter decisions.

The LogicMonitor-Infosys Collaboration: Bringing Together AI-Powered Strengths

This collaboration integrates Edwin AI—the AI agent at the core of LogicMonitor’s hybrid observability platform—directly into Infosys AIOps Insights, part of the Infosys Cobalt cloud ecosystem.

As an agentic AIOps product, Edwin AI processes vast streams of observability data to detect patterns, identify anomalies, and surface root causes with context. And when paired with Infosys’ AIOps capabilities, the result is a smarter, more adaptive layer of IT operations—one that helps teams respond faster, reduce manual effort, and shift from reactive to proactive management.

The power of this partnership lies in the combination of domain depth and technological breadth. LogicMonitor brings its AI-powered, SaaS-based observability platform trusted by enterprises worldwide; Infosys brings deep expertise in enterprise transformation, AI-first consulting, and operational frameworks proven across industries. Together, the two are using IT complexity as a springboard for smarter decisions, faster innovation, and more resilient digital operations.

Unlocking Key Outcomes and Benefits

By integrating Edwin AI with Infosys AIOps Insights, enterprises gain access to a powerful toolset designed to streamline operations and deliver measurable results. Customers can reduce problem diagnosis and resolution time by up to 30%, allowing IT teams to resolve incidents faster and minimize service disruptions. Redundant alerts—a major source of noise and inefficiency—can be cut by 80% or more, freeing teams to focus on what truly requires attention.

Beyond faster triage, the integration provides a unified view across hybrid environments, enriched with persona-based insights tailored to roles, from infrastructure engineers to CIOs. With improved forecasting and signal correlation, IT teams can anticipate issues before they escalate, and make decisions grounded in data, not guesswork.

This collaboration strengthens operational stability and gives teams the insight they need to make timely, confident decisions in complex environments. In an era where digital experience is a competitive edge, the ability to anticipate, adapt, and act with precision is what sets leading organizations apart.

Real-World Impact: Sally Beauty’s Results

Sally Beauty Holdings offers a clear example of the impact this collaboration can deliver. By leveraging Infosys AIOps Insights, enabled through the LogicMonitor Envision platform, the company increased its proactive issue detection and reduced alert noise by 40%.

This improvement translated directly into minimized downtime and smoother day-to-day operations. The ability to detect and address issues before they escalated meant IT teams could focus on driving value rather than reacting to incidents. Infosys’ commitment to operational excellence played a key role in enabling these outcomes—demonstrating how strategic AI partnerships can drive measurable, business-critical improvements.

LogicMonitor + Infosys: Validated by Leaders, Built for Enterprise Impact

The collaboration between LogicMonitor and Infosys brings together two leaders in enterprise IT—pairing deep AI expertise with operational scale. For Infosys, the decision to integrate Edwin AI into its Cobalt suite reflects a strategic commitment to delivering modern, automation-ready observability to its global customer base. For LogicMonitor, it affirms our position as a trusted partner in AI-powered data center transformation, capable of delivering results in some of the most demanding enterprise environments.

“With our combined expertise in AI and automation, we’re redefining the observability landscape,” said Anant Adya, EVP at Infosys. “This partnership represents a significant step forward in enabling enterprises to adopt AI-first strategies that drive agility, resilience, and innovation.”

As organizations push toward more autonomous, resilient IT ecosystems, the LogicMonitor–Infosys relationship sets a new standard for what observability can—and should—deliver.

Most internal AI projects for IT operations never exit pilot. Budgets stretch, priorities shift, key hires fall through, and what started as a strategic initiative turns into a maintenance burden or, worse, shelfware.

Not because the teams lacked vision. But because building a production-grade AI agent is an open-ended commitment. It’s not just model tuning or pipeline orchestration. It’s everything: architecture, integrations, testing frameworks, feedback loops, governance, compliance. And it never stops.

The teams that do manage to launch often find themselves locked into supporting a brittle, aging system while the market moves forward. Agent capabilities improve weekly. New techniques emerge. Vendors with dedicated AI teams ship faster, learn faster, and compound value over time.

Edwin AI, developed by LogicMonitor, reflects that compounding advantage. It’s live in production, integrated with real workflows, and delivering results for our customers. Built as part of a broader agentic AIOps strategy, it’s engineered to reduce alert noise, accelerate resolution, and handle the grunt work that slows teams down.

What follows is a breakdown of what it actually takes to build an AI agent in-house—what gets overlooked, what it costs, and what’s gained when you deploy a product that’s already proven at scale.


The Complexities and Costs of Building an AI Agent In-House

Building an AI agent sounds like control. In reality, it’s overhead. What starts as a way to customize quickly becomes a full-stack engineering program. You’re taking on a distributed system with fragile dependencies and fast-moving interfaces.

You’ll need infrastructure to run inference at scale, models that stay relevant, connectors that don’t break, testing frameworks to avoid bad decisions, and enough governance to keep it all stable in production.

None of this is one-and-done. AI systems require constant tuning, and they degrade fast: environments shift, data patterns break, and the agent falls out of sync.

Staffing alone breaks the model for most teams. Engineers who’ve built agentic systems at scale are rare and expensive. Hiring them is hard. Retaining them is harder. And once they’re on board, they’ll be tied up supporting internal tooling instead of moving the business forward.

LogicMonitor’s internal data shows that building your own AI agent is roughly three times more expensive than adopting an off-the-shelf product like Edwin AI. The top cost drivers are predictable: high-skill staffing, platform infrastructure, and the integrations needed to stitch the system into your existing environment.

The real cost is your team’s time and focus. Every hour spent maintaining a custom AI agent is an hour not spent improving customer experience, strengthening resilience, or driving innovation. Unless building AI agents is your core business, that effort is misallocated. Time here comes at the expense of higher-impact work.

The Advantage of Buying an Off-the-Shelf AI Agent for ITOps

Buying a mature AI agent allows teams to move faster without taking on the overhead of building and maintaining infrastructure. It removes the need to architect complex systems internally and shifts the focus to applying automation instead of construction.

The cost difference is significant. Most of the build expense comes from compounding investments: engineering headcount, platform maintenance, integration work, retraining cycles, and the ongoing support needed to keep pace with change. Each of these adds operational weight that grows over time.

Off-the-shelf agents are designed to avoid that drag. They’re built by teams focused entirely on performance, tested in diverse environments, and updated continuously based on feedback at scale. That means less risk, shorter time to impact, and lower total cost of ownership.

The Benefits of Agentic AIOps

The power of agentic AIOps lies in the value it delivers across your organization. Products like Edwin AI aren’t just automating workflows—they’re transforming the way IT teams operate, enabling faster resolution, less noise, and a more resilient digital experience.

At the core of agentic AIOps are four high-impact value drivers:

- Improved Operational Efficiency

- Improved Employee Productivity

- Improved Customer Experience

- Reduced License and Training Costs

Start with alert and event noise: reducing it translates directly into avoided IT support costs. With Edwin AI, organizations have reported up to 80% reduction in alert noise, cutting the number of incidents that reach human teams and freeing up capacity for more strategic work.

Generative AI capabilities—like AI-generated summaries and root cause suggestions—not only improve Mean Time To Resolution (MTTR), they also minimize time spent in war rooms. The result? A 60% drop in MTTR, faster incident triage, and fewer late-night escalations.
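MTTR is the average of resolution time minus detection time across incidents, so a percentage drop in MTTR compares two such averages. A minimal Python sketch; the function names and the incident durations in the usage note are hypothetical and illustrative, not customer data.

```python
from datetime import datetime, timedelta


def mttr(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Mean time to resolution: sum of (resolved - detected) over the incident count."""
    if not incidents:
        raise ValueError("MTTR is undefined for zero incidents")
    total = sum((resolved - detected for detected, resolved in incidents), timedelta())
    return total / len(incidents)


def percent_drop(before, after) -> float:
    """Percentage reduction from `before` to `after` (works for numbers or timedeltas)."""
    return 100 * (before - after) / before
```

For example, two incidents taking 4 and 6 hours give an MTTR of 5 hours; two taking 1 and 3 hours give 2 hours, and `percent_drop` reports a 60.0% reduction. These durations were chosen only so the arithmetic mirrors the 60% figure above.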

By catching issues before they escalate, organizations also reduce the frequency and duration of outages, which translates to avoided outage costs and improved service reliability. Fewer incidents mean fewer people involved, fewer systems impacted, and happier end users.

Then there’s license and training optimization. By consolidating capabilities within a single AI observability product, companies are seeing reduced licensing overhead and fewer hours spent training teams across disparate tools.


What Edwin AI Delivers Off-the-Shelf

Edwin AI, developed by LogicMonitor, is one example of what an agentic product looks like in production. It’s deployed across enterprise environments today, already delivering outcomes that internal teams often struggle to reach with homegrown tools.

Edwin delivers:

- Event Intelligence: Features like alert noise reduction, ITSM integration, third-party data ingestion, and support for open, configurable AI models. These are built to surface relevant incidents faster and reduce alert fatigue without sacrificing context.

- AI Agent: Tools like AI-generated titles and summaries, root cause analysis, and Actionable Recommendations. Upcoming additions include predictive insights and agent-driven automation—designed to reduce manual triage and improve decision quality.

Buying an agentic product like Edwin AI removes the engineering burden, and it gives teams a system that scales with them, adapts to their stack, and starts delivering value on day one. No internal build cycles. No integration firefights. Just function.


Don’t Just Take Our Word for It: Customers Are Sharing Their Success with Edwin AI in Production

The benefits of Edwin AI are playing out in real production environments across the globe. Companies in diverse industries are turning to Edwin AI to solve for a number of use cases, including simplifying operations, eliminating noise, and accelerating time to resolution. The results speak for themselves.

- Syngenta saw value within just one hour of implementation, a testament to Edwin AI’s low time-to-value and out-of-the-box intelligence.

- Chemist Warehouse achieved an 88% reduction in alert noise, allowing their teams to focus on real issues instead of chasing false alarms.

- Nexon reduced incidents by 67%, streamlining IT workflows and dramatically improving service reliability.

- Devoteam reported a 58% improvement in operational efficiency, thanks to Edwin AI’s ability to correlate signals across complex environments.

- MARKEL achieved 68% deduplication, cutting through redundant alerts and surfacing what truly matters.

These are consistent indicators of how agentic AIOps, delivered through Edwin AI, transforms IT operations.

So Should You Build or Buy an AI Agent for ITOps?

Building an AI agent for ITOps is a resource-intensive initiative. It requires sustained investment across architecture, infrastructure, staffing, and maintenance—often without a clear timeline to value. Teams that take this path often find themselves maintaining internal systems instead of solving operational problems.

Edwin AI takes that complexity off the table. It’s already in production, already integrated, and already delivering results. Internal analysis shows it’s roughly three times more cost-effective than building from scratch, with real-world returns on investment that include up to 80% alert noise reduction and a 60% drop in mean time to resolution.

These gains are happening now, in live environments, under real pressure.

For organizations focused on reliability, efficiency, and speed, products like Edwin AI remove friction and deliver impact without adding overhead.

Few teams have the time or capacity to support a product this complex. Most don’t need to. So when budgets are tight and expectations are high, shipping value quickly matters more than owning every component.

When you’re running IT for 600+ stores across Australia, New Zealand, Ireland, Dubai, and China, “complexity” doesn’t quite cover it. Every POS terminal, warehouse server, store router, and mobile device adds to the operational noise—and when something breaks, the ripple effect hits fast.

For Chemist Warehouse, reliable IT operations is about meeting community healthcare needs, staying compliant across regions, and keeping essential pharmacy services online 24/7.

In this recent LogicMonitor webinar, Cutting Through the Noise, Jesse Cardinale, Infrastructure Lead at Chemist Warehouse, joined LogicMonitor’s Caerl Murray to walk through how they tackled alert fatigue, streamlined incident response, and shifted their IT team from reactive to proactive—with the help of Edwin AI.

If you’re dealing with high alert volumes, fragmented monitoring tools, or the growing pressure to “do more with less,” this recap is for you.

Meet Chemist Warehouse: Retail at Global Scale

Chemist Warehouse is Australia’s largest pharmacy retailer, but it’s also a global operation. With over 600 stores and a rapidly growing presence in New Zealand, Ireland, Dubai, and China, the company’s IT backbone has to support a 24/7 environment. That includes three major data centers, a cloud footprint spanning multiple providers, and an SD-WAN network connecting edge systems across every store and distribution center.

Jesse Cardinale, who leads infrastructure at Chemist Warehouse, summed it up: “We’re responsible for the back end of everything that keeps the business running 24/7. There’s the technology, of course, but it’s the scale, the people, and the processes [that] can make it a bit of a beast.”

That global scale introduces serious complexity. From legacy systems in remote towns to modern cloud workloads supporting ecommerce, Jesse’s team is constantly balancing uptime, cost control, and compliance across vastly different regions. And unlike a typical retailer, Chemist Warehouse carries an added layer of responsibility: pharmaceutical care.

“Yes, you can walk in and grab vitamins or fragrance,” Jesse said. “But I think the major thing that gets overlooked is that we’re a healthcare provider. We need to be online, operational, and ready to provide pharmaceutical goods to our community.”

In short, IT performance is mission-critical, not a back-office function.

The ITOps Challenge: Noise, Complexity, Compliance

Before partnering with LogicMonitor, Chemist Warehouse faced a familiar, but painful, reality: fragmented monitoring tools, endless alerts, and no clear view of what really mattered.

“We had to rely on multiple platforms to get the visibility we needed. What we ended up with was one tool that was specialized in getting network monitoring, another for servers, another for cloud workloads, and so on,” Jesse explained. “Each of these tools had its own learning curve and required dedicated expertise, which made it hard to manage at scale. And the view into our environment was completely siloed. It was difficult to correlate events across the stack.”

That fragmented setup led to alert fatigue. Every system was generating noise. Teams were overloaded, trying to parse out what was real, what was urgent, and what was just background chatter. Without correlation or context, IT spent more time firefighting than preventing issues.

And with thousands of endpoints—POS systems, mobile devices, local servers, and more—spread across urban and remote locations, even a small issue could ripple across the business. A power outage in a single store could trigger ten separate alerts, resulting in duplicate tickets, delayed resolution, and frustrated teams.

The lack of visibility didn’t stay behind the scenes; it disrupted customer experience in real time: “A delay at checkout. A failed online order. A warehouse bottleneck. All of those can impact someone’s ability to get their medication,” Jesse said. “We can’t afford that.”

Compliance made it even more critical. Different countries meant different regulations, and stores needed to stay online and accessible no matter the circumstances. Whether responding to a storm in Australia or managing pharmaceutical access in Dubai, Chemist Warehouse had to deliver seamless, uninterrupted service. No excuses.

Enter LogicMonitor: One Platform to See It All

To get ahead of the complexity, Chemist Warehouse needed a platform that could centralize their view, eliminate blind spots, and scale with their business. LogicMonitor delivered that foundation.

“We moved to LogicMonitor because it gave us a unified, scalable observability platform,” Jesse said. “Instead of manually defining thresholds or trying to stitch everything together, we could monitor everything and let the AI tell us what actually mattered.”

With LogicMonitor, the Chemist Warehouse infrastructure team consolidated visibility across their global environment—from cloud workloads to edge compute, from distribution centers to retail stores. Integrating with ServiceNow allowed for streamlined incident routing, while dashboards gave teams immediate clarity into system health.

Still, even with this unified observability, the alert volume remained high. Everything was visible, but not everything was actionable.

That’s when the team turned to Edwin AI, LogicMonitor’s AI agent for ITOps.

“It was either spend a lot of man hours trying to further tune the environment, optimize it, work with your teams, or we could get AI to do the work,” Jesse said. “We chose AI.”

Once deployed, the numbers told the story:

- 88% reduction in alert volume

- 22% fewer incidents within the first month

- Faster correlation, smarter prioritization, and clearer root cause analysis

Instead of ten noisy alerts, Edwin AI can deliver a single, correlated incident—saving hours of manual triage and helping the right teams respond faster. A power outage at a store, for example, no longer triggered a flood of device-specific alerts. Edwin identified the root cause and pushed a single, actionable incident to ServiceNow.
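The store power-outage case illustrates what correlation does. The sketch below shows one simple way to do it, assuming each alert carries a site tag: group alerts from the same site that arrive within a short window, then pick the alert type most likely to explain the rest. The `Alert` fields, the five-minute window, and the `CAUSE_PRIORITY` ranking are hypothetical, and this is not how Edwin AI works internally.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Alert:
    site: str      # hypothetical: store identifier taken from resource tags
    device: str
    kind: str      # e.g. "power", "unreachable", "pos_offline"
    at: datetime


# Assumed ranking: lower number = more likely to be the cause of the other alerts.
CAUSE_PRIORITY = {"power": 0, "unreachable": 1}


def correlate(alerts: list[Alert], window: timedelta = timedelta(minutes=5)) -> list[dict]:
    """Collapse same-site alerts arriving within `window` of a group's first alert."""
    incidents: list[dict] = []
    open_by_site: dict[str, dict] = {}
    for alert in sorted(alerts, key=lambda a: a.at):
        inc = open_by_site.get(alert.site)
        if inc is None or alert.at - inc["start"] > window:
            # No open group for this site, or the old one has expired: start a new incident.
            inc = {"site": alert.site, "start": alert.at, "alerts": []}
            incidents.append(inc)
            open_by_site[alert.site] = inc
        inc["alerts"].append(alert)
    for inc in incidents:
        # Unranked alert kinds sort last, so a power alert wins when present.
        inc["probable_cause"] = min(inc["alerts"], key=lambda a: CAUSE_PRIORITY.get(a.kind, 99))
    return incidents
```

With a UPS power alert and nine POS-offline alerts from `store-42` arriving within 90 seconds, `correlate` returns a single incident whose probable cause is the power alert, plus a separate incident for an unrelated router alert at another store.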

“The results were dramatic… An 88% reduction in alert volume after enabling Edwin AI. To us, that’s not just a number; it’s a daily quality-of-life improvement for my team,” said Jesse.

How Edwin AI Transformed ITOps

With fewer distractions and clearer insights provided by Edwin AI, Jesse’s team was able to shift from reactive incident response to proactive service improvement. Instead of chasing false positives and duplicative tickets, engineers could focus on building new solutions, rolling out infrastructure upgrades, and delivering business value.

“Before Edwin, we needed more people spending more time looking at alerts, just to figure out what was real,” said Jesse. “Now, we have more time and more people focused on the future.”

That shift enabled faster innovation and more dynamic support for the business. Changes that used to take weeks could now be delivered quickly and with less risk. Incident resolution times dropped, because each ticket came with context, correlation, and actionable insight.

Edwin AI’s integration with ServiceNow played a key role in that improvement. Edwin AI acted as the front door, correlating and filtering alerts before pushing them into Chemist Warehouse’s ITSM workflows. The setup process was simple, requiring just one collaborative session between LogicMonitor and Chemist Warehouse’s internal teams.

“It wasn’t a tool drop. LogicMonitor really partnered with us,” Jesse noted. “Edwin AI made our workflows smarter.”

Fewer disruptions meant more consistent store operations, better customer experiences, and stronger business continuity across regions. The team was also able to support more infrastructure, at greater scale, without increasing headcount.

“We’re actually monitoring more than ever,” Jesse said. “But thanks to Edwin AI, the incident volume went down.”

Lessons from the Field: Advice from Chemist Warehouse

If there’s one thing Jesse Cardinale emphasized, it’s that observability isn’t something you just “turn on”. It’s a practice that needs attention, iteration, and ownership.

“Don’t treat monitoring like a set-and-forget solution,” he said. “It needs to evolve with your business, just like any other critical platform.”

One of the most impactful decisions Chemist Warehouse made was to dedicate a single engineer to own the monitoring and alerting stack. That person became the internal subject matter expert on LogicMonitor and Edwin AI, working closely with LogicMonitor’s team to fine-tune dashboards, enrich data, and ensure alerts had the right context.

Instead of spending time writing manual thresholds or tuning every alert rule, that engineer focused on feeding Edwin the data it needed to succeed:

- Tagging infrastructure accurately

- Connecting incident resolution notes

- Integrating change data from ServiceNow

This shift, from rules-based monitoring to intelligence-driven signal detection, paid dividends in productivity and clarity.

“The biggest thing that I’ve seen with Edwin AI is that it doesn’t replace our engineers. It complements them. It enables them,” Jesse said. “It gives them the space to think, to solve problems with context and creativity.”

When paired with strong observability practices and intentional data hygiene, AI becomes a force multiplier for your team.

What’s Next for Chemist Warehouse

For Chemist Warehouse, the journey with Edwin AI is far from over. The results so far have been impressive, but Jesse and his team see even more potential in continuing to enrich and evolve their agentic AIOps strategy.

The next phase is deeper data integration. The team is working on feeding Edwin AI even more contextual inputs—like incident resolution notes and change data from ServiceNow—to improve the accuracy of root cause suggestions and predictive insights.

They’re also investing in better tagging and metadata hygiene, ensuring that Edwin understands the relationships and criticality of different systems across their hybrid environment.

“Good data in, good data out,” Jesse emphasized. “The more we help Edwin understand our ecosystem, the smarter it gets.”

But through it all, Jesse remains clear-eyed about the role AI should play in IT operations. It’s not magic—and it’s not meant to replace skilled engineers.

That philosophy shapes how Chemist Warehouse continues to scale its infrastructure: by building a symbiotic relationship between people and AI, where each complements the other.

Want More? Here’s Where to Go Next.

- Watch the full webinar replay

- Get a demo of Edwin AI

- Read: What is an AI agent?

- Explore: The Edwin AI Product Tour

This is the fourth blog in our Azure Monitoring series, and this time, we’re digging into cost efficiency. Azure makes it easy to scale, but just as easy to overspend. Idle VMs, forgotten disks, and silent data transfer fees add up fast. The result is budget overruns that catch teams off guard and force reactive cuts. This blog breaks down the Azure metrics that actually help you reduce waste, improve visibility, and keep cloud spend aligned with business priorities. Missed our earlier posts? Catch up.

Cloud costs scale fast. Compute, storage, networking, and API calls all add up, and without the right metrics, it’s easy to lose track of where the money is going. Many teams monitor overall spend but miss the operational inefficiencies that drive waste.

The key to cost efficiency is using data to pinpoint unnecessary expenses while keeping performance strong. In 2024, 80% of organizations overshot their cloud budgets by 20–50% without effective cost controls. Tracking the right cost metrics makes the difference between staying in control and scrambling to justify unexpected bills.


Resource Utilization Metrics: Optimize Azure Costs

Cloud costs add up fast, and it’s usually not because of one big mistake. It’s the slow creep of underused resources, oversized instances, and things no one remembers setting up. That’s why it pays to keep a close eye on how your infrastructure is actually being used. When you understand what’s pulling weight and what’s just pulling budget, it’s a lot easier to make smart decisions and stay ahead of surprises.

Virtual Machine (VM) Right-Sizing

It’s one of the most common and most expensive cloud mistakes: overprovisioning VMs “just in case.” The result is massive waste. When teams don’t track how VMs are actually used, they end up paying for headroom they don’t need. That’s why right-sizing starts with metrics.

Metrics to track:

- CPU-to-memory ratio: Flag imbalanced instances with underused cores or overallocated memory.

- Peak-to-average utilization gap: Spiky workloads may need autoscaling rather than permanent capacity.

- Weekend vs. weekday usage: Spot dev/test environments that can be paused or shut down after hours.
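The three right-sizing metrics above can be computed from ordinary utilization samples. A minimal Python sketch, assuming you already have (timestamp, CPU %, memory %) samples for one VM. The function name and thresholds (a CPU-to-memory utilization ratio outside 0.5 to 2, a peak-to-average ratio of 3 or more, weekend CPU below 20% of weekday CPU) are illustrative assumptions, not Azure or LogicMonitor defaults.

```python
from datetime import datetime
from statistics import mean


def right_sizing_signals(samples: list[tuple[datetime, float, float]],
                         ratio_band=(0.5, 2.0), spiky_at=3.0, idle_weekend=0.2) -> dict:
    """samples: (timestamp, cpu_pct, mem_pct) for one VM.
    Thresholds are illustrative; average CPU and memory must be non-zero."""
    cpu = [c for _, c, _ in samples]
    avg_cpu = mean(cpu)
    avg_mem = mean(m for _, _, m in samples)
    ratio = avg_cpu / avg_mem                   # CPU-to-memory utilization ratio
    peak_to_avg = max(cpu) / avg_cpu            # how spiky the workload is
    weekend = [c for t, c, _ in samples if t.weekday() >= 5]   # Saturday=5, Sunday=6
    weekday = [c for t, c, _ in samples if t.weekday() < 5]
    weekend_vs_weekday = mean(weekend) / mean(weekday) if weekend and weekday else None

    flags = []
    if not ratio_band[0] <= ratio <= ratio_band[1]:
        flags.append("cpu/memory imbalance")
    if peak_to_avg >= spiky_at:
        flags.append("spiky: consider autoscaling")
    if weekend_vs_weekday is not None and weekend_vs_weekday < idle_weekend:
        flags.append("idle on weekends: consider scheduled shutdown")
    return {"cpu_mem_ratio": ratio, "peak_to_avg": peak_to_avg,
            "weekend_vs_weekday": weekend_vs_weekday, "flags": flags}
```

For a hypothetical dev VM at 60% memory that sits near 10% CPU on most weekdays, spikes to 50% once, and idles at 1% on weekends, all three flags fire.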

Platforms like LogicMonitor Envision make this easier by automatically surfacing patterns. You can group utilization by instance type, workload, or environment, so instead of scanning thousands of VMs, you can take action where it counts.

Storage Optimization

Storage costs often climb quietly, without any alerts or obvious symptoms. Teams hold onto old backups, store infrequently accessed data in premium tiers, and leave unattached disks behind after deleting VMs. These are silent budget killers hiding in plain sight.

Key metrics to track:

- Storage by access tier to identify hot-tier volumes that haven’t been accessed in 30+ days.

- Snapshot and backup retention to identify outdated data still incurring charges.

- Unattached disks to reclaim storage that’s no longer tied to active workloads.
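The storage checks above reduce to a few filters over an inventory export. This Python sketch assumes a hypothetical `StorageItem` record and illustrative 30-day staleness and 90-day snapshot retention cutoffs. You would populate it from your own inventory; for example, an Azure managed disk’s `managedBy` field is empty when no VM is using it, which is what the `attached_to` field mirrors here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class StorageItem:
    name: str
    kind: str                        # hypothetical labels: "blob", "snapshot", or "disk"
    tier: Optional[str]              # access tier for blobs, e.g. "Hot"; None otherwise
    created: datetime
    last_access: Optional[datetime]
    attached_to: Optional[str]       # owning VM for disks; None means unattached


def audit_storage(items: list[StorageItem], now: datetime,
                  stale_days: int = 30, retention_days: int = 90) -> dict:
    stale = timedelta(days=stale_days)
    keep = timedelta(days=retention_days)
    return {
        # Hot-tier data nobody has read recently is a candidate for a cooler tier.
        "stale_hot": [i.name for i in items if i.kind == "blob" and i.tier == "Hot"
                      and i.last_access is not None and now - i.last_access >= stale],
        # Snapshots older than the retention policy still incur charges.
        "expired_snapshots": [i.name for i in items
                              if i.kind == "snapshot" and now - i.created > keep],
        # Disks with no owning VM are still billed.
        "unattached_disks": [i.name for i in items if i.kind == "disk" and i.attached_to is None],
    }
```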

Azure bills for all managed disks, whether they’re attached to a VM or not. Monthly audits catch this kind of waste early and consistently. Platforms like LM Envision make it easier to surface these patterns at scale, allowing teams to free up capacity and reduce spending without compromising reliability.

Idle and Abandoned Resources

As environments scale, temporary resources have a habit of becoming permanent line items. Engineers spin up environments for testing or troubleshooting and forget to shut them down. Services get deprecated, but the infrastructure they relied on continues to run and generate billing.

Key signals of waste:

- Unused load balancers, App Service plans, and API Management instances.

- Public IPs and ExpressRoute circuits with no active traffic.

- Underutilized SQL databases, Cosmos DB, and Azure Cache instances.
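One way to turn these signals into a repeatable check is to flag any resource whose daily activity (requests, bytes, or connections, depending on the resource type) stayed at or below a threshold for a whole lookback window. The `Resource` record and the 14-day default below are hypothetical; requiring a full window of data is a deliberate choice so that newly created resources are not flagged before they have had a chance to receive traffic.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Resource:
    """Hypothetical record: one activity count per day, oldest first."""
    name: str
    kind: str                  # e.g. "load_balancer", "public_ip", "sql"
    daily_activity: list[int]  # requests, bytes, or connections per day


def find_idle(resources: list[Resource], min_days: int = 14, threshold: int = 0) -> list[str]:
    """Names of resources whose activity never exceeded threshold in the last min_days days."""
    idle = []
    for r in resources:
        window = r.daily_activity[-min_days:]
        # Skip resources without a full window of history.
        if len(window) >= min_days and max(window) <= threshold:
            idle.append(r.name)
    return idle
```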

Cost Attribution: Make Spend Visible and Accountable

Cloud budgets break down when teams can’t see who’s spending what. Without clear attribution, costs get centralized, ownership gets blurred, and optimization becomes someone else’s problem.

The fix starts with tagging and grouping resources by team, environment, or application. That turns vague spend reports into actionable insights.

Key focus areas:

- Month-over-month spending trends to highlight cost spikes before they become problems.

- Application-tier breakdowns to reveal frontend/backend/database imbalances.

- Environment-level spend to ensure dev and test environments aren’t burning more than production.

Tagging policies need to be enforced. When they are, platforms like LM Envision can automatically read and surface those tags, making it easy to group resources, build cost dashboards, and tie usage back to ownership without manual sorting.
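Tag-based attribution is essentially a group-by over cost line items. The sketch below assumes each line item is a dict with a `cost` value and an optional `tags` mapping; that shape is a simplifying assumption, not the actual schema of an Azure cost export. Untagged spend gets its own bucket so the gap in your tagging policy stays visible instead of silently disappearing.

```python
from __future__ import annotations

from collections import defaultdict


def cost_by_tag(line_items: list[dict], tag_key: str) -> dict[str, float]:
    """Sum cost per value of tag_key; items missing the tag land in 'untagged'."""
    totals: dict[str, float] = defaultdict(float)
    for item in line_items:
        owner = (item.get("tags") or {}).get(tag_key, "untagged")
        totals[owner] += item["cost"]
    return dict(totals)


def untagged_share(line_items: list[dict], tag_key: str) -> float:
    """Fraction of total spend that cannot be attributed via tag_key."""
    totals = cost_by_tag(line_items, tag_key)
    grand_total = sum(totals.values())
    return totals.get("untagged", 0.0) / grand_total if grand_total else 0.0
```

Running the same function with `tag_key="environment"` gives the environment-level comparison between dev, test, and production.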

Budget Tracking: Prevent Cost Overruns

Cloud budgets can quickly spiral out of control when spending isn’t actively monitored. Setting up tracking mechanisms helps teams stay ahead of unexpected costs and maintain financial discipline.

Tracking Cloud Budgets in Real Time

Most budget overruns don’t come from massive events. They creep up over time. Without real-time tracking, teams find out they’ve blown past budget only after the invoice hits.

Staying in control means monitoring actual spend against expectations throughout the month, not just once it closes.

Key metrics to watch:

- Budget variance by team, app, or environment to catch overspending early.

- Forecast accuracy to see how well your predictions align with real usage.

- Budget vs. actual by service type to uncover consistently underestimated costs.

Visibility drives accountability. With LM Envision, teams can build live budget dashboards using Azure’s billing APIs, so finance and engineering stay on the same page.
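The budget metrics above can be computed from month-to-date spend with a few formulas. This sketch uses a naive run-rate forecast (average daily spend so far, extended to the end of the month); that choice is an assumption made for simplicity, and it ignores seasonality and planned changes.

```python
def run_rate_forecast(spend_to_date: float, days_elapsed: int, days_in_month: int) -> float:
    """Naive month-end forecast: remaining days spend at the average daily rate so far."""
    return spend_to_date / days_elapsed * days_in_month


def budget_variance_pct(amount: float, budget: float) -> float:
    """Positive means over budget, negative means under budget."""
    return (amount - budget) * 100 / budget


def forecast_accuracy_pct(forecast: float, actual: float) -> float:
    """100 minus the absolute percentage error of the forecast against the final actual."""
    return 100 - abs(forecast - actual) * 100 / actual
```

For example, $6,000 spent in the first 10 days of a 30-day month projects to $18,000; against a $15,000 budget that is a 20% variance, visible on day 10 instead of on the invoice. Apply the same functions per team, app, environment, or service type to get the breakdowns listed above.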

Optimization Metrics: Where to Take Action

Tracking cost data is only the start. To see real savings, teams need to take action and continuously fine-tune their environments.

Resource Scheduling

Not every workload needs to run 24/7. Running non-production resources only during business hours can dramatically reduce waste.

Track:

- Off-hours utilization to identify idle systems.

- Automated shutdown compliance to ensure schedules are enforced.

- Savings from scheduled actions to prove impact.

Automation is key. Manual shutdowns don’t scale, so make sure to enforce schedules with tools that do the work for you.
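A schedule, a compliance check, and a savings estimate can all hang off the same definition of "business hours." The sketch below assumes a hypothetical Monday to Friday, 08:00 to 19:59 schedule: that is 60 of the week's 168 hours, so roughly 64% of compute hours are avoidable. It counts compute only; managed disks attached to a stopped VM keep billing.

```python
from __future__ import annotations

from datetime import datetime

# Hypothetical schedule: run Monday to Friday, 08:00 to 19:59 local time.
BUSINESS_DAYS = range(0, 5)    # datetime.weekday(): Monday = 0 ... Friday = 4
BUSINESS_HOURS = range(8, 20)  # hours 8 through 19


def should_run(now: datetime) -> bool:
    """True if a non-production VM is expected to be running at this time."""
    return now.weekday() in BUSINESS_DAYS and now.hour in BUSINESS_HOURS


def weekly_compute_savings(hourly_rate: float, vm_count: int) -> float:
    """Compute cost avoided per week by keeping VMs off outside the schedule."""
    hours_on = len(BUSINESS_DAYS) * len(BUSINESS_HOURS)  # 5 * 12 = 60
    hours_off = 7 * 24 - hours_on                         # 168 - 60 = 108
    return hours_off * hourly_rate * vm_count


def shutdown_compliance(observations: list[tuple[datetime, bool]]) -> float:
    """Share of off-hours observations (timestamp, is_running) where the VM was actually stopped."""
    off_hours = [running for ts, running in observations if not should_run(ts)]
    if not off_hours:
        return 1.0
    return sum(1 for running in off_hours if not running) / len(off_hours)
```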

Service-Specific Cost Metrics

Some Azure services rack up charges that aren’t immediately obvious. Without careful tracking, these hidden costs can quickly add up and lead to budget overruns. Some metrics to watch are:

- Data transfer charges, especially cross-region traffic that quietly inflates spend.

- Overprovisioned PaaS tiers, where managed services run far below their capacity.

- High-volume ingestion costs from tools like Azure Monitor and Security Center.
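Two of these checks are straightforward to automate: the share of spend going to a hidden cost category such as data transfer, and managed services whose peak utilization never approaches their tier's capacity. The category labels, the dict-shaped line items, and the 30% ceiling are hypothetical; map them to whatever your billing export and monitoring data actually provide.

```python
from __future__ import annotations


def cost_share(line_items: list[dict], category: str) -> float:
    """Fraction of total spend attributed to one (hypothetical) cost category."""
    total = sum(item["cost"] for item in line_items)
    part = sum(item["cost"] for item in line_items if item["category"] == category)
    return part / total if total else 0.0


def overprovisioned_paas(utilization: dict[str, list[float]], ceiling_pct: float = 30.0) -> list[str]:
    """Managed services whose peak utilization never reaches ceiling_pct of capacity."""
    return sorted(name for name, samples in utilization.items() if max(samples) < ceiling_pct)
```

Using the peak rather than the average is a conservative choice: a service is only flagged if even its busiest sample fits comfortably in a smaller tier.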

Detect Cost Anomalies Before They Become Budget Issues

Misconfigurations, runaway queries, and scaling glitches are common and expensive. Without proactive alerts, cost anomalies go unnoticed until the damage is done.

Track for:

- Sudden jumps in data processing or logging volume, which often signal inefficient workloads.

- Unexpected cross-region transfers, possibly from misconfigured replication or backup policies.

- Unintended scaling activity, which may indicate config drift or poor thresholds.
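A simple baseline for catching these anomalies is a trailing-window z-score: compare each day's cost to the mean and standard deviation of the preceding days. This is one common technique, shown here as a sketch; the 7-day window and threshold of 3 standard deviations are assumptions, and production systems often use seasonality-aware models instead.

```python
from __future__ import annotations

from statistics import mean, stdev


def cost_anomalies(daily_costs: list[float], window: int = 7, z_threshold: float = 3.0) -> list[int]:
    """Indexes of days whose cost deviates from the trailing window's mean
    by more than z_threshold standard deviations."""
    anomalies = []
    for i in range(window, len(daily_costs)):
        baseline = daily_costs[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: any change at all is unusual.
            if daily_costs[i] != mu:
                anomalies.append(i)
        elif abs(daily_costs[i] - mu) / sigma > z_threshold:
            anomalies.append(i)
    return anomalies
```

Note that a spike stays inside the trailing window for the following days and inflates the baseline, which makes the detector less sensitive right after an incident. Excluding flagged days from the baseline is a common refinement.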

Building a Cost-Conscious Culture

Tooling helps. Culture sustains.

Financial efficiency happens when teams own their usage, see their impact, and have the data to make smarter decisions every sprint, not just during budget reviews.

Build the habit:

- Standardize tagging so ownership is baked into every resource.

- Make real-time cost dashboards visible to every team, not just finance.

- Tie KPIs to outcomes, like cost per transaction or cost per deployment.

- Schedule quarterly cost reviews and link them to planning, not just retros.

Turning Cost Optimization Metrics Into Savings

The teams that stay ahead of Azure spend treat cost metrics the same way they treat performance metrics: constantly tuned, tied to outcomes, and backed by automation.

Cost optimization isn’t a one-time project. The best teams make financial efficiency an ongoing part of their cloud operations. With the right metrics and a unified observability platform—a level up from traditional monitoring—teams can identify cost drivers early, align infrastructure to real demand, and shift cost control from reactive to routine.

Next, we’ll shift our focus from cost to risk, breaking down the metrics that matter for security, availability, and compliance. From suspicious authentication patterns to resource health and service-level reliability, we’ll show you how to stay resilient and audit-ready.

This is the eighth blog in our Azure Monitoring series, focusing on monitoring tool sprawl. As your cloud footprint grows, you will likely end up with a patchwork of monitoring tools that create more problems than they solve. We’ll explore why this happens, the headaches it causes, and practical ways to consolidate without sacrificing visibility. Missed our earlier posts? Catch up.

The larger your cloud footprint becomes, the more monitoring tools start to accumulate. What starts as a quick fix—covering a blind spot here, adding visibility there—can quickly turn into chaos: disconnected dashboards, alert fatigue, and overlapping costs. In this blog, we’ll break down what causes tool sprawl, why it slows teams down, and how to consolidate your monitoring without losing visibility.


Why Monitoring Tools Multiply and Why That’s a Problem

Legacy Tools Can’t Keep Up

Traditional monitoring solutions weren’t built for today’s dynamic Azure and multi-cloud environments. These legacy tools struggle with:

- Integrating deeply with Azure’s APIs and ephemeral resources.

- Providing comprehensive visibility into Kubernetes clusters.

- Handling dynamic auto-scaling and containerized workloads.

- Capturing custom metrics and correlating them across diverse environments.

As teams try to fill these gaps, new specialized tools pile up, each promising better visibility but collectively causing fragmentation.

Operational Chaos from Monitoring Fragmentation

Even within the same organization, different technical teams gravitate toward specialized tools that address their specific domains.

| Infrastructure teams typically rely on | DevOps teams focus on cloud-native monitoring approaches | Specialized teams bring their own monitoring requirements |
| --- | --- | --- |
| A monitoring platform for network monitoring and topology mapping | A monitoring platform for cloud metrics and container monitoring | Custom application metrics and business KPIs |
| SNMP trap collection and analysis for hardware alerts | Container health monitoring with specialized Kubernetes tooling | Trace sampling and distributed tracing for complex transactions |
| Network flow monitoring for traffic analysis and capacity planning | Service mesh telemetry for microservice communication patterns | Database performance monitoring and query analysis |
| Hardware health metrics for physical infrastructure components | CI/CD pipeline metrics to track deployment health and frequency | Security event correlation and threat detection |

This fragmentation creates data silos that make it nearly impossible to get a unified view of infrastructure health, particularly when incidents span multiple domains.

What Monitoring Sprawl Is Really Costing You

Alert Fatigue and Noise

Multiple monitoring tools inevitably generate redundant alerts, forcing teams to spend more time filtering through noise than solving actual issues. Without intelligent correlation, a single incident can trigger dozens of separate notifications across different platforms.

This alert overload has serious consequences:

- Critical incidents get buried among hundreds of low-priority alerts.

- Teams develop “alert blindness” and start ignoring notifications.

- Response times increase as staff waste time determining which alerts matter.

- Incident prioritization becomes nearly impossible without unified context.
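The simplest form of noise reduction is deduplication: when several tools report the same condition on the same resource within a few minutes, treat them as one incident. This sketch assumes alerts have already been normalized to a shared `(resource, signal)` fingerprint; the `Alert` record and the 5-minute window are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Alert:
    source: str    # monitoring tool that raised the alert
    resource: str
    signal: str    # condition name, assumed already normalized across tools
    ts: float      # epoch seconds


def deduplicate(alerts: list[Alert], window_s: float = 300) -> list[list[Alert]]:
    """Group alerts sharing a (resource, signal) fingerprint that arrive
    within window_s seconds of the first alert in their group."""
    groups: list[list[Alert]] = []
    open_groups: dict[tuple[str, str], list[Alert]] = {}
    for alert in sorted(alerts, key=lambda a: a.ts):
        key = (alert.resource, alert.signal)
        group = open_groups.get(key)
        if group is not None and alert.ts - group[0].ts <= window_s:
            group.append(alert)
        else:
            # Start a new group; later matching alerts attach to this one.
            group = [alert]
            open_groups[key] = group
            groups.append(group)
    return groups
```

Deduplication only collapses identical symptoms; relating *different* symptoms to one cause needs topology, which is what correlation adds on top.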

Inefficient Troubleshooting

Without a single pane of glass for monitoring, you’ll waste precious time during incidents:

- Switching between multiple tool interfaces to gather related metrics.

- Manually correlating timestamps across systems with different time formats and zones.

- Dealing with inconsistent metric naming conventions and thresholds.

- Missing service topology mapping that shows how components interact.

These inefficiencies directly lengthen mean time to resolution (MTTR).
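Timestamp correlation gets much easier once every event is converted to timezone-aware UTC at ingestion. Below is a minimal sketch using only the standard library; the per-source format strings and the assumed offset for timestamps that carry none are illustrative, and you would record the real ones for each tool.

```python
from datetime import datetime, timedelta, timezone


def parse_to_utc(raw: str, fmt: str, assumed_tz: timezone = timezone.utc) -> datetime:
    """Parse a tool-specific timestamp string and convert it to UTC.

    If the format carries no offset (no %z), assumed_tz is attached first.
    """
    dt = datetime.strptime(raw, fmt)
    if dt.tzinfo is None:
        dt = dt.replace(tzinfo=assumed_tz)
    return dt.astimezone(timezone.utc)


def epoch_to_utc(seconds: float) -> datetime:
    """Convert Unix epoch seconds to an aware UTC datetime."""
    return datetime.fromtimestamp(seconds, tz=timezone.utc)
```

For example, `parse_to_utc("2024-03-05T14:02:11+0100", "%Y-%m-%dT%H:%M:%S%z")`, `parse_to_utc("05/03/2024 08:02:11", "%d/%m/%Y %H:%M:%S", timezone(timedelta(hours=-5)))`, and `epoch_to_utc(1709643731)` all resolve to 2024-03-05 13:02:11 UTC. A fixed offset does not handle daylight saving time; for named zones, `zoneinfo.ZoneInfo` is the safer choice.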

Rising Costs and Overlapping Licensing

The financial impact of monitoring sprawl extends beyond the obvious licensing costs:

- Organizations pay for redundant features across multiple platforms.

- Each tool requires administrative overhead and maintenance.

- Teams need training on multiple interfaces and alert paradigms.

- Integration work to connect disparate systems consumes engineering resources.

- Data duplication across platforms increases storage and processing costs.
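A practical first step toward quantifying redundant features is a capability inventory: list what each tool does, then find capabilities covered by more than one tool. The tool names and capability labels below are hypothetical placeholders for your own inventory.

```python
from __future__ import annotations

from collections import defaultdict


def overlapping_capabilities(inventory: dict[str, set[str]]) -> dict[str, list[str]]:
    """Capabilities covered by two or more tools, mapped to the tools that cover them."""
    coverage: dict[str, set[str]] = defaultdict(set)
    for tool, capabilities in inventory.items():
        for capability in capabilities:
            coverage[capability].add(tool)
    return {cap: sorted(tools) for cap, tools in coverage.items() if len(tools) > 1}
```

Each overlapping capability is a candidate for consolidation; pairing the result with per-tool license and maintenance costs turns it into a rough TCO comparison.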

How to Consolidate Without Losing Visibility

Adopt Unified, Vendor-Agnostic Observability

Effective consolidation means choosing a solution that seamlessly integrates data from all relevant environments:

- Native support for Azure, AWS, Google Cloud, and other public clouds.

- Deep Kubernetes observability for container orchestration.

- Traditional monitoring capabilities for on-premises infrastructure.

- Application performance monitoring for custom workloads.

This approach provides centralized dashboards that eliminate data silos and improve cross-team collaboration. Rather than replacing specialized tools immediately, the best approach is often to integrate their data into a central platform while gradually transitioning functionality.
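Integrating data from specialized tools usually starts with a small adapter layer that maps each tool's metric names and units onto one shared schema, which also addresses the inconsistent naming conventions mentioned earlier. The tool names, metric names, and mapping table here are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    resource: str
    name: str      # common metric name shared by every source
    value: float
    unit: str


# Hypothetical mapping: tool -> {tool-specific name: (common name, scale factor, common unit)}
NAME_MAP = {
    "tool_a": {"cpu.util": ("cpu_utilization", 1.0, "percent")},
    "tool_b": {"CpuUsageFraction": ("cpu_utilization", 100.0, "percent")},
}


def normalize(tool: str, resource: str, raw_name: str, raw_value: float) -> Metric:
    """Translate one tool-specific sample into the shared schema (KeyError if unmapped)."""
    common_name, scale, unit = NAME_MAP[tool][raw_name]
    return Metric(resource, common_name, raw_value * scale, unit)
```

Raising on unmapped names is intentional: a metric that silently passes through under its original name recreates the silo the adapter is meant to remove.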

Smarter Alert Correlation to Cut Through Noise

AI-driven alerting can dramatically reduce noise by intelligently correlating related events and prioritizing issues based on business impact:

- Automatic grouping of related alerts across different systems.

- Root cause analysis that identifies primary issues versus symptoms.

- Dependency mapping that shows how components affect each other.

- Business service impact assessment that prioritizes user-impacting issues.

This approach ensures teams focus on critical problems first, reducing the “alert fatigue” that plagues fragmented monitoring environments.
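Dependency mapping is what lets correlation separate primary issues from symptoms. Below is a deliberately simple sketch of one topology-based heuristic: an alerting component counts as a root cause if none of its (transitive) dependencies is also alerting, and every other alerting component is grouped under the root causes it depends on. The dependency map is hypothetical and assumed to be acyclic; AI-driven platforms typically combine topology with timing and historical patterns rather than relying on this rule alone.

```python
from __future__ import annotations


def upstream(node: str, depends_on: dict[str, list[str]]) -> set[str]:
    """All components that node depends on, directly or transitively."""
    seen: set[str] = set()
    stack = list(depends_on.get(node, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(depends_on.get(current, []))
    return seen


def group_by_root_cause(alerting: set[str], depends_on: dict[str, list[str]]) -> dict[str, set[str]]:
    """Map each probable root cause to the alerting components that depend on it."""
    roots = {a for a in alerting if not (upstream(a, depends_on) & alerting)}
    groups: dict[str, set[str]] = {root: set() for root in roots}
    for symptom in alerting - roots:
        for root in upstream(symptom, depends_on) & roots:
            groups[root].add(symptom)
    return groups
```

With `web -> api -> db` and alerts on all three plus an unrelated `cdn`, the sketch reports two problems (`db`, with `web` and `api` as symptoms, and `cdn`) instead of four.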

Automation for Consistency and Scale

Infrastructure-as-Code (IaC) tools like Terraform or Ansible can standardize monitoring configurations across environments, ensuring consistency and reducing manual overhead.

Implementation approaches include:

- Template-based monitor deployment that ensures consistent coverage.

- API-driven configuration management for automated updates.

- Version control for monitoring configurations to track changes.

- Auto-discovery of new resources to eliminate monitoring gaps.

This automated approach reduces human errors and ensures monitoring consistency across environments.
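The template, version-control, and auto-discovery ideas fit together in a few lines. This Python sketch is not Terraform or Ansible; it only illustrates the pattern those tools implement: stamp out monitors per resource type from a template, render them deterministically so diffs in version control are meaningful, and list discovered resources that have no monitors yet. The templates, metric names, and thresholds are placeholders.

```python
from __future__ import annotations

import json

# Hypothetical templates keyed by resource type; metric names and thresholds are placeholders.
TEMPLATES: dict[str, list[dict]] = {
    "vm": [{"metric": "cpu_utilization", "op": ">", "threshold": 90}],
    "sql": [{"metric": "dtu_percent", "op": ">", "threshold": 80}],
}


def render_monitors(resource_id: str, resource_type: str) -> list[dict]:
    """Stamp out monitor definitions for one resource from its type's template."""
    return [{"resource": resource_id, **rule} for rule in TEMPLATES.get(resource_type, [])]


def render_config(discovered: dict[str, str]) -> str:
    """Deterministic JSON (sorted resources and keys) suitable for committing to version control."""
    monitors = [m for rid, rtype in sorted(discovered.items()) for m in render_monitors(rid, rtype)]
    return json.dumps(monitors, indent=2, sort_keys=True)


def coverage_gaps(discovered: dict[str, str], monitored_ids: set[str]) -> list[str]:
    """Discovered resources (id -> type) that have no monitors yet."""
    return sorted(rid for rid in discovered if rid not in monitored_ids)
```

Sorting before serializing means the same inventory always produces byte-identical output, so a change in the committed file always reflects a real change in coverage.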

Why Unifying Your Monitoring Stack Changes Everything

Managing multiple monitoring tools inevitably increases complexity, costs, and operational inefficiencies. By consolidating monitoring into a unified platform, you can streamline operations and improve overall efficiency.

- A single source of truth for all infrastructure and application data enables faster troubleshooting, reducing the time spent diagnosing and resolving issues.

- Intelligent correlation and automated noise reduction help minimize alert fatigue, ensuring teams focus only on critical incidents.

- Eliminating unnecessary tools lowers the total cost of ownership (TCO) by reducing administrative overhead and simplifying management.

- Shared visibility and consistent alerting foster better cross-team collaboration, ensuring that all stakeholders have access to the same insights.

LM Envision gives teams one place to see everything—whether it’s a VM in Azure, a Kubernetes pod in EKS, or an on-prem network device. That unified view makes it easier to solve problems, cut costs, and align teams around shared truths.

Bring Order to the Chaos

Taking a strategic approach to monitoring consolidation not only reduces tool sprawl but also enhances visibility. What was once a fragmented monitoring landscape becomes a unified observability strategy that properly supports modern hybrid IT environments.

Next in our Azure Monitoring series: controlling your Azure costs without losing visibility. We’ll show you why cloud bills often spiral out of control, where Azure’s native cost tools fall short, and how to cut spending while preserving performance. You’ll get practical strategies for real-time cost insights and waste elimination that keep your bills down without sacrificing the observability your team needs.