How We Used JMH to Benchmark Our Microservices Pipeline

At LogicMonitor, we are continuously improving our platform's performance and scalability. One of the key features of the LogicMonitor platform is the ability to post-process the data returned by monitored systems, using data not available in the raw output, to produce complex datapoints.

Because complex datapoints are computed by LogicMonitor itself after raw data collection, their computation is one of the most computationally intensive parts of LogicMonitor's metrics processing pipeline. As we improve that pipeline, it is therefore crucial to benchmark the computation of complex datapoints, so that we can do data-driven capacity planning for our infrastructure and architecture as we scale.

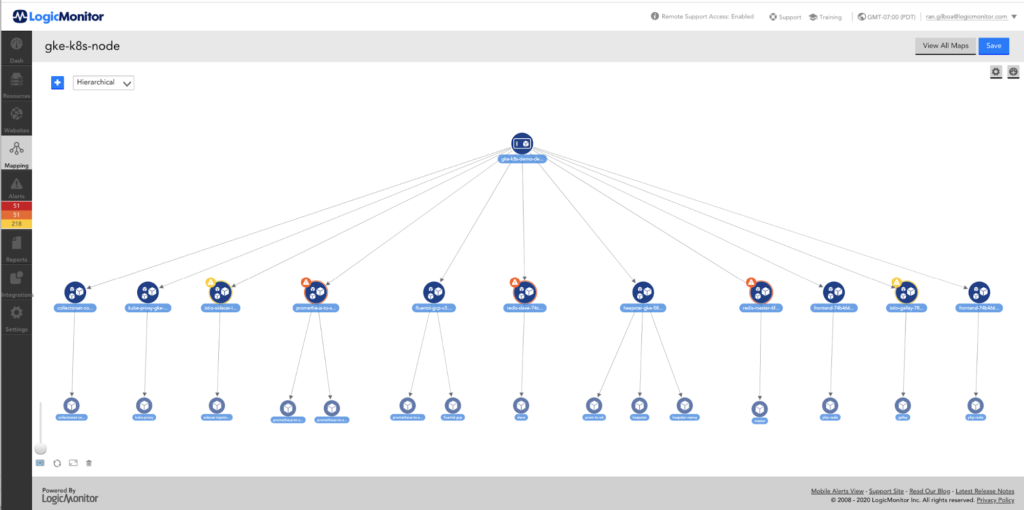

LogicMonitor’s Metric Pipeline, where we built out a proof-of-concept of Quarkus in our environment, is deployed on the following technology stack:

JMH (Java Microbenchmark Harness) is a library for writing benchmarks on the JVM, developed as part of the OpenJDK project. It is a Java harness for building, running, and analyzing nano, micro, milli, and macro benchmarks written in Java and other languages targeting the JVM. We chose JMH because it provides the following capabilities (this is not an exhaustive list):

The first step for benchmarking computationally intensive code is to isolate the code so that it can be run by the benchmarking harness without the other dependencies factoring into the benchmarking results. To this end, we first refactored our code that does the computation of complex datapoints into its own method, such that it can be invoked in isolation without requiring any other components. This also had the additional benefit of modularizing our code and increasing its maintainability.
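The refactoring described above can be pictured as a pure function: given an instance's raw datapoint values and a set of complex datapoint definitions, it returns the derived values without touching collectors, queues, or storage. The sketch below is illustrative only; the class `ComplexDatapointCalculator`, its `compute` method, and the example datapoint names are hypothetical, and definitions are modeled as plain Java lambdas rather than LogicMonitor's actual expression syntax.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.ToDoubleFunction;

// Hypothetical isolated computation: no I/O, no framework dependencies.
public final class ComplexDatapointCalculator {
    private ComplexDatapointCalculator() {}

    /** Evaluates every complex datapoint definition against one instance's raw values. */
    public static Map<String, Double> compute(
            Map<String, Double> raw,
            Map<String, ToDoubleFunction<Map<String, Double>>> definitions) {
        Map<String, Double> result = new LinkedHashMap<>();
        for (Map.Entry<String, ToDoubleFunction<Map<String, Double>>> def : definitions.entrySet()) {
            result.put(def.getKey(), def.getValue().applyAsDouble(raw));
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, Double> raw = Map.of("bytesIn", 300.0, "bytesOut", 100.0);
        // Hypothetical definitions derived from the raw datapoints
        Map<String, ToDoubleFunction<Map<String, Double>>> defs = new LinkedHashMap<>();
        defs.put("totalBytes", r -> r.get("bytesIn") + r.get("bytesOut"));
        defs.put("inRatio", r -> r.get("bytesIn") / (r.get("bytesIn") + r.get("bytesOut")));
        System.out.println(compute(raw, defs)); // prints {totalBytes=400.0, inRatio=0.75}
    }
}
```

Because `compute` has no external dependencies, a benchmark harness can call it directly with synthetic inputs.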

To run the benchmark, we integrated JMH version 1.23 with JUnit. First, we wrote a method that invokes our complex datapoint computation with some test data. Next, we annotated this method with @Benchmark to tell JMH that we wanted it benchmarked. Then, we configured the JMH benchmark runner with the settings we wanted the benchmark to run with, including the number of warmup and measurement iterations, the number of forks and threads, the benchmark mode, and the result format.

We also had the option of specifying JVM memory arguments, but in this case the code we were benchmarking was computationally intensive rather than memory intensive, so we chose to forgo that.

Finally, we annotated the JMH benchmark runner method with @Test, so that we could leverage the JUnit test runner to run and execute the JMH benchmark.

This is how our JMH benchmark runner method looks:

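```java
import org.junit.Test; // JUnit 4 assumed
import org.openjdk.jmh.annotations.Mode;
import org.openjdk.jmh.results.format.ResultFormatType;
import org.openjdk.jmh.runner.Runner;
import org.openjdk.jmh.runner.options.Options;
import org.openjdk.jmh.runner.options.OptionsBuilder;

@Test
public void executeJmhRunner() throws Exception {
    Options jmhRunnerOptions = new OptionsBuilder()
            // Match the @Benchmark methods declared in this class
            .include("\\." + this.getClass().getSimpleName() + "\\.")
            .warmupIterations(5)
            .measurementIterations(10)
            // forks(0) runs in the JUnit JVM, so JMH does not apply jvmArgs
            .forks(0)
            .threads(1)
            .mode(Mode.AverageTime)
            .shouldDoGC(true)
            .shouldFailOnError(true)
            .resultFormat(ResultFormatType.JSON)
            .result("/dev/null") // discard the JSON result file
            .jvmArgs("-server")
            .build();
    new Runner(jmhRunnerOptions).run();
}
```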
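The runner above selects benchmarks but does not show the `@Benchmark` method itself. A minimal sketch of what such a method could look like follows, assuming `jmh-core` and the `jmh-generator-annprocess` annotation processor are on the test classpath. The class name `ComplexDatapointBenchmark`, the `computeAll` stand-in formula, and the synthetic data are hypothetical; `@Param` sweeps the input sizes reported below, and `@OutputTimeUnit` reports average times in milliseconds.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.TimeUnit;

import org.openjdk.jmh.annotations.Benchmark;
import org.openjdk.jmh.annotations.OutputTimeUnit;
import org.openjdk.jmh.annotations.Param;
import org.openjdk.jmh.annotations.Scope;
import org.openjdk.jmh.annotations.Setup;
import org.openjdk.jmh.annotations.State;

// Hypothetical benchmark class; the runner's include() regex matches it by simple name.
@State(Scope.Thread)
@OutputTimeUnit(TimeUnit.MILLISECONDS)
public class ComplexDatapointBenchmark {

    // Complex datapoints per instance; JMH runs the benchmark once per value.
    @Param({"10", "15", "20", "25", "32"})
    public int datapointCount;

    private Map<String, Double> rawValues;

    // Builds synthetic test data once per trial, outside the measured region.
    @Setup
    public void setUp() {
        rawValues = new LinkedHashMap<>();
        for (int i = 0; i < datapointCount; i++) {
            rawValues.put("raw" + i, (double) (i + 1));
        }
    }

    @Benchmark
    public Map<String, Double> computeComplexDatapoints() {
        // Returning the result lets JMH consume it, so the JIT cannot drop the work.
        return computeAll(rawValues);
    }

    // Hypothetical stand-in for the isolated computation: each value as a percent of the total.
    static Map<String, Double> computeAll(Map<String, Double> raw) {
        double total = 0;
        for (double v : raw.values()) {
            total += v;
        }
        Map<String, Double> derived = new LinkedHashMap<>();
        for (Map.Entry<String, Double> e : raw.entrySet()) {
            derived.put(e.getKey() + "_pct", 100.0 * e.getValue() / total);
        }
        return derived;
    }
}
```

Keeping data construction in `@Setup` means only the computation itself is timed.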

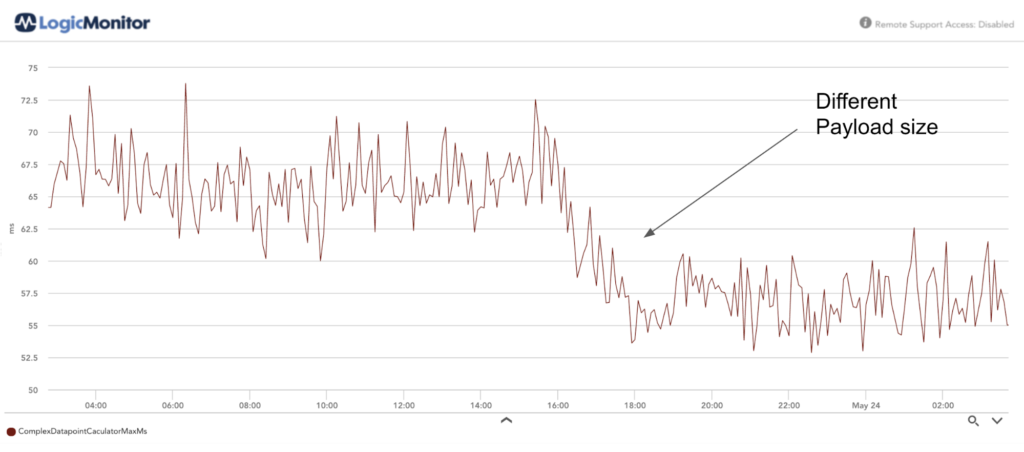

We ran the benchmark against input data of different sizes and recorded the average execution time for each size. From these results, we were able to identify the computational capacity of individual nodes in our system and, from that, successfully plan autoscaling of our infrastructure for customers with computationally intensive loads.

| No. of complex datapoints per instance | Average time (ms) |
|---|---|
| 10 | 2 |
| 15 | 3 |
| 20 | 5 |
| 25 | 7 |
| 32 | 11 |
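One way to turn these averages into a rough capacity figure is to invert them: at 11 ms per instance, one thread handles about 1000 / 11 ≈ 91 instances per second. The sketch below does this for each row, assuming each measurement is the time to compute the complex datapoints of one instance. The class name `CapacityEstimate` and the 8-thread node size are hypothetical, and multiplying by the thread count assumes linear scaling, which single-threaded (`threads(1)`) measurements do not confirm.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public final class CapacityEstimate {
    private CapacityEstimate() {}

    /** Instances one thread can process per second, given the average ms per instance. */
    static double instancesPerSecondPerThread(double avgMillis) {
        return 1000.0 / avgMillis;
    }

    /** Naive node capacity: assumes throughput scales linearly with worker threads. */
    static double instancesPerSecondPerNode(double avgMillis, int workerThreads) {
        return workerThreads * instancesPerSecondPerThread(avgMillis);
    }

    public static void main(String[] args) {
        // Average times (ms) from the benchmark table, keyed by complex datapoints per instance
        Map<Integer, Double> avgMillis = new LinkedHashMap<>();
        avgMillis.put(10, 2.0);
        avgMillis.put(15, 3.0);
        avgMillis.put(20, 5.0);
        avgMillis.put(25, 7.0);
        avgMillis.put(32, 11.0);
        int workerThreads = 8; // hypothetical node size
        avgMillis.forEach((count, ms) -> System.out.printf(
                "%d datapoints: %.0f instances/s per thread, %.0f per node%n",
                count, instancesPerSecondPerThread(ms), instancesPerSecondPerNode(ms, workerThreads)));
    }
}
```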
