From Monolith to Microservices

Monolithic applications often grow too large to manage because all of their functionality is packed into a single unit. Many enterprises are now tasked with breaking them down into a microservices architecture.

At LogicMonitor, we have a few legacy monolithic services. As the business grew rapidly, we had to scale these services up, because scaling out was not an option.

Eventually, our biggest pain points became:

In this article, we cover the benefits of both monoliths and microservices, our specific requirements for each microservice, how our microservices communicate through Kafka, and how we distribute data and balance the load.

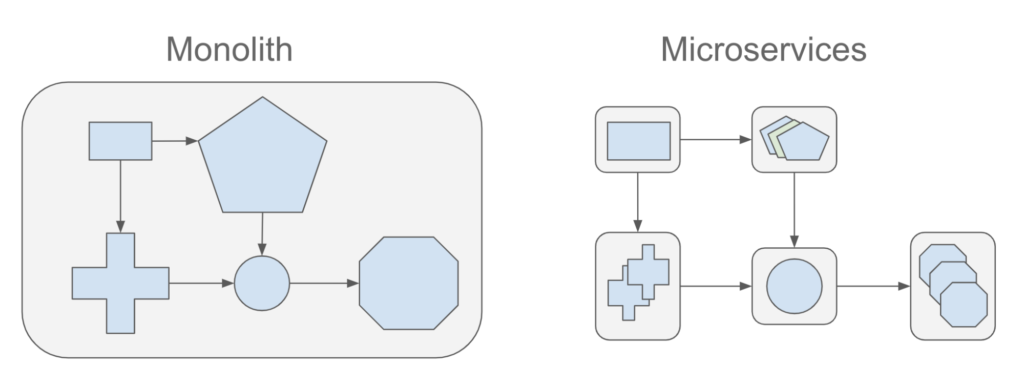

A monolithic application has all of its functionality built into a single unit. A microservice application breaks that unit down into smaller services that run independently and work together. Below are some benefits of both monoliths and microservices.

As long as the application's complexity is low and fairly static and the number of engineers is “small”, a monolith is:

Microservices provide many advantages in building scalable applications and solving complex business problems:

LogicMonitor processes billions of payloads (e.g., data points, logs, events, and configs) per day. The high-level architecture is a data processing pipeline.

Our requirements for each microservice in the pipeline are:

Today we have microservices implemented in Java, Python, and Go. Each microservice is deployed to Kubernetes clusters and can be automatically scaled out or in using Kubernetes’ Horizontal Pod Autoscaler based on a metric.
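
To make the autoscaling step concrete, here is a minimal sketch of the core scaling rule that the Kubernetes Horizontal Pod Autoscaler documents: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The function name, the replica bounds, and the example metric values are illustrative assumptions, not our production settings. The real autoscaler also applies a tolerance band and stabilization windows, which are omitted here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Core HPA rule: ceil(currentReplicas * currentMetric / targetMetric).

    The result is clamped to [min_replicas, max_replicas]. Both bounds are
    hypothetical defaults chosen for this sketch.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))
```

For example, with 4 replicas, an observed per-pod metric of 200, and a target of 100, the rule asks for 8 replicas. If the observed metric drops to 50, it asks for 2.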

After evaluating multiple Java microservice frameworks, we chose Quarkus for our new Java microservices. Quarkus is a Kubernetes-native Java framework tailored for Java virtual machines (JVMs).

To learn more about why we chose Quarkus, check out our Quarkus vs. Spring blog.

We have implemented microservices to process and analyze the data points that are monitored by LogicMonitor. Each microservice performs a certain task on the incoming data.

After evaluating a few technologies, we decided to use Kafka as the communication mechanism between the microservices. Kafka is a distributed streaming platform for publishing and subscribing to streams of messages. It gives us a fast, scalable, and durable messaging layer for our large volume of streaming data.

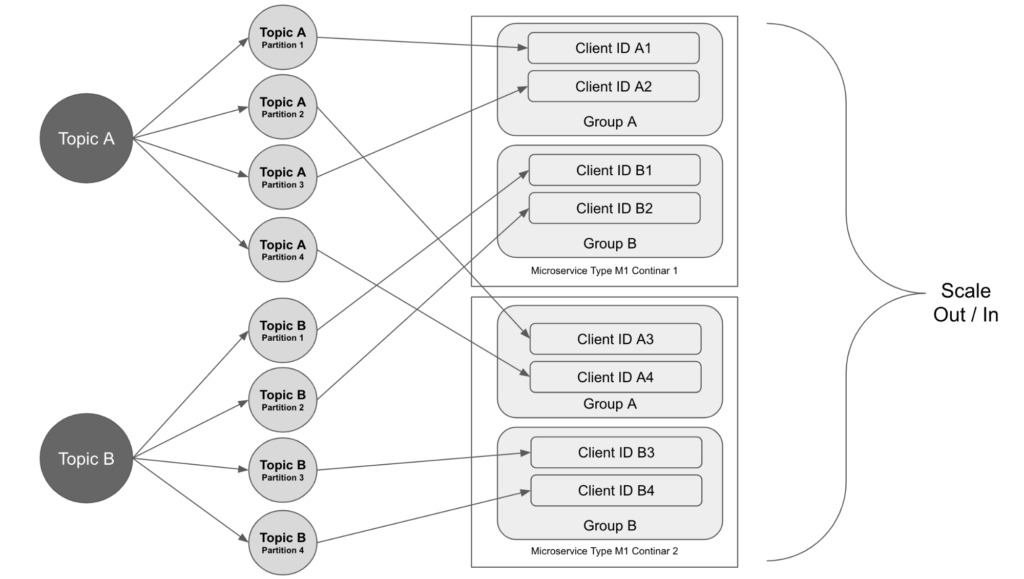

Our microservices use Kafka topics to communicate. Each microservice consumes data messages from one or more Kafka topics and publishes its processing results to other topics.
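
The consume, process, publish pattern can be sketched without a real broker. In the sketch below, `InMemoryTopics` is a hypothetical stand-in for Kafka (one FIFO queue per topic, no partitions, no durability), and the topic names and `run_stage` helper are illustrative assumptions rather than our actual service code.

```python
from collections import defaultdict, deque


class InMemoryTopics:
    """Hypothetical stand-in for Kafka topics: one FIFO queue per topic name."""

    def __init__(self):
        self._queues = defaultdict(deque)

    def publish(self, topic, message):
        self._queues[topic].append(message)

    def poll(self, topic):
        """Return the oldest message on the topic, or None if it is empty."""
        queue = self._queues[topic]
        return queue.popleft() if queue else None


def run_stage(topics, source, sink, process):
    """Drain `source`, apply `process` to each message, publish results to `sink`.

    Returns the number of messages handled.
    """
    handled = 0
    while (message := topics.poll(source)) is not None:
        topics.publish(sink, process(message))
        handled += 1
    return handled
```

A stage would then be wired up as `run_stage(topics, "raw-datapoints", "enriched-datapoints", enrich)`, where `enrich` is whatever task that microservice performs. Chaining several such stages gives the pipeline shape described above.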

Each Kafka topic is divided into partitions. The microservice that consumes data from a topic runs multiple instances, all of which join the same consumer group. Kafka assigns the partitions to the consumers in the group. When the service scales out, new instances are created and join the consumer group, and the partition assignments are rebalanced among the consumers. Each instance gets one or more partitions to work on.

As a result, the total number of partitions determines the maximum number of instances that the microservice can usefully scale out to, because any instance beyond that number has no partition to consume. When configuring the partitions of a topic, we should consider how many instances of the microservice will be needed to process the data volume.
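
The relationship between partitions and instances can be illustrated with a simplified model of a consumer group rebalance. The sketch below spreads partitions round-robin over the consumers. Kafka's real assignors (range, round-robin, sticky) differ in detail, and `assign_partitions` and the consumer names are hypothetical, but the constraint it demonstrates holds: each partition goes to exactly one consumer in the group, so consumers beyond the partition count receive nothing.

```python
def assign_partitions(num_partitions, consumers):
    """Assign partitions 0..num_partitions-1 round-robin to the given consumers.

    Returns a dict mapping each consumer to its list of partitions.
    Simplified model only; not Kafka's actual assignor implementation.
    """
    assignment = {consumer: [] for consumer in consumers}
    if not consumers:
        return assignment
    for partition in range(num_partitions):
        owner = consumers[partition % len(consumers)]
        assignment[owner].append(partition)
    return assignment


def idle_consumers(assignment):
    """Consumers that received no partitions and therefore do no work."""
    return [consumer for consumer, parts in assignment.items() if not parts]
```

With 6 partitions and 3 instances, each instance owns 2 partitions. With 6 partitions and 8 instances, 2 instances sit idle, which is why the partition count should be chosen with the expected peak instance count in mind.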

When a microservice publishes a data message to a Kafka topic, the target partition can be chosen randomly or computed by a partitioning algorithm from the message’s key.

We chose to use an internal ID as the message key so that time-series data messages from the same device arrive at the same partition in order and are processed in that order.
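
Key-based partitioning boils down to hashing the key and taking the result modulo the partition count. The sketch below uses CRC32 as a stand-in hash (Kafka's default Java partitioner uses murmur2 instead), and the `device_id` field and function names are assumptions made for illustration. What matters is that the mapping is deterministic, so every message with the same key lands in the same partition and keeps its relative order there.

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a message key to a partition with a stable hash (CRC32 stand-in)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(messages, num_partitions):
    """Append each message to the partition chosen by its `device_id` key.

    `device_id` is a hypothetical field standing in for the internal ID.
    Returns a dict mapping partition number to its ordered message list.
    """
    partitions = {p: [] for p in range(num_partitions)}
    for message in messages:
        target = partition_for(message["device_id"], num_partitions)
        partitions[target].append(message)
    return partitions
```

Because a partition is consumed by a single instance in the group, per-device ordering is preserved end to end without any coordination between instances.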

The data on a topic can sometimes be unevenly balanced across its partitions. In our case, some data messages require more processing time than others, and some partitions hold more of these messages than other partitions do. This causes some microservice instances to fall behind. To solve this problem, we separated the data messages into different topics based on their complexity and configured the consumer groups differently. This allows us to scale services in and out more efficiently.
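
A minimal sketch of this kind of routing is shown below. The topic names, the `estimated_cost` field, and the threshold are all hypothetical; the source does not say how complexity is measured. The point is only that the producer picks the topic per message, so expensive messages get their own topic and their own independently scaled consumer group.

```python
HEAVY_TOPIC = "datapoints-heavy"  # hypothetical topic for expensive messages
LIGHT_TOPIC = "datapoints-light"  # hypothetical topic for cheap messages


def choose_topic(message, heavy_threshold=100):
    """Pick a topic from a message's estimated processing cost.

    `estimated_cost` is an assumed field; messages without it are
    treated as cheap.
    """
    cost = message.get("estimated_cost", 0)
    return HEAVY_TOPIC if cost >= heavy_threshold else LIGHT_TOPIC


def split_by_topic(messages, heavy_threshold=100):
    """Group messages by the topic they would be published to."""
    grouped = {HEAVY_TOPIC: [], LIGHT_TOPIC: []}
    for message in messages:
        grouped[choose_topic(message, heavy_threshold)].append(message)
    return grouped
```

The heavy topic can then be given more partitions and a larger consumer group than the light one, so slow messages no longer hold up fast ones that share a partition.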

Some of our microservices are stateful, which makes them challenging to scale. To solve this problem, we use Redis Cluster to hold the state.

Redis Cluster is a distributed deployment of multiple Redis nodes that provides a fast in-memory data store. It lets us store and load the algorithm models quickly, and it can scale out if needed.
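
Redis Cluster scales out by splitting the key space into 16384 hash slots and spreading those slots across nodes. According to the Redis Cluster specification, a key's slot is the CRC16 (XMODEM variant) of the key modulo 16384, and if the key contains a non-empty `{...}` hash tag, only the tag is hashed. The sketch below reproduces that rule with Python's standard library. The example key names are hypothetical and are not our actual key scheme.

```python
import binascii

NUM_SLOTS = 16384  # fixed number of hash slots in Redis Cluster


def key_slot(key: str) -> int:
    """Compute the Redis Cluster hash slot for a key.

    If the key contains '{' followed later by '}' with at least one
    character in between, only that substring (the hash tag) is hashed.
    """
    data = key.encode("utf-8")
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        if end != -1 and end != start + 1:
            data = data[start + 1:end]
    # crc_hqx with an initial value of 0 is the CRC16/XMODEM checksum.
    return binascii.crc_hqx(data, 0) % NUM_SLOTS
```

Keys such as `model:{device-42}:trend` and `model:{device-42}:seasonality` share the hash tag `device-42`, so they map to the same slot and can be read together from a single node.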

Migrating from a monolith to microservices is a journey that can, in some cases, take a few years.

At LogicMonitor, we started this journey two years ago. The return on the investment is indisputable. The benefits include, but are not limited to:

© LogicMonitor 2026 | All rights reserved. | All trademarks, trade names, service marks, and logos referenced herein belong to their respective companies.