AWS Logs Ingestion Overview

Last updated - 23 July, 2025

Using AWS CloudWatch to monitor your Lambda functions is a valuable way to get reporting and alerts. However, relying solely on AWS CloudWatch to monitor your data can leave you without in-depth insights and the contextual information needed to interpret them.

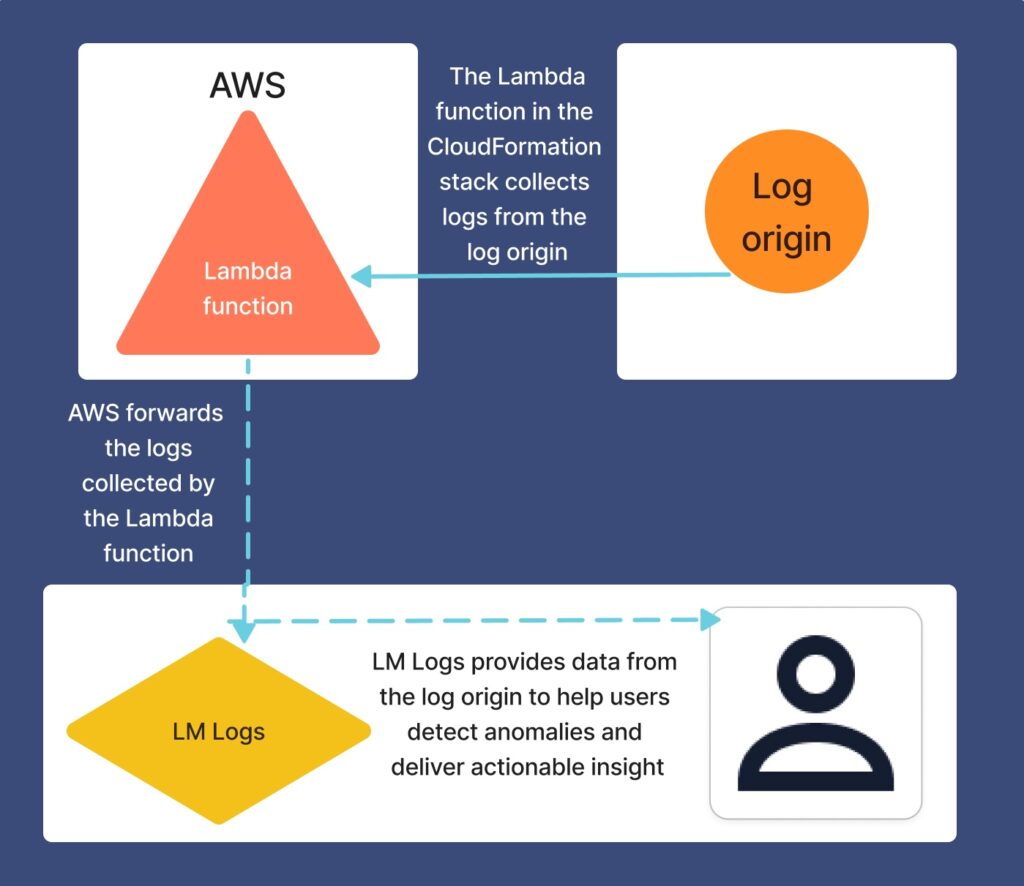

LogicMonitor integrates with your AWS services to provide detailed analysis of your Lambda functions' performance. You use a customizable template to configure and deploy Lambda functions that forward log data to LogicMonitor. LogicMonitor then ingests the logs and uses their data to detect anomalies and deliver actionable insights. For more information, see Lambda Function Deployment for AWS Logs Ingestion.
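To illustrate what a log-forwarding Lambda function does with its input, the following is a minimal sketch, not the function that the LogicMonitor template deploys. It relies on the standard CloudWatch Logs subscription format: the event carries `event["awslogs"]["data"]`, a base64-encoded, gzip-compressed JSON document containing `logGroup`, `logStream`, `messageType`, and a `logEvents` list. The output record shape and the `forward_to_lm_logs` step are hypothetical placeholders; the real forwarder handles LM Logs authentication and delivery itself.

```python
import base64
import gzip
import json


def decode_cloudwatch_event(event):
    """Decode a CloudWatch Logs subscription event into flat log records.

    CloudWatch Logs delivers subscription data to Lambda as
    event["awslogs"]["data"]: base64-encoded, gzip-compressed JSON.
    """
    compressed = base64.b64decode(event["awslogs"]["data"])
    payload = json.loads(gzip.decompress(compressed))

    # CONTROL_MESSAGE payloads only check that the destination is reachable
    # and carry no application log data, so there is nothing to forward.
    if payload.get("messageType") == "CONTROL_MESSAGE":
        return []

    # Hypothetical record shape for illustration only; this is not
    # LogicMonitor's ingestion schema.
    return [
        {
            "timestamp": log_event["timestamp"],  # epoch milliseconds
            "message": log_event["message"],
            "logGroup": payload["logGroup"],
            "logStream": payload["logStream"],
        }
        for log_event in payload["logEvents"]
    ]


def lambda_handler(event, context):
    records = decode_cloudwatch_event(event)
    # forward_to_lm_logs(records) would go here. It is a hypothetical
    # placeholder for the delivery step performed by the deployed forwarder.
    return {"forwarded": len(records)}
```

Decoding and delivery are kept in separate functions, so you can test the payload handling locally with a hand-built event and no AWS or LogicMonitor credentials.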

Review the following to see how log ingestion works when LogicMonitor is integrated with AWS using CloudFormation stacks:

Supported AWS Services for Log Ingestion

LogicMonitor supports log ingestion from the following AWS services. For details about each service's logs, see the AWS documentation:

- AWS EC2 Logs

- AWS ELB Access Logs

- AWS RDS Logs

- AWS Lambda Logs

- AWS EC2 Flow Logs

- AWS NAT Gateway Flow Logs

- AWS CloudTrail Logs

- AWS CloudFront Logs

- AWS Kinesis Data Streams Logs

- AWS Kinesis Data Firehose Logs

- AWS ECS Logs

- AWS EKS Logs

- AWS Bedrock Logs

- AWS EventBridge CloudWatch Events Logs

For information about how to configure each AWS service to send logs to LM Logs, see AWS Service Configuration for Log Ingestion.